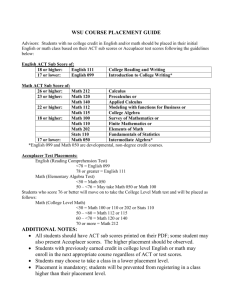

Placement of Students 1 Placement of Students into First-Year Writing Courses

advertisement