Lecture 5: Decision and inference Part 2


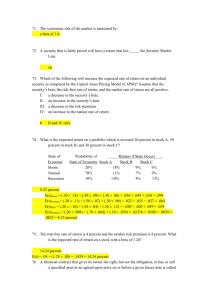

Influence diagrams

Decision-theoretic components can be added to a Bayesian network. The complete network is then referred to as a Bayesian decision network or, more commonly, an influence diagram (ID).

Return to the example with banknotes. Let the states of nature be

H0: Dye is present
H1: Dye is not present

and let the data be

E1: Method gives positive detection
E2: Method gives negative detection

A simple Bayesian network H → E can be constructed for the relationship between the state of nature and the data:

| H | Probability |
|---|---|
| H0 | 0.001 |
| H1 | 0.999 |

| $P(E\mid H)$ | H0 | H1 |
|---|---|---|
| E1 | 0.99 | 0.02 |
| E2 | 0.01 | 0.98 |

Now we add two nodes to the network: one for the actions that can be taken (A) and one for the loss function (L).

Node A:
a1: Destroy banknote
a2: Use banknote

Node L (loss for each combination of action A and state H):

| Loss | H0 | H1 |
|---|---|---|
| a1 (destroy banknote) | 0 | 100 |
| a2 (use banknote) | 500 | 0 |

Neither of these nodes is a random node. Node L must be a child node with nodes H and A as parents.

[Diagram: influence diagram with arcs H → E, H → L and A → L]

With this network, an influence diagram, we would like to be able to propagate data from node E to a choice of decision in node A. Hence, the expected posterior loss should be calculated in the loss node L.

Using GeNIe: in GeNIe (and other software), this node is by definition a utility node, but it can be used as a loss node by representing losses as negative utilities.

- Run the network.
- Instantiate node E to E1.
- The expected posterior utility (negative loss) can be read off in node A (and also in node L).

About prior distributions

Conjugate prior distributions

Example: Assume the parameter of interest is θ, the proportion of some property of interest in the population (i.e. the probability that this property occurs). A reasonable prior density for θ is the Beta density:

$$p(\theta;\alpha,\beta)=\frac{\theta^{\alpha-1}(1-\theta)^{\beta-1}}{B(\alpha,\beta)},\qquad 0\le\theta\le 1;\ \alpha>0,\ \beta>0\ \text{(hyperparameters)}$$

where

$$B(\alpha,\beta)=\int_0^1 x^{\alpha-1}(1-x)^{\beta-1}\,dx$$

is the Beta function.

[Figure: Beta densities on 0 ≤ θ ≤ 1 for (α, β) = (1,1), (5,5), (1,5), (5,1), (2,5), (5,2), (0.5,0.5), (0.3,0.7), (0.7,0.3)]

Now, assume a sample of size n from the population in which y of the values possess the property of interest.
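Before following the algebra, the conjugacy claim can be checked numerically. The sketch below multiplies a Beta(α, β) prior by the binomial likelihood of y successes in n trials on a grid over (0, 1), normalizes the product, and compares it with the Beta(y + α, n − y + β) density. It is a minimal illustration, not part of the lecture: the values α = 2, β = 5, n = 20, y = 6 and the helper names are hypothetical, and the integral is approximated with a simple midpoint rule.

```python
import math

def beta_pdf(x, a, b):
    """Beta(a, b) density at 0 < x < 1; B(a, b) computed via log-gamma."""
    log_B = math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)
    return math.exp((a - 1) * math.log(x) + (b - 1) * math.log(1 - x) - log_B)

def binom_lik(x, n, y):
    """Binomial likelihood C(n, y) x^y (1 - x)^(n - y)."""
    return math.comb(n, y) * x**y * (1 - x) ** (n - y)

def grid_posterior(a, b, n, y, m=2000):
    """Normalize prior * likelihood numerically on a midpoint grid of m points."""
    h = 1.0 / m
    xs = [(i + 0.5) * h for i in range(m)]
    unnorm = [beta_pdf(x, a, b) * binom_lik(x, n, y) for x in xs]
    marginal = sum(unnorm) * h  # midpoint-rule approximation of the integral over (0, 1)
    return xs, [u / marginal for u in unnorm]

# Hypothetical illustration: prior Beta(2, 5), sample of n = 20 with y = 6.
a, b, n, y = 2.0, 5.0, 20, 6
xs, post = grid_posterior(a, b, n, y)

# Largest pointwise gap between the numerical posterior and Beta(y + a, n - y + b).
max_err = max(abs(p - beta_pdf(x, y + a, n - y + b)) for x, p in zip(xs, post))
print(f"max |grid posterior - Beta({y + a:g}, {n - y + b:g})| = {max_err:.2e}")
```

The binomial coefficient and B(α, β) are kept in the code even though they cancel in the normalization, so that the grid product mirrors the numerator of the posterior formula term by term.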
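The likelihood is

$$L(\theta\mid y)=\binom{n}{y}\theta^{y}(1-\theta)^{n-y}$$

and the posterior density becomes

$$q(\theta\mid y)=\frac{L(\theta\mid y)\,p(\theta)}{\int_0^1 L(x\mid y)\,p(x)\,dx}
=\frac{\binom{n}{y}\theta^{y}(1-\theta)^{n-y}\cdot\frac{\theta^{\alpha-1}(1-\theta)^{\beta-1}}{B(\alpha,\beta)}}{\int_0^1\binom{n}{y}x^{y}(1-x)^{n-y}\cdot\frac{x^{\alpha-1}(1-x)^{\beta-1}}{B(\alpha,\beta)}\,dx}
=\frac{\theta^{y+\alpha-1}(1-\theta)^{n-y+\beta-1}}{\int_0^1 x^{y+\alpha-1}(1-x)^{n-y+\beta-1}\,dx}
=\frac{\theta^{y+\alpha-1}(1-\theta)^{n-y+\beta-1}}{B(y+\alpha,\,n-y+\beta)}$$

Thus, the posterior density is also a Beta density, with hyperparameters y + α and n − y + β.

Prior distributions that, combined with the likelihood, give a posterior in the same distributional family are called conjugate priors. (By a distributional family we mean distributions that go under a common name: Normal distribution, Binomial distribution, Poisson distribution, etc.) A conjugate prior always goes together with a particular likelihood to produce the posterior. We sometimes refer to a conjugate pair of distributions, meaning (prior distribution, sample distribution = likelihood).

In particular, if the sample distribution f(x; θ) belongs to the k-parameter exponential family (class) of distributions,

$$f(x;\boldsymbol{\theta})=\exp\Big(\sum_{j=1}^{k}A_j(\boldsymbol{\theta})B_j(x)+C(x)+D(\boldsymbol{\theta})\Big)$$

we may choose the prior

$$p(\boldsymbol{\theta})=\frac{1}{K(\gamma_1,\dots,\gamma_k,\gamma_{k+1})}\exp\Big(\sum_{j=1}^{k}A_j(\boldsymbol{\theta})\gamma_j+\gamma_{k+1}D(\boldsymbol{\theta})\Big)$$

where γ1, …, γk+1 are the parameters of this prior distribution and K(·) is a function of γ1, …, γk+1 only. Then

$$q(\boldsymbol{\theta}\mid\mathbf{x})\propto L(\boldsymbol{\theta}\mid\mathbf{x})\,p(\boldsymbol{\theta})
=\exp\Big(\sum_{j=1}^{k}A_j(\boldsymbol{\theta})\sum_{i=1}^{n}B_j(x_i)+\sum_{i=1}^{n}C(x_i)+nD(\boldsymbol{\theta})\Big)\cdot\frac{\exp\Big(\sum_{j=1}^{k}A_j(\boldsymbol{\theta})\gamma_j+\gamma_{k+1}D(\boldsymbol{\theta})\Big)}{K(\gamma_1,\dots,\gamma_k,\gamma_{k+1})}$$

$$=\frac{e^{\sum_{i=1}^{n}C(x_i)}}{K(\gamma_1,\dots,\gamma_k,\gamma_{k+1})}\exp\Big(\sum_{j=1}^{k}A_j(\boldsymbol{\theta})\Big(\gamma_j+\sum_{i=1}^{n}B_j(x_i)\Big)+(\gamma_{k+1}+n)D(\boldsymbol{\theta})\Big)
\propto\exp\Big(\sum_{j=1}^{k}A_j(\boldsymbol{\theta})\Big(\gamma_j+\sum_{i=1}^{n}B_j(x_i)\Big)+(\gamma_{k+1}+n)D(\boldsymbol{\theta})\Big)$$

i.e.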
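the posterior distribution is of the same form as the prior distribution but with parameters

$$\gamma_1+\sum_{i=1}^{n}B_1(x_i),\ \dots,\ \gamma_k+\sum_{i=1}^{n}B_k(x_i),\ \gamma_{k+1}+n$$

instead of γ1, …, γk, γk+1.

Some common cases (within or outside the exponential family):

| Conjugate prior | Sample distribution | Posterior |
|---|---|---|
| Beta: $\theta\sim\text{Beta}(\alpha,\beta)$ | Binomial: $X\sim\text{Bin}(n,\theta)$ | $\theta\mid x\sim\text{Beta}(\alpha+x,\ \beta+n-x)$ |
| Normal: $\theta\sim N(\mu,\tau^2)$ | Normal, known $\sigma^2$: $X_i\sim N(\theta,\sigma^2)$ | $\theta\mid\mathbf{x}\sim N\Big(\frac{\sigma^2\mu+n\tau^2\bar{x}}{\sigma^2+n\tau^2},\ \frac{\sigma^2\tau^2}{\sigma^2+n\tau^2}\Big)$ |
| Gamma: $\theta\sim\text{Gamma}(\alpha,\beta)$ | Poisson: $X_i\sim\text{Po}(\theta)$ | $\theta\mid\mathbf{x}\sim\text{Gamma}\big(\alpha+\sum x_i,\ \beta+n\big)$ |
| Pareto: $\theta\sim\text{Pareto}(\alpha,\beta)$ | Uniform: $X_i\sim U(0,\theta)$ | $\theta\mid\mathbf{x}\sim\text{Pareto}\big(\alpha+n,\ \max(\beta,x_{(n)})\big)$ |

Non-informative priors (uninformative)

A prior distribution that gives no more information about θ than (possibly) the parameter space is called a non-informative or uninformative prior.

Example: Beta(1,1) for an unknown proportion simply says that the parameter can take any value between 0 and 1 (which coincides with its definition).

A non-informative prior is characterized by the property that all values in the parameter space are equally likely.

- Proper non-informative priors: the prior is a true density or mass function.

[Figure: two proper non-informative priors, a probability mass function plotted over 0 to 5 and a density plotted over 0 to 1]

- Improper non-informative priors: the prior is a constant value over ℝ^k, so it does not integrate to a finite value. Example: p(θ) ∝ constant for the mean θ of a normal population, which can be viewed as a N(μ, τ²) prior with τ² → ∞.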
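The four conjugate pairs in the table of common cases reduce to simple hyperparameter updates. The sketch below writes each row as a small function; the function names are hypothetical, Gamma(α, β) is assumed to use β as a rate parameter, and Pareto(α, β) is assumed to have shape α and scale β, which is what the update rules in the table imply. The usage lines use made-up data; the first one starts from the non-informative Beta(1,1) prior.

```python
from statistics import fmean

def beta_binomial(alpha, beta, n, x):
    """Prior Beta(alpha, beta), X ~ Bin(n, theta): posterior Beta(alpha + x, beta + n - x)."""
    return alpha + x, beta + n - x

def normal_known_var(mu, tau2, sigma2, xs):
    """Prior N(mu, tau2) on theta, X_i ~ N(theta, sigma2) with sigma2 known.

    Returns the posterior mean and variance of theta.
    """
    n, xbar = len(xs), fmean(xs)
    denom = sigma2 + n * tau2
    return (sigma2 * mu + n * tau2 * xbar) / denom, sigma2 * tau2 / denom

def gamma_poisson(alpha, beta, xs):
    """Prior Gamma(alpha, beta) with beta a rate, X_i ~ Po(theta): Gamma(alpha + sum x_i, beta + n)."""
    return alpha + sum(xs), beta + len(xs)

def pareto_uniform(alpha, beta, xs):
    """Prior Pareto(alpha, beta) with scale beta, X_i ~ U(0, theta): Pareto(alpha + n, max(beta, x_(n)))."""
    return alpha + len(xs), max(beta, max(xs))

# Made-up data, for illustration only.
print(beta_binomial(1, 1, 10, 3))                      # (4, 8)
print(normal_known_var(0.0, 1.0, 4.0, [1.0, 2.0, 3.0]))  # mean 6/7, variance 4/7
print(gamma_poisson(2.0, 1.0, [3, 1, 4]))              # (10.0, 4.0)
print(pareto_uniform(1.0, 2.0, [0.5, 3.2, 1.7]))       # (4.0, 3.2)
```

Each function only returns updated hyperparameters, which is the practical payoff of conjugacy: no integral has to be evaluated. The Poisson row also illustrates the exponential-family result, since Po(θ) has $A_1(\theta)=\log\theta$, $B_1(x)=x$ and $D(\theta)=-\theta$, so the prior parameters are shifted by $\sum x_i$ and by $n$.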