Change-point detection in vector time series using tree algorithms and smoothers He Zhang

advertisement

Master Thesis in Statistics, Data Analysis and Knowledge

Discovery

Change-point detection in vector

time series using tree algorithms

and smoothers

He Zhang

The back cover shall contain the ISRN number obtained from the department. This

number shall be centered and at the same distance from the top as the last line on the

front page.

LiU-IDA-???-SE

Datum

Date

2009-08-22

Avdelning, Institution

Division, Department

Department of Computer and Information Science

Svenska/Swedish

Examensarbete

ISBN

___________________________________________________

__

ISRN

Engelska/English

C-uppsats

_________________________________________________________________

D-uppsats

Serietitel och serienummer

Övrig rapport

Title of series, numbering

Språk

Rapporttyp

Language

Report category

Licentiatavhandling

ISSN

____________________________________

URL för elektronisk version

http://www.ep.liu.se

Titel

Title

Change-point detection in vector time series using tree algorithms and smoothers

Författare

Author

He Zhang

Sammanfattning

Abstract

Detection of abrupt level shifts in observational data is of great interest in climate research, bioinformatics, surveillance of manufacturing

processes, and many other areas. The most widely used techniques are based on models in which the mean is stepwise constant. Here, we

consider detection of more or less synchronous level shifts in vector time series of data that also exhibit smooth trends. The method we propose

is based on a back-fitting algorithm that alternates between estimation of smooth trends for a given set of change-points and estimation of

change-points for given smooth trends. More specifically, we combine an existing non-parametric smoothing technique with a new two-step

technique for change-point detection. First, we use a tree algorithm to grow a large decision tree in which the space of vector coordinates and

time points is partitioned into rectangles where the response (adjusted for smooth trends) has a constant mean. Thereafter, we reduce the

complexity of the tree model by merging adjacent pairs of rectangles until further merging would cause a significant increase in the residual sum

of squares. Our algorithm was tested on synthetic vector time series of data with promising results. An application to a vector time series of

water quality data showed that such data can be decomposed into smooth trends, abrupt level shifts and noise. Further work should focus on

stopping rules for reducing the complexity of the tree model.

Nyckelord

Keyword

Change-point detection, regression trees, nonparametric smoothing

Abstract

Detection of abrupt level shifts in observational data is of great interest in

climate research, bioinformatics, surveillance of manufacturing processes, and

many other areas. The most widely used techniques are based on models in

which the mean is stepwise constant. Here, we consider detection of more or

less synchronous level shifts in vector time series of data that also exhibit

smooth trends. The method we propose is based on a back-fitting algorithm

that alternates between estimation of smooth trends for a given set of

change-points and estimation of change-points for given smooth trends. More

specifically, we combine an existing non-parametric smoothing technique with

a new two-step technique for change-point detection. First, we use a tree

algorithm to grow a large decision tree in which the space of vector coordinates

and time points is partitioned into rectangles where the response (adjusted for

smooth trends) has a constant mean. Thereafter, we reduce the complexity of

the tree model by merging adjacent pairs of rectangles until further merging

would cause a significant increase in the residual sum of squares. Our

algorithm was tested on synthetic vector time series of data with promising

results. An application to a vector time series of water quality data showed that

such data can be decomposed into smooth trends, abrupt level shifts and noise.

Further work should focus on stopping rules for reducing the complexity of the

tree model.

Keywords: change-point detection, regression trees, nonparametric smoothing

I

II

Acknowledgements

I would like to express my deep gratitude to my supervisor Prof. Anders

Grimvall who introduced a very interesting and powerful research topic. He

spent a lot of time on helping me develop the ideas and improve this thesis both

in contents and language.

I would like to thank Ph.D student Sackmone Sirisack for his kind suggestion

and discussion on the thesis.

I also would like to thank my parents Ailing Zheng and Decheng Zhang and my

good friend Johan Hemström dearly for their support and encouragement.

III

IV

Table of contents

1

1.1

1.2

2

2.1

Introduction ...................................................................................................... 2

Background ...................................................................................................... 2

Objective .......................................................................................................... 3

Methods............................................................................................................ 4

Detection of change-points ............................................................................... 4

2.1.1Parameter estimation ............................................................................................... 5

2.1.2 Model selection........................................................................................................ 6

2.1.3 A generalized tree algorithm .................................................................................... 8

3

4

5

6

7

8

2.2 Smoothing of vector time series of data .............................................................. 8

2.3 A general back-fitting algorithm .......................................................................... 9

Mean shift model and datasets ....................................................................... 10

3.1 Computer-generated data ................................................................................... 11

3.2 Observational data ............................................................................................. 11

Test beds for the proposed algorithms ........................................................... 12

4.1 Runs involving surrogate data ........................................................................... 12

4.2 Runs involving real data ................................................................................ 13

Results ............................................................................................................ 14

5.1 Analysis of surrogate data .............................................................................. 14

5.1 Analysis of observational water quality data ................................................. 20

Discussion and conclusions ........................................................................... 21

Literature ........................................................................................................ 23

Appendix ........................................................................................................ 25

1

1 Introduction

1.1 Background

Change-point analysis aims to detect and estimate abrupt level shifts and other

sudden changes in the statistical properties of sequences of observed data. This

form of statistical analysis has a great variety of applications, such as quality

control

of

manufactured

items,

early

detection

of

epidemics,

and

homogenization of environmental quality data. In some cases, the

change-points represent real changes in the systems under consideration. In

other cases, abrupt changes in the collected data may be due to systematic

measurement errors.

Control charts are considered to be the oldest statistical instruments for

change-point detection. The basic ideas of such charts were outlined by

Shewhart (1924) who was a pioneer in statistical quality control for industrial

manufacturing processes. Other authors have contributed to a solid theory for

retrospective analyses of changes in the mean of a probability distribution.

Hawkins (1977) proposed a test for a single shift in location of a sequence of

normally distributed random variables, and Srivastava & Worsley (1986)

proposed a test for a single change in the mean of multivariate normal data.

Shifts in the mean were also examined by Alexandersson (1986) who proposed

the so-called standard normal homogeneity test (SNHT) for detecting artificial

level shifts in meteorological time series when a reliable reference series is

available. Fitting multiple change-point models to observational data is a

computationally demanding problem that requires algorithms in which a global

optimization is separated into simpler optimization tasks (Hawkins, 2001). This

is particularly true when the number of change-points is unknown (Caussinus

& Mestre, 2004; Picard et al., 2005). A fast Bayesian change-point analysis was

recently published by Erdman & Emerson (2008).

2

The cited methods are based on the assumption that the mean is stepwise

constant or that this condition is fulfilled after removing a common trend

component in all the investigated series. Here, we consider the problem of

detecting change-points in the presence of smooth trends that may be different

for different vector components. Recently, it was shown how a synchronous

level shift in all vector components can be estimated in the presence of trends

that vary smoothly across series (Wahlin et al., 2008). In this thesis we consider

change-points that are not necessarily synchronous.

1.2 Objective

The general objective of this thesis is to develop an algorithm that enables

detection of an unknown number of level shifts in vector time series of data

that also exhibit smooth trends. In particular, we shall investigate how

change-point detection involving regression trees can be combined with a

nonparametric smoothing technique (Grimvall et al., 2008) into a back-fitting

algorithm.

The specific objectives are as follows:

•

Implement a tree algorithm for splitting the space of vector coordinates and

time points into a set of rectangular subsets (regions).

•

Reduce the complexity of the derived tree model by successively merging

regions until further merging would lead to a significant increase in the residual

sum of squares.

•

Integrate the tree algorithm with an existing nonparametric smoothing

technique (MULTITREND) into an algorithm for decomposing the variation of

the given data into smooth trends, abrupt level shifts and noise.

•

Test the tree method and the integrated algorithm on synthetic data and real

data.

3

2 Methods

2.1 Detection of change-points

Decision trees provide simple and useful structures for decision processes and

for estimation of piecewise constant functions in nonlinear regression. A

widely used algorithm that we shall refer to as the tree algorithm was

developed by Breiman et al. (1984). It assumes that the mean response is a

function of p predictors or inputs and that the input domain can be split into

cuboids where the mean is constant. We shall consider tree algorithms for

segmentation of vector time series of data. The mean response is assumed to be

piecewise constant with an arbitrary number of level shifts. Some of these level

shifts may be synchronous for two or more series, whereas others only affect

the mean of a single series.

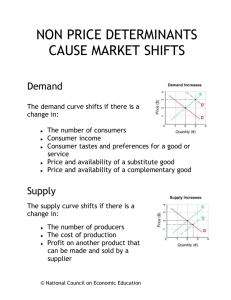

Figure 1 Arbitrary partitions and CART (Hastie et al, 2001)

4

Figure 1 compares arbitrary partition and CART splitting where the latter

partitions the dataset by building a tree structure or segmenting the feature

space into a set of rectangles. More specifically, the top left panel shows a

general partition that is obtained from an arbitrary (not recursively binary)

splitting; while the top right panel illustrates a partition of a two-dimensional

feature space by recursively binary splitting, as used in CART. The bottom left

panel shows the corresponding tree structure, and a perspective plot of the

prediction surface appears in the last panel (Hastie et al., 2001). We shall use a

tree algorithm to partition the two-dimensional space of time points and vector

coordinates.

2.1.1 Parameter estimation

Here, we describe a general tree algorithm outlined by Breiman and co-workers

(1984) and Hastie and co-workers (2001). Furthermore, we explain how this

algorithm can be simplified when we are estimating a function that is piecewise

constant in two ordinal-scale inputs.

Consider the dataset ( xi , yi ) for i 1, 2, , N , with xi

( xi1 , xi 2 ,

, xip )

comprising N observations with p independent variables (inputs) and one

response. The cited algorithm then recursively identifies splitting variables and

split points so that the input space is partitioned into cuboids (or regions)

R1 , R2 ,

, RM in which the response is modeled as a constant cm .

f ( x)

M

c I (x

m 1 m

Rm )

When searching for the first two regions, we let

R1 ( j , s) { X X j

s} and R2 ( j , s) { X X j

s}

and determine the splitting variable j and split point s that solve

min[min

j ,s

c1

xi R1 ( j , s )

( yi c1 ) 2

min

c2

xi R2 ( j , s )

( yi c2 ) 2

This procedure is then repeated on each of the derived regions until a large tree

5

model has been constructed.

Because the sum of squares

( yi

f ( xi ))2 is employed as measure of the

goodness-of-fit, the best estimator of the mean cm of yi in region Rm is equal to

the average observed response.

cm

ave( yi xi

Rm )

In our case, we have a choice between splitting with respect to time (j=1) or

vector coordinates (j=2) of the observed time series in each step. The number

of combinations of j and s that shall be investigated is thus equal to the sum of

the number of time points and the number of time series in the region under

consideration. Furthermore, it is sufficient to use information about the number

of observations and their sum in arbitrary rectangles of the input space. This

makes the tree algorithm a very fast algorithm with complexity O(N).

2.1.2 Model selection

Breiman’s procedure for selecting a suitable tree model involves two basic

steps. First, a large tree is built to ensure that the input domain is split into

regions that are pure in the sense that the response exhibits little variation. Then,

regions are merged by pruning branches of the decision tree.

Generally, the target of the tree algorithms is to achieve the best partition with

minimum costs. In other words, the split at each node should generate the

greatest improvement in decision accuracy. This is usually measured by the

node impurity (sum of squared residuals) which provides an indication of the

relative homogeneity of all the cases within one region. Furthermore, another

criterion must be established to determine when to stop the splitting and

pruning processes.

6

The tree size is determined by considering the trade-off between the derived

number of regions (node size or model complexity) and the goodness-of-fit to

the given data. A huge tree may lead to over-fit the given data and increased

variance of model predictions. A small tree might not capture important

features of the underlying model structure and produce biased predictions. One

approach to the model selection problem is to perform a significance test for

each suggested split. However, this strategy is short-sighted since a seemingly

worthless split might lead to a very good split after other splits have been

undertaken. Therefore, it is often recommended to first grow a large tree and

then reduce the model complexity by pruning. Because the size of the large tree

is not very crucial, one can simply set a threshold for the node size (the number

of observations in a node) in each terminal region (Hastie et al., 2001).

The final pruning requires a more elaborated assessment of the tradeoff

between model complexity (no. of terminal nodes) and goodness-of-fit (sum of

squared errors). Hastie and co-workers (2001) suggest a cost complexity

criterion which is composed of the total cost (total sum of squared errors) and a

penalty quantity indicating the complexity of the derived tree in terms of

number of terminal nodes. The expression is

M

RSS m

M , where RSSm

( yi

cm ) 2

xi Rm

m 1

Adaptively choosing the penalty factor

is the main difficulty in using the

cited procedure to prune a tree into the right size. Alternative model selection

procedures may be based on information criteria such as Akaike’s Information

Criterion (AIC), Bayesian Information Criterion (BIC) or minimum description

length.

7

2.1.3 A generalized tree algorithm

We have previously noted that tree algorithms generate partitions of the input

domain into cuboids or rectangles. To be able to fit more complex structures to

a set of collected data we consider models in which the mean is constant in

regions that are formed as unions of rectangles. First, a tree algorithm is used to

create a set of relatively small rectangular regions, and then adjacent regions

are step by step merged until there is a significant increase in the segmentation

cost.

We tried to control the merging of regions using two different procedures: (i) a

standard partial F-test; (ii) block cross-validation. The F-test compares the

residual sum of squared errors before and after the merging. Block

cross-validation splits the data sets into training and test sets, fits models to the

training sets, and evaluates the predictive power of the fitted models on the test

sets.

2.2 Smoothing of vector time series of data

When vector time series of data are analyzed, it is often appropriate to assume

that the expected response is a smooth function of both time and vector

coordinate. For example, this is a natural assumption when the analyzed dataset

represents environmental quality at sampling sites located along a transection

or along an elevation gradient. It may also be appropriate for data representing

measured concentrations of chemical compounds that can be ordered linearly

with respect to volatility or polarity.

So-called gradient smoothing (Grimvall et al., 2008) refers to fitting smooth

response surfaces to a vector time series of data by minimizing the sum

8

S( , , )

L( )

1 1

n

2

L2 ( ) , where

( yt( j )

( j)

t

i 1 j 1

(

( j)

t

(

( j)

t

( j)

t 1

t 2 j 1

n m 1

L2 ( )

2

are roughness penalty factors;

( j)

t

( xit( j ) xi( j ) ))2 is the residual sum of squares;

i 1

n 1 m

L1 ( )

and

p

m

S( , , )

1

( j)

t 1 2

) represents roughness over time;

2

( j 1)

t

( j 1)

2

t

2

t 1 j 2

) represents roughness across coordinates.

Minor modifications of this smoothing method can make it appropriate also for

seasonal or circular data.

Figure 2 Gradient smoothing for data collected at different sites along a

gradient

2.3 A general back-fitting algorithm

The following pseudo code given by Wahlin et al. (2008) illustrates the back

fitting algorithm for joint estimation of smooth trends and discontinuities (level

shifts).

1) Initialize

2) Initialize

0

by using a multiple linear regression model with intercept to

regress y on x1,

, xp

3) Initialize press =0

4) Initialize s =1

5) Repeat

9

6) T = {1, …, s-1, s+1, …, n}

Cycle

p

ut( j )

yt( j )

( j)

k

( xkt( j )

xk( j ) )

( j)

k

k 1

m

(ut( j )

arg min[

( j) 2

t

)

L (W1 , )

1 1

2

L2 (W2 , )]

t T j 1

ut( j )

yt( j )

( j)

t

( j)

t

, t T , j 1,

,m

p

m

(ut( j )

arg min[

( j)

k

0

t T j 1

( xkt( j )

xk( j ) )) 2 ]

k 1

p

ut( j )

yt( j )

( j)

t

( j)

k

( xkt( j )

xk( j ) ), t T , j 1,

,m

k 1

m

(ut( j )

arg min[

( j) 2

t

) ]

t T j 1

m

( j)

t

( j)

t

( j)

t

mean(

)

t T j=1

until the relative change in the penalized sum of squares on T is below a

predefined threshold

p

m

press

( ys( j )

press

( j)

s

j 1

s

( j)

k

( xks( j )

xk( j ) )

( j) 2

s

)

k 1

s 1

7) Until s=n

Here,

represents the smooth trend,

abrupt level shifts and

the impact of

covariates.

3 Mean shift model and datasets

A univariate mean shift model can be written

t

yt

t

S t

t

.

t 1,

, n , where S t

i

i

is a level shift at time t and yt is the observed response at time t. S(t) is thus

10

the cumulated level shifts from time 1 to t. If there is no level shift at time i,

t

is zero. Error terms are assumed to be independent and normally distributed

with zero mean and constant standard deviation. Multivariate mean shift

models can be defined analogously, and the level shifts can be more or less

synchronous.

3.1 Computer-generated data

A set of surrogate data representing mean monthly temperatures recorded

during the period 1913-1999 was downloaded from www.homogenisation.org. This

data is part of a larger benchmark dataset for assessing the ability of statistical

methods to detect artificial level shifts in climate data with realistic marginal

distributions, temporal and spatial correlations. More information about the

surrogate data set can be found in the following link:

ftp://ftp.meteo.uni-bonn.de/pub/victor/costhome/monthly_benchmark/inho/

3.2 Observational data

Water quality data representing the concentrations of total phosphorus at three

different depths (0.5, 10 and 20m) at Dagskärsgrund in Lake Vänern (Sweden),

1991-2005, were downloaded from the Swedish University of Agricultural

Science. The mean value of the observed concentrations for each combination

of year and depth were then used as inputs to our change-point detection

algorithm.

11

4 Test beds for the proposed algorithms

4.1 Runs involving surrogate data

Data with a negligible trend slope, such as our surrogate temperature data, can

be analyzed using models with piecewise constant means. Accordingly, we

applied our tree algorithm directly to that dataset. The input domain was step

by step subjected to binary splits using the combination of splitting variable

and splitting point that produced the most significant “gain”, i.e. the strongest

reduction in total residual sum of squares (RSS). Furthermore, region features,

such as size (the number of observations in each derived region), mean, and

RSS were updated after each split. As the number of regions increases, the total

RSS may first decrease sharply and then level out as the “gain” decreases. To

assess the statistical significance of the observed gain, we computed the

following F-statistic at each step:

F

RSS1 RSS 2 RSS 2

/

p2 p1

n p2

Here, RSS1 denotes the residual sum of squares before splitting, whereas RSS2

is the residual sum of squares after splitting. Furthermore, n represents the

number of observations, while the degrees of freedom of the two sums are n-p1

and n-p2, respectively. The split under consideration was accepted when the

F-statistic exceeded 2.71, which corresponds to a significance level of

approximately 0.1 when p2 - p1 = 1 and n is large. When no splitting was

statistically significant the growth of the decision tree was terminated.

To reduce the risk of over-fitting, pairs of adjacent regions were then merged

until the F-statistic

F

RSS1 RSS 2 RSS 2

/

p2 p1

n p2

was above a predefined level for all adjacent pairs of regions. Different

12

significance levels were examined. However, in general, we used a lower

significance level when regions were merged. The final result of the entire

process is a partition of the input domain into regions that are now unions of

rectangles.

Cross-validation was used as an alternative method to determine how many

pairs of regions should be joined. More specifically, we used K-fold

cross-validation. This means that the original sample is partitioned into K

subsamples and that K pairs of training and test sets are formed by letting one

of the subsamples be the test set while all other data constitute the training set.

The prediction error sum of squares (PRESS) was computed for all test sets,

and the total PRESS-value was then used to examine how long regions could

be joined without reducing the predictive power of the model.

4.2

Runs involving real data

Datasets that have a smooth trend can be analyzed for change-points using a

back-fitting algorithm that alternates between estimation of change-points for a

given smooth trend and estimation of smooth trends for a given set of

change-points. We used the previously described water quality data from Lake

Vänern for our test runs. Initial estimates of one smooth and one discontinuous

component were obtained by employing the decomposition method developed

by Wahlin and co-workers (2008). Thereafter, the decomposition was step by

step improved using our back-fitting algorithm where the level shifts are not

necessarily synchronous. More specifically, our tree algorithm was used to

estimate change-points for given trends, whereas the software MULTITREND

(Grimvall et al., 2008) was used to estimate smooth trends for a given set of

change-points. A noise component was extracted by subtracting the smooth

trends and the level shifts from observed data.

13

5 Results

5.1 Analysis of surrogate data

Station1

20

Station2

Temperature

15

Station3

Station4

10

Station5

Station6

5

Station7

0

1900

-5

Station8

1920

1940

1960

1980

2000

Station9

Station10

-10

Station11

Year

Station12

Figure 3. Surrogate monthly temperature data for 12 stations in 1913-1999.

Station 1

20

Station 2

Cell mean of temperature

15

Station 3

Station 4

10

Station 5

Station 6

5

Station 7

Station 8

0

1900

-5

1920

1940

1960

1980

2000

Station 9

Station 10

Station 11

-10

Year

Station 12

Figure 4. Estimated region means after using our tree algorithm to split the

input domain into rectangles.

The analyzed surrogate data are illustrated in Figure 3. Visual inspection

strongly indicates that there is a major level shift around 1960 and possibly two

other significant level shifts. When our tree algorithm was applied to the data

14

shown in Figure 3, the input domain was partitioned into 126 rectangles

defining a total of 131 change-points.

Figure 4 shows the region mean for each cell, i.e each combination of year and

month. It can be seen that each monthly series has several level shifts. In

addition, the tree algorithm has identified several outliers that are shown as

segments with a single observation.

Table 1 in the appendix reveals the results of implementing cross-validation

model selection method and compares the derived total predicted residual sum

of squares (PRESS) between ten-fold and leave one out cross-validation

methods at different significance levels. The two cross-validation methods are

implemented by using one year of observations as a unit. For instance, leave

one out cross-validation leaves out the observations in one year each time.

Analogously, ten-fold cross-validation divides the whole data set into 10

subsamples, and each subsample is used as a test data set once to measure the

predicted residual sum of squares while the remaining nine subsamples are

employed as training data. In both cases, predicted values are computed as the

mean value in the associated region. A model is then selected by choosing the

alpha level with the smallest PRESS in each method. In our test runs, we

obtained

=0.5 using ten-fold CV while

=0.01 was obtained with the

leave-one-out method. A significance level of 0.5 did not entail a large

reduction in the number of terminal regions (from 126 to 109), whereas

merging the number of regions decreased to only 44 when

was 0.01.

Figure 5 and 6 compare the results of the two cross-validation methods in terms

of the derived PRESS when different significance levels were used. Both

graphs have a minimal PRESS that was used to select the significance level in

the merging step.

15

3300

3200

3100

PRESS

3000

2900

2800

2700

2600

2500

0

0,2

0,4

0,6

0,8

1

alpha level

Figure 5. Total sum of squared prediction errors using ten-fold cross-validation

to select the prediction model

3820

3800

3780

PRESS

3760

3740

3720

3700

3680

3660

3640

0,000001

0,00001

0,0001

0,001

0,01

0,1

1

alpha level

Figure 6. Total sum of squared prediction errors obtained by leaving out one

year of observations at a time.

Next step of the algorithm is to remove the superfluous segmentations, and

figure 7 displays the region mean for each observational cell after merging the

adjacent regions. Compared with the previous region means graph, there are

less short lines or single dots after merging procedure, and hence the data

before or after a change-point becomes more unified in one series. In more

detail, the merging procedure was based on the derived 126 rectangles, and

each time the merging of all possible adjacent pairs of regions were evaluated

16

using an F test statistic that measured if the difference in mean between the two

regions was small enough. Furthermore, the corresponding p-value of each test

statistic was calculated and used as a criterion for selecting which pair to join.

The algorithm always joins the pair with the largest p-value and stops when

this value is less than or equal to a given threshold. For the synthetic data set,

0.01 is chosen as a significance level to stop merging regions.

Station 1

14

Station 2

Station 3

Cell mean of temperature

9

Station 4

Station 5

Station 6

4

Station 7

Station 8

-1

1900

Station 9

1920

1940

1960

1980

2000

Station 10

Station 11

-6

Year

Station 12

Figure 7. Region means after merging regions not significantly different at level

0.01.

The sum of squared residuals (RSS) of all the regions are calculated and

recorded in each step of our CART splitting and region merging procedure

respectively. The total RSS is decreasing as the number of regions increases by

recursive splitting while it is increasing slowly when the algorithm merges the

regions. Figure 8 illustrates that our CART splitting algorithm produced 126

terminal regions under the condition that the F-statistic for splitting a region

should be greater than 2.71 (with a significance level of 0.1). In addition, this

figure compares the results from merging adjacent regions using different

stopping rules. The final number of regions after merging was 109 with a

significance level of 0.5 and 44 with a significance level of 0.01. In more detail,

17

the merging was stopped when the maximum p-value was less than or equal to

the given significance level.

3700

Total RSS

3200

2700

Splitting

Joining0.01

2200

Joining0.5

1700

1200

0

50

100

150

No.of regions

Figure 8 Total residual sums of squares observed during: (i) the binary

splitting, (ii) merging of regions with significance level 0.01, and (iii) merging of

regions with significance level 0.5.

Figure 9 shows the adjusted temperature after removing the cumulated level

shifts from the original cell value. It can be seen that the temperature in each

series moves along its main trend with small fluctuation and there are no

obvious level shifts although there are still some outliers. The splitting

algorithm detected 131 change-points while there were 92 change-points left

after merging adjacent regions. The algorithm worked well in the sense that it

produced a large number of homogeneous rectangles and then reduced the

model complexity by removing superfluous segments. We regarded the abrupt

level shifts as false or artificial changes. Accordingly, the corrected series

obtained by removing cumulated level shifts should provide a more realistic

picture of the variation in temperature over time.

18

Station 1

14

Station 2

Corrected temperature

Station 3

Station 4

9

Station 5

Station 6

4

Station 7

Station 8

-1

1900

1920

1940

1960

1980

2000

Station 9

Station 10

Station 11

-6

Year

Station 12

Figure 9 Cell means after removing cumulated level shifts.

15

Predicted value

10

-10

5

0

-5

0

5

10

15

20

-5

-10

Observational value

Figure 10 Predicted versus observed values, when the model selection was

based on 10-fold cross-validation with 0.01 as the significance level for

stopping the merging of regions.

Predictions and prediction errors obtained from the ten-fold cross-validation

method are plotted in figures 10 and 11. The first one describes the

goodness-of-fit of the prediction model. The second graph shows the prediction

errors versus its corresponding predictions. As can be seen, the residuals have

zero mean and small variance, which also indicates that the predicted values

are reasonable.

19

6

4

Predicted errors

2

0

-10

-5

-2

0

5

10

15

-4

-6

-8

Prediction

Figure 11 Prediction errors versus predicted values obtained by 10-fold

cross-validation with 0.01 as significance level for stopping the merging of

regions.

5.1

Analysis of observational water quality data

The integration of our tree model into a back-fitting algorithm that alternates

between estimation of smooth trends and change-points enabled a

decomposition of a given vector time series into: (i) a smooth trend surface, (ii)

a piecewise constant function representing abrupt level shifts, and (ii) irregular

variation or noise. The results obtained are illustrated in figures 12-15.

Mean total-P

7,3

7,2

7,2-7,3

7,1

7,1-7,2

7

7-7,1

6,9

6,9-7

6,8

1991 1993

"D0.5"

1995 1997

1999 2001

2003 2005

Figure 12 Corrected trend surface

20

6,8-6,9

Cumulated level shifts

2

1

1-2

0

0-1

-11991 1993 1995 1997

1999 2001

-2

"D0.5"

2003

-1-0

-2--1

2005

-3--2

-3

Year

Noise

Figure 13 Cumulated level shifts standardized to mean zero.

2

1,5

1

0,5

0

-0,51991

1993 1995

1997 1999

-1

2001

-1,5

1,5-2

1-1,5

0,5-1

0-0,5

"D0.5"

2003

2005

-0,5-0

-1--0,5

-1,5--1

Year

Figure 14 Noise

6 Discussion and conclusions

Detection of an unknown number of change-points in vector time series of data

is a computationally demanding task. This is particularly true for change-point

detections integrated into larger algorithms where the detection step is repeated

many times. The work presented in this thesis demonstrated that the

21

computational burden can be substantially reduced by using a tree algorithm to

identify a set of candidate change-points.

Our tree algorithm in which the space of time points and vector coordinates is

partitioned into rectangular is very fast. This is so because the binary splitting

is based solely on the number of observations and their sum in rectangular

subsets of the input domain. Theoretically, the binary splitting is an O(n)

algorithm, i.e. the computational burden is approximately proportional to the

number of observations. The dynamic programming algorithm described by

Hawkins (2001) is an O(n2) algorithm that will take much longer time for large

datasets.

The merging of rectangles into larger subsets of more complex shape is also a

fast process, because it is based solely on the number of observations and their

sum in the already identified regions or segments. In addition, the number of

candidate change-points is typically much smaller than the number of

observations. Test runs involving surrogate temperature data confirmed the

computational speed of our tree algorithm.

The integration of our tree algorithm into a back-fitting algorithm that

alternates between estimation of smooth trends and change-points is still in its

infancy. However, our test runs involving water quality data demonstrated that

such algorithms can be used to partition a vector time series into three parts: (i)

a smooth trend surface, (ii) a piecewise constant function representing abrupt

level shifts, and (iii) irregular variation or noise.

The stopping rules for growing and reducing our tree model require further

work. We used hypothesis testing and cross-validation for the model selection,

but none of these methods performed satisfactorily. The F-tests are not

theoretically correct, because we considered the maximum gain or loss for

22

large sets of splitting and merging operations. Cross-validation has previously

been successfully used to fit smooth response surfaces to observed data

(Grimvall et al., 2008). However, when the model includes abrupt level shifts

at unknown time points it is not obvious how models fitted to training sets shall

be used to predict the response at untried points.

Despite the preliminary character of much of the work in this thesis, it shall not

lessen the achievements that have been made. There is obviously a great

demand for methods that can detect and estimate abrupt level shifts in the

presence of smooth trends, and our work shows that using a tree algorithm to

identify a set of candidate change-points is a key step in the development of

such algorithms.

7 Literature

[1] Alexandersson H. (1986). A homogeneity test applied to precipitation data.

Journal of Climatology 6:661-675.

[2] Caussinus, H., and Mestre, O. (2004). Detection and correction of artificial

shifts in climate series, Applied Statistics 53: 405–425.

[3] Erdman C. and Emerson, J.W. (2008) A fast Bayesian change-point analysis

for the segmentation of microarray data. Bioinformatics 24:2143-2148.

[4] Grimvall A., Wahlin K., Hussian M., and von Bromssen C. (2008).

Semiparametric smoothers for trend assessment of multiple time series of

envirionmental quality data. Submitted to Environmetrics.

[5] Hastie T., Tisbshirani R., and Friedman J. (2001). The elements of statistical

learning: Data Mining, Inference, and Prediction. Springer: New York.

[6] Hawkins D.M. (1977). Testing a sequence of observations for a shift in

location. Journal of the American Statistical Association 68:941-943.

[7] Hawkins D.M. (2001). Fitting multiple change-point models to data

23

Computational Statistics & Data Analysis 37:323-341.

[8] Wahlin K, Grimvall A., and Sirisack S. (2008). Estimating artificial level

shifts in the presence of smooth trends. Submitted to Environmental

Monitoring and Assessment.

[9] Khaliq M.N. and Ouarda T. B. M. J. (2006). Short Communications on the

critical values of the standard normal homogeneity test (SNHT) Journal of

Climatology 27:681-687.

[10] Gey S. and Lebarbier E. (2008), Using CART to detect multiple

change-points in the mean for large samples. Technical report, Preprint

Statistics for Systems Biology 12.

[11] Picard F., Robin S., Lavielle M., Vaisse C., and Daudin J. (2005). A

statistical approach for array CGH data analysis. BMC Bioinformatics. 6:1-14.

[12] Sristava M.S. and Worsley K.J. (1986). Likelihood ratio test for a change

in multivariate normal mean. Journal of the American Statistical Association

81:199-204.

[13] Worsley K.J. (1979). On the likelihood ratio test for a shift in location of

normal population. Journal of the American Statistical Association 74:365-367.

24

8 Appendix

Table 1 PRESS corresponding to different significance levels derived from

ten-fold and leave-one-out cross-validation. The smallest PRESS in each

method is highlighted by yellow shadow and their corresponding alpha levels

are selected as significance levels used to stop the merging process.

Ten-fold CV

Significance level

0.9

0.8

0.7

0.6

0.5

0.4

0.3

0.2

0.1

0.01

0.001

0.0001

0.00001

0.000001

2536.2

2531.9

2531.2

2533.1

2526.7

2547.1

2560.9

2626.6

2645.7

2792.8

2785.8

2902.3

3083.4

3219.7

25

Leave-one-year-out CV

PRESS value

3788.6

3789.8

3787.0

3785.1

3786.6

3782.6

3796.1

3795.2

3772.6

3658.2

3725.1

3694.9

3738.0

3811.1

Table 2 Rectangle output derived from tree algorithm after the merging

procedure

Region

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

No. obs

35

13

47

17

70

9

99

21

105

25

54

34

63

18

35

14

48

12

5

21

13

3

7

10

1

36

1

4

20

17

1

1

14

1

10

6

27

4

65

11

1

7

13

2

Mean

-0.2

6.4

3.0

3.9

-1.8

0.6

11.0

7.3

7.4

4.4

-0.1

-1.1

3.9

2.1

4.8

1.2

13.4

4.3

4.1

8.4

8.9

1.8

1.0

11.8

-4.8

1.5

5.9

4.3

5.0

8.0

5.2

5.6

13.3

-4.4

8.5

1.9

1.3

10.2

9.2

2.3

-2.9

2.2

5.9

13.5

26

SSR

72.3

11.8

68.5

21.8

142.8

8.4

171.2

43.0

262.8

27.2

142.3

74.8

97.5

25.0

45.6

9.7

87.1

10.6

5.7

38.9

12.7

0.1

6.4

4.2

0.0

46.2

0.0

3.9

14.6

18.2

0.0

0.0

8.6

0.0

8.1

1.0

32.7

4.2

103.5

14.8

0.0

2.1

13.7

3.1

Table 3 is the final detection of change-points derived by our tree algorithm.

There are 92 change-points detected by implementing the whole tree

algorithm and they are presented by when and where the change-point has

occurred and how large is the level shift. “Nr” denotes the index of the

change-points; “Y” represents the vector coordinate, “S” indicates when the

change-point has occurred and “Level shift” evaluates the change in mean.

Detected change-points Detected change-points

Detected change-points

Nr. Y S Level shift Nr. Y S Level shift Nr. Y S Level shift

1 1 14

2.2

32 4 82

2.5

63 8 46

-5.9

2 1 23

1.8

33 5 14

2.0

64 8 48

1.8

3 1 27

-8.7

34 5 34

2.5

65 8 81

2.7

4 1 28

6.3

35 5 38

-7.1

66 9 5

3.7

5 1 45

4.4

36 5 39

3.5

67 9 8

-3.7

6 1 46

-7.7

37 5 46

-3.5

68 9 12

3.7

7 1 81

3.0

38 5 62

1.1

69 9 15

2.5

8 2 14

2.2

39 5 82

2.4

70 9 18

-2.5

9 2 23

1.8

40 6 3

-2.4

71 9 45

2.4

10 2 27

-2.3

41 6 13

2.4

72 9 46

-5.9

11 2 46

-3.3

42 6 42

-3.6

73 9 82

2.8

12 2 81

3.0

43 6 43

5.9

74 10 13

1.6

13 3 6

2.4

44 6 44

-5.9

75 10 26

2.1

14 3 9

-2.4

45 7 13

-5.8

76 10 29

-3.0

15 3 10

4.1

46 7 14

5.8

77 10 46

-3.6

16 3 11

-4.1

47 7 15

2.5

78 10 71

3.0

17 3 14

4.1

48 7 16

-7.8

79 10 75

-3.1

18 3 46

-4.0

49 7 17

7.8

80 10 81

3.1

19 3 82

1.3

50 7 38

-2.5

81 11 10

1.8

20 4 6

-1.9

51 7 40

2.4

82 11 45

-1.8

21 4 10

1.7

52 7 46

-5.9

83 11 46

-2.4

22 4 18

4.5

53 7 48

1.8

84 11 55

-1.7

23 4 19

-4.5

54 7 51

-1.8

85 11 56

2.4

24 4 20

2.0

55 7 52

1.8

86 11 80

3.0

25 4 33

-2.0

56 7 81

2.7

87 12 8

-5.4

26 4 45

3.5

57 8 4

-3.7

88 12 9

7.4

27 4 46

-7.5

58 8 5

6.2

89 12 46

-4.1

28 4 59

4.0

59 8 7

-2.5

90 12 79

2.4

29 4 60

-1.6

60 8 15

2.5

91 12 82

-4.2

30 4 71

-2.4

61 8 38

-2.5

92 12 83

4.7

31 4 76

1.9

62 8 40

2.4

27

Table 4 Water quality dataset representing phosphorus concentrations ( g/l) in

Lake Vänern.

Year

1991

1992

1993

1994

1995

1996

1997

1998

1999

2000

2001

2002

2003

2004

2005

Depth 0.5m

9.3

8

8.8

10.3

9.3

6

6

6.6

7

6.2

6.6

6.2

5.2

6

6.8

Depth10m

8.17

8.3

8.3

10.3

8.7

5.8

6.4

6

7.2

5.2

6.6

6

4.8

6.6

7

28

Depth20m

9.3

8.8

8.8

8.3

8.3

5.6

5.5

7.6

6.6

6

8.6

6.2

5.2

5.4

5.8