Examination TDDB68/72: Concurrent Programming and Operating Systems (Processprogrammering och operativsystem)


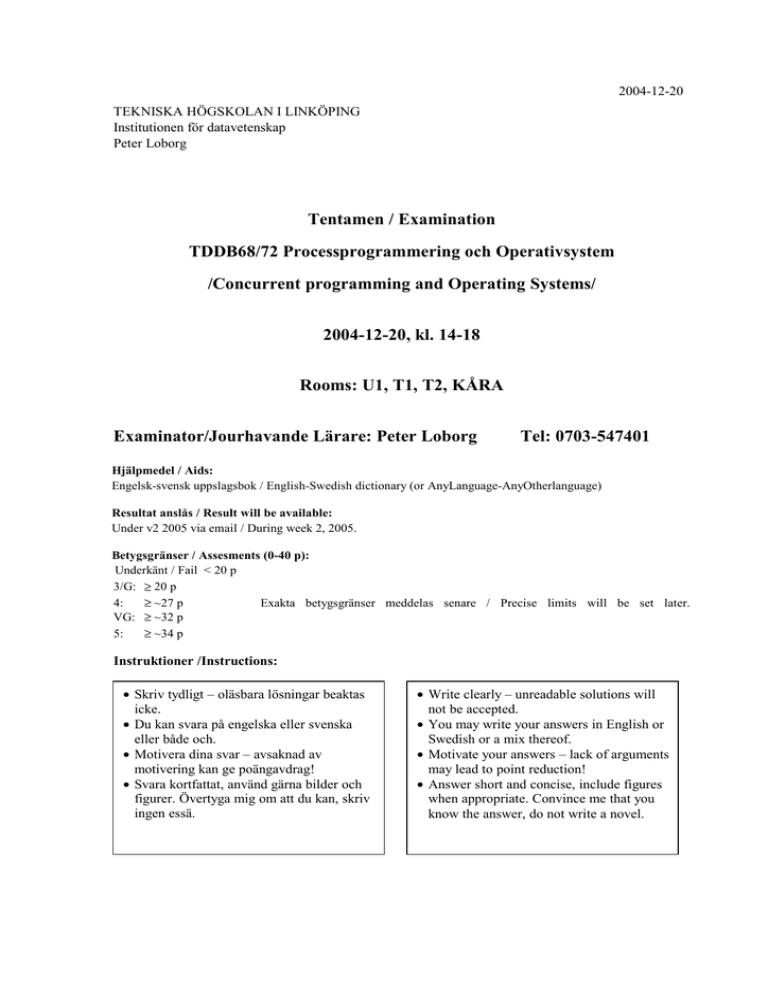

2004-12-20
Linköping Institute of Technology, Department of Computer and Information Science
Peter Loborg

Time: 2004-12-20, 14-18
Rooms: U1, T1, T2, KÅRA
Examiner / teacher on duty: Peter Loborg, tel. 0703-547401
Aids: English-Swedish dictionary (or any other bilingual dictionary)
Results: available via email during week 2, 2005.

Grade limits (0-40 p):
  Fail     < 20 p
  3 / G    ≥ 20 p
  4        ≥ ~27 p
  VG       ≥ ~32 p
  5        ≥ ~34 p
Precise limits will be set later.

Instructions:
• Write clearly – unreadable solutions will not be accepted.
• You may write your answers in English, in Swedish, or in a mix of both.
• Motivate your answers – lack of motivation may lead to point deductions!
• Answer briefly and concisely, and include figures when appropriate. Convince me that you know the answer; do not write a novel.

Assignment 1 – Deadlock handling [6p]
A classical example used to demonstrate starvation and deadlock problems is the "Dining Philosophers".
a) Using a resource allocation graph, show the situation when the philosopher system deadlocks. [1p]
b) Present a deadlock-free solution to the philosopher problem. You may write pseudo code, C or Java, or explain in plain text. What matters is that it is clear how your algorithm works, which synchronisation primitive(s) you are using, and when and how they are used. [2p]
c) Which one (or several?) of Coffman's four conditions did you invalidate in order to make your solution deadlock free? [1p]
d) Above you encoded a static solution (i.e., the system can no longer deadlock due to its design), but there also exist deadlock avoidance algorithms. One such algorithm is the Banker's Algorithm. What is the principal behaviour of this algorithm? Your answer should clarify what information the algorithm needs, how you would use the algorithm from within your application, i.e., as an alternative to your solution to question b, and how all this makes your system deadlock free while it still works correctly. [2p]

Assignment 2 – Processes and Threads [4p]
a) Describe and contrast the concepts of a process, a kernel thread and a user thread. Among other things, describe what happens (for the concepts to which this applies) when your code blocks on a system call. How does that affect the rest of your executing program and other executing programs? [3p]
b) In the Nachos labs, which of the concepts above did you implement? [1p]

Assignment 3 – Memory Management [5p]
Most CPUs provide things such as an MMU, segmentation and/or paging.
a) How does a simple segmented memory management system (which protects processes from each other) work? [2p]
b) When your physical memory is not enough, you may implement a paged virtual memory with demand paging. What is it and how does it work? [2p]
c) What is that thing called a TLB? How is it used, and what is the purpose of having it? [1p]

Assignment 4 – Scheduling [5p]
The table below lists a set of processes with arrival times, (expected) maximum execution times and priorities. Priority 1 is the highest priority, 4 is the lowest!

  Process   Arr. time   Exec. time   Priority
  P1        0           6            3
  P2        1           2            2
  P3        2           4            4
  P4        3           5            1

For each of the following scheduling algorithms, create a diagram (e.g., a Gantt chart) that shows how the processes are executed. The time quantum is set to 1.
a) FIFO
b) Shortest Job First without pre-emption
c) Shortest Job First with pre-emption
d) A strictly priority-driven scheduler (e.g., a Rate Monotonic Scheduler) without pre-emption
e) A strictly priority-driven scheduler with pre-emption

Assignment 5 – Discs and File System [9p]
Since a disc drive is a rather slow device, a number of read/write requests for different blocks of data will often queue up. The disc drive may then service these requests in whatever order it sees fit.
a) When the disc is about to choose which request to serve next, what property of the request (block number, disc platter number, cylinder number or something else?) is used to make the decision, and why that property? [1p]
b) Hard drives are rather slow devices. Still, the average time to read a block of data from a disc into primary memory is often smaller than the actual time it takes to read a single block of data from the disc into primary memory. How is this possible? What two mechanisms are used to achieve this? [2p]
c) What do we mean by a virtual file system, and why would we want to have one? [1p]
d) By using the Unix command echo "Hello!" >> bigfile.txt you are appending the string "Hello!" to the end of the 50-block file bigfile.txt.
Assume that the last block of this file has room for another line of text. Also assume that common information for the entire file system is cached in primary memory, but that no part of bigfile.txt is cached. How many blocks of data are read from the disc, and how many are written to the disc, while the command above executes if your file system uses… [5p]
   a) Linked allocation [1p]
   b) Contiguous allocation [1p]
   c) Indexed allocation using Unix I-nodes, where the file node has room for 10 direct references, 1 indirect and 1 double indirect reference, and where pure index blocks have room for 20 references [2p]
   d) Linked allocation using a FAT, where the FAT itself is one of the cached data structures [1p]

Assignment 6 – Protection and Security [5p]
The terms protection and security have been used in this course, but in practice we have focused mainly on protection.
a) What do we, in the context of this course, mean by these two words? [2p]
b) All mechanisms discussed in this area depend on the integrity of the operating system: some code or functionality cannot be reached or executed if you (the process) do not have the right to do so. What is the basic mechanism that must exist in our CPU and OS to provide such a level of integrity? In other words, what stops you from writing and executing an assembler program that changes the page table and totally corrupts the system? [1p]
c) An access matrix is an abstract view of how domains are assigned different rights. In reality it is never implemented as a matrix per se. There are at least two different types of implementations, and also some variants that combine the two. Our problem is that we have a huge repository of documents, some hundred thousand entries, and a large set of users, on the order of tens of thousands. A typical task is to list the ids of all documents a certain user has access to. Another is to revoke the rights of a user in our repository when that user leaves our organisation.
How would you choose (among the techniques presented in this course) to implement the access matrix in this case? Motivate your choice! [2p]

Assignment 7 – Processes, communication and the lot! [6p]
Now, at the end of this course, with your profound understanding of all details from the CPU hardware and upwards…
The notion of protected address spaces for different processes was introduced during the second lecture of the course. With it came the fact that it was no longer possible to use shared variables for communication. Since then we have discussed many details about memory management, so…
…what if you were to implement interprocess communication? That is, the ability for the processes within an OS to communicate with each other although they operate in different address spaces. The solution will have to use some form of system call to set up the connection, and perhaps for some synchronisation. However, it should not hand over all the data copying to the kernel. You may assume that the OS has a paged memory model.
The mechanism you implement shall have the following user process API:
• ComChan openComChan() is used by a process to wait for some other process to connect. As soon as any other process has made a connection (by calling connectComChan), this call will return a handle to the communication channel.
• ComChan connectComChan(int pid) will return a handle to a communication channel (a positive integer), or -1 if the operation failed. This operation shall never block (put the calling process to sleep). It will fail if the process pid does not exist or if it is not waiting for a connection.
• int send(ComChan cid, char* buff, int len) will send len bytes from the buffer buff to the open channel cid. The operation should block if there is insufficient room in the internal buffer used for the communication. It returns an integer to signal success (0) or failure (-1, e.g., if the other process has terminated).
• int receive(ComChan cid, char* buff, int bufLen) will wait until bufLen bytes have been received and placed into the buffer buff. It returns the number of bytes actually received, which may be fewer than requested if the other process closes the channel.
• close(ComChan cid) will close the channel. All data not yet received by the process that closes the channel are lost. The other process may still receive the last data sent. If a process terminates while holding an open channel, the OS shall close that end of the channel.
The operating system you extend is similar to most OSes. It has data structures for processes (i.e., AddrSpace, ProcessControlBlock or similar), a data structure for each running thread, and tables of all processes and all threads in the system. And you are now about to extend this operating system!
• What new data structures do you need, and which existing data structures do you want to change?
• What synchronisation primitives will you need, where, and how will they be used?
• To which part or parts of the existing OS would you need to add code, and how would that code operate?
Describe in natural language, as pseudo code, or in Java or C, or in a combination of them all! Draw diagrams! Become an OS designer!
Hint 1: You have implemented parts of this assignment as a test program in one of the Nachos labs!
Hint 2: It is common for these kinds of routines to allocate a record (struct) and return a pointer to that struct (which in C is the same as a large positive integer). You may use this principle regardless of the notation you use to describe the design, since we assume that the implementation will be in C.
Your design will be graded on its functionality and its efficiency (obviously inefficient solutions will lose points). Your design need not be foolproof, but it should work when used correctly and it should not open up a security hole.
GOOD LUCK & MERRY X-MAS!
ANSWERS:

1 – Deadlock…
a) Resource allocation graph: see fig. 8.2 on page 248 in Silberschatz/Galvin/Gagne. The resources are not interchangeable, so we must treat each fork as a unique resource. There should therefore be five boxes with one dot in each (or even without a dot), five circles representing the processes, and a circle of arrows between them. See fig. 8.6 or 8.7a.
b) The answer must present an algorithm so that we can see for sure that it works! See the conditions in the question.
c) …which of Coffman's four conditions?
d) The principal behaviour: the system keeps a record of the total (maximum) need of resources of each process. For each new request, it checks that there are still enough free resources for at least one of the processes to obtain all its needed resources. It further checks that a safe path exists. Suppose the process that could get all its resources were to allocate them all and then return them all. Then another process must be able to do the same, and then yet another one, until all are done. If granting a request would not leave such a safe path, the request is not granted and the requesting process has to wait. This all depends on the assumption that a process operates in three phases: it allocates resources, uses them, and de-allocates them. Once it has begun de-allocating, it cannot (re)allocate a resource. The answer must cover all three parts that are marked with emphasis above.

2 – Processes and threads…
a) Process – has memory limits, code and data. Consists of one or more threads.
Thread – has a stack and a backup store for all registers etc. Executes within the context (memory limits etc.) of a process.
Kernel thread – a thread that is scheduled and managed by the operating system. Its backup store is kernel data.
User thread – a thread that is scheduled and managed by the process it belongs to. Its backup store is user data.
At least one kernel thread is needed to execute a process (all the user threads in that process). If only one kernel thread is used, a blocking user thread will block the entire process (all threads).
If several kernel threads are used to execute the user threads, a blocking user thread will not block the other threads, and thus not the entire application. In no case will a blocking thread affect other processes.
b) We "implemented" kernel threads and the process, which then implicitly had only one user thread inside. This is a somewhat tricky question, since the relation between kernel threads and user threads is not mentioned explicitly… …For full credit, some (correct) argumentation about when and how a process becomes blocked must be presented.

3 – Memory Management
a) Segmented memory model: memory is allocated in chunks of different sizes, corresponding to the portions of code/data they are to be used for. Each process has a segment table with base and limit information for each segment used. The MMU uses this table to translate a logical address (segment number, offset) to a physical one. The offset is checked against the segment's limit, and an illegal offset causes a trap to the OS; this is what protects processes from each other. A legal offset is added to the base. The OS has to manage a free segment list and use techniques for managing this list. Examples are collapsing adjacent free segments into a larger free segment and using a policy for allocating segments, e.g., "best fit" or "first fit".
b) With demand paging, a page is brought into primary memory (PM) only when it is first referenced. The page table contains information about whether the page is in PM or not, and a "dirty bit" telling whether it has been modified (and possibly more flags). A memory access is processed as before, but the MMU may now issue a page fault interrupt if the referenced page is not available. The OS handler for that interrupt loads the missing page and restarts the execution. It may first have to throw some other page out, writing it back if it is dirty.
c) A cache in which address translations are stored. The MMU searches this cache before the page table is used. On a hit, the translation is obtained without the extra memory access(es) needed to read the page table, which is the purpose of having it.

4 – Scheduling
Note:
• FIFO is a non-preemptive technique. With pre-emption (time slicing) it is called Round Robin.
• SJF with pre-emption (c): depending on whether we compare original or remaining execution time when scheduling, P1 (at the end) and P4 may switch places.
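The schedules in a) to e) can be checked with a small simulator. The sketch below is an illustration and not part of the original answers. It hard-codes the process table from Assignment 4, steps through time in quanta of 1, and returns one character per quantum naming the running process. The names `Policy`, `key` and `simulate` are hypothetical. Ties are assumed to go to the lower process number, which here is also the earlier arrival. Both readings of c) mentioned in the note are included.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define NPROC 4
#define MAXT 64

/* Process set from the Assignment 4 table; priority 1 is the highest. */
typedef struct { int arrival, exec, prio; } Proc;
static const Proc procs[NPROC] = {
    {0, 6, 3}, /* P1 */
    {1, 2, 2}, /* P2 */
    {2, 4, 4}, /* P3 */
    {3, 5, 1}, /* P4 */
};

typedef enum {
    FIFO,                  /* a) */
    SJF_NONPREEMPTIVE,     /* b) */
    SJF_PREEMPT_REMAINING, /* c) comparing remaining execution time */
    SJF_PREEMPT_ORIGINAL,  /* c) comparing original execution time */
    PRIO_NONPREEMPTIVE,    /* d) */
    PRIO_PREEMPTIVE        /* e) */
} Policy;

/* Ranking key for a ready process: the lowest key is scheduled. */
static int key(Policy p, int i, const int remaining[]) {
    switch (p) {
    case FIFO:                  return procs[i].arrival;
    case SJF_NONPREEMPTIVE:
    case SJF_PREEMPT_ORIGINAL:  return procs[i].exec;
    case SJF_PREEMPT_REMAINING: return remaining[i];
    default:                    return procs[i].prio;
    }
}

/* Runs the process set with quantum 1 and returns one character per
   quantum: '1'..'4' for the running process, '-' for an idle CPU.
   On equal keys the lower process number wins. */
static const char *simulate(Policy p) {
    static char out[MAXT];
    int remaining[NPROC];
    int done = 0, t = 0, running = -1;
    bool preemptive = p == SJF_PREEMPT_REMAINING ||
                      p == SJF_PREEMPT_ORIGINAL || p == PRIO_PREEMPTIVE;

    assert(p >= FIFO && p <= PRIO_PREEMPTIVE);
    memset(out, 0, sizeof out);
    for (int i = 0; i < NPROC; i++)
        remaining[i] = procs[i].exec;

    while (done < NPROC && t < MAXT - 1) {
        /* A non-preemptive policy only chooses when the CPU is free;
           a preemptive one re-evaluates every quantum. */
        if (running < 0 || preemptive) {
            int best = -1;
            for (int i = 0; i < NPROC; i++) {
                if (procs[i].arrival > t || remaining[i] == 0)
                    continue; /* not arrived yet, or already finished */
                if (best < 0 || key(p, i, remaining) < key(p, best, remaining))
                    best = i;
            }
            running = best;
        }
        if (running < 0) { /* nothing ready: CPU idle this quantum */
            out[t++] = '-';
            continue;
        }
        out[t++] = (char)('1' + running);
        if (--remaining[running] == 0) {
            done++;
            running = -1;
        }
    }
    return out;
}
```

For example, simulate(PRIO_PREEMPTIVE) returns "12244444111113333". P1 runs for one quantum, is pre-empted first by P2 and then by P4, resumes at time 8, and P3 runs last. The two SJF-with-pre-emption variants return "12233331111144444" and "12233334444411111", which shows P1 and P4 switching places as described in the note.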
[Gantt charts for a) to e): not reproduced in this version.]

5 – Discs…
a) The cylinder number is what matters, because moving the arm is a slow operation…
b) There is a cache inside the hard drive that contains all blocks from the last cylinders read, so some blocks can be found there without actually reading the disc. The OS also often requests more (adjacent) blocks of data than were actually asked for. As a result, requested blocks may already exist in the block cache in PM.
c) The virtual file system is an API that is common to most file systems. It allows an OS to use several different file systems, even simultaneously…
d) The numbers of accesses are:
   a. 50 r + 1 w (unless the directory holds a pointer to the last block; then it is only 1 r + 1 w)
   b. 1 r + 1 w (it is clear from the directory info which block to update)
   c. 4 r + 1 w. To find block nr 50, we read the I-node, which contains direct pointers to blocks 0..9. We skip the indirect block, which contains pointers to blocks 10..29. We then read the double indirect block, the first index block it points at (containing pointers to blocks 30..49), and the last block (block 49, the 50th block). This block must then be written back again.
   d. 1 r + 1 w (by following the pointers inside the cached FAT, we directly find which block to update)

6 – Protection…
a) Protection – the mechanisms with which we implement security. For example, dual mode operation is a protection mechanism, but it only provides security if properly managed.
Security – a measure of confidence that the system is as intended, i.e., that no one has managed to tamper with it in a manner we did not allow.
b) The basic mechanism is dual mode operation. Instructions that alter MMU registers and similar state cannot be executed by normal user-level programs. Only code running in kernel mode can execute them, and kernel mode is entered only through an interrupt (or trap) handled by the operating system.
c) The two operations mentioned in the assignment are best served by a capability list implementation. Each user has a list of "document access capabilities" describing what they can and cannot do with a given document. Listing all documents a certain user has access to is then simply a matter of traversing this list of capabilities, and revoking the access rights amounts to clearing that list. Other operations are not so well catered for by this solution, for example finding all users who have read access to a specific document. An alternative may be a lock-and-key approach… It is important that the answer is motivated!

7 – ComChan
The OS needs a new table to store descriptions of open communication channels (ComChanTable). A description must contain…
• information about the server process (the one that created the channel),
• a boolean flag indicating whether this process is waiting for a connection,
• information about the current client process (if any),
• which memory page(s) are used as shared pages between the two processes to implement the shared buffer.
The shared page(s) must contain two bounded buffers, one for each direction of communication. All synchronisation primitives for these bounded buffers must also be allocated on these shared pages. All data about the channel and its two bounded buffers (including these synchronisation primitives) is defined in a record (struct), and an instance of that struct is allocated on the common page(s). The synchronisation primitives (e.g., counting semaphores) are assumed to exist in kernel space; only their handles are stored in the ComChan struct. Operations on these synchronisation primitives are done via system calls.
The operations will do the following:
• openComChan() – must be a system call. It creates a new entry in the ComChanTable and allocates, e.g., two memory pages in the process address space for this purpose. One instance of a bounded buffer is allocated on each of the pages.
• connectComChan() – must be a system call. It looks for an entry in the ComChanTable with the proper pid and checks that the client pid field is free or that the boolean flag is true (i.e., that the channel is not busy). It then fills in the client pid, maps the shared page(s) into the client's address space, changes the status of the boolean flag, and places the server process in the ready queue (i.e., wakes it up). Finally, it returns to the caller with the proper return value.
• send() – an ordinary library procedure executed in user space that operates on the struct pointed to by the ComChan handle. It performs the normal insert (write) operation on a bounded buffer. System calls are used only for the operations on the synchronisation primitives, not for adding data to or reading data from the buffer.
• receive() – see the send() operation. If the channel is empty, this operation checks that the channel is still open before waiting for more data. If the channel is closed, it instead returns an appropriate value (the actual number of bytes read).
• close() – a system call. It marks the channel as closed on that end. If the other end is currently sleeping while awaiting more data, it should be woken up; it will then find the channel closed and return 0. If the other end is already closed, the entry in the ComChanTable is removed.
For full credit, the main idea of this design must be presented, including the facts that…
• for send() and receive(), only the signalling on the synchronisation primitives must be implemented as system calls (if we want to avoid busy waiting),
• we use shared memory pages in our paged memory management system, accessible only to the two processes involved in the communication,
• other processes cannot access these pages by any means other than a successful call to the open or connect methods.
It is not required that a server side can handle more than one request, erre