Lectures TDDD10 AI Programming Machine Learning


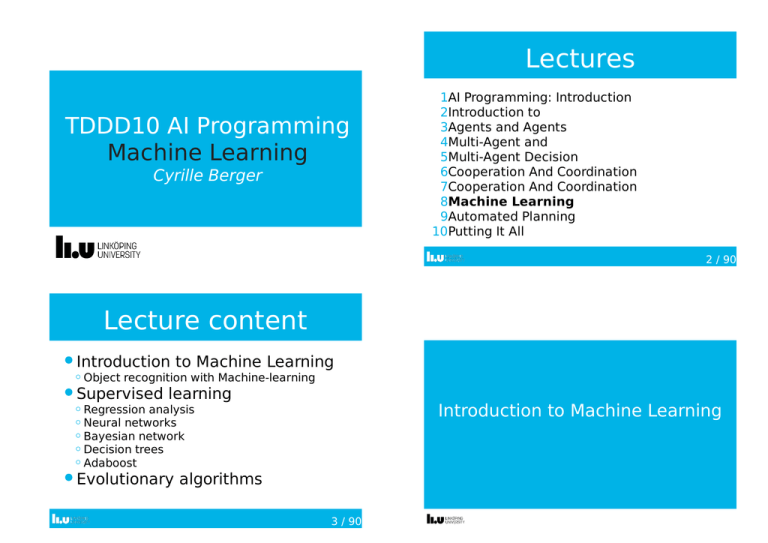

Cyrille Berger

Lectures
1. AI Programming: Introduction
2. Introduction to …
3. Agents and Agents …
4. Multi-Agent and …
5. Multi-Agent Decision …
6. Cooperation And Coordination
7. Cooperation And Coordination
8. Machine Learning
9. Automated Planning
10. Putting It All …

Lecture content
- Introduction to Machine Learning
  - Object recognition with Machine-learning
- Supervised learning
  - Regression analysis
  - Neural networks
  - Bayesian network
  - Decision trees
  - AdaBoost
- Evolutionary algorithms

What is Machine Learning about?
To imbue the capacity to learn into machines. Our only reference frame for learning is from the animal kingdom… but brains are hideously complex machines, the result of ages of random mutation.
Like much of AI, Machine Learning takes an engineering approach! Humanity didn't first master flight by just imitating birds, although there is some occasional biological inspiration.

What is learning?
"Any change in a system that allows it to perform better the second time on repetition of the same task or on another task drawn from the same distribution" (Herbert Simon)

Why Machine Learning? (1/2)
- There is no (good) human expert knowledge: e.g. predicting whether a new compound will be effective for treating some disease; weather forecasts; the stock market.
- It is hard to implement an algorithm by hand: e.g. image and scene interpretation; handwritten text recognition.
- It may be impossible to manually program for every situation in advance: the model of the environment is unknown, incomplete or outdated, and if the agent cannot adapt, it will fail.

Why Machine Learning? (2/2)
- Big data: data collections are too large for humans to cope with (Facebook, Google...).
  - Library of Congress text database of ~20 TB.
  - AT&T: 323 TB, 1.9 trillion phone call records.
  - World of Warcraft utilizes 1.3 PB of storage to maintain its game.
  - The Avatar movie is reported to have taken over 1 PB of local storage at Weta Digital for the rendering of the 3D CGI effects.
  - Google processes ~24 PB of data per day.
  - YouTube: 24 hours of video uploaded every minute. More video is uploaded in 60 days than all 3 major US networks created in 60 years. According to Cisco, internet video will generate over 18 EB of traffic per month in 2013.
- Approximating complex …
- Customizing the result of the algorithm: e.g. email classification (spam...).

The algorithms we have so far have shown themselves to be useful in a wide range of applications!

Scene Completion
Given an input image with a missing region, machine learning can help complete the image by using a large collection of photographs.
Scene Completion Using Millions of Photographs. James Hays and Alexei A. Efros. ACM Transactions on Graphics (SIGGRAPH 2007), vol. 26, no. 3, August 2007.

Object recognition with Machine-learning
Bag of words: a classification approach, handling more than 10000 object categories.

Stanford Helicopter Acrobatics; Recognition
…in narrow applications, machine learning can even rival human performance.

To Define Machine Learning
"A machine learns with respect to a particular task T, performance metric P, and type of experience E, if the system reliably improves its performance P at task T, following experience E" (Tom Mitchell)

From the agent's perspective, there are three types of learning algorithms:
- Supervised learning: learn to predict.
- Unsupervised learning: learn to understand and describe the data.
- Reinforcement learning: learn to act.

Supervised Learning
The correct output is given to the algorithm during a training phase. Experience E is thus tuples of training data. Performance metric P is some function of how well the predicted output matches the given correct output. Mathematically, it can be seen as trying to approximate an unknown function f(x) = y given examples of (x, y).

Supervised Learning: examples
- Learn to predict the risk level of a loan applicant based on income and savings.
- Applications of supervised learning:
  - Spam filters
  - Collaborative filtering: predicting if a customer will be interested in an advertisement
  - Ecological: predicting if a species is absent/present in a certain environment
  - Medical diagnosis

Unsupervised Learning
The task is to find a more concise representation of the data. Neither the correct answer nor a reward is given. Experience E is just the given data. Performance metric P depends on the task T.
[Figure: Unsupervised learning example.]
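To make "a more concise representation of the data" concrete, here is a minimal sketch using k-means, one common clustering algorithm (it is not named on the slides and is chosen here only for illustration). It replaces each 1-D point by one of k cluster centres. The data and the choice k=2 are hypothetical.

```python
import random

def kmeans_1d(points, k, iterations=20, seed=0):
    """Cluster 1-D points into k groups with Lloyd's k-means algorithm."""
    rng = random.Random(seed)
    centres = rng.sample(points, k)  # initialise the centres with k distinct data points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centre.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: abs(p - centres[c]))
            clusters[nearest].append(p)
        # Update step: move each centre to the mean of its cluster
        # (an empty cluster keeps its old centre).
        centres = [sum(c) / len(c) if c else centres[i] for i, c in enumerate(clusters)]
    return sorted(centres)

# Hypothetical data: two well-separated groups of values.
data = [1.0, 1.2, 0.8, 1.1, 9.0, 9.5, 8.7, 9.2]
print(kmeans_1d(data, k=2))
```

On this data the centres settle on the means of the two groups (about 1.025 and 9.1), so eight values are summarised by two numbers plus a cluster index per point.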
Applications of unsupervised learning
- Clustering: when the data distribution is confined to lie in a small number of "clusters", we can find these and use them instead of the original representation.
  - Market segmentation: divide a market into distinct subsets of customers. Collect different attributes of customers based on their geography and lifestyle, then find clusters of similar customers, where each cluster may conceivably be selected as a market target to be reached with a distinct marketing strategy.
- Dimensionality reduction: finding a suitable lower-dimensional representation while preserving as much information as possible.

Reinforcement Learning
In reinforcement learning:
- A reward is given at each step instead of the correct output.
- Experience E consists of the history of inputs, the chosen outputs and the rewards R.
- Performance metric P is some sum of how much reward the agent can accumulate.
- It is inspired by early work in psychology and by how pets are trained.
- The agent can learn on its own as long as the reward signal can be concisely defined.

Applications of reinforcement learning: robot control, planning, elevator scheduling, game playing (chess, backgammon...).
[Figure: Reinforcement learning example.]

Evolutionary algorithms
In evolutionary learning:
- Random agents are generated.
- At each step, the best performing agents are kept and combined to generate new solutions.
- Experience E consists of the history of solutions.
- Performance metric P is some measure of how well an agent performs.
- It is inspired by early work on the theory of evolution.
- The most classical example is the so-called genetic algorithm.
[Figure: Evolutionary algorithms example.]

Applications of evolutionary algorithms: non-convex optimization problems, training of neural networks, parameters, travelling salesman problem.

Supervised learning
Supervised learning can be seen as searching for an approximation to the unknown function y = f(x) given n examples of x and y: (x₁, y₁), …, (xₙ, yₙ). The goal is to have the algorithm learn from a small number of examples so that it successfully generalizes to new examples.
General principle: given the correct output y for a given input x, approximate the unknown function f(x) = y. There are many different algorithms, among which:
- Regression analysis
- Neural networks
- Bayesian networks
- Decision trees
- AdaBoost
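The idea of supervised learning as a search for a hypothesis that approximates f can be sketched on the loan-risk example. This is a minimal illustration under stated assumptions: the hypothesis space (a threshold on income + savings), the performance metric P (training accuracy) and the training data are all hypothetical choices made here, not taken from the slides.

```python
def accuracy(h, examples):
    """Performance metric P: fraction of training examples that h labels correctly."""
    return sum(h(x) == y for x, y in examples) / len(examples)

def make_hypothesis(t):
    """Hypothetical hypothesis space: predict low risk iff income + savings >= t."""
    return lambda x: "low" if x[0] + x[1] >= t else "high"

def learn_threshold(examples):
    """Search the hypothesis space for the threshold t with the best training accuracy."""
    candidates = sorted({x[0] + x[1] for x, _ in examples})
    return max(candidates, key=lambda t: accuracy(make_hypothesis(t), examples))

# Hypothetical training set (experience E): x = (income, savings) in thousands, y = risk level.
train = [((20, 1), "high"), ((25, 5), "high"), ((30, 2), "high"),
         ((45, 20), "low"), ((60, 10), "low"), ((50, 30), "low")]

t = learn_threshold(train)
h = make_hypothesis(t)
# Learned threshold, training accuracy, and the prediction for a new applicant.
print(t, accuracy(h, train), h((55, 15)))
```

Restricting the search to thresholds that occur in the data keeps it finite; richer hypothesis spaces need the optimisation-based training described next.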
The Steps of Supervised Learning
1. First, construct a feature vector xᵢ for each example by encoding the relevant problem data. The examples (xᵢ, yᵢ) form the training set.
2. The algorithm is trained on the examples by searching a family of functions (the hypothesis space) for the one that most closely approximates the unknown true function.
3. If the quality is poor at this point, change the algorithm or …

Regression analysis

Linear regression
Assume that the relation between input and output is linear: y = ε + x₁β₁ + … + xₚβₚ.
Example: learn the relationship between age and height.

Training a learning algorithm...
Feature vector xᵢ = (age), yᵢ = (height). We want to find an approximation h(x) to the unknown function f(x). How do we find parameters that result in a good hypothesis h? We define a loss function that measures how far the predictions h(xᵢ) are from the training outputs yᵢ, and then use optimisation to find the parameters that minimise it.

Logistic Regression (1/2)
In a very large number of problems in cognitive science and related fields:
- the response variable is categorical, often binary (yes/no; acceptable/not acceptable; phenomenon takes place/does not take place);
- the potentially explanatory factors (independent variables) are categorical, numerical or both.
Example: classify whether a day was sunny or rainy based on the daily sales of umbrellas and ice cream at a store. Feature vector xᵢ = (umbrellas, ice creams), yᵢ = "sunny" or "rainy".

Logistic Regression (2/2)
The logistic function: σ(z) = 1 / (1 + e⁻ᶻ).
Logistic regression formulation: P(y = 1 | x) = σ(ε + x₁β₁ + … + xₚβₚ). It is actually the same formulation as a 1-neuron neural network.

Limit of linear/logistic regression
In many cases the model is not quite linear.

Linear Models in Summary
Advantages:
- Linear algorithms are simple and computationally efficient.
- Training them is often a convex problem, so one is guaranteed to find the best hypothesis in the hypothesis space.
Disadvantages:
- The hypothesis space is very restricted; it cannot handle non-linear relations well.

Variations on regression analysis
- Polynomial regression: y = a₀ + a₁x + a₂x² + … + aₙxⁿ
- Multinomial logistic regression: for handling multiple classes
- Non-linear regression
- ...
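The loss-function-plus-optimisation recipe can be sketched for the umbrella/ice-cream example with logistic regression. This is a minimal sketch under assumptions: the sales figures are hypothetical, the loss is taken to be the mean cross-entropy (a standard choice, not specified on the slides), and the optimiser is plain batch gradient descent with an arbitrary learning rate.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """P(sunny | x): the linear model b + w.x passed through the logistic function."""
    return sigmoid(b + sum(wi * xi for wi, xi in zip(w, x)))

def train_logistic(examples, lr=0.1, epochs=2000):
    """Fit w, b by batch gradient descent on the mean cross-entropy loss."""
    n_features = len(examples[0][0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for x, y in examples:
            error = predict_proba(w, b, x) - y  # derivative of the loss w.r.t. the linear score
            for j in range(n_features):
                grad_w[j] += error * x[j]
            grad_b += error
        w = [wj - lr * gj / len(examples) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / len(examples)
    return w, b

# Hypothetical daily sales (umbrellas, ice creams) in tens; y = 1 for "sunny", 0 for "rainy".
days = [((3.0, 0.5), 0), ((2.5, 1.0), 0), ((4.0, 0.2), 0),
        ((0.2, 3.0), 1), ((0.5, 2.5), 1), ((0.1, 4.0), 1)]

w, b = train_logistic(days)
label = "sunny" if predict_proba(w, b, (0.3, 3.5)) > 0.5 else "rainy"
print(w, b, label)
```

Because this loss is convex in (w, b), gradient descent cannot get trapped in a poor local minimum here, which is the advantage of linear models listed above.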
Linear models are also widely used in applications:
- Recommender systems: Netflix's initial Cinematch was a linear regression, before their $1 million competition to improve it.
- At the core of the recent Google Gmail Priority feature is a linear classifier.
- And many more.

Neural networks
- Biologically inspired, with some differences.
- Basically a function approximation.
- Based on units (aka neurons), connected by links (aka axons).
- A unit is activated by stimulation.
- Structured in a network.

Neuron
Each neuron is a linear model of all the inputs passed through a non-linear activation function g.

Activation functions
The activation function is typically the sigmoid. The activation function in a perceptron is the step function.

Design of Neural Nets: Network Topology
Networks are composed of layers. All neurons in a layer are typically connected to all neurons in the next layer, but not to each other. A two-layer network (one hidden layer) with a sufficient number of neurons is good enough for many problems.

When to Consider Neural Nets
- Input is high-dimensional, discrete or real-valued.
- Output is discrete or real-valued.
- Output is a vector of values.
- The data is possibly noisy.
- The form of the target function is unknown.
- Human readability of the result is unimportant.

Training of neural networks
Like before, training is the result of an optimization! We can compute a loss function (errors) for the outputs of the last layer against the training examples. For the hidden layers, use the expected output value to compute the expected output value of each neuron in the hidden layer, and compute the delta needed for optimization.
"The trick is to assess the blame for an error and divide it among the contributing weights" (Russell & Norvig)

Obstacle avoidance
[Figure: Neural network application, obstacle avoidance.]

Artificial Neural Networks - Summary
Advantages:
- Very large hypothesis space; under some conditions it is a universal approximator of any function f(x).
- The model can be rather compact.
- Has been moderately popular in applications ever since the 90s.
- Tolerant to noisy data.
Disadvantages:
- Training is a non-convex problem with many local minima.
- Takes time to learn.
- Has many tuning parameters to twiddle with (number of neurons, layers, starting weights...).
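The training procedure described under "Training of neural networks" (compute the error at the output, then divide the blame among the hidden neurons) can be sketched in plain Python. Everything concrete here is an assumption for illustration: a 2-3-1 network of sigmoid units, squared error as the loss, the XOR task as toy data, and the learning rate, epoch count and seed.

```python
import math
import random

def sigmoid(z):
    """Sigmoid activation function."""
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_hidden, seed):
    """Random initial weights for a 2-input, n_hidden-hidden, 1-output network."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    return W1, [0.0] * n_hidden, W2, 0.0

def forward(params, x):
    """Each neuron: a linear model of its inputs passed through the sigmoid."""
    W1, b1, W2, b2 = params
    hidden = [sigmoid(sum(w * xi for w, xi in zip(W1[h], x)) + b1[h]) for h in range(len(b1))]
    return hidden, sigmoid(sum(w * a for w, a in zip(W2, hidden)) + b2)

def mse(params, data):
    """Loss function: mean squared error of the network output."""
    return sum((forward(params, x)[1] - y) ** 2 for x, y in data) / len(data)

def train(params, data, lr=0.5, epochs=5000):
    """Backpropagation with per-example gradient descent updates."""
    W1, b1, W2, b2 = params
    W1, b1, W2 = [row[:] for row in W1], b1[:], W2[:]  # copy so the initial params stay untouched
    for _ in range(epochs):
        for x, y in data:
            hidden, out = forward((W1, b1, W2, b2), x)
            # Delta of the output neuron: error times the derivative of the sigmoid.
            delta_out = (out - y) * out * (1 - out)
            # Blame is divided among hidden neurons through their outgoing weights.
            delta_hidden = [delta_out * W2[h] * hidden[h] * (1 - hidden[h]) for h in range(len(b1))]
            for h in range(len(b1)):
                W2[h] -= lr * delta_out * hidden[h]
                W1[h] = [w - lr * delta_hidden[h] * xi for w, xi in zip(W1[h], x)]
                b1[h] -= lr * delta_hidden[h]
            b2 -= lr * delta_out
    return W1, b1, W2, b2

# XOR: a classic task that a single linear neuron cannot solve.
xor = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
start = init_params(n_hidden=3, seed=1)
trained = train(start, xor)
print(mse(start, xor), mse(trained, xor))
```

Since training is non-convex, a different seed can end in a different (possibly worse) local minimum; only the drop in loss is expected, not a particular final value.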
Further disadvantages of neural networks:
- Topology design is …
- The weights in the network are very difficult to interpret or debug.
- The biological similarity is vastly over-simplified (and frequently overstated).

Abuse of neural networks
[Figure: Abuse of neural networks.]

Deep learning
New techniques have been developed to train multi-layer neural networks, using unsupervised techniques between layers. Technological development has helped improve parallel computation (especially on GPUs).
Deep learning applications: image recognition, speech recognition.

Bayesian network
A Bayesian network is a graph of probabilistic dependencies. It contains:
- variables as nodes;
- conditional dependencies as directed edges (each node holds its probability distribution given its parents).
[Figure: Bayesian network for modeling dependencies.]
[Figure: Bayesian network for solving crimes.]

Bayesian Network for SPAM Filtering
Bayesian networks are best known for popularizing learning spam filters.
Spam classification: each mail is an input; some mails are flagged as spam or not spam to create training examples.
Feature vector: encode the existence of a fixed set of relevant keywords in each mail.

Bayesian Network for Decision Making
[Figure: Bayesian network for decision making.]

Training a probabilistic model
How do we train a probabilistic model given examples? Generally by optimization, as we did with linear deterministic models, but deriving the loss function is a bit more complex.
In this case the hypothesis space is the family of fully conditionally independent and binary word distributions: P(spam | w₁, …, wₖ) ∝ P(spam) · P(w₁ | spam) · … · P(wₖ | spam). The only parameters in the model that need to be searched over are the probability distributions on the right-hand side. Here they have a simple closed-form solution: the word frequencies for examples of spam and non-spam in the training set, in addition to the frequency of spam in general!

Bayesian Networks - Summary
Advantages:
- Very easy to debug or interpret the weights in the network.
- Exposes the relationships between variables and converts easily into decisions.
Disadvantages:
- Difficult to learn.
- Topology design is critical, especially which nodes to include.
- Difficult to train cyclic networks.

Decision trees
A decision tree is a graph of possible decisions and their possible consequences, used to identify the best strategy to reach a given goal. What is great about decision trees? ...you can learn them.
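Decision trees are typically learned top-down by choosing, at each node, the attribute that best classifies the examples. A standard measure for this (as in the ID3 algorithm) is information gain: the entropy of the target labels minus the weighted entropy after splitting on the attribute. The sketch below computes it; the attribute names and examples are hypothetical.

```python
import math
from collections import Counter

def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * math.log2(n / total) for n in Counter(labels).values())

def information_gain(examples, attribute, target):
    """Expected reduction in entropy of `target` after splitting on `attribute`."""
    gain = entropy([e[target] for e in examples])
    for value in {e[attribute] for e in examples}:
        subset = [e[target] for e in examples if e[attribute] == value]
        gain -= len(subset) / len(examples) * entropy(subset)
    return gain

def best_attribute(examples, attributes, target):
    """The attribute selected for the current node: the one with the highest gain."""
    return max(attributes, key=lambda a: information_gain(examples, a, target))

# Hypothetical training examples.
days = [
    {"outlook": "sunny", "windy": False, "play": "no"},
    {"outlook": "sunny", "windy": True, "play": "no"},
    {"outlook": "overcast", "windy": False, "play": "yes"},
    {"outlook": "rain", "windy": False, "play": "yes"},
    {"outlook": "rain", "windy": True, "play": "no"},
    {"outlook": "overcast", "windy": True, "play": "yes"},
]

for a in ["outlook", "windy"]:
    print(a, round(information_gain(days, a, "play"), 3))
print(best_attribute(days, ["outlook", "windy"], "play"))
```

Here "outlook" separates the examples far better than "windy" (two of its three branches are pure), so it would become the root; the procedure then recurses on each branch.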
Decision trees are one of the most widely used and practical methods for classification. The goal is to create a model that predicts the output value based on several input variables. They are useful when one wants a compact and easily understood representation.
[Figure: Example of decision tree learning.]

Decision Trees Learning
Decision trees are learnt by constructing them top-down:
1. Select the best attribute for the root: each attribute is evaluated with a statistical test to find how well it classifies the training sample, and the best attribute is selected.
2. Descendants are created for each possible value of the attribute, and the training examples matching each value are propagated down.
3. The process is repeated for each descendant, which in turn selects its best attribute from the examples propagated to it.

Information Gain
We want the most useful attribute to classify the examples. Information gain measures how well a given attribute separates the training examples according to their target classification.
[Figure: Selecting the "best" attribute.]

Decision Trees - Summary
Advantages:
- They have a naturally interpretable representation.
- Very fast to evaluate, as they are …
- They can support continuous inputs and multiple-class outputs.
- There are robust algorithms and software that are widely used.
Disadvantages:
- Not necessarily an optimal …
- Risk of …

AdaBoost
Combining classifiers: Adaptive Boosting
AdaBoost is an algorithm for constructing a "strong" classifier as a linear combination of weak classifiers: H(x) = sign(Σₜ αₜ hₜ(x)), where each hₜ is a weak classifier and αₜ its weight.
The most common weak classifier used is a decision tree.

AdaBoost - Summary
Advantages:
- Easy to use.
- Almost no parameters to tune (except for the number of classifiers).
- Improves the performance of any learning algorithm.
Disadvantages:
- Overfitting.
- Can be slow to converge.

Limitations of Training a Learning Algorithm
Locally greedy optimization approaches only work if the loss function is convex w.r.t. w, i.e. there is only one minimum. The loss of linear regression models is always convex; however, more advanced models are vulnerable to getting stuck in local minima. In the figure, start positions in the red area will get stuck in local minima!
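The local-minimum problem, and random restarts as a remedy, can be illustrated on a one-dimensional loss. This is a sketch under assumptions: the loss function sin(3w) + 0.1w², the step size, the number of restarts and the search interval are all hypothetical choices made here.

```python
import math
import random

def loss(w):
    """Hypothetical non-convex loss with many local minima."""
    return math.sin(3 * w) + 0.1 * w * w

def gradient(w):
    """Derivative of loss(w)."""
    return 3 * math.cos(3 * w) + 0.2 * w

def gradient_descent(w, lr=0.01, steps=2000):
    """Locally greedy optimisation: ends in the minimum of the basin where w starts."""
    for _ in range(steps):
        w -= lr * gradient(w)
    return w

def random_restarts(n_restarts=20, low=-10.0, high=10.0, seed=0):
    """Run gradient descent from several random start positions and keep the best end point."""
    rng = random.Random(seed)
    end_points = [gradient_descent(rng.uniform(low, high)) for _ in range(n_restarts)]
    return min(end_points, key=loss)

stuck = gradient_descent(5.0)  # a single start that lands in a poor local minimum
best = random_restarts()
print(stuck, loss(stuck))
print(best, loss(best))
```

Each restart is still only locally greedy; restarts merely raise the chance that at least one start lies in a good basin, so they give no guarantee of the global optimum.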
Care should be taken when training models that can get stuck in local minima, for example by using random restarts.

Evolutionary Techniques
Individuals compete, and the most fit survive and generate new offspring for the next generation.
Evolutionary programming: the program structure is fixed, and the numerical parameters are allowed to evolve. An individual is a set of parameters.

Algorithmic perspective
For each generation:
1. For each individual (aka set of parameters), compute the fitness function.
2. Combine the solutions to create offspring.
3. Introduce random mutation.
4. Select the individuals for the next generation.

Selection
The fitness value, computed by a fitness function, determines how fit the individual is.
- Roulette-based selection: the fitter the individual is, the more chance it has of being selected.
- Tournament selection: 2+ individuals are randomly selected, and the fitness value determines the probability of winning (a restricted roulette-based selection).
- Elitism selection: a number of the most fit individuals are always selected; the rest is decided by another algorithm.

Generate Offspring: Offspring Operators
- Encoding: matching an individual's genes to a solution in the problem space.
- Crossover: N individuals -> M new individuals.
- Mutation: a small change in one individual.

A Genetic Algorithm Problem
- Encoding: how do we encode so that the solution space exactly matches the problem space?
- Fitness function: what is a good solution? How much does it differ from another solution?
- Objective function: what is the objective? When are we …
- Other variables: initial population, selection/recombination, mutation, end.
[Figure: Genetic algorithm for robots.]

Evolutionary Techniques - Summary
Advantages:
- Moves a population of solutions toward the optimal solution defined by the fitness function over a number of generations.
- An optimisation technique, if you can encode …
- Solves problems with multiple solutions and multiple minima!
Disadvantages:
- Slow to converge.

CMA-ES: Covariance Matrix Adaptation - Evolution Strategies
CMA-ES relies on two things:
- A probability distribution (with a mean and a covariance), used to draw new candidates.
- A record of the search path.
The search is conducted orthogonally to the expectation, to avoid convergence to a local minimum.
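The generic loop from "Algorithmic perspective" can be sketched as a small genetic algorithm. The problem and all settings are assumptions for illustration: individuals are bit strings, the fitness function is OneMax (count the 1-genes), crossover produces one child from two parents, and the population size, mutation rate and number of elites are arbitrary. Tournament selection is implemented as a roulette restricted to randomly drawn contenders, as described in the Selection slide.

```python
import random

def fitness(individual):
    """Hypothetical fitness function (OneMax): the number of 1-genes."""
    return sum(individual)

def tournament(population, rng, size=2):
    """Tournament selection: a roulette restricted to `size` randomly drawn individuals."""
    contenders = rng.sample(population, size)
    weights = [fitness(c) + 1 for c in contenders]  # +1 so an all-zero individual can still win
    return rng.choices(contenders, weights=weights, k=1)[0]

def crossover(a, b, rng):
    """One-point crossover: 2 parents -> 1 child."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def mutate(individual, rng, rate=0.02):
    """Random mutation: flip each gene with a small probability."""
    return [1 - g if rng.random() < rate else g for g in individual]

def genetic_algorithm(n_genes=30, pop_size=40, generations=60, n_elite=2, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_genes)] for _ in range(pop_size)]
    history = [max(map(fitness, population))]
    for _ in range(generations):
        # Elitism: the fittest individuals are always kept for the next generation.
        elite = sorted(population, key=fitness, reverse=True)[:n_elite]
        # The rest of the next generation: selected parents, crossover, then mutation.
        offspring = [mutate(crossover(tournament(population, rng), tournament(population, rng), rng), rng)
                     for _ in range(pop_size - n_elite)]
        population = elite + offspring
        history.append(max(map(fitness, population)))
    return max(population, key=fitness), history

best, history = genetic_algorithm()
print(fitness(best), history[0], history[-1])
```

Thanks to elitism the best fitness can never decrease from one generation to the next, but, as the summary notes, nothing guarantees that the global optimum is reached within a given number of generations.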
Further disadvantages of evolutionary techniques:
- Some optimisation problems cannot be solved.
- No guarantee to find a global optimum.
- Not reasonable for online applications!

CMA-ES - Summary
Advantages:
- Faster convergence rate.
- Detects correlations between parameters.
Disadvantages:
- No guarantee to find a global optimum.

Conclusion
- Introduction to Machine Learning
- Supervised learning: linear regression, neural networks, Bayesian networks, decision trees, AdaBoost
- Evolutionary techniques