How does Metaprogramming affect software flexibility in Object-Relational Mapping


Abstract—Metaprogramming is an important element of object-relational mapping. It is needed because the mapper has to analyze the structure of classes, and the result is an adaptable mapper for data models. In this paper we discuss the flexibility of object-relational mappers that apply metaprogramming. Flexibility is an important property for the maintainability of software, because flexible software or software components can adapt to different requirements, in the same way that design patterns provide components whose inner parts are flexible. Moreover, modifications and integrations of data models should be easy to carry out. Therefore, we try to quantify, measure and compare object-relational mapping with conventional data access, and to outline the flexibility gained by using metaprogramming.

I. INTRODUCTION

Object orientation is a well-known programming paradigm for creating flexible software components. With polymorphism, for example, we can implement reusable software interfaces and abstractions and encapsulate the inner code [10]. Objects of the real world are modeled as classes in the object-oriented world. Since we want to keep the state of these objects for longer than the lifetime of an object, they need to be persisted to a database, e.g. a relational database. Relational databases, however, use tables (or relations) to store record sets. Thus, object orientation and relational databases represent data in different ways.

Furthermore, there are object-oriented elements such as inheritance, encapsulation and accessibility, and we would like to keep these elements of object orientation alive. Hence, persisting object states into a relational database management system (RDBMS) poses several challenges for a software developer [1]. This problem is also known as the object-relational impedance mismatch, and there are different ways to solve it. On the one hand, we can use a low-level API, such as the Java Database Connectivity (JDBC) API. Low level in this case means that the API offers a general interface to communicate with different relational database types, while the developer has to take care of the communication and of the transformation of objects into relational record sets and vice versa.

On the other hand, the problem can be solved with object-relational mapping (ORM). ORMs offer a higher level of abstraction than database drivers. Object-relational mappers consist of several metaprogramming components that examine the attributes of classes in order to provide a proper mapping in both directions. Thus, metaprogramming plays an essential role in object-relational mapping. The Hibernate ORM for Java, for instance, uses the reflection API to examine and analyse the data models. A developer using Hibernate has to declare and annotate the data model so that it can be integrated into the mapping. Since metaprogramming is the tool that lets the ORM analyze the code, this paper deals with the flexibility of object-relational mappers that apply metaprogramming.

Section II describes JDBC and gives a brief introduction to metaprogramming and object-relational mapping. Afterwards, it discusses the topic of software flexibility. Section III presents a case study that implements the same change with the object-relational mapper Hibernate and with a plain JDBC implementation. In section IV, we evaluate the case study and assess the flexibility of the Hibernate ORM compared with the plain JDBC solution. Finally, section V concludes the paper.

II. BACKGROUND

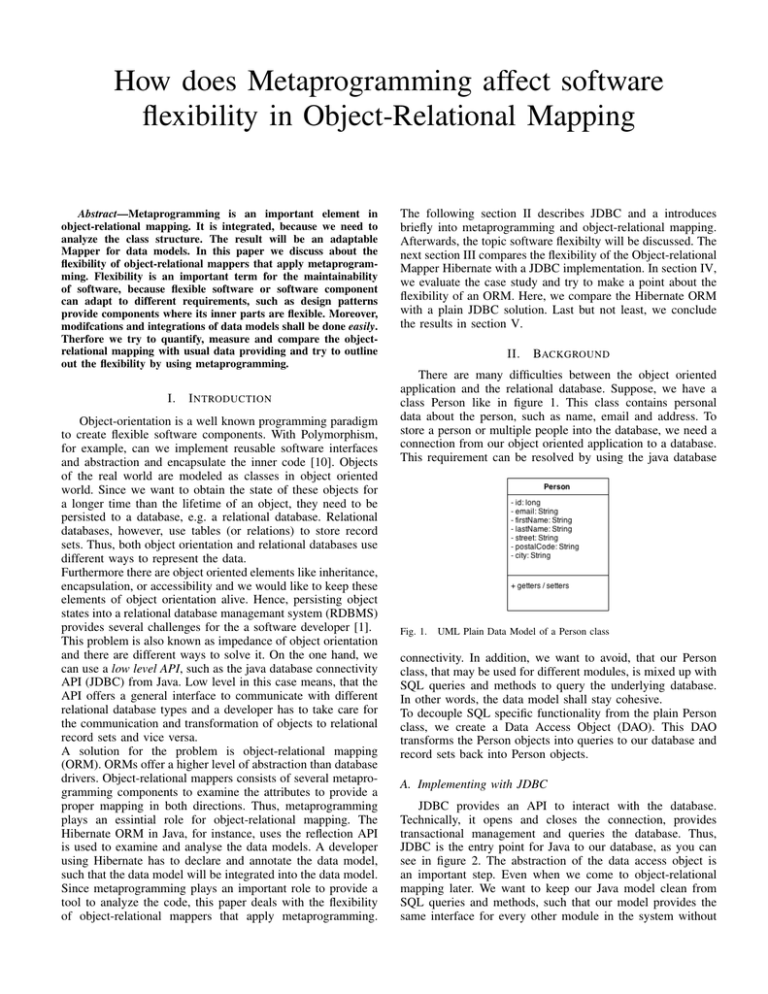

There are many difficulties between the object oriented

application and the relational database. Suppose, we have a

class Person like in figure 1. This class contains personal

data about the person, such as name, email and address. To

store a person or multiple people into the database, we need a

connection from our object oriented application to a database.

This requirement can be resolved by using the java database

Fig. 1.

UML Plain Data Model of a Person class

connectivity. In addition, we want to avoid, that our Person

class, that may be used for different modules, is mixed up with

SQL queries and methods to query the underlying database.

In other words, the data model shall stay cohesive.

To decouple SQL specific functionality from the plain Person

class, we create a Data Access Object (DAO). This DAO

transforms the Person objects into queries to our database and

record sets back into Person objects.
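The separation between data model and DAO can be illustrated with a minimal sketch. It rests on a few assumptions. The Person class is reduced to id, email, firstName and lastName. PersonDao and InMemoryPersonDao are hypothetical names. The in-memory map only stands in for a JDBC-backed DAO such as the one in listing 1. No database is involved; the sketch shows only where the responsibilities lie.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

public class DaoSketch {

    // Plain data model: no SQL and no persistence code.
    static class Person {
        private long id;
        private String email;
        private String firstName;
        private String lastName;

        Person(String email, String firstName, String lastName) {
            this.email = email;
            this.firstName = firstName;
            this.lastName = lastName;
        }

        long getId() { return id; }
        void setId(long id) { this.id = id; }
        String getEmail() { return email; }
        String getFirstName() { return firstName; }
        String getLastName() { return lastName; }
    }

    // Hypothetical DAO interface: all persistence operations live here.
    interface PersonDao {
        void create(Person person);
        Optional<Person> find(long id);
    }

    // Hypothetical in-memory stand-in for a JDBC-backed DAO.
    static class InMemoryPersonDao implements PersonDao {
        private final Map<Long, Person> rows = new HashMap<>();
        private long nextId = 1;

        @Override
        public void create(Person person) {
            // Mimics a primary key generated by the database.
            person.setId(nextId++);
            rows.put(person.getId(), person);
        }

        @Override
        public Optional<Person> find(long id) {
            return Optional.ofNullable(rows.get(id));
        }
    }

    public static void main(String[] args) {
        PersonDao dao = new InMemoryPersonDao();
        Person max = new Person("max@example.org", "Max", "Mustermann");
        dao.create(max);
        System.out.println(max.getId());                                       // 1
        System.out.println(dao.find(1).map(Person::getFirstName).orElse("?")); // Max
    }
}
```

Swapping InMemoryPersonDao for a JDBC implementation would leave both Person and the calling code unchanged. Keeping the model cohesive is meant to guarantee exactly that.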

A. Implementing with JDBC

JDBC provides an API to interact with the database. Technically, it opens and closes connections, provides transaction management and queries the database. Thus, JDBC is the entry point from Java to our database, as figure 2 shows. The abstraction provided by the data access object is an important step, even when we come to object-relational mapping later. We want to keep our Java model free of SQL queries and methods, so that the model provides the same interface to every other module in the system without any unrelated functionality. Assume you packed all the code of a DAO into your model. Your class could become very large, because there can be many different kinds of queries on your data model, e.g. you need to query all people, count the people with the first name Max, and create, update or delete a single person.

Fig. 2. Layered architecture of plain JDBC

A create method for a single person in a DAO can look like listing 1. We have a predefined SQL statement for the creation. This statement contains all attributes except the person's id. The question marks at the end are placeholders that are replaced by the parameters of a prepared statement. The developer has to make sure that the parameters are passed in the same order as the columns listed in the SQL statement.

public class PersonDAOJDBC implements IPersonDAO {
    ...
    private static final String SQL_CREATE =
        "INSERT INTO Person (email, firstName, lastName, ...) "
        + "VALUES (?, ?, ?, ...)";
    ...
    public void create(Person person) {
        // set values as parameters for the prepared statement,
        // in the same order as the columns in SQL_CREATE
        Object[] values = {
            person.getEmail(),
            // ... further values
        };
        try (
            Connection con = daoFactory.getConnection();
            // prepareStatement(...) is a helper of this DAO (not shown)
            // that binds the values to the placeholders
            PreparedStatement preStmt =
                prepareStatement(con, SQL_CREATE, true, values)
        ) {
            // Execute create operation
            int rowsAffected = preStmt.executeUpdate();
            if (rowsAffected == 0) {
                throw new DAOException("Creation failed! No rows were created.");
            }
            // Get new primary key as ID
            try (ResultSet generatedKeys = preStmt.getGeneratedKeys()) {
                if (generatedKeys.next()) {
                    person.setId(generatedKeys.getLong(1));
                } else {
                    throw new DAOException("Creating user failed, no ID obtained.");
                }
            }
        } catch (SQLException e) {
            throw new DAOException(e);
        }
    }
    ...
}

Listing 1. Method to create record set for a Person object

Assume that an attribute of a class and a column of a relation store the same value but are named inconsistently, e.g. firstName is the attribute name and first_name is the column name. Consequently, we have to take this into account in every query and every back-transformation in JDBC.

Now consider that you have many data models. To query your database, you probably have many DAOs to access the data of these objects. First, you would have to copy the database operations into every DAO, i.e. copy and paste a lot of code and modify it afterwards. This carries the risk of producing error-prone code or forgetting an attribute mapping. Second, if there are many naming inconsistencies, you would have to handle them in every DAO, both in its SQL statements and in the back-transformation.

Metaprogramming, such as the Java Reflection API, can be used to tackle this problem. The rest of this section introduces metaprogramming and how it is used in object-relational mapping.

B. Metaprogramming

Metaprogramming is used to examine, analyse and modify the run-time behaviour of code [8]. So-called computational reflection was established because we want to implement adaptable and reusable software [5]. The mechanisms of object orientation are flexible in many respects: we can design reusable code, and design patterns help us to create dynamic and adaptable software components [3]. Even though this is true for many use cases, we sometimes need information about the class of an object itself.

In Java, the Java Reflection API is used to inspect classes, to gather information about fields, methods and types, and to modify the runtime behaviour of objects. Listing 2 shows a small mapper. This mapper stores the type that should be mapped in the instance attribute myClass.

import java.lang.reflect.Field;
import java.sql.ResultSet;
import java.sql.SQLException;

public final class Mapper<T> {
    ...
    private final Class<T> myClass;

    public Mapper(Class<T> myClass) {
        this.myClass = myClass;
    }

    public T map(ResultSet rs) {
        T instance;
        try {
            // requires a default (no-argument) constructor
            instance = myClass.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            return null;
        }
        // attribute mapping: column name must equal field name
        for (Field f : myClass.getDeclaredFields()) {
            f.setAccessible(true);
            try {
                f.set(instance, rs.getObject(f.getName()));
            } catch (SQLException sqlE) {
                // no column with this name in the record set: ignore it
            } catch (IllegalAccessException | IllegalArgumentException e) {
                // field cannot be set (e.g. final field or type mismatch): skip it
            }
            f.setAccessible(false);
        }
        return instance;
    }
}

Listing 2. Example Mapper with Metaprogramming

When the method map is called with a record set, we try to instantiate an object of type T. Thus, our data model needs a default constructor so that newInstance can be invoked. If the instantiation succeeds, we try to map the columns of the record set to the attributes of the object. With this mapper we can replace the calls to the get methods of the ResultSet object, as listings 5 and 6 in section III exemplify. Note that this is a simple mapper that only sets the attributes of the known type T. It can neither manage joins nor resolve naming inconsistencies.
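To illustrate how metaprogramming can address the naming inconsistency as well, the following sketch extends the idea of listing 2 with a custom annotation. It is a hypothetical variant, not the paper's mapper and not the JPA API. The annotation ColumnName and the class ReflectiveRowMapper are illustrative names. A record set row is modelled as a Map from column names to values, so the example runs without a database.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.HashMap;
import java.util.Map;

public class ReflectiveRowMapper {

    // Hypothetical annotation: names the column when it differs from the field name.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.FIELD)
    public @interface ColumnName {
        String value();
    }

    // Column name for a field: the annotation value if present, else the field name.
    static String columnOf(Field f) {
        ColumnName c = f.getAnnotation(ColumnName.class);
        return c != null ? c.value() : f.getName();
    }

    // Maps one row (column name -> value) onto a new instance of the given type.
    public static <T> T map(Class<T> type, Map<String, Object> row)
            throws ReflectiveOperationException {
        T instance = type.getDeclaredConstructor().newInstance(); // needs a no-arg constructor
        for (Field f : type.getDeclaredFields()) {
            if (Modifier.isStatic(f.getModifiers())) {
                continue; // static fields do not belong to the object state
            }
            String column = columnOf(f);
            if (row.containsKey(column)) {
                f.setAccessible(true);
                f.set(instance, row.get(column)); // unboxes e.g. Long to long
            }
            // columns missing from the row are skipped, as in listing 2
        }
        return instance;
    }

    // Example data model whose column name differs from the attribute name.
    public static class Person {
        private long id;
        @ColumnName("first_name")
        private String firstName;

        public long getId() { return id; }
        public String getFirstName() { return firstName; }
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        Map<String, Object> row = new HashMap<>();
        row.put("id", 7L);
        row.put("first_name", "Max");
        Person p = map(Person.class, row);
        System.out.println(p.getId() + " " + p.getFirstName()); // 7 Max
    }
}
```

The renaming rule now lives in the data model, next to the field it concerns. The mapper itself stays generic, which is the same idea the JPA annotations in the next subsection build on.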

C. Object-relational Mapping

Object-relational mappers, e.g. Hibernate and OpenJPA, go a bit further than our example mapper from listing 2. They aim to simplify the handling of the object-relational impedance mismatch. Both implement the Java Persistence API (JPA) and take many use cases of database interaction into account, such as relationships between data models or naming inconsistencies. The connection between the database and the object-oriented system is still realized by JDBC, as figure 3 illustrates.

Fig. 3. Layered architecture of the system with an ORM

ORMs that implement JPA use annotated data models. The annotations are used to set database-related attributes, such as the annotation @Entity in listing 3. Through the provided annotations, an ORM can gather meta information about the database. ORMs analyse the class structure and build the mappings between relational tables and our objects. Even though annotations extend our code, they are far less intrusive than whole methods would be.

@Entity
public class Person {
    @Id
    @GeneratedValue(strategy = GenerationType.IDENTITY)
    private long id;
    ...
}

Listing 3. Data Model with JPA Annotations

The ORM must know beforehand which objects shall be mapped. Therefore, they often have to be configured in declarative XML files. These XML files are read when the application starts. Thereby, the ORM knows which classes shall be examined and integrated into the mapping process [1].

Since we have these two ways to interact with our database, we could ask ourselves: which solution is more flexible with respect to a new problem? Before we elaborate the case study in section III, we discuss the topic of software flexibility.

D. Software Flexibility

During software development, new requirements and problems can occur at any time. Thus, one demand in software engineering is a flexible software design [4]. If there are new requirements or problems, the existing parts should adapt to the new situation. But what exactly is flexibility? The IEEE defines software flexibility as follows:

Flexibility: The ease with which a system or component can be modified for use in applications or environments other than those for which it was specifically designed [4].

This definition allows a wide range of interpretation. One would usually say that a system or a component is flexible if its modifications require little implementation effort and its components are adaptable to new problems. Gamma et al. developed another definition of software flexibility in the context of design patterns:

Each design pattern lets some aspect of system structure vary independently of other aspects, thereby making a system more robust to a particular kind of change [3].

Gamma et al. rather refer to patterns whose inner components can be modified by following the pattern structure. In addition, the inner components are often exchangeable at runtime and thus flexible [3].

Even though both quotations make claims about software flexibility, in practice one would rather rely on empirical aspects of software development, such as time, knowledge, experience and labour.

Eden and Mens introduce another approach in their paper: they define the terms evolution step and evolution cost metric for software flexibility [2]. Their description of software flexibility uses the notation of complexity theory, the O notation. To distinguish between a current step and a future step in the process of software development, Eden and Mens define the term evolution step.

1) Evolution Step: Every software system consists of a set of functional and non-functional requirements, which can be abstracted as a problem. In addition, software development is an evolutionary process in which modifications affect the implementation [2].

To distinguish between changes of the problem and changes of the implementation, modifications of problems are called shifts and modifications of the implementation are called adjustments. A shifted problem together with the subsequently adjusted implementation is called an evolution step of the software [2]. According to Eden and Mens, an evolution step is defined by means of a function, where $P$ denotes the set of problems and $I$ the set of implementations:

Definition 1: The evolution function is a functional relation $E$ such that

$$E : P \times P \times I \rightarrow I, \qquad E(p_{old}, p_{shifted}, i_{old}) = i_{adjusted}$$

only if $i_{old}$ realizes $p_{old}$ and $i_{adjusted}$ realizes $p_{shifted}$. An evolution step is a pair

$$\varepsilon = \big((p_{old}, p_{shifted}, i_{old}),\ E(p_{old}, p_{shifted}, i_{old})\big) \quad [2]$$

This evolution function can be used to differentiate between two steps in the software development process. To determine the costs of added modules, Eden and Mens propose an evolution cost metric for adding software modules or components, which is based on their generalized cost metric [2].

2) Evolution Cost Metric for Adding Modules: Since we need a cost metric that accounts for adding or modifying modules, we can use the evolution cost metric [2].

Definition 2: The evolution cost metric $C^{Add/LoC}_{Modules}$ calculates the total cost of executing an evolution step $\varepsilon = ((p_{old}, p_{shifted}, i_{old}), i_{adjusted})$ as the total sum of the lines of code in each added module:

$$C^{Add/LoC}_{Modules}(\varepsilon) = \sum_{m \in Modules(i_{adjusted}) \setminus Modules(i_{old})} LoC(m)$$

$Modules(i_{adjusted}) \setminus Modules(i_{old})$ returns the set of modules added by the adjusted implementation, and $LoC(m)$ designates the number of lines of code of module $m$ [2].

According to Eden and Mens, a module can be a function, a class or a whole component package. The metric therefore counts added code only. In our case study, we count the code lines that the adjusted implementation adds to the old modules it modifies.

What does Add/LoC mean in this setting? Before we go into our case study and apply the definitions, we need to clarify when a code line counts as added code. This can be done trivially by the following equation:

$$\mathit{Add/LoC}(m) \approx \begin{cases} LoC(m) & \text{if } m \text{ counts towards the new module} \\ 0 & \text{otherwise} \end{cases}$$

By using the evolution step and the cost metric function, we can express software flexibility in O notation. Eden and Mens call an old implementation flexible if the cost of an evolution step stays constant, i.e. the number of affected classes does not grow with the size of the system. If the cost grows linearly, the software counts as rather inflexible:

$$O(C(\varepsilon)) = \begin{cases} O(1) & \text{implementation is flexible} \\ O(n) & \text{implementation is inflexible} \end{cases}$$

In other words, if a modification is needed in a flexible implementation, only a few lines have to be added. In contrast, when you change an interface specification by adding or changing a method and integrate those functions into your system, you have to touch many classes or add many lines of code.

Put concretely, consider an old implementation $i_{old}$ that realizes the problem $p_{old}$. At some point you get a new requirement, which can be seen as the problem $p_{shifted}$. Consequently, an implementation $i_{adjusted}$ would complete an evolution step according to Eden and Mens. Let us recapitulate our example from the beginning of section II: we have a persistable class Person.

Example: We shall integrate a new data model Address. All attributes regarding the address of a person (address, postal code, city, ...) shall be moved into this data model and removed from the entity Person. Consequently, the problem $p_{old}$ is the old state with just a single entity Person, the implementation of the Person class is $i_{old}$, and the new requirement above becomes $p_{shifted}$. These three elements of the evolution step lead to the implementation $i_{adjusted}$ of the modified Person and the new Address entity.

III. CASE STUDY: ADD ADDRESS ENTITY

In section II we introduced a small modification as an example of an evolution step. In this section we analyse its flexibility. We look at the costs of a rudimentary implementation with only little use of metaprogramming and compare it to a solution with an object-relational mapper. Before the change, we had one data model, Person, accompanied by a DAO that queries our underlying database. We design a new entity Address and transfer the address attributes of a person to it. Consider that several people can own different houses or apartments and that a house or apartment can be shared by several people. Hence, we have a many-to-many relationship, as figure 4 demonstrates.

Fig. 4. Layered architecture of plain JDBC
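On the object side, the many-to-many relationship described above means that Person and Address each hold a container of the other entity. The following sketch is a hypothetical illustration, not code from the case study. The classes are reduced to a name and a street, and the helper addAddress is an assumed convenience method. The sketch keeps both containers consistent whenever an association is added, which is the bookkeeping the relational table Person_Address has to mirror in the database.

```java
import java.util.HashSet;
import java.util.Set;

public class ManyToManySketch {

    static class Person {
        final String name;
        final Set<Address> addresses = new HashSet<>();

        Person(String name) { this.name = name; }

        // Adds the association on both sides; ignores duplicates.
        void addAddress(Address a) {
            if (addresses.add(a)) {
                a.residents.add(this);
            }
        }
    }

    static class Address {
        final String street;
        final Set<Person> residents = new HashSet<>();

        Address(String street) { this.street = street; }
    }

    public static void main(String[] args) {
        Person max = new Person("Max");
        Person erika = new Person("Erika");
        Address home = new Address("Main Street 1");
        Address office = new Address("Market Square 2");

        max.addAddress(home);
        max.addAddress(office);
        erika.addAddress(home);

        System.out.println(max.addresses.size());  // 2: Max lives at two places
        System.out.println(home.residents.size()); // 2: two people share one place
    }
}
```

Each pair (person, address) added here corresponds to one row of the relational table. With plain JDBC the DAO must write and read these rows itself.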

For this case study, we define the elements of the evolution step as follows:

• $p_{old}$ describes our single data model with its own DAO.
• $p_{shifted}$ is the introduction of a new data model Address and the establishment of the relationship between people and addresses.
• $i_{old}$ is the implementation of our single data model component, i.e. the implementation of our system for $p_{old}$.
• Finally, $i_{adjusted}$ is the completed implementation of our shifted problem.

These elements of the evolution step are inserted into the evolution function:

$$E(\text{single data model},\ \text{new data model},\ \text{implementation of single data model}) = \text{implemented many-to-many relationship for two entities}$$

This evolution step will be used for the cost metric function of Definition 2 in section II-D. First, we look at our implementation without full metaprogramming support.

A. Implementation without Metaprogramming

Like our model Person and its data access object, we have implemented the same structure for the data model Address. Its DAO takes care of every database query related to addresses, such as reading record sets of addresses or creating new addresses. All operations are quite similar to those of our DAO for class Person. Since we have a many-to-many relationship between people and addresses, both Person and Address have a container to store sets of the related entity. Thereby, we can query at how many places a person lives or which people live at the same place.

During this case study we look at our Person object. Here, we establish an association by adding a container of addresses as an instance attribute. The next step is to modify the database operations to create, read, update and delete record sets of a person. Listing 4 exemplifies the new queries that join the relational tables, either to insert or to read record sets of a person with an address. The first query joins our many-to-many relationship by using the relational table. The second query is required for the first one to work, because our relations need foreign keys to join the tables.

public class PersonDAOJDBC {
    ...
    private static final String SQL_FIND_BY_ID_WITH_ADDRESS =
        "SELECT a.* FROM Person p, Address a, Person_Address pa "
        + "WHERE (p.id = ?) AND (p.id = pa.person_id) AND (a.id = pa.address_id)";

    private static final String SQL_ESTABLISH_JOIN =
        "INSERT INTO Person_Address (person_id, address_id) VALUES (?, ?)";
    ...
}

Listing 4. Queries on our relational table Person_Address

In order to read a single record set of a person with all its addresses, we have modified the method find as listing 5 shows. First, we search for a person by its id. Afterwards, we send a second query to our database to get all associated addresses. We iterate over every address, pass each column of the record set to the appropriate attribute and add the address to a container. Finally, we inject the container into our Person object.

public Person findByIDWithAddress(long id) {
    Person p = find(id); // find single person
    Collection<Address> addresses = new ArrayList<>();
    /* query database and return
       all addresses into the record set rs */
    ...
    Address adr;
    while (rs.next()) {
        adr = new Address();
        // call all setters and pass the
        // appropriate database columns
        adr.setAddress(rs.getString("address"));
        ...
        addresses.add(adr);
    }
    // inject the addresses into the person
    p.setAddresses(addresses);
    return p;
}

Listing 5. Query a single person

As you can see, we call all setters and pass the appropriate database column to each of them. Suppose we have several DAOs that use addresses. In that case, we would have to change the code of every data access object in the system. Furthermore, this code is not safe, because mistakes can occur in the manual mapping. In addition, if the address model changes, we have to take care of that in the PersonDAO class, and the developer may fail to set a column properly. By using our small Mapper from section II, we can avoid the series of setter and getter calls and iterate over all mappable attributes via the Java Reflection API, provided that column names and attribute names are identical.

    ...
    while (rs.next()) {
        adr = (Address) Mapper.Get(Address.class).map(rs);
        addresses.add(adr);
    }
    ...

Listing 6. Map a record set to an Address object

In contrast to the variant that calls the setters, we let the reflection API resolve the appropriate attributes. The functionality is encapsulated in the class Mapper (see listing 2 in section II). However, we still run into some problems with this implementation:

• The naming inconsistency problem still exists. Nevertheless, calling the method map is more flexible, because we only need to modify the code inside the Mapper and not in every DAO.
• Moreover, the relationship is resolved outside the mapper class and managed by the data access object. Suppose a new data model Country is established and associated with the address of a person. We would have to query the countries as well and associate every address with a country. This relationship resolution should be done by the ORM, so that we just query our ORM and get the desired data sets.

This example shows only the query and mapping of a Person and its addresses. For comparison, we now present another adjusted implementation that accomplishes the same evolution step with the Hibernate ORM.

B. Implementation with ORM

In order to start with the implementation, a configuration file has to be created for the ORM. This configuration file contains the connection settings and lists the mappable classes. We have set the configuration entries for our class Person and annotated the class, as the source code excerpt in listing 7 shows.

@Entity
public class Person {
    @Id
    @GeneratedValue(strategy = GenerationType.IDENTITY)
    private long id;

    @Column(nullable = false)
    private String email;

    @Column(nullable = false)
    private String firstName;

    @Column(nullable = false)
    private String lastName;

    @ManyToMany(
        targetEntity = Address.class,
        cascade = {CascadeType.PERSIST, CascadeType.MERGE}
    )
    @JoinTable(
        name = "Person_Address",
        joinColumns = @JoinColumn(name = "person_id"),
        inverseJoinColumns = @JoinColumn(name = "address_id")
    )
    private Collection<Address> addresses = new ArrayList<Address>();
    ...
}

Listing 7. Person data model with JPA annotations

When we start our application, the model Person is integrated into the Hibernate ORM. The largest annotation is the one on the associated addresses, because we have to join the relational table and our address model. The relational table Person_Address, for example, uses different names for the ID keys: the column id of a person is named person_id in the relational table. By setting the CascadeType, we can define which database operations cascade. In listing 7 we allow our Person model to store or update its addresses when a Person is persisted or merged. The removal of a person does not delete an address record set, because we have not set a cascade type for this operation.

Even though we added a new entity Address and modified the structure of our model Person, nothing changes in the way a person is queried together with all related addresses, as listing 8 shows.

public Person find(long id) {
    return (Person) session.createQuery("FROM Person WHERE id = :id")
        .setLong("id", id)
        .list()
        .get(0);
}

Listing 8. PersonDAO find id with Hibernate ORM implementation

session.createQuery expects a query in the Hibernate Query Language (HQL), whose syntax resembles SQL. Since the ORM knows about the relationship in the data model, it automatically resolves and maps all addresses to an object of type Person. As a last step, we have to cast the object explicitly.

Our implementation is done so far. In section IV we examine the cost metrics and evaluate the flexibility of both evolution steps.

IV. ANALYSIS & DISCUSSION

First, we look at our solution with plain JDBC and without any mapper. The costs are calculated as the sum of the code lines added to our old modules, the model Person and the related data access object:

$$O\big(C^{Add/LoC}\big) = O\big(C(\#\,\text{added code lines in Person})\big) + O\big(C(\#\,\text{added code lines in PersonDAO})\big) = O(0 + 76) = O(76)$$

In our concrete example, we add 76 lines of code to our old PersonDAO in order to handle a person together with its addresses. If we had N models requiring address information from the Address entity inside a DAO, we would be forced to add k lines of code for each of the N models, i.e. about k · N lines, which leads to linear flexibility O(n). The parameter k depends on the modifications needed in the respective DAO.

In comparison, with the Hibernate solution we do not have any changes in class PersonDAO. Since we annotated the models Address and Person, we were forced to add annotation code. In addition, we could copy and modify the code of a PersonDAO into ...

$$O\big(C^{Add/LoC}\big) = O\big(C(\#\,\text{added code lines in PersonDAO})\big) + O\big(C(\#\,\text{added code lines in Person})\big) = O(0 + 20) = O(20)$$

Strictly speaking, both values are constants for a single model; the difference in growth only becomes visible when the number N of affected models grows.

When we compare the lines of code, we save more than half of the code if we use an ORM that applies metaprogramming. Despite these savings, we may run into the same problem of linear flexibility. Suppose you have N data models that contain address information. Then you would have to modify all of these data models, which leads to linear flexibility O(n) as well.

Even though we could save some code, one might wonder: is an ORM more flexible than a plain JDBC implementation? Other code metrics can also be applied to the generalized cost metric, such as the cyclomatic complexity (McCabe metric) [2]. In addition to the quantified flexibility measure, there is rather empirical support in the analysis of the adjusted implementation:

• First, an ORM covers many use cases that come with the object-relational impedance mismatch. It is able to resolve associations into joined relations if the association in the class is properly annotated. In addition, naming inconsistencies between class attributes and table columns can be solved by simple annotations.
• Second, the coupling of the different DAOs, as we have seen in our JDBC example, is reduced. All code related to database access and operations is encapsulated in the ORM.
• Last but not least, the mapping of objects is a hard problem [9]. We have to keep in mind that mapping is always a synchronization between two different representations. As our Mapper example in section II shows, more steps are necessary to obtain a flexible and adaptable mapper that applies metaprogramming.

Another advantage is that we used Hibernate as an implementation of the Java Persistence API. This means that we could take most parts of our data models and transfer them to another ORM that implements the Java Persistence API. This makes our data model mostly independent of the underlying ORM.

A negative aspect of our adjusted implementation is that we have to cast explicitly, as listing 8 in section III shows. There may be situations where this code is error-prone, e.g. when we use polymorphism for all data models. Consequently, a ClassCastException could occur if we try to cast an object to a sibling subclass of a common base class. Therefore, we should always query the concrete data model type and cast only to that type or to one of its base classes. In addition, a developer may be tempted to transfer the relational representation into the object-oriented system. This contradicts elementary principles of object-oriented programming, such as polymorphism and cohesive components that encapsulate the functionality they are supposed to provide.

Therefore, we can assume that an ORM using metaprogramming is more flexible than a plain object-oriented implementation without metaprogramming support.
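The explicit cast criticized for listing 8 can at least be confined to one well-defined place. The following is a minimal sketch under stated assumptions: firstResult is a hypothetical helper, not part of Hibernate. It takes an untyped result list, such as the one returned by list(), together with a class token. It uses Class.cast, so a wrong entity type fails immediately with a ClassCastException. It also returns an empty Optional instead of letting get(0) throw an IndexOutOfBoundsException when no row matches. (JPA 2.0 additionally offers typed queries via TypedQuery; that API is not used here.)

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;

public class TypedResults {

    // Hypothetical helper: first row of an untyped result list, checked against
    // the expected type instead of a blind cast.
    static <T> Optional<T> firstResult(List<?> rows, Class<T> type) {
        if (rows.isEmpty()) {
            return Optional.empty(); // no match instead of IndexOutOfBoundsException
        }
        // Class.cast throws ClassCastException here if the row has the wrong type.
        return Optional.ofNullable(type.cast(rows.get(0)));
    }

    // Illustrative class hierarchy with two sibling subclasses.
    static class Entity { }
    static class Person extends Entity { }
    static class Address extends Entity { }

    public static void main(String[] args) {
        List<?> rows = Arrays.asList(new Person());
        System.out.println(firstResult(rows, Person.class).isPresent());             // true
        System.out.println(firstResult(Arrays.asList(), Person.class).isPresent());  // false
        try {
            firstResult(rows, Address.class); // sibling type: fails at this one spot
        } catch (ClassCastException e) {
            System.out.println("wrong entity type");
        }
    }
}
```

Casting to the base class Entity would also succeed. This matches the advice to cast only to the concrete type or one of its base classes.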

V. CONCLUSION

To conclude, we have shown that transferring objects to a relational database can be a problem. One way to solve this problem is a plain JDBC implementation. Even though we have full control over our design and code, there is a risk of producing error-prone code while implementing the operations of the data access objects. With plain JDBC, the coupling of DAOs is very tight, because we interweave the code of different data access objects. The object-relational impedance mismatch hampers development in this way.

On the other hand, there is the support of object-relational mappers. ORMs, such as Hibernate in Java, implement the Java Persistence API and use metaprogramming to examine the class structure of a mappable class. Via appropriate annotations and the Java Reflection API, Hibernate is able to adapt to several complex problems.

Furthermore, we tried to analyse the flexibility of ORMs by looking at evolution steps and the cost metric function according to Eden and Mens. We saw that using an ORM is more flexible than a plain object-oriented JDBC implementation, because properly annotated code combined with metaprogramming creates a dynamic mapper, produces less code and encapsulates the whole database interaction in one library. Finally, we can state that ORM solutions adapt to the requirements of the data model architecture and simplify the problem of persisting object-oriented entities into a relational database.

REFERENCES

[1] The Hibernate Team, HIBERNATE - Relational Persistence for Idiomatic Java, http://docs.jboss.org/hibernate/orm/4.3/manual/en-US/html/, last access: May 28th, 2015

[2] Amnon H. Eden and Tom Mens, Measuring Software Flexibility, IEE Proceedings - Software, Vol. 153, No. 3 (Jun. 2006), pp. 113–126. London, UK: The Institution of Engineering and Technology.

[3] E. Gamma, R. Helm, R. Johnson, J. Vlissides. Design Patterns: Elements of Reusable Object-Oriented Software. Reading: Addison-Wesley, 1995.

[4] IEEE. Standard Glossary of Software Engineering Terminology, IEEE Std 610.12-1990, Vol. 1. Los Alamitos: IEEE Press, 1999.

[5] Weiyu Miao and Jeremy Siek, Compile-time reflection and metaprogramming for Java, Proceedings of the ACM SIGPLAN 2014 Workshop on Partial Evaluation and Program Manipulation. ACM, 2014.

[6] Oracle, The Java Tutorials, http://docs.oracle.com/javase/tutorial/jdbc/, last access: May 28th 2015

[7] John Reppy and Aaron Turon, Metaprogramming with Traits, ECOOP 2007 - Object-Oriented Programming, Springer Berlin Heidelberg, 2007, pp. 373-398.

[8] Oracle, Trail: The Reflection API, https://docs.oracle.com/javase/tutorial/reflect/, last access: May 28th 2015

[9] Martin Fowler, OrmHate, http://martinfowler.com/bliki/OrmHate.html, last access: April 19th 2015

[10] Pieter J. Schoenmakers, On the Flexibility of Programming Languages, http://gerbil.org/tom/papers/flex.shtml, last access: May 28th 2015

[11] Ola Leifler, Metaprogramming case studies in C#, https://www.ida.liu.se/~TDDD05/lectures/slides/F03 reflection CSharp.pdf, last access: May 28th 2015