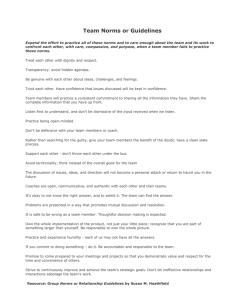

Rules, Norms, Commitments
