The Principles of Readability

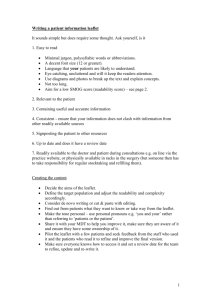
