Outcomes Assessment Review Committee 2012 Annual Report


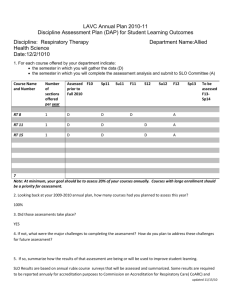

Respectfully Submitted by: Marcy Alancraig, Dennis Bailey-Fournier, Alicia Beard, Justina Buller, Tama Bolton, Tana de Santos (student representative), Rick Fillman, Jean Gallagher-Heil, Paul Harvell, Brian King, Rachel Mayo, Isabel O’Connor, Margery Regalado, Georg Romero, Letisha Scott-Curtis

Introduction and Background

In response to the change in accreditation standards in 2002, two shared governance committees, the Learner Outcomes and Accreditation Committee (2001-2003) and the Accreditation Planning Committee (2003-2005), along with the Cabrillo Faculty Senate, designed a comprehensive SLO assessment plan: assessment of student learning outcomes occurs in all sectors of the college as part of on-going Program Planning (departmental review) processes. The college was divided into five assessment sectors (Transfer and Basic Skills Instruction, Career Technical Education, Library, Student Services, and Administration), which were each to measure their contributions to students' mastery of the college’s core competencies. Last spring, after years of grappling with how to measure their contribution to student learning, administrative departments switched to writing and assessing administrative unit outcomes (AUOs). Each sector of the college creates its own method to assess student success and/or the new AUOs. See the SLO website for a detailed description of the methods used in each area (http://pro.cabrillo.edu/slos/index.html).

Programs and services undergo Program Planning on a rotating basis; only a few departments complete the process each year. For example, in Instruction, approximately twelve of its fifty-six programs write a program plan in a given year. Because of the number of programs within its purview, the Instructional component began by phasing in SLO assessment, starting with the college core competencies.
When this set-up phase was completed, Instruction moved to institutionalizing the process, asking that departments measure student mastery of every course, certificate and degree SLO within the six-year program planning cycle. This staggered schedule of assessment is called the Revolving Wheel of Assessment; every department is currently embarked on some stage of its repeating cycle (see the SLO website for a detailed description of the Wheel). Now Instruction has focused its efforts on quality assurance, creating processes and tools to ensure excellence and full compliance with its SLO procedures.

Student Services also phased in SLO assessment, beginning with writing and then revising their departmental SLOs, and now by assessing them. A grant received by the college, the Bridging Research Information and Culture Technical Assistance Project (sponsored by the Research and Planning Group and funded by the Hewlett Foundation), provided needed training in Student Services assessment methods during Spring 2011. Prior to the training, a few departments had piloted some assessment measures, but most began this activity in earnest after those sessions. By October 2012, all but one Student Services department had written a program plan and assessed all of their SLOs, leading to new insights and ways to improve services.

Administration (composed of departments or administrative offices in the President’s component, Administrative Services, Student Services and Instruction) spent the last five years discussing and identifying how their departments contribute to student mastery of the college core competencies and how to measure it. Because they provide a wide range of services that enable teaching and learning to occur, but are not directly involved in the formal learning process, their role in assessing SLOs has been difficult to define. In Spring 2012, Administration switched to measuring Administrative Unit Outcomes.
Cabrillo defines an Administrative Unit Outcome as a description of what all the users of an Administrative service can do, know, or understand after interacting with that office or department; it is user centered, a description of what the service provides for others. Unlike at some schools across the state, at Cabrillo an AUO is not an administrative unit goal. Almost all administrative departments have written AUOs and two have assessed them.

No matter the assessment sector, all college departments that write a Program Plan by June in a given year forward their assessment reports to the Outcomes Assessment Review Committee. This committee, a subcommittee of the Faculty Senate, is chaired by the Student Learning Outcomes Assessment Coordinator and is designed to include representatives from the Student Senate, Faculty Senate, CCEU, CCFT, and a manager along with representatives from Administration, Student Services, Library, and Instruction (both Transfer & Basic Skills and CTE). The Campus Researcher and Accreditation Liaison Officer serve as ex officio members of the committee.

The Outcomes Assessment Review Committee (ARC) oversees, analyzes and evaluates all campus SLO and AUO assessment activities. It reviews the yearly assessment results in Instruction, Student Services, the Library and Administration, looking for common themes and broad trends. In addition to analyzing the collective contents of the assessments submitted each year, ARC evaluates its own function and all assessment processes on campus. ARC writes a report about its analysis, submitting it to campus governing bodies authorized to act upon ARC’s recommendations, including the Governing Board, the Master Planning Committee, the College Planning Council, the Faculty and Student Senates and both unions, CCFT and CCEU.
This year, the Outcomes Assessment Review Committee was also asked by the President’s Cabinet to examine institutional effectiveness issues, making recommendations for improving college processes. When needed, the committee is empowered to initiate a college-wide dialog process to analyze and solve broad issues about student learning that have been revealed by SLO assessment results across the campus. For more detailed information on ARC’s charge, membership and duties, please see the SLO website (http://pro.cabrillo.edu/slos/index.html).

This report reflects ARC’s review of the assessment results for those departments that completed Program Planning in the 2011-2012 academic year.

Assessment Process: Facts and Figures

Participating in this year’s assessment were fourteen instructional departments (nine serving Transfer and Basic Skills and five in CTE), the Library, thirteen departments in Student Services and five in Administration.

| Assessment Sector | Program Plan submission |
| --- | --- |
| Transfer and Basic Skills | All 9 scheduled departments submitted Program Plans |
| Career Technical Education Programs | All 5 scheduled departments submitted Program Plans |
| Library | Submitted a Program Plan as scheduled |
| Student Services | 13 of 14 departments submitted program plans and undertook SLO assessment. An on-going rotating program planning cycle, with annual reports, has been developed. |
| Administration | 5 of 21 departments submitted Program Plans; 2 of those departments assessed AUOs. An on-going schedule for program planning is still being developed in some components. |

Participation

The charts below capture the participation of Cabrillo faculty and staff in assessment activities. Since this assessment in Instruction and in some Student Services departments took place over a number of years, an average rate was calculated.
Basic Skills/Transfer Department % of full time presenting assessment results Anthropology 100% Art History 100% Athletics 50% Kinesiology 50% Physics 75% Political 100% Science Sociology 100% Theatre Arts 66% Women’s 100% Studies % of adjunct presenting assessment results 100% 60% 10% 0% 50% 38% % of full time % of adjunct discussing discussing results results 100% 100% 75% 95% 75% 100% 60% 75% 50% 30% 50% 63% 60% 40% 0% 100% 100% 100% 60% 60% 0%

Figure 1. Basic skills/Transfer Assessment: Full time and part time faculty assessment presentation rates

Figure 2. Basic skills/Transfer Assessment: Full time and part time faculty assessment discussion rates

CTE Department Computer Applications/Business Technology Engineering Technology Horticulture Human Services Nursing % of full time presenting assessment results 100% % of adjunct presenting assessment results 20% % of full time % of adjunct discussing discussing results results 100% 36% 100% 33% 100% 100% 100% 100% 81% 100% 50% 34% 100% 100% 81% 100% 75% 34%

Figure 3. CTE Assessment: Full time and part time faculty assessment presentation rates

Figure 4. CTE: Full time and part time faculty assessment discussion rates

ARC is pleased with the robust rates of participation of full-time faculty overall as well as the high rate of adjunct participation in the Anthropology and Horticulture departments, but notes with concern the uneven participation of adjunct faculty in other departments. As the charts demonstrate, more adjuncts participated in the discussion of assessment than undertook the assessment itself. In part, this is because the discussions occur during Flex week, when adjuncts have more opportunity to participate and collaborate with members of their department. The actual assessment and its analysis take place during the course of the regular semester.
But since so many of our courses are taught by adjuncts, ARC continues to be concerned by this lack of full participation, particularly in some smaller departments. However, ARC notes that the reporting form may collect erroneous information since it asks for the total number of adjuncts though only some may be teaching the course being assessed. ARC recommends changing the form to capture this information more accurately. In addition, it notes that some of the activities it undertook last year to help Program Chairs engage more adjuncts in SLO assessment are not apparent in these statistics since this captures years previous to that work.

Library

| Type of Assessment | % of full time presenting assessment results | % of adjunct presenting assessment results | % of full time discussing results | % of adjunct discussing results |
| --- | --- | --- | --- | --- |
| Library 10 SLO | 100% | 100% | 100% | 100% |
| Core Four | 100% | 95% | 100% | 95% |

ARC notes with pleasure the very high numbers of both full-time and adjunct faculty and staff participation in SLO assessment in the Library.

Student Services

| Department | # of departmental SLOs | % of SLOs assessed | % of department discussing results |
| --- | --- | --- | --- |
| Admissions and Records | 1 | 100% | 95% |
| Assessment | 1 | 100% | 87% |
| Cabrillo Advancement Program (CAP) | 2 | 50% | 100% |
| Counseling | 1 | 100% | 89% |
| Disabled Students Programs and Services | 1 | 100% | 100% |
| Financial Aid | 1 | 100% | 83% |
| Extended Opportunity Programs and Services | 4 | 75% | 100% |
| Fast Track to Work | 1 | 100% | 50-86% for three meetings |
| Learning Skills | 1 | 100% | 100% |
| Office of Matriculation | 1 | 100% | 100% |
| Student Affairs/Student Activities and Government | 4 | 100% | 100% |
| Student Employment | 1 | 100% | 100% |
| Student Health Services | 2 | 50% | 100% |

ARC is delighted with the high numbers of SLOs assessed and the robust rates of participation of department members discussing their results.
Administration

| Administrative Unit/Department | # of departmental AUOs | % of AUOs assessed | % of department discussing results |
| --- | --- | --- | --- |
| Dean of Counseling and Educational Support Services | 1 | 100% | 68% |
| Information Technology | 1 | 0% | 0% |
| Instructional Division Offices | 1 | 0% | 0% |
| President’s Office | 3 | 0% | 0% |
| Vice President of Student Services Office | 1 | 100% | 100% |

ARC is pleased that two of the new AUOs have already been assessed and discussed.

Assessment Progress

Transfer and Basic Skills

| Department | % of Core 4 Assessed | % of Course SLOs Assessed |
| --- | --- | --- |
| Anthropology | 100% | 0% |
| Art History | 100% | 50% |
| Athletics | 100% | 36% |
| Kinesiology | 100% | 94% |
| Physics | 100% | 100% |
| Political Science | 100% | 100% |
| Sociology | 100% | 75% |
| Theatre Arts | 0% | 13% |
| Women’s Studies | 0% | 100% |

According to the Revolving Wheel of Assessment, all transfer and basic skills departments were to have assessed each of the Core 4 and all course SLOs. Almost 80% of the departments were on track with their Core 4 assessment, but ARC notes with concern that the assessment of course SLOs varies. One third of the group assessed at least 90% with two departments at 100%. But one department assessed only 75% of its courses, while four others (44% of the group) assessed fifty percent or less. However, it should be noted that the Anthropology department, which assessed none of their course SLOs, is in the process of completely rewriting them. They chose not to assess the old ones and, having recently gotten curriculum approval for the new ones, are now embarked on their assessment. Yet the reporting forms do not provide an opportunity to explain this. Another factor to consider is that some classes may not have been assessed because they were not scheduled, due to the budget crisis. Again, the reporting forms do not capture this kind of information. ARC recommends revising the forms so that this data can be revealed.
Career Technical Education Department Computer Applications/Business Technology Engineering Technology Horticulture Human Services Nursing Wrote Assessment Plan Certificate SLOs Assessed 100% Course SLOs Assessed 100% 0% 31% 0% 28% 100% 100% 100% 0%

According to the Revolving Wheel of Assessment, CTE programs were to write an assessment plan, and to assess all course and certificate SLOs. ARC is pleased that all CTE departments wrote an assessment plan or implemented the one created from their last round of Program Planning. Two of five programs assessed all certificate SLOs while two did not assess any, and one only assessed a small portion. It should be noted, however, that the Horticulture department’s lack of certificate SLO assessment arose from a misunderstanding; the department assumed that its course level assessment also assessed its certificate SLOs. After working with the SLO coordinator, the department has since instituted a different process. Three out of five departments assessed all course SLOs, one department assessed almost a third and one didn’t assess any at all.

Overall analysis: It would be nice to consider the numbers of course and certificate level assessment this year as a fluke, but the rates match what is occurring in other departments across campus. Now that Instruction has moved from setting up and institutionalizing SLO assessment to focusing on quality assurance, it undertook an inventory of overall SLO assessment for the first time in Fall 2012. Using a tracking tool developed in response to last year’s ARC annual report, Instruction discovered that 61% of SLOs in active courses (those in the catalog and offered in the last three years) and 76% of certificate SLOs had been assessed.
Before this, a department’s progress on the Revolving Wheel of Assessment was not revealed until they attended a mandatory workshop two years before their program plan was due and then again when they presented that plan to the Council for Instructional Planning. The Council for Instructional Planning recognized that a four-year gap in assessment reporting was not helpful and, in 2008, required a description of assessment activities as part of a department’s annual report. In 2011, it asked departments to also submit any completed Assessment Analysis forms from the past year. However, not until the reports due in December 2012 will departments be asked to report on their progress on the Revolving Wheel of Assessment. These reports will be read by the SLO Coordinator, who will offer help to struggling departments. The new SLO Tracking tool, which took the campus inventory and revealed these numbers, also pinpointed specific struggling departments, to whom the SLO Coordinator will offer assistance.

ARC took a close look at these assessment completion statistics. First and foremost, it recognized that the SLO tracking tool is a brand new approach to measuring departmental assessment efforts. The Planning and Research office consulted with the acting Vice Presidents of Instruction, the Deans and the SLO Coordinator, along with the Instructional Procedures Analyst, about how to define active courses and how to retrieve them from the college curriculum database. All involved acknowledge that this process and the tool itself need refinement. For example, while the spreadsheet captured information at the course level (course SLO assessed yes or no), systematic tracking of the assessment of individual course SLOs has yet to be attempted. Secondly, in an attempt to assure quality, this group also decided not to include those course and certificate SLOs whose measurement was attempted but done incorrectly.
For instance, one department carefully and thoroughly assessed all of its 120 courses, but used the wrong method. If these courses had been included, the course assessment rate would have jumped to 74%. Similar errors occurred with some certificate SLO assessment. All of this points to a need for continued monitoring of SLO processes and more intensive program chair training.

Furthermore, ARC recognizes that since Instruction started its SLO assessment with the college core competencies, there has been more training and emphasis on assessing this level. The course and certificate level assessment methods have been taught but not as intensively. In addition, as noted in the 2011 annual report, some departments seem to be struggling with the organization and scheduling of course and program SLO assessment, while others are accomplishing it very successfully. Analysis has not revealed any easily definable reasons for this; size of department, number of adjuncts and length of tenure as program chair cannot be the sole cause since there are many departments that have completed their assigned assessment despite these issues.

ARC recommends the following to assure quality control for Instructional SLO Assessment:

- Create an electronic tool for both assessment reporting and planning, so that program chairs, the SLO Coordinator and the Vice President of Instruction’s Office can more easily identify the SLO assessment that has been accomplished and that which needs to be done.
- Revamp the SLO assessment analysis forms to collect more accurate data on adjunct participation, and courses not offered due to budget or curriculum issues.
- Provide specific training in course and certificate level SLO assessment through the SLO web page and in flex workshops.
- Ensure that all struggling departments work individually with the SLO Coordinator to schedule, discuss and report SLO assessment.
- Facilitate a dialogue with Deans, the Council for Instructional Planning and the Faculty Senate to brainstorm other methods to ensure full compliance with college SLO standards.

The Library

While the Library has successfully assessed all of its SLOs, it reported in its program planning presentation to the Council for Instructional Planning in Spring 2012 that it wasn’t satisfied with its assessment method for the Core Four. The Library believes that its services provide a means to help students master the college core competencies. It chose to measure this by asking students to assess themselves on the competencies as part of its annual survey. This yielded very positive results but it did not provide useful and reliable information for how to actually improve student learning within the particular services. The Library also felt that this assessment did not capture how it contributes to the learning of faculty and community members as well as students.

In Fall 2012, the Library adopted an Administrative Unit Outcome to capture what the Library’s full component of services offers to students, faculty, and the community. It reads:

Cabrillo library patrons will be able to successfully use the library's physical and electronic services, information tools, and resources, to find and evaluate information, and accomplish academic endeavors in the pursuit of formal and informal learning.

In addition, a new assessment cycle was drafted. Since the Library completed its program plan in 2012, this cycle will be put into effect beginning in 2013.
- Year 1 (by Spring 2013): Assess Computer services (website review committee efforts; annual survey questions covering computer use)
- Year 2 (Spring 2014): Assess Circulation services (likely using a brief survey handed out to users of the services)
- Year 3 (Spring 2015): Assess Reference services (likely using student evaluations generated through the faculty evaluation process)
- Year 4 (Spring 2016): Assess Information resources (method undetermined as yet)
- Year 5 (Spring 2017): Evaluate our overall outcomes, assessments, and planning cycle
- Year 6 (2017-2018): Write library program plan

Student Services

Student Services has completed the SLO assessment of 92% of its SLOs. Now that each SLO has been assessed once, Student Services is currently creating an on-going and revolving assessment schedule while revising its program planning and annual report templates.

Administration

Administration adopted a shift to writing and assessing administrative unit outcomes in Spring 2012. Almost all departments have since written AUOs and two departments have assessed them. In addition, five departments wrote program plans, using the template from the Office of Instruction that was adopted in 2010.

Assessment Results: Emerging Needs and Issues

The departments who assessed the college Core 4 competencies, certificate and individual course SLOs identified the following key student needs and issues:

- A lack of preparedness in some students.
- A need to continue to experiment with and develop better instructional practices and pedagogy, including facilitating group work.
- A need for faculty to provide more writing assignments in non-English transfer level courses.

Those departments proposed the following strategies to help meet these needs:

- More faculty training and development, particularly in working with underprepared students.
- More departmental meeting time devoted to a discussion of pedagogical issues, with a focus on how to “scaffold” assignments so that they build up to major assignments and/or the best progression of skills.
- Training in how to implement and manage student working groups.
- Request the writing factor for some transfer level courses.
- Increase class participation through the use of clicker response tools.

Instructional departments also commented on the positive effects of SLO assessment in their program plans.

Physics: “This process has proved very beneficial to our department. It sparked many interesting discussions about teaching methodologies as well as highlighted our students’ strengths.”

Computer Applications/Business Technology: “We have found this to be a productive way to have focused discussions on course and student success and ways to increase them.”

Kinesiology: “One faculty member who could not attend the meetings voiced concern that completing the assessments amounted to ‘inconsequential busy work.’ She was able to attend a later meeting and learn from other faculty the kinds of rubrics they used, and was thrilled with the results of her new assessment tool, so much so that she shared with me each and every one of the student responses. She was astounded at how meaningful her class was to her students, and has found new direction and emphasis in her teaching. So we’ve found that dialogue and sharing is integral to a meaningful assessment process.”

Others noted problem areas:

Political Science: “Adding SLOs to our program planning over time has significantly impacted the planning process itself…The time involved in conceptualizing how to apply this new paradigm, taking part in the necessary training and actually implementing the assessment has been significant.
Although part time faculty members have been willing to participate, SLO assessment puts an undue burden on them.”

Sociology: “…The Program Chair duties of a one full time contract department …have been overwhelming…The assessment process has suffered.”

Student Services SLO assessment revealed that one-time training may not be enough to help students become more self-efficacious. The departments are proposing new methods to follow up with students over time.

Analysis of Cabrillo’s Outcomes Assessment Process

ARC’s analysis of this year’s SLO assessment process notes the wealth of assessment occurring across campus in all sectors, along with issues that arise from institutionalizing the process and/or assuring its quality.

Overall, the assessment analysis forms scrutinized by ARC reveal that many important insights about student learning have been garnered as a result of SLO assessment. The entire college could benefit from hearing what has been learned. However, there are few ways for departments to share what they’ve come to comprehend with others. With all fifty-six Instructional departments now assessing SLOs, along with fourteen Student Services departments and soon twenty-one Administrative departments, the college needs to find a way to disseminate all that has been discovered. Last year ARC made a recommendation that more sharing occur in Instruction, and in Fall 2012, it facilitated a flex workshop to impart what had been discovered from Core 4 assessment, which resulted in useful interdepartmental dialogue. This year, ARC recommends continuing that conversation but also expanding it so that the important insights generated by the Student Services SLO assessment can be shared with Instructional faculty and staff, and vice versa. This presents a scheduling challenge since many Student Services faculty and staff are very busy serving students during Flex week and cannot attend the scheduled sessions.
ARC brought this idea to the Staff Development committee, which enthusiastically embraced it and suggested a mid-semester flex time for sharing between Instruction and Student Services. Staff Development is currently in dialogue with the Faculty Senate about this possibility; changing Flex times is also a negotiated item and must be part of a discussion between the Administration and the Faculty union.

ARC further recommends that the college give serious consideration to how it can continue to recognize and share assessment results so that the entire college community can benefit. Some sort of vehicle that highlights excellent work, such as a newsletter, web portal or grants and awards, should be created.

In addition to sharing assessment results, one of the biggest needs is a streamlined scheduling and reporting process that allows the college to have an accurate look at what outcomes assessment is occurring and how each department is progressing with this work. For instance, Instruction’s fifty-six departments are each required to turn in at least two forms per year summarizing their SLO assessment efforts. Currently, the forms are Word documents downloaded from the SLO website and e-mailed to the Instruction Office. Program Chairs keep track on their own of what assessment is scheduled and what has been accomplished. As noted earlier, not all departments are completing their assessment in a timely manner, and those who might assist in this effort, such as Deans and the SLO Coordinator, are unaware of where problems exist until too late in the cycle. A campus-wide system for reporting and scheduling would make this work easier and more efficient, and could increase the rate of SLO assessment.
ARC previously looked into various outsourced SLO reporting formats, but all were found to be financially unfeasible; the 2011 report details those efforts along with a recommendation that the SLO Coordinator and the Planning and Research Office continue working to find solutions. This resulted in the creation of the SLO Tracking tool and some organizing documents, but as this report attests, more is needed. To meet that need, in Fall 2012, ARC convened an ad hoc committee to explore other options. Composed of some of the best technical minds on campus, it is currently exploring the use of CurricUNET’s SLO and program planning modules along with some homegrown solutions. It will report its conclusions to ARC and the committee will, in turn, pass those recommendations on to other shared governance committees.

ARC also noted that this year, some departments are assessing SLOs for the second time. It recommends modifying the assessment analysis form so that departments can report on previous results and identified trends.

Last year, ARC recommended increased training of Program Chairs and Deans. One of the goals of this training was to provide a solution to another issue arising from the institutionalization of SLO assessment: lack of participation by adjunct faculty. Since Program Chairs (PCs) now are responsible for training their adjuncts in SLO assessment, ARC hoped that improving their training would result in increased adjunct participation. Two Flex workshops were held, in Spring and Fall 2012, with good attendance, during which the PC role in SLO assessment was clarified, new tools for organizing it were offered and, most importantly, a dialogue occurred between those departments that have struggled with SLO issues and those that have been successful. It is too soon to tell if this dialogue will actually bring about an increase in adjunct participation numbers, but the workshops were a promising start.
This year ARC recommends that this training be continued but in a different format:

- Now that struggling departments have been identified through the SLO Tracker, they should work individually with the SLO Coordinator to plan and organize SLO assessment.
- Create a special Program Chair section of the revamped SLO website, with training materials (for new program chairs), tools and examples from departments that have successfully undertaken SLO work. The Flex workshops offered this year also garnered many helpful suggestions for what should be included in this section of the website.

One of ARC’s activities of last year was to develop a campus-wide survey to take the “SLO temperature” of the entire college. ARC administered the survey in late Fall 2011, analyzed the results in Spring 2012 and prepared a short report on the survey results in Fall 2012. The survey results confirmed the observations of many ARC reports from the past five years:

- The campus finds the SLO assessment process valuable.
- Adjunct and full-time faculty have a different experience with SLO assessment.
- At the time, Student Services had just rewritten their SLOs and were just embarking on actual assessment.
- At the time, Administration was still struggling with how they contributed to student learning outcomes; they were at the very beginning of their assessment efforts.

ARC will disseminate the survey results in Spring 2013, along with an update on how things have changed. In addition, because Student Services and Administration have made such strides in only twelve months, ARC will administer the survey again in late Spring 2013.

Last year, the Faculty Senate embarked on a pilot to add a quantitative component to SLO reporting and added pre and post testing as an approved assessment method for Basic Skills and Transfer departments. ARC recommends that the SLO Coordinator collect feedback about these changes and share the results with the Faculty Senate.
The Library’s assessment process, with its switch to AUOs, showcases the iterative properties of SLO assessment and how taking a careful look at both data and processes can result in needed changes. ARC looks forward to the insights revealed by the new assessment focus and measurements.

Student Services has made great progress in their work with SLO assessment this year. The committee commends them for undertaking so much good SLO assessment. Now that a first round has been completed, Student Services has standardized its required program planning elements and developed a template for those plans. This template better integrates SLO assessment results with program planning goals and budgetary recommendations.

Administration has also made great progress. The writing of Administrative Unit Outcomes has commenced with zeal and insight; eighty-five percent of departments have written them and are slowly beginning their assessment. ARC was especially pleased that five departments wrote program plans and two of them assessed their AUOs. Now that some plans have been completed, it may be time to revisit the program planning template to see if all components of it are useful. As part of its new institutional effectiveness duties, ARC drafted a simpler template for all non-Instructional departments, built on the one developed by Student Services. ARC recommends that the College Planning Council, Cabinet and all other non-Instructional managers groups examine the new template and adopt it.

Finally, ARC recommends that its name be changed from the SLO Assessment Review Committee to the Outcomes Assessment Review Committee since it is now looking at both student learning and administrative unit outcomes.

Commendations

ARC salutes the Computer Applications and Business Technology department and the Nursing department for creating new forms to keep track of SLO assessment over the entire six-year planning cycle.
ARC commends the Political Science and Physics departments for looking at all aspects of the learning process and for being very student-focused in their plans for improvement of the classroom. ARC lauds all Student Services departments for undertaking SLO assessment; their work demonstrates how they assist students to become more self-sufficient through training and self-efficacy. ARC extols the AUO assessment done by the Vice President of Student Services office and the Office of Matriculation.

Recommendations

New Recommendations for Teaching and Learning

The Faculty Senate has primary responsibility for providing leadership in teaching and learning, particularly in areas related to curriculum and pedagogy, while Student Services Council directs the efforts in its area. The recommendations below will be put into effect by Faculty Senate, Student Services Council and college shared governance committees.

| Recommendation | Responsible Party | Time Line |
| --- | --- | --- |
| Provide sustained faculty development for the improvement of pedagogy and the sharing of best practices, particularly across departments so faculty can learn successful strategies used by others to solve common problems. | Staff Development committee and Program Chairs | Spring and Fall 2013 flex |
| In Student Services, provide more than one-time trainings and/or follow up with students over time. | Individual departments in Student Services | On-going |

New Recommendations for SLO Assessment Processes

| Recommendation | Responsible Party | Time Line |
| --- | --- | --- |
| Create a forum for Student Services and Instructional departments to share SLO assessment results that occurs outside of traditional flex week | Staff Development Committee, the Faculty Senate, CCFT and Administration | |
| Find ways to showcase SLO and AUO assessment results across the campus | ARC | 2013 |
| Create an electronic means of organizing, scheduling and reporting SLO assessment results | ARC ad hoc committee | Spring 2013 |
| Provide web-based and flex training in how to assess course and certificate SLOs | SLO Coordinator | 2013 |
| Departments struggling with SLO assessment should work individually with the SLO Coordinator to find solutions for any issues | SLO Coordinator | 2013 |
| Facilitate a dialogue with Deans, the Council for Instructional Planning and the Faculty Senate to brainstorm other methods to ensure full compliance with college SLO standards. | ARC, Deans, CIP, Faculty Senate | 2013 |
| Create a special section of the SLO web site for Program Chairs | SLO Coordinator | Spring 2013 |
| Revise Assessment Analysis forms to gather better adjunct and curriculum data; add a line to report on previous interventions the last time an SLO was assessed and the progress made. | SLO Coordinator | Spring 2013 |
| Disseminate results of ARC survey; administer survey again in 2013 | SLO Coordinator | Spring 2013 |
| Gather feedback on pilot on quantitative reporting of SLO results | SLO Coordinator | 2013 |
| Adopt the new template for non-Instructional program planning created by ARC | Administration | 2013 |
| Change ARC’s name | Faculty Senate | Spring 2013 |

Spring 2013

Completed Recommendations from the 2011 ARC Report

Past Recommendation Action Taken Any Next Steps? Provide training about organizing and facilitating departmental SLO assessment to Program Chairs and Deans.
Facilitate interdisciplinary discussions about student mastery of each of the four core competencies Provide on-going training and workshops in SLO assessment to Student Services staff Flex workshop offered Spring and Fall 2012 Work with PRO and IT to create an electronic tool to plan and track SLO assessment; train PCs how to use it. Spring 2013 Flex workshop to continue the discussion Serve as readers for the SLO chapter that will be included in the 2012 Accreditation Self-Evaluation. Fall 2012 Flex meeting Individual meetings between departments and the SLO Coordinator were held in Spring and Fall 2012 SLO Coordinator should continue to work with any departments that might want to revise their assessment process now that it’s been tried once. ARC read the chapter draft in Fall 2012 Revise chapter based on committee suggestions

Recommendations in Process from the 2011 and 2010 ARC Reports

Past Recommendation Actions Undertaken Next Steps Survey adjunct faculty to assess their awareness of Cabrillo’s SLO process Survey of entire campus (not just adjuncts) undertaken and analyzed Distribute analysis to shared governance venues Inform potential hires of Cabrillo’s SLO process and our participation expectations in trainings for new faculty, through mentorships and in the Faculty Handbook Create a venue or reporting mechanism for Administration’s Program Plans Wrote section on SLOs for new Faculty handbook Convene meeting with Human Resources; bring SLO process to adjunct faculty training. Discussions within Cabinet Continue discussion Convene a meeting of Program Chairs of smaller departments to brainstorm organizational strategies for SLO assessment Create a web tool that lists the calendar for every Instructional department’s SLO assessment schedule.
Workshop planned for Spring 2013 Hold workshop SLO Tracking Tool created, but it was only able to inventory (not calendar) college SLO assessment Post examples of a full assessment cycle on the SLO web site for transfer/basic skills, CTE and Student Services Develop an Assessment Instrument and reporting format for Administration Program Planning that can be used by all the departments in this area No progress Continue to work with IT and PRO to create a web tool for planning and reporting SLO assessment results; explore options for outsourced tool. Revamp SLO website in Spring 2013 to include this Write Administrative Unit Outcomes for each department in Administration Revise and update SLO web site Based on the good work done in Administration program plans, ARC developed recommendations for a simpler, common program planning template 85% complete Bring the template to Administration No progress Complete in Spring 2013; on-going as needed Complete in Spring 2013

Past Recommendations that are now Institutional Practices

| Date of Recommendation | Past Recommendation | Actions Taken |
| --- | --- | --- |
| 2008 | Offer an intensive SLO Assessment workshop for all faculty in instructional departments two years in advance of Program Planning | Annual Spring Flex Workshop |
| 2009 | Support ongoing, sustained staff development in the assessment of student learning, including rubric development. | Ongoing Flex Workshops |
| 2009 | Share effective practices and methods for modeling strategies for assignments | Ongoing Flex Workshops |
| 2009 | Provide support for faculty as they confront challenges to academic ethics, such as plagiarism and other forms of cheating | On-going Flex workshops; creation of Student Honor Code |
| 2009 | Provide sustained faculty development for addressing student learning needs in reading, research and documentation, and writing | On-going Flex workshops |
| 2009 | Communicate to the college at large the importance of maintaining and documenting a college-wide planning process that systematically considers student learning, including noninstructional areas. | “Breakfast with Brian” flex workshops; development of the Faculty Inquiry Network; Bridging Research Information and Culture Technical Assistance Project; discussions about Student Success Task Force Recommendations; campus-wide focus on Student Success |
| 2010 | Survey campus to assess awareness of Cabrillo’s SLO process | First survey administered Fall 2011; second to be administered Spring 2013 |

Emerging Trends

| Year | Emerging Needs and Issues | Recommendations for Teaching and Learning | Recommendations for SLO Assessment Processes |
| --- | --- | --- | --- |
| 2007 | Students need stronger skills in writing, reading, and college readiness; The longer a student is enrolled at Cabrillo, the more positive their association with the Library | More tutorial assistance for students | Encourage greater adjunct involvement; Continue to educate the Cabrillo community about the paradigm shift |
| 2008 | Teachers want more frequent collegial exchange; Improved facilities/equipment needed. | Increase emphasis on class discussions and student collaboration. | Encourage greater adjunct involvement. SLO workshop for programs two years in advance of Instructional Planning and for non instructional programs; Develop system of succession and dissemination of expertise in SLOAC across campus. |
| 2009 | Students need more instruction in reading, research and documentation, and writing; concerns about plagiarism. | Provide ongoing, sustained faculty development; share effective practices and strategies for modeling assignments. | Encourage greater adjunct involvement. Communicate to the college the importance of maintaining and documenting a planning process that systematically considers student learning. |
| 2010 | Some students need more instruction in basic academic skills and college survival skills. | Provide faculty training in new pedagogies, technology, and contextualized instruction. Support the teaching of college survival skills across the curriculum. | Encourage greater adjunct involvement. Embed SLO assessment expectations in faculty hiring, new hire training and mentoring practices. Develop an electronic means for SLO assessment result reporting. Explore adding a quantitative component. |
| 2011 | Students need to improve their reading and writing skills. | Provide faculty development to improve pedagogy and sharing of best practices. Use flex hours, but not necessarily during flex week, to do this. | Provide training to Deans and Program chairs on organizing SLO tasks. Revise web site and add web tools to assist organizing the process. Hold campus-wide discussions on Core 4 assessment results. Undertake pilot for numerical reporting. |
| 2012 | Students need to be more prepared for college level work, especially writing in transfer level courses. | Provide faculty development to improve pedagogy and sharing of best practices. Facilitate discussions between Instructional faculty and Student Services faculty and staff to share SLO assessment results. | Find ways to showcase assessment insights occurring across the campus. Increase rate of SLO assessment by creating an electronic tool to organize, track and report assessment results. Work individually with struggling departments to achieve timely SLO assessment. Create a program chair section of SLO website. |