Outcomes Assessment Review Committee 2013 Annual Report


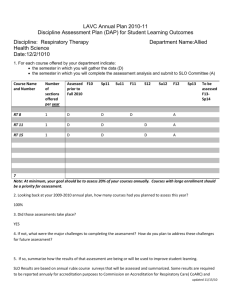

Respectfully submitted by: Marcy Alancraig, Dennis Bailey-Fournier, Alicia Beard, Justina Buller, Carter Frost (student representative), Jean Gallagher-Heil, Matt Halter, Paul Harvell, Victoria Lewis, Rachel Mayo, Isabel O’Connor, Margery Regalado, Georg Romero, and Terrence Willett

Introduction and Background

In response to the change in accreditation standards in 2002, two shared governance committees, the Learner Outcomes and Accreditation Committee (2001-2003) and the Accreditation Planning Committee (2003-2005), worked with the Cabrillo Faculty Senate to design a comprehensive SLO assessment plan. Under this plan, student learning outcomes are assessed in all sectors of the college as part of ongoing Program Planning (departmental review) processes. The college was divided into five assessment sectors (Transfer and Basic Skills Instruction, Career Technical Education, Library, Student Services, and Administration). Each sector was to measure its contribution to students' mastery of the college’s SLOs and core competencies. In 2012, after years of grappling with how to measure their contribution to student learning, administrative departments switched to writing and assessing administrative unit outcomes (AUOs).

Each sector of the college creates its own method to assess SLOs and/or AUOs. See the SLO website for a detailed description of the methods used in each area: https://sites.google.com/a/cabrillo.edu/student-learning-outcomes/home

Programs and services undergo Program Planning on a rotating six-year cycle, so only a few departments complete the process each year. For example, in Instruction, approximately twenty-one percent of the programs write a plan in a given year. Because of the number of programs within its purview, the Instructional component phased in SLO assessment gradually, starting with the college core competencies.
When this set-up phase was completed, Instruction moved to institutionalizing the process. It asked departments to measure student mastery of every course, certificate and degree SLO within the six-year program planning cycle. This staggered schedule of assessment is called the Revolving Wheel of Assessment, and every department is currently at some stage of its repeating cycle (see the SLO website for a detailed description of the Wheel). Instruction has now focused its efforts on quality assurance, creating processes and tools to ensure excellence and full compliance with its SLO procedures.

Student Services also phased in SLO assessment. It first wrote its departmental SLOs, then revised them, and is now assessing them. A grant received by the college, the Bridging Research Information and Culture Technical Assistance Project (sponsored by the Research and Planning Group and funded by the Hewlett Foundation), provided needed training in Student Services assessment methods during Spring 2011. By the next year, all Student Services departments had assessed each of their SLOs, leading to new insights and ways to improve services.

Administration is composed of departments or administrative offices in the President’s component, Administrative Services, Student Services and Instruction. It spent five years discussing how its departments contribute to student mastery of the college core competencies and how to measure that contribution. These departments provide a wide range of services that enable teaching and learning to occur, but they are not directly involved in the formal learning process. As a result, their role in assessing SLOs has been difficult to define. In Spring 2012, Administration switched to measuring Administrative Unit Outcomes.
Cabrillo defines an Administrative Unit Outcome as a description of what all the users of an administrative service can do, know, or understand after interacting with that office or department. It is user-centered: a description of what the service provides for others. Some schools across the state treat an AUO as an administrative unit goal, but Cabrillo does not. All administrative departments have written AUOs and are beginning to assess them.

In every assessment sector, departments that write a Program Plan by June of a given year forward their assessment reports to the Outcomes Assessment Review Committee. This shared-governance committee, a subcommittee of the Faculty Senate, is chaired by the Student Learning Outcomes Assessment Coordinator. Its membership includes representatives from the Student Senate, Faculty Senate, CCEU, CCFT, and a manager. It also includes representatives from each SLO sector: Administration, Student Services, Library, and Instruction (both Transfer & Basic Skills and CTE). A representative from Administrative Services fills the manager or Administration position. The Campus Researcher and Accreditation Liaison Officer serve as ex officio members of the committee.

The Outcomes Assessment Review Committee (ARC) oversees, analyzes and evaluates all campus SLO and AUO assessment activities. It reviews the yearly assessment results in Instruction, Student Services, the Library and Administration, looking for common themes and broad trends. In addition to analyzing the collective contents of the assessments submitted each year, ARC evaluates its own function and all assessment processes on campus. ARC writes a report about its analysis and submits it to the campus governing bodies authorized to act on ARC’s recommendations. These include the Governing Board, the College Planning Council, the Faculty and Student Senates and both unions, CCFT and CCEU.
In 2012, the President’s Cabinet also asked the Outcomes Assessment Review Committee to examine institutional effectiveness issues and make recommendations for improving college processes. When needed, the committee is empowered to initiate a college-wide dialogue process to analyze and solve broad issues about student learning that SLO assessment results have revealed across the campus. For more detailed information on ARC’s charge, membership and duties, please see the SLO website: https://sites.google.com/a/cabrillo.edu/student-learning-outcomes/. This report reflects ARC’s review of the assessment results for those departments that completed Program Planning in the 2012-2013 academic year.

Assessment Process: Facts and Figures

Participating in this year’s assessment were ten Instructional departments (six in Transfer and Basic Skills and four in CTE), four departments in Student Services, and five in Administration.

| Assessment Sector | Program Plans |
|---|---|
| Transfer and Basic Skills | All 6 scheduled departments submitted Program Plans. |
| Career Technical Education Programs | All 4 scheduled departments submitted Program Plans. |
| Library | Not scheduled to present a Program Plan. |
| Student Services | No Program Plans were scheduled, but 4 departments reassessed their SLOs and reported results in their annual updates. |
| Administration | 5 scheduled departments submitted Program Plans, and 4 assessed AUOs. |

Participation

The charts below capture the participation of Cabrillo faculty and staff in assessment activities. Because assessment in Instruction took place over a number of years, an average rate was calculated.
Basic Skills/Transfer

| Department | % of full-time faculty presenting assessment results | % of adjunct faculty presenting assessment results | % of full-time faculty discussing results | % of adjunct faculty discussing results |
|---|---|---|---|---|
| Art Photography | 100% | 51% | 100% | 53% |
| Counseling | 28% | 64% | 40% | 40% |
| Digital Management Career Preparation | 12% | 66% | 7% | 54% |
| English as a Second Language | 66% | 83% | 50% | 56% |
| Music | 37%* | 37%* | 56%* | 56%* |
| Stroke and Disability Learning Center | 100% | N/A | 100% | N/A |

* Due to the use of an outdated reporting form, Music did not keep separate numbers for how many full-time and adjunct faculty participated in assessment activities.

Figure 1. Basic Skills/Transfer Assessment: Full-time and part-time faculty assessment presentation rates

Figure 1. Basic Skills/Transfer Assessment: Full-time and part-time faculty discussion rates

CTE

| Department | % of full-time faculty presenting assessment results | % of adjunct faculty presenting assessment results | % of full-time faculty discussing results | % of adjunct faculty discussing results |
|---|---|---|---|---|
| Culinary Arts and Hospitality Management | 100% | 100% | 100% | 100% |
| Dental Hygiene | 56% | 100% | 28% | 100% |
| Early Childhood Education | 45% | 100% | 43% | 100% |
| Radiologic Technology | 100% | 33% | 83% | 33% |

Figure 2. CTE Assessment: Full-time and part-time faculty assessment presentation rates

Figure 2. CTE Assessment: Full-time and part-time faculty assessment presentation and discussion rates

ARC is very pleased with the overall participation rates of full-time faculty. It is also pleased that all adjunct faculty participated in the Culinary Arts and Hospitality Management and Early Childhood Education departments. The committee notes, however, that adjunct participation was uneven in other departments, a pattern seen in previous years. ARC has undertaken activities to better train program chairs to increase adjunct participation, and it hopes their effects will begin to appear in future statistics.

ARC could not determine the separate participation rates of full-time and adjunct faculty in the Music Department. The department used an outdated form to record its six years of assessment, and that form asked only for two totals: the faculty who presented assessment results and the faculty who took part in discussing them. Current forms ask for separate numbers for full-time and adjunct faculty participation. The new CurricUNET SLO module, an online program for reporting and archiving SLO assessment, will be implemented in Spring 2014. It will prevent this problem in the future, since only the current forms will be available.

The Stroke Center spent much of its time rewriting its curriculum and creating a viable assessment method for its special courses. It assessed only the new courses.
Student Services

| Department | # of departmental SLOs | % of SLOs assessed | % of department personnel discussing results |
|---|---|---|---|
| Admissions and Records | 1 | 100% | 100% |
| Disabled Students Programs and Services | 1 | 100% | 100% |
| Financial Aid | 1 | 100% | 100% |
| Student Affairs/Student Activities and Government | 4 | 100% | 100% |
| Student Employment | 1 | 100% | 100% |

ARC is delighted that four departments reassessed their SLOs, even though reassessment was not scheduled as part of their program planning activities. This shows the value the departments see in the SLO assessment process.

Administration

| Administrative Unit/Department | # of departmental AUOs | % of AUOs assessed | % of department personnel discussing results |
|---|---|---|---|
| Education Centers | 2 | 100% | 100% |
| CEED | 3 | 0 | 0 |
| Facilities Planning and Plant Operations (M & O) | 1 | 100% | 100% |
| Office of the Vice President, Administrative Services | 1 | 100% | 100% |
| Purchasing, Contracts and Risk Management | 1 | 100% | 100% |

ARC notes with pleasure that four of the five departments assessed their AUOs for the first time.

Assessment Progress

Transfer and Basic Skills

| Department | % of Core 4 Assessed | % of Course SLOs Assessed |
|---|---|---|
| Art Photography | 100% | 100% |
| Counseling | 100% | 54% |
| Digital Management Career Preparation | 100% | 75% |
| English as a Second Language | 100% | 100% |
| Music | 100% | 0% |
| Stroke and Disability Learning Center | Curriculum revised; no assessment yet | 100% |

Under the Revolving Wheel of Assessment, all transfer and basic skills departments were to have assessed each of the Core 4 and all course SLOs. All but one department completed the assessment of the Core 4. Three departments also assessed each of their course SLOs, while the other three varied in what they accomplished. The Music Department noted in its program plan that “the Program Chair never understood that each course SLO was supposed to be assessed, then discussed.” Instead, the department diligently reassessed the Core 4 in each course over the six-year period.
The Stroke Center undertook a major curriculum revision. Instead of assessing the Core 4, it completely revamped its courses and assessed the new ones for the first time. The Counseling program assessed 100% of the courses offered by its own department. However, it did not assess counseling courses offered by other departments, such as DSPS and Tutoring. ARC recommends that Counseling discuss those courses with the groups offering them and coordinate assessment efforts.

Career Technical Education

| Department | Re-examine Assessment Plan | Certificate SLOs Assessed | Course SLOs Assessed |
|---|---|---|---|
| Culinary Arts and Hospitality Management | ✓ | 100% | 100% |
| Dental Hygiene | ✓ | 100% | 100% |
| Early Childhood Education | ✓ | 100% | 100% |
| Radiologic Technology | ✓ | 100% | 100% |

Under the Revolving Wheel of Assessment, CTE programs were to re-examine their assessment plans and to assess all course and certificate SLOs. ARC is very pleased that all CTE departments completed each aspect of the work.

Overall analysis: The rate of assessment and faculty participation has increased significantly from previous years. However, three transfer departments did not complete course-level assessment. This finding is in line with last year’s ARC report and with the inventory of assessment produced by Cabrillo’s SLO Tracker. As part of its focus on quality assurance, Instruction conducted its first inventory of overall SLO assessment in Fall 2012. It used a tracking tool developed in response to the 2011 ARC annual report. The inventory showed that 61% of SLOs in active courses (those in the catalog and offered in the last three years) and 76% of certificate SLOs had been assessed. In response to these data, Instruction undertook the following:

• Asked departments to assess any course offered in Spring 2013 and Fall 2013 that had not yet been assessed, in addition to any other assessment scheduled as part of the Revolving Wheel.
Thanks to the hard work of all departments on campus, the overall rate of course assessment rose from 61% to 87% as of December 2013. It is expected to reach 90% once each department completes this accelerated assessment at the beginning of the Spring 2014 semester. These improved statistics will become apparent in future Program Planning reports. When the Music and Counseling departments submitted their program plans in Spring 2013, their course SLO assessment rates were 0% and 54% respectively. Both have since increased to 85%. Digital Management and Career Preparation has raised its rate from 75% to 90% since last spring. It has only one active course left to assess, and that course was not offered in the last year.

• Refined the SLO Tracking Tool to be more accurate.
• Worked with CurricUNET to create an electronic SLO Assessment module, which will be used for SLO and AUO assessment activities across the campus. It is being piloted in Fall 2013 and will then be implemented over the next two years. The Assessment module will:
1. Provide an electronic means to collect and archive SLO and AUO assessment. All faculty will submit their individual assessment analysis forms online, which will save time and archive all records in a central place.
2. Accurately track what has been assessed and what still needs to be done.
3. Create departmental assessment reports that summarize individual assessment results. Departments can then share data more easily to decide what is needed to improve student learning. These reports can also be shared between departments (to facilitate inter-departmental dialogue) and with ARC to assist with its annual report.
4. Create campus-wide reports of Core 4 assessment results, so that ARC can more easily analyze efforts across the campus.
5. Provide a planning function that reminds Program Chairs which SLOs should be assessed in any particular semester as part of the Revolving Wheel of Assessment.
6. Create quality control through an approval process. The SLO Coordinator will scrutinize and approve departmental reports. This creates consistency and makes the process more user-friendly when reports need to be revised.
• Revised the Core 4 assessment reporting forms to include numerical results. The forms now also provide separate discussions of what the results indicate could improve student learning in three areas, all measured by the Core 4: transfer departmental degrees, the GE program, and the college’s institutional outcomes. Previously, this discussion was combined into one area on the form.
• Revised the workbooks on the SLO web page to focus on assessing course and certificate SLOs as well as the Core 4.

Student Services

As noted earlier, no program plans for Student Services were scheduled. Even so, four departments undertook SLO assessment again because they saw value in what the results can reveal. Student Services annual reports ask departments to discuss SLO assessment progress. Once all Student Services departments have been brought onto the new system, the CurricUNET SLO module will make it easy to track what has been done.

Administration

All departments in Administration have written AUOs. Four of the five departments that wrote program plans also assessed them. The assessment results give departments opportunities to improve services and refine the wording of their AUOs. They also help departments judge whether their assessment methods provide enough useful information or need to be revised.

Assessment Results: Emerging Needs and Issues

The departments that assessed the college Core 4 competencies, certificate SLOs and individual course SLOs identified the following key student needs and issues:

• Academically, weaknesses in reading and writing skills affected student success.
Reading problems were mentioned most often.
• Academic success was further affected by the college budget crisis, which resulted in cutbacks in tutorials, the Writing Center and other student support services.
• Students also struggled with other effects of the budget crisis, including its impact on class scheduling, purchases of new equipment and upkeep of facilities.

Those departments proposed the following strategies to help meet these needs:
• In-class tests to assess student writing and reading skills very early in the semester.
• Better scaffolding of assignments to assist with reading and writing skills.
• Tutorial help, when staffing is restored.

In their program plans, many departments commented on the value of departmental dialogue about SLO assessment results. They also described how this dialogue led to concrete changes in departmental teaching or practices.
• Art Photography: “This process was beneficial to the program because it formalized the review process and included all faculty members in the discussion process. Both positive trends and areas that need improvement emerged from the assessment and discussions.”
• Culinary Arts and Hospitality Management: “All faculty, 100% of full time and adjunct, were involved in the assessment. Discussion at the meeting was fruitful, collegial and productive…There were several cases where assessment tools were not addressing what they were intended to measure. In these cases, the participating faculty helped to tweak assignments, projects or exams to better assess the SLO. In a couple of cases, the rate of A grades was very high. This prompted a very useful discussion among faculty on maintaining high standards and rigor in every course. As a consequence of this discussion, we have seen change in this practice.”
• Early Childhood Education: “The ECE faculty have found that these discussions provide a rich opportunity to talk about our teaching, and discuss ideas for improving both teaching and learning.
The conversations about SLOs have also served as a vehicle for: engaging in professional development activities, looking at our program planning process, completing the CAP work and the development of our AS-T in ECE.”
• English as a Second Language: “Another result of the completing the SLO course forms was to create a binder full of materials from natural language sources and molding them for language acquisition…ESL now has an abundance of materials for our classes, one class at each of the four core levels…Another benefit that came from the SLO course forms was to take more students to the Writing Center in Aptos and the ILC in Watsonville at the beginning of each semester to show students where they can help outside of class.”

Student Services SLO assessment revealed that students were making modest progress in three areas: applying for jobs, using Web Advisor to plan schedules and register for classes, and using appropriate student activities and government. Enrollment Services identified a need to duplicate its one-stop office at the Watsonville Center, which “will require reconfiguration of service counters and ongoing cross training of staff.” Some departments are considering revising their assessment instruments to gain better information on how to improve student learning.

The issues revealed by Administration AUO assessment varied by department. The Education Centers reported three key findings:
• Enrollment was lower at both Scotts Valley and Watsonville than last year.
• Computer use in Watsonville was low when averaged from 8 am to 8 pm, although all stations are in use at key times.
• Demand for tutoring and counseling appointments was high, in line with the Enrollment Services SLO assessment described above.

Both the Purchasing and Facilities departments discovered that much of the campus has had little interaction with their work order systems or with some of their services.
The Office of Administrative Services used a survey as its assessment instrument. The survey was given during a time of budgetary distress on campus, and this affected its results. Some respondents used the survey to voice campus-wide concerns about the budgetary climate rather than to evaluate the office’s services, which made it hard to glean useful information. Now that these first assessments have occurred, Purchasing, Facilities and the Office of Administrative Services are revising their assessment instruments to gather better and more useful information.

Analysis of Cabrillo’s Outcomes Assessment Process

This year’s analysis of the college’s assessment processes notes three improvements. Assessment measures are more sophisticated across the entire campus, participation has increased, and the college has responded to the quality-control recommendations in last year’s ARC report. Although assessment is “trending up” in all areas, some issues remain.

Instruction

In Instruction, the committee noted that more departments used the dialogue stage of the SLO assessment analysis process to create concrete actions to improve student learning. Some departments showed considerable sophistication in what they measured and how they analyzed it. Other departments seemed to struggle to get departmental faculty to participate. In addition, departments less experienced at SLO assessment analysis often listed professional development for their faculty as a way to improve, rather than focusing on student learning. ARC considered factors that might contribute to this disparity, such as department size and area of study, but found none of them to be significant. Instead, it believes that the leadership provided by program chairs is the determining factor in a department’s success with SLO assessment.
ARC also observed that some departments seemed to need more technical assistance from the Planning and Research Office. They needed help producing charts and, most importantly, understanding what the data in the charts mean. ARC recommends the following to improve SLO assessment in Instruction:
• Instruction should create a formal program chair training that covers how to lead the department in SLO assessment. The training could also cover time-management skills for the job, how to write a program plan, and the knowledge needed about campus processes and systems such as curriculum and budget allocation.
• Another flex workshop for program chairs should be held, giving them an opportunity to drop in and meet with the SLO Coordinator for specific help with their assessment issues.
• The SLO section in program plans should discuss three things: what students are doing well, what they are not doing well, and what the department is going to do to help them. The model used in the Applied Photography department’s 2013 program plan is a good example. Departments should focus on the specific steps they will take to help students, not only on what the department is learning about itself or its teaching.
• The Planning and Research Office should undertake an information campaign to tell departments about its services and how best to use them.

As a result of the college’s accreditation site visit, ARC also made several recommendations for changes in assessment in Instruction. The visiting team seemed confused about how Cabrillo defines a program for SLO assessment in transfer. The confusion seemed to arise from two issues:
1. The team was not familiar with the approach of treating GE as a single program. It had some concerns that this approach resulted in a lack of evidence of assessment of our departmental degrees in transfer.
2. The people the team interviewed gave differing answers about how the college defines programs in transfer.
After discussing the issues raised by the visiting team’s questions, ARC affirmed its commitment to Cabrillo’s approach. Individual departments in transfer will continue to assess the Core 4 as a way to measure three areas: the GE program, our institutional SLOs and departmental transfer degrees. However, as discussed on page 7, ARC recommended changing the Core 4 assessment forms so that they address specifically what the results show about each area. This change has already been implemented. ARC also recommended that the SLO Coordinator work with PRO to create a chart mapping in more detail what transfer and CTE departments assess. The chart would make it clear that courses, certificates, the GE program, departmental degrees and institutional outcomes are all measured. Finally, ARC recommended an informational campaign to clarify how the college defines a program.

Student Services

In Student Services, some departments seem to be enthusiastically embarking on SLO assessment, undertaking it yearly. This is a most promising development. ARC recommends that those departments fill out the SLO Assessment Analysis form each time assessment occurs. This will record their findings in detail, rather than only in the summary captured on the annual update forms.

Administration

The assessment of AUOs in Administration is very promising, and also very new. Now that some departments have assessed their AUOs for the first time, they have the opportunity to revise their assessment instruments. The committee noted that the surveys used by Purchasing, Facilities and the Office of the VP of Administrative Services did not seem to yield the best information for improving services. Members of ARC who also serve on CPC remembered the excellent budget pre- and post-test that the Vice President of Administrative Services used to teach the budget process to that committee. They thought it might be a better measure of the department’s AUO.
ARC recommends that Administrative Services continue to explore various assessment instruments to find those that will provide pertinent information. ARC also noted that, due to changes in Administration, no progress has been made on one of last year’s recommendations. That recommendation was that administrative departments share their program plans in a committee similar to CIP in scope and purpose. Dr. Jones is exploring options for this, and some progress may be made in the coming year.

Overall Analysis

This is the seventh report the committee has written, and it is time to take a closer look at progress over time. In 2014, ARC will undertake two projects to address this:
1. Examine the issues noted in all ARC reports and analyze the trends that have emerged over the years, along with the college’s progress in addressing them.
2. Evaluate the committee’s charge, its effectiveness, and its relation to other campus shared-governance bodies.

Progress on Past Recommendations

Last year, ARC made several recommendations to improve the campus’ SLO and AUO assessment process. The following were completed:
1. As noted on page 7, an electronic filing and archival system has been purchased. The CurricUNET SLO module is being piloted in Fall 2013 and, if all goes well, will be implemented over the next three semesters.
2. Assessment Analysis forms were modified to include reports on the effectiveness of previous assessment results and the activities undertaken as a result.
3. The SLO Tracking Tool has been refined.
4. Departments that the SLO Tracker showed were struggling to complete assessment have worked with the SLO Coordinator as needed.
5. A special Program Chair section of the SLO website was created, along with an SLO Handbook for PCs that is posted on that section of the website.
6. A second SLO survey was conducted in May 2013.
A summary of the positive results, entitled “Things are Trending Up!”, is posted on the SLO website at https://sites.google.com/a/cabrillo.edu/student-learning-outcomes/.
7. ARC’s name was changed from the SLO Assessment Review Committee to the Outcomes Assessment Review Committee.

Some progress has been made on three other recommendations from the 2012 report. First, the SLO Coordinator was directed to get feedback from the departments participating in the pilot for numerical reporting of assessment results. She found that all but one of these departments wished to continue, but with more overall participation. Not all faculty in each department had added numerical reporting to their assessment analysis forms. These results, together with the development of the CurricUNET SLO module, led ARC to revise the Core 4 assessment analysis form to include numerical results. Faculty and entire departments now rate student competency on a four-point scale. Very early responses to the new form have been positive. ARC made this change for two reasons:
• It will facilitate dialogue within and between departments, allowing them to more easily see a summary of each other’s results.
• It will give ARC a better way to assess Core 4 results across the entire campus each year, allowing it to compare departmental ratings and get a sense of overall progress across the college.

Second, ARC recommended that the college find a better way for departments in all assessment areas to share their good work and the innovations they have created to improve student learning. Ideally this would happen outside of Flex week, so that the entire campus can participate. The Faculty Senate discussed this, including the possibility of a mid-semester flex day devoted to SLOs, but no formal process has yet been identified.
Lastly, ARC recommended that a dialogue take place with Deans, the Council for Instructional Planning and the Faculty Senate to brainstorm other methods to ensure full compliance with college SLO standards. This has occurred in each area, with some processes clarified to better describe expectations, but no formal recommendations have yet been adopted. CIP and the Faculty Senate continue to discuss how to better “incentivize” assessment activities and to laud the departments that excel at meeting expectations.
Institutional Effectiveness
In Fall 2012, ARC’s responsibilities were expanded to include campus institutional effectiveness issues. Over the last year, to fulfill this part of its charge, the committee has:
• Created a diagram that captures the college’s integrated planning process for the Accreditation Self-Evaluation.
• Made recommendations for the diagram that captures Cabrillo’s decision-making process for the Accreditation Self-Evaluation.
• Served as readers for the SLO chapter in the Accreditation Self-Evaluation.
• Created a template for program plans in Administration.
• Discussed the link between SLO assessment and student success.
• Recommended the process to establish benchmarks for Instructional Goals required for the Accreditation Self-Evaluation.
• Created the SLO Assessment Dashboard that is posted on the SLO website: https://sites.google.com/a/cabrillo.edu/student-learning-outcomes/.
• Made recommendations for the frequency of AUO assessment in Administration.
• Discussed and piloted a possible template for minutes in campus shared governance committees.
Commendations
ARC salutes the Early Childhood Education and Art Photography departments for doing an excellent job analyzing their SLO assessment results in depth and with specific detail, resulting in compelling interventions to improve student learning. Both discussions could serve as models for other departments because of how they are organized and presented.
ARC lauds the Admissions and Records, Disabled Student Programs and Services, Financial Aid, Student Affairs/Student Activities and Government, and Student Employment departments in Student Services, which undertook SLO assessment even though it was not scheduled and reported the results in their annual updates. ARC commends the program plans of the Education Centers, the Vice President of Administrative Services Office, and Purchasing for successfully depicting complex, multi-faceted departments and undertaking useful and reasonable measures to assess AUOs.
Recommendations
2013 Recommendations for Teaching and Learning
The Faculty Senate has primary responsibility for providing leadership in teaching and learning, particularly in areas related to curriculum and pedagogy, while the Student Services Council directs the efforts in its area. The recommendations below will be put into effect by the Faculty Senate, the Student Services Council and college shared governance committees.
• Utilize the exciting new activities from the Student Success Committee, particularly the Faculty Consultation network, for the improvement of pedagogy and the sharing of best practices, particularly across departments, so faculty can learn successful strategies used by others to solve common problems. Responsible party: Student Success Committee and Staff Development Committee. Time line: Spring and Fall 2014 flex.
2013 Recommendations for SLO Assessment Processes
• In Instruction, create a formal program chair training that includes how to lead the department in SLO assessment. Responsible party: Deans, Vice President of Instruction, Program Chairs. Time line: Discuss in Spring 2014.
• In Instruction, hold a flex workshop for Program Chairs to get help on specific issues from the SLO Coordinator. Responsible party: SLO Coordinator and Staff Development Committee. Time line: Fall 2014.
• In Instruction, revise instructions for the SLO section in program plans. Responsible party: SLO Coordinator and CIP. Time line: Spring 2014.
• In Instruction, revise Core 4 Assessment Analysis forms to include separate discussions of student success in departmental degrees, the GE program and the college’s institutional outcomes. Responsible party: ARC. Time line: Fall 2013 (completed).
• In Instruction, create a chart to better map what is assessed by transfer and CTE departments. Responsible party: SLO Coordinator and PRO. Time line: Spring 2014.
• Create an informational campaign for how the college defines a program. Responsible party: ARC and the Faculty Senate. Time line: Spring and Fall 2014.
• Create an informational campaign about the services available from the Planning and Research Office. Responsible party: PRO. Time line: Fall 2014.
• In Student Services, fill out assessment analysis forms in addition to the annual updates to record SLO assessment results. Responsible party: Student Services and the SLO Coordinator. Time line: Spring 2014.
• In Administration, after an AUO is assessed, continue to refine assessment instruments. Responsible party: Administration and the SLO Coordinator. Time line: On-going.
• Analyze all ARC annual reports, noting trends that have emerged over time and the college’s progress in addressing them. Responsible party: ARC. Time line: Spring and Fall 2014.
• Undertake a self-evaluation of the committee. Responsible party: ARC. Time line: Spring 2014.
Completed Recommendations from Previous ARC Reports
• 2012: Create an electronic means of organizing, scheduling and reporting SLO assessment results. Action taken: Creation of the CurricUNET SLO module. Next steps: Complete the pilot and implement gradually, Spring 2014 to Spring 2015.
• 2012: Provide web-based and flex training in how to assess course and certificate SLOs. Action taken: Create flex trainings; new Assessment Workbooks on the SLO website.
• 2012: Departments struggling with SLO assessment should work individually with the SLO Coordinator to find solutions for any issues. Action taken: Individual departments worked with the SLO Coordinator as needed. Next steps: Continue to offer this service.
• 2012: Create a special section of the SLO web site for Program Chairs. Action taken: Section created and introduced at the Spring 2013 Program Chairs meeting. Next steps: Add more exemplary examples and models to this section of the site.
• 2012: Revise Assessment Analysis forms to gather better adjunct and curriculum data; add a line to report on previous interventions the last time an SLO was assessed and the progress made. Action taken: Forms revised as part of the creation of the CurricUNET SLO module. Next steps: Revise as needed.
• 2012: Disseminate results of the ARC survey; administer the survey again in 2013. Action taken: Results disseminated to the Faculty Senate and posted on the SLO website; new survey undertaken in Spring 2013 and results disseminated in Fall 2013. Next steps: Undertake the survey again in 5-6 years.
• 2012: Gather feedback on the pilot on quantitative reporting of SLO results. Action taken: Results gathered and reported to ARC; numerical reporting incorporated into Core 4 assessment analysis forms. Next steps: SLO Coordinator to work with departments to increase participation in the pilot.
• 2012: Adopt the new template for non-Instructional program planning created by ARC. Action taken: Template adopted. Next steps: Template to be tweaked as needed by Administrative units.
• 2012: Change ARC’s name. Action taken: The Faculty Senate approved the name change in Spring 2013. Next steps: None.
• 2011: Provide training about organizing and facilitating departmental SLO assessment to Program Chairs and Deans. Action taken: Flex workshop offered Spring and Fall 2012; training for Deans occurred at a CIP meeting in Spring 2013. Next steps: None.
• 2011: Convene a meeting of Program Chairs of smaller departments to brainstorm organizational strategies for SLO assessment. Action taken: Flex workshop held in Spring 2013. Next steps: Continue to create a means for PCs to dialogue about SLO assessment.
• 2011: Create a web tool that lists the calendar for every Instructional department’s SLO assessment schedule. Action taken: SLO Tracking Tool created, but it was only able to inventory (not calendar) college SLO assessment; the CurricUNET SLO module has some planning capabilities. Next steps: Pilot CurricUNET planning tools.
• 2011: Write Administrative Unit Outcomes for each department in Administration. Action taken: 100% complete. Next steps: None.
• 2011: Revise and update the SLO web site. Action taken: Web site revision completed Spring 2013. Next steps: Revise as needed.
• 2011: Facilitate interdisciplinary discussions about student mastery of each of the four core competencies. Action taken: Fall 2012 and Spring 2013 Flex meetings. Next steps: Continue to search for ways for faculty to share results across departments other than Flex workshops.
• 2010: Serve as readers for the SLO chapter that will be included in the 2012 Accreditation Self-Evaluation. Action taken: ARC read the chapter draft in Fall 2012 and provided feedback; chapter completed in Spring 2013. Next steps: None.
Recommendations in Process from Previous ARC Reports
• 2012: Facilitate a dialogue with Deans, the Council for Instructional Planning and the Faculty Senate to brainstorm other methods to ensure full compliance with college SLO standards. Actions undertaken: Discussions at ARC, CIP and the Faculty Senate from Fall 2012 to Fall 2013. Next steps: Continue discussion.
• 2012: Create a forum for Student Services and Instructional departments to share SLO assessment results that occurs outside of traditional flex week. Actions undertaken: Discussions at the Faculty Senate and Staff Development Committee. Next steps: Continue discussions with all parties, including the CCFT, since Flex time is a negotiated item.
• 2011: Create a venue or reporting mechanism for Administration’s Program Plans. Actions undertaken: Discussions within Cabinet. Next steps: Continue discussion with President Jones and Cabinet.
• 2011: Post examples of a full assessment cycle for each area on the SLO web site. Actions undertaken: No progress. Next steps: Add to website.
Past Recommendations from ARC Reports that are now Institutional Practices
• 2008: Offer an intensive SLO Assessment workshop for all faculty in instructional departments two years in advance of Program Planning. Actions taken: Annual Spring Flex Workshop.
• 2009: Support ongoing, sustained staff development in the assessment of student learning, including rubric development. Actions taken: Ongoing Flex Workshops.
• 2009: Share effective practices and methods for modeling strategies for assignments. Actions taken: Ongoing Flex Workshops.
• 2009: Provide support for faculty as they confront challenges to academic ethics, such as plagiarism and other forms of cheating. Actions taken: Ongoing Flex workshops; creation of the Student Honor Code.
• 2009: Provide sustained faculty development for addressing student learning needs in reading, research and documentation, and writing. Actions taken: Ongoing Flex workshops.
• 2009: Communicate to the college at large the importance of maintaining and documenting a college-wide planning process that systematically considers student learning, including non-instructional areas. Actions taken: “Breakfast with Brian” flex workshops; development of the Faculty Inquiry Network; Bridging Research Information and Culture Technical Assistance Project; discussions about Student Success Task Force Recommendations; campus-wide focus on Student Success.
• 2010: Survey campus to assess awareness of Cabrillo’s SLO process. Actions taken: First survey administered Fall 2011; second to be administered Spring 2013.
• 2011: Inform potential hires of Cabrillo’s SLO process and our participation expectations in trainings for new faculty, through mentorships and in the Faculty Handbook. Actions taken: Wrote section on SLOs for new Faculty Handbook; SLO process presented at adjunct faculty training.
Summary of Issues in Past Reports
As stated on page 13, in Spring 2014 ARC will review the chart below, which catalogues the concerns noted in each ARC report, and analyze the trends that have emerged over time and the progress the college has made in addressing them. This analysis will be included in the 2014 ARC report.
• 2007. Emerging needs and issues: Students need stronger skills in writing, reading, and college readiness; the longer a student is enrolled at Cabrillo, the more positive their association with the Library. Recommendations for teaching and learning: Increase emphasis on class discussions and student collaboration. More tutorial assistance for students. Recommendations for SLO assessment processes: Encourage greater adjunct involvement; continue to educate the Cabrillo community about the paradigm shift.
• 2008. Emerging needs and issues: Teachers want more frequent collegial exchange; improved facilities/equipment needed. Recommendations for SLO assessment processes: Encourage greater adjunct involvement. SLO workshop for programs two years in advance of Instructional Planning and for non-instructional programs; develop a system of succession and dissemination of expertise in SLOAC across campus.
• 2009. Emerging needs and issues: Students need more instruction in reading, research and documentation, and writing; concerns about plagiarism. Recommendations for teaching and learning: Provide ongoing, sustained faculty development; share effective practices and strategies for modeling assignments. Recommendations for SLO assessment processes: Encourage greater adjunct involvement. Communicate to the college the importance of maintaining and documenting a planning process that systematically considers student learning.
• 2010. Emerging needs and issues: Some students need more instruction in basic academic skills and college survival skills. Recommendations for teaching and learning: Provide faculty training in new pedagogies, technology, and contextualized instruction. Support the teaching of college survival skills across the curriculum. Recommendations for SLO assessment processes: Encourage greater adjunct involvement. Embed SLO assessment expectations in faculty hiring, new hire training and mentoring practices. Develop an electronic means for SLO assessment result reporting. Explore adding a quantitative component.
• 2011. Emerging needs and issues: Students need to improve their reading and writing skills. Recommendations for teaching and learning: Provide faculty development to improve pedagogy and sharing of best practices. Use flex hours, but not necessarily during flex week, to do this. Recommendations for SLO assessment processes: Provide training to Deans and Program Chairs on organizing SLO tasks. Revise web site and add web tools to assist in organizing the process. Hold campus-wide discussions on Core 4 assessment results.
• 2012. Emerging needs and issues: Students need to be more prepared for college-level work, especially writing in transfer-level courses. Recommendations for teaching and learning: Provide faculty development to improve pedagogy and sharing of best practices. Facilitate discussions between Instructional faculty and Student Services faculty and staff to share SLO assessment results. Recommendations for SLO assessment processes: Undertake pilot for numerical reporting. Find ways to showcase assessment insights occurring across the campus. Increase the rate of SLO assessment by creating an electronic tool to organize, track and report assessment results. Work individually with struggling departments to achieve timely SLO assessment. Create a program chair section of the SLO website.
• 2013. Emerging needs and issues: Students were affected by budget cuts, particularly in tutoring and class offerings. Recommendations for teaching and learning: Utilize projects from the Student Success Committee as opportunities for faculty to share best practices across departments. Recommendations for SLO assessment processes: Create program chair training. Revise Core 4 form and instructions for SLO section on program plans. Create informational campaigns on PRO services and the definition of a program. In Student Services and Administration, record assessment results on forms and continue to revise assessment instruments.