Reduction of bias in exponential family models Ioannis Kosmidis

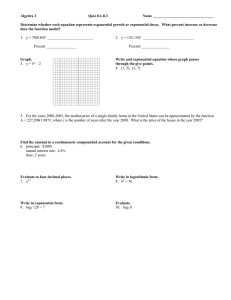

advertisement

Reduction of bias in exponential family models

with emphasis on models for categorical responses

Ioannis Kosmidis

Research Fellow

Department of Statistics

University of Padua 2009

Methods for improving the bias

Adjusted score functions for exponential family models

Univariate generalized linear models

Binary regression models

Discussion and current work

Outline

1. Methods for improving the bias
2. Adjusted score functions for exponential family models
3. Univariate generalized linear models
4. Binary regression models
5. Discussion and current work

Kosmidis, I.

Bias reduction in exponential family models

Bias correction

In regular parametric models the maximum likelihood estimator β̂ is consistent and the expansion of its bias has the form

\[ E(\hat\beta) = \beta_0 + \frac{b_1(\beta_0)}{n} + \frac{b_2(\beta_0)}{n^2} + \frac{b_3(\beta_0)}{n^3} + \ldots . \]

Bias-corrective methods

→ Estimate b1 (β0 ) by b1 (β̂).

→ Calculate the bias-corrected estimate β̂ − b1 (β̂)/n.
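As a small illustration of the two steps above, consider a model that is not part of the talk and is assumed here only for convenience: n independent exponential observations with rate β. The maximum likelihood estimator is β̂ = 1/ȳ and E(β̂) = nβ/(n − 1) = β + β/n + β/n² + ..., so that b1(β) = β. The function names and the data below are hypothetical.

```python
def ml_rate(y):
    """Maximum likelihood estimate of an exponential rate: 1 / sample mean."""
    return len(y) / sum(y)

def bias_corrected_rate(y):
    """Plug-in bias correction beta_hat - b1(beta_hat)/n, with b1(beta) = beta."""
    n = len(y)
    beta_hat = ml_rate(y)
    return beta_hat - beta_hat / n

y = [0.8, 1.9, 0.4, 2.6, 1.3]  # illustrative data only
print(ml_rate(y), bias_corrected_rate(y))
```

In this particular model the corrected estimator equals (n − 1)/Σy, which happens to be exactly unbiased; in general the correction removes only the O(1/n) term.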

Bias correction

Cox & Snell (1968) derive the expression for b1(β)/n for a very general family of models.

Anderson & Richardson (1979) and Schaefer (1983) calculate b1(β)/n for logistic regressions.
→ The bias-corrected estimates shrink towards 0 relative to the maximum likelihood estimates, resulting in smaller variance and hence smaller mean squared error.

Cordeiro & McCullagh (1991) give the general form of b1(β)/n for generalized linear models.
→ Bias correction via a supplementary iterative re-weighted least squares iteration.
→ Interesting results for binary regression models on the shrinkage properties of the bias-corrected estimates.

Bias reduction

Haldane (1955) and Anscombe (1956), on the estimation of the log-odds ψ = log{π/(1 − π)} from a binomial observation y with index m, proposed

\[ \tilde\psi = \log\frac{y + 1/2}{m - y + 1/2} . \]

→ ψ̃ has O(m^{-2}) bias.

Quenouille (1956) proposes the jackknife, the first bias-reducing method that is applicable to general families of distributions.

Firth (1993), for regular parametric models, proposes appropriate adjustments to the efficient score vector so that the solutions of the adjusted score equations have bias of order O(n^{-2}).
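The Haldane and Anscombe estimator above can be examined numerically. The sketch below (function names are hypothetical) computes its exact bias by summing over the binomial probability mass function; it also shows that the estimate stays finite at y = 0, where the unadjusted log-odds estimate is infinite.

```python
import math

def adjusted_log_odds(y, m):
    """Haldane-Anscombe estimate: log{(y + 1/2) / (m - y + 1/2)}."""
    return math.log((y + 0.5) / (m - y + 0.5))

def exact_bias(m, pi):
    """Exact bias of the adjusted estimator under a binomial(m, pi) model."""
    psi = math.log(pi / (1.0 - pi))
    mean = sum(
        math.comb(m, y) * pi**y * (1.0 - pi) ** (m - y) * adjusted_log_odds(y, m)
        for y in range(m + 1)
    )
    return mean - psi

for m in (10, 20, 40):
    print(m, exact_bias(m, 0.3))
```

Printing the bias for increasing m gives a direct way to inspect how quickly it decays with the binomial index.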

Which approach?

Bias-corrected estimators:
→ Easy to obtain.
→ Undefined when ML estimates are infinite.
→ Over-correction for small and moderate samples (Bull & Greenwood, 1997; Bull et al., 2002).

Jackknife:
→ Easy to implement.
→ Problems when ML estimates are infinite.
→ Does not eliminate first-order bias (Bull & Greenwood, 1997).

Adjusted score equations:
→ ML estimates are not required.
→ Estimators with “better” properties: Mehrabi & Matthews (1995), Pettitt et al. (1998), Heinze & Schemper (2002; 2005), Bull et al. (2002; 2007), Zorn (2005), Sartori (2006).

Exponential family models

Bias-reducing adjusted score functions

Obtaining the bias-reduced estimates

Special case: multivariate generalized linear models

Example: Cumulative link models for ordinal responses

Exponential family models

Consider a q-dimensional random variable Y from the exponential family having density or probability mass function of the form

\[ f(y; \theta) = \exp\left\{ \frac{y^T\theta - b(\theta)}{\lambda} + c(y, \lambda) \right\} , \]

where the dispersion λ is assumed known.

Then,

\[ E(Y; \theta) = \nabla b(\theta) = \mu(\theta) , \qquad \mathrm{cov}(Y; \theta) = \lambda D^2(b(\theta); \theta) = \Sigma(\theta) . \]

Notational convention: D^2(b(θ); θ) is the q × q Hessian matrix of b(θ) with respect to θ.

Exponential family models

Assume observed response vectors y_1, ..., y_n which are realizations of independent random vectors Y_1, ..., Y_n, each distributed according to the exponential family of distributions.

Consider an exponential family nonlinear model (or generalized nonlinear model)

\[ g_r(\mu_r) = \eta_r(\beta) \quad (r = 1, \ldots, n) , \]

where
→ g_r : ℝ^q → ℝ^q, and
→ η_r : ℝ^p → ℝ^q is a specified vector-valued function of model parameters β.

Exponential family models

Efficient score vector for β:

\[ U = \sum_r X_r^T W_r D(\eta_r; \mu_r)(y_r - \mu_r) , \]

where
→ W_r = D_r Σ_r^{-1} D_r^T is the matrix of working weights,
→ X_r = D(η_r; β),
→ D_r^T = D(μ_r; η_r), and
→ μ_r = μ(θ_r(β)) and Σ_r = Σ(θ_r(β)).

Thorough technical treatment of this class of models can be found in Wei (1997).

Notational convention: if a and b are p and q dimensional vectors, respectively, D(a; b) is the p × q matrix of first derivatives of a with respect to b.

Adjusted score functions for general parametric models

For a general parametric model with p-dimensional parameter vector β, the tth component of the score vector U_t is adjusted to

\[ U_t^* = U_t + A_t \quad (t = 1, \ldots, p) , \]

where A_t is O_p(1) as n → ∞.

Denote by β̃ the estimator that results from U_t^* = 0 (t = 1, ..., p), by F the Fisher information and by I the observed information on β.

Firth (1993) showed that β̃ has O(n^{-2}) bias if A_t is either

\[ A_t^{(E)} = \frac{1}{2}\,\mathrm{tr}\left\{ F^{-1}(P_t + Q_t) \right\} \quad\text{or}\quad A_t^{(O)} = I_t F^{-1} A^{(E)} , \]

where P_t = E(U U^T U_t) and Q_t = E(−I U_t).

Adjusted score functions for general parametric models

It can be shown that A_t^{(E)} and A_t^{(O)} are particular instances of bias-reducing adjustments in the more general family

\[ A_t = (G_t + R_t) F^{-1} A^{(E)} , \]

where R_t is O_p(n^{1/2}) and G_t is the tth row of either F, I or U U^T.

General family of adjusted score functions

The components of the adjusted score vector take the general form

\[ U_t^* = U_t + \sum_{u=1}^{p} e_{tu} A_u^{(E)} \quad (t = 1, \ldots, p) , \]

where e_tu denotes the (t, u)th component of the matrix (G + R)F^{-1}.

Multivariate generalized nonlinear models

Adjusted score functions for multivariate generalized nonlinear models

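\[ U_t^* = U_t + \frac{1}{2}\sum_r\sum_{s=1}^{q} \mathrm{tr}\left[ H_r W_r^{-1} \left\{ [D_r\Sigma_r^{-1}]_s \otimes 1_q \right\} D^2(\mu_r; \eta_r) \right] x^*_{rst} + \frac{1}{2}\sum_r\sum_{s=1}^{q} \mathrm{tr}\left\{ F^{-1} (W_{rs} \otimes 1_q) D^2(\eta_r; \beta) \right\} x^*_{rst} \quad (t = 1, \ldots, p) , \]

where
→ 1_q is the q × q identity matrix,
→ W_rs is the sth row of the q × q matrix W_r as a 1 × q vector, and [D_r Σ_r^{-1}]_s denotes, analogously, the sth row of D_r Σ_r^{-1},
→ H_r = X_r F^{-1} X_r^T W_r,
→ D^2(η_r; β) is the pq × p matrix with sth block D^2(η_rs; β), and
→ x*_rst = \sum_{u=1}^{p} e_tu x_rsu.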

Modified Fisher scoring iteration

\[ \tilde\beta_{(j+1)} = \tilde\beta_{(j)} + F_{(j)}^{-1} U^*_{(j)} \quad (j = 0, 1, \ldots) . \]

β̃(0) can be set to the maximum likelihood estimates, if available and finite.

Estimated standard errors for β can be obtained by taking the square roots of diag(F^{-1}) at β̃.

More convenient/efficient schemes can be constructed by exploiting the special structure of the adjusted score functions for any given model...
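A minimal sketch of the modified Fisher scoring iteration, for the simplest possible case: the log-odds ψ of a single binomial(m, π) observation. It assumes the adjusted score U* = y + 1/2 − (m + 1)π, which is the logistic special case given later in the talk with h = 1, and F = mπ(1 − π). The function name and the starting value ψ = 0 are choices made here for illustration.

```python
import math

def modified_fisher_scoring(y, m, psi=0.0, tol=1e-12, max_iter=100):
    """Solve U*(psi) = 0 for the log-odds psi of one binomial(m, pi) observation."""
    for _ in range(max_iter):
        pi = 1.0 / (1.0 + math.exp(-psi))
        fisher = m * pi * (1.0 - pi)               # F(psi)
        adjusted_score = y + 0.5 - (m + 1.0) * pi  # U*(psi) = U + A^(E)
        step = adjusted_score / fisher
        psi += step
        if abs(step) < tol:
            break
    return psi

print(modified_fisher_scoring(0, 10), math.log(0.5 / 10.5))
```

The fixed point is log{(y + 1/2)/(m − y + 1/2)}, so the iteration recovers the Haldane and Anscombe estimate. With unit steps the error contracts by a factor of roughly 1/m per iteration near the solution, so this sketch is intended for m > 1.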

Special case: multivariate generalized linear models

Adjusted score functions for multivariate generalized linear models

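General link functions

\[ U_t^* = U_t + \frac{1}{2}\sum_r\sum_{s=1}^{q} \mathrm{tr}\left[ H_r W_r^{-1} \left\{ [D_r\Sigma_r^{-1}]_s \otimes 1_q \right\} D^2(\mu_r; \eta_r) \right] x^*_{rst} + \underbrace{\frac{1}{2}\sum_r\sum_{s=1}^{q} \mathrm{tr}\left\{ F^{-1} (W_{rs} \otimes 1_q) D^2(\eta_r; \beta) \right\} x^*_{rst}}_{\text{for multivariate GLMs } \eta_r = X_r\beta \text{, and hence this summand is } 0} \quad (t = 1, \ldots, p) . \]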

Cumulative link models for ordinal responses

Consider a multinomial response Y with k ordered categories and corresponding category probabilities π_1, ..., π_k.

The cumulative link model (McCullagh, 1980; Agresti, 2002) is

\[ g(\pi_1 + \ldots + \pi_s) = \alpha_s + \beta^T x \quad (s = 1, \ldots, k - 1) , \]

where
→ α_s ∈ ℝ with α_1 < ... < α_{k−1},
→ β ∈ ℝ^p, and
→ x is a p-vector of covariates.

Important special cases are the proportional-odds model and the proportional hazards model in discrete time (grouped survival times).
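To make the model definition concrete, the sketch below evaluates the category probabilities implied by a cumulative link model with the logit link (the proportional-odds case). The function name and the parameter values are hypothetical and chosen only for illustration.

```python
import math

def category_probabilities(alpha, beta, x):
    """Category probabilities pi_1, ..., pi_k under a logit cumulative link model."""
    lin = sum(b * xi for b, xi in zip(beta, x))
    # Cumulative probabilities pi_1 + ... + pi_s for s = 1, ..., k - 1, then 1 for s = k.
    cumulative = [1.0 / (1.0 + math.exp(-(a + lin))) for a in alpha] + [1.0]
    probs = [cumulative[0]]
    probs += [cumulative[s] - cumulative[s - 1] for s in range(1, len(cumulative))]
    return probs

probs = category_probabilities(alpha=[-1.0, 0.5, 2.0], beta=[0.8], x=[1.0])
print(probs, sum(probs))
```

The ordering constraint on the α_s is what guarantees that the differences of cumulative probabilities are positive.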

Cumulative link models for ordinal responses

Let γ = (α_1, ..., α_{k−1}, β_1, ..., β_p)^T.

On observing n multinomial vectors Y_1, ..., Y_n and having available n corresponding p-vectors x_1, ..., x_n of covariates, we can write

\[ g(\pi_{r1} + \ldots + \pi_{rs}) = \eta_{rs} = \sum_{t=1}^{p+q} \gamma_t z_{rst} \quad (s = 1, \ldots, k - 1 ;\ r = 1, \ldots, n) , \]

where z_rst is the (s, t)th element of the q × (p + q) matrix

\[ Z_r = \begin{pmatrix} 1 & 0 & \ldots & 0 & x_r^T \\ 0 & 1 & \ldots & 0 & x_r^T \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \ldots & 1 & x_r^T \end{pmatrix} \quad (r = 1, \ldots, n) . \]

Cumulative link models for ordinal responses

Score functions

\[ U_t = \sum_r \sum_{s=1}^{k-1} g'_{rs} \left( \frac{y_{rs}}{\pi_{rs}} - \frac{y_{rs+1}}{\pi_{rs+1}} \right) z_{rst} \quad (t = 1, \ldots, p + q) , \]

where g'_rs = dg^{-1}(η)/dη at η = η_rs.

Adjusted score functions with A^(E) adjustments

The tth component (t = 1, ..., p + q) of the adjusted score vector has the form

\[ U_t^* = \sum_r \sum_{s=1}^{k-1} g'_{rs} \left( \frac{y_{rs} + c_{rs} - c_{rs-1}}{\pi_{rs}} - \frac{y_{rs+1} + c_{rs+1} - c_{rs}}{\pi_{rs+1}} \right) z_{rst} , \]

where c_rs = m_r g''_rs a_rss / 2 and c_r0 = c_rk = 0, with
→ g''_rs = d^2 g^{-1}(η)/dη^2 at η = η_rs,
→ m_r = \sum_{s=1}^{q} y_rs, and
→ a_rss the (s, s)th element of the q × q matrix Z_r F^{-1} Z_r^T.

Pseudo-responses for cumulative link models

If c_rs were known constants, then the bias-reduced estimates using adjustments A^(E) could be obtained by simply replacing the actual responses by the pseudo-responses

\[ y^*_{rs} = y_{rs} + c_{rs} - c_{rs-1} \quad (s = 1, \ldots, k) , \]

with c_r0 = c_rk = 0, and then proceeding with usual maximum likelihood.

However, c_rs generally depend on the model parameters.

Obtaining the bias-reduced estimates

Let y_r = (y_r1, ..., y_rk−1)^T, π_r = (π_r1, ..., π_rk−1)^T and η_r = (η_r1, ..., η_rk−1)^T.

The rth working observation vector for maximum likelihood is

\[ \zeta_r = Z_r \gamma + (D_r^T)^{-1} (y_r - m_r \pi_r) , \]

where D_r^T = m_r D(π_r; η_r).

Modified iterative generalized least squares

If y_rs are replaced by y*_rs we obtain the modified working observation vector having components

\[ \zeta^*_{rs} = \zeta_{rs} + \frac{m_r g''_{rs} a_{rss}}{2 g'_{rs}} \quad (s = 1, \ldots, k - 1) . \]

Illustration: Cheese-tasting experiment

Table: The cheese-tasting experiment

                    Response category
Cheese     1    2    3    4    5    6    7    8    9    Total
A          0    0    1    7    8    8   19    8    1       52
B          6    9   12   11    7    6    1    0    0       52
C          1    1    6    8   23    7    5    1    0       52
D          0    0    0    1    3    7   14   16   11       52

Data analyzed in McCullagh & Nelder (1989, §5.6) using a proportional-odds model with

\[ \log\frac{\pi_{r1} + \ldots + \pi_{rs}}{\pi_{rs+1} + \ldots + \pi_{r9}} = \alpha_s - \beta_r \quad (s = 1, \ldots, 8 ;\ r = 1, \ldots, 4) , \qquad \beta_4 = 0 . \]

Illustration: Cheese-tasting experiment

Results are based on simulating 50000 samples under the ML fit. No infinite ML estimates occurred in the simulation, but the probability of encountering them is > 0.

          Estimates             Simulation results
                                Bias                 MSE               Coverage
          ML        BR          ML        BR         ML       BR       ML       BR
α1      −7.080    −6.921      −0.185    −0.003      0.496    0.333    0.951    0.952
α2      −6.025    −5.909      −0.124    −0.001      0.263    0.233    0.946    0.948
α3      −4.925    −4.834      −0.100    −0.001      0.207    0.187    0.947    0.947
α4      −3.857    −3.786      −0.078    −0.001      0.170    0.156    0.948    0.949
α5      −2.521    −2.472      −0.052    −0.001      0.128    0.119    0.950    0.951
α6      −1.569    −1.538      −0.033    −0.001      0.102    0.096    0.951    0.953
α7      −0.067    −0.065      −0.002   < 0.001      0.074    0.071    0.951    0.955
α8       1.493     1.457       0.040     0.002      0.124    0.113    0.954    0.954
β1      −1.613    −1.582      −0.034    −0.001      0.152    0.143    0.950    0.954
β2      −4.965    −4.871      −0.101    −0.002      0.254    0.231    0.946    0.950
β3      −3.323    −3.261      −0.068    −0.002      0.198    0.184    0.949    0.951

Adjusted score functions

Suggested pseudo-responses

Implementation via modified working observations

Bias-reducing penalized likelihoods

Adjusted score functions for generalized linear models

Assume a scalar response variable, i.e. q = 1.

For simplicity, write
→ κ_{2,r} and κ_{3,r} for the variance and the third cumulant of Y_r, and
→ w_r = d_r^2 / κ_{2,r} for the working weights, where d_r = dμ_r/dη_r (r = 1, ..., n).

Adjusted score functions for generalized linear models

Adjusted score functions for univariate generalized linear models

The general form of the adjusted score functions reduces to

\[ U_t^* = U_t + \frac{1}{2}\sum_r h_r \frac{d'_r}{d_r} \sum_{u=1}^{p} e_{tu} x_{ru} \quad (t = 1, \ldots, p) , \]

where
→ d'_r = d^2 μ_r / dη_r^2,
→ x_rt is the (r, t)th element of the n × p design matrix X,
→ h_r is the rth diagonal element of H = X F^{-1} X^T W, with W = diag(w_1, ..., w_n), and
→ e_tu denotes the (t, u)th component of the matrix (G + R)F^{-1}.

Adjusted score functions for generalized linear models

The ordinary score functions have the form

\[ U_t = \sum_r \frac{w_r}{d_r} (y_r - \mu_r) x_{rt} \quad (t = 1, \ldots, p) . \]

For A^(E) adjustments, e_tu = 1 if t = u and 0 otherwise.

Adjusted score functions using A^(E) adjustments

\[ U_t^* = \sum_r \frac{w_r}{d_r} \left( y_r + \frac{1}{2} h_r \frac{d'_r}{w_r} - \mu_r \right) x_{rt} \quad (t = 1, \ldots, p) . \]

Suggested pseudo-responses

\[ y_r^* = y_r + \frac{1}{2} h_r \frac{d'_r}{w_r} \quad (r = 1, \ldots, n) \]

(see also Firth (1993) for canonical links).

Modified working observations

The pseudo-responses suggest implementation using iterative re-weighted least squares, but with y_r replaced by y_r^*.

In terms of modified working observations,

\[ \zeta_r^* = \eta_r + \frac{y_r^* - \mu_r}{d_r} = \zeta_r - \xi_r \quad (r = 1, \ldots, n) , \]

where
→ ζ_r = η_r + (y_r − μ_r)/d_r is the working observation for maximum likelihood, and
→ ξ_r = −d'_r h_r / (2 w_r d_r) (Cordeiro & McCullagh, 1991).

Modified working observations

Modified iterative re-weighted least squares

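Iteration

\[ \tilde\beta_{(j+1)} = (X^T W_{(j)} X)^{-1} X^T W_{(j)} (\zeta_{(j)} - \xi_{(j)}) . \]

Cordeiro & McCullagh (1991): The O(n^{-1}) bias of the maximum likelihood estimator for generalized linear models is

\[ b_1/n = (X^T W X)^{-1} X^T W \xi . \]

Thus the iteration takes the form

\[ \tilde\beta_{(j+1)} = \hat\beta_{(j)} - b_{1,(j)}/n , \]

where β̂(j) = (X^T W(j) X)^{-1} X^T W(j) ζ(j) is the usual maximum likelihood re-weighted least squares update.

→ At each iteration, the bias-corrected maximum likelihood update is calculated at the previous value of the estimate, and this is repeated until convergence.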
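The modified iteration can be sketched for a binomial model with the logit link, where d_r = w_r = m_r π_r (1 − π_r) and d'_r / w_r = 1 − 2π_r. This is a plain-Python illustration under those assumptions, not the author's implementation; the helper `solve`, the function name, the zero starting value and the small data set are all choices made here.

```python
import math

def solve(A, b):
    """Solve the linear system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for c in range(n):
        pivot = max(range(c, n), key=lambda i: abs(M[i][c]))
        M[c], M[pivot] = M[pivot], M[c]
        for i in range(c + 1, n):
            f = M[i][c] / M[c][c]
            for j in range(c, n + 1):
                M[i][j] -= f * M[c][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def bias_reduced_logistic(X, y, m, tol=1e-10, max_iter=200):
    """Modified IWLS for a binomial-logit model with A^(E) adjustments."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    for _ in range(max_iter):
        eta = [sum(X[r][t] * beta[t] for t in range(p)) for r in range(n)]
        pi = [1.0 / (1.0 + math.exp(-e)) for e in eta]
        w = [m[r] * pi[r] * (1.0 - pi[r]) for r in range(n)]  # w_r = d_r for the logit link
        XtWX = [[sum(w[r] * X[r][t] * X[r][u] for r in range(n)) for u in range(p)]
                for t in range(p)]
        # Hat values h_r = w_r x_r^T (X^T W X)^{-1} x_r
        h = [w[r] * sum(a * v for a, v in zip(X[r], solve(XtWX, X[r]))) for r in range(n)]
        zeta = [eta[r] + (y[r] - m[r] * pi[r]) / w[r] for r in range(n)]     # ML working obs.
        xi = [-(1.0 - 2.0 * pi[r]) * h[r] / (2.0 * w[r]) for r in range(n)]  # xi_r
        rhs = [sum(X[r][t] * w[r] * (zeta[r] - xi[r]) for r in range(n)) for t in range(p)]
        new_beta = solve(XtWX, rhs)
        converged = max(abs(a - v) for a, v in zip(new_beta, beta)) < tol
        beta = new_beta
        if converged:
            break
    return beta

# Completely separated data (illustrative): the ML estimate of the slope is infinite.
X = [[1.0, -2.0], [1.0, -1.0], [1.0, 1.0], [1.0, 2.0]]
print(bias_reduced_logistic(X, y=[0, 0, 1, 1], m=[1, 1, 1, 1]))
```

For an intercept-only model with n Bernoulli observations the fixed point is log{(Σy + 1/2)/(n − Σy + 1/2)}, which gives a convenient check. A production implementation would also guard against weights that underflow to zero and might add step-halving.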

Penalized likelihood interpretation of bias reduction

Firth (1993): for a generalized linear model with canonical link, the adjustment of the scores by A^(E) corresponds to penalization of the likelihood by the Jeffreys (1946) invariant prior.

In models with non-canonical link and p ≥ 2, there need not exist a penalized log-likelihood l* such that ∇l*(β) ≡ U(β) + A^(E)(β).
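For the canonical-link case, the penalized likelihood interpretation can be checked numerically in the simplest setting, a single binomial observation with log-odds ψ. The sketch assumes the penalty (1/2) log F(ψ) with F = mπ(1 − π), and verifies that the gradient of the penalized log-likelihood vanishes at log{(y + 1/2)/(m − y + 1/2)}. Function names are hypothetical and the binomial coefficient, a constant in ψ, is omitted.

```python
import math

def penalized_loglik(psi, y, m):
    """Binomial log-likelihood in the log-odds psi plus (1/2) log F(psi)."""
    pi = 1.0 / (1.0 + math.exp(-psi))
    loglik = y * psi - m * math.log1p(math.exp(psi))
    fisher = m * pi * (1.0 - pi)
    return loglik + 0.5 * math.log(fisher)

def numerical_gradient(f, x, eps=1e-6):
    """Central finite-difference approximation to f'(x)."""
    return (f(x + eps) - f(x - eps)) / (2.0 * eps)

y, m = 0, 10
psi_tilde = math.log((y + 0.5) / (m - y + 0.5))
print(numerical_gradient(lambda psi: penalized_loglik(psi, y, m), psi_tilde))
```

The printed gradient should be zero up to finite-difference error, even though y = 0 makes the unpenalized likelihood monotone in ψ.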

Penalized likelihood interpretation of bias reduction

Theorem

Existence of penalized likelihoods

In the class of univariate generalized linear models, there exists a penalized log-likelihood l* such that ∇l*(β) ≡ U(β) + A^(E)(β), for all possible specifications of design matrix X, if and only if the inverse link derivatives d_r = 1/g'_r(μ_r) satisfy

\[ d_r \equiv \alpha_r \kappa_{2,r}^{\omega} \quad (r = 1, \ldots, n) , \]

where α_r (r = 1, ..., n) and ω do not depend on the model parameters.

Penalized likelihood interpretation of bias reduction

The form of the penalized likelihoods for bias-reduction

When d_r ≡ α_r κ_{2,r}^ω (r = 1, ..., n) for some ω and α,

\[ l^*(\beta) = \begin{cases} l(\beta) + \dfrac{1}{4}\sum_r \log \kappa_{2,r}(\beta)\, h_r & (\omega = 1/2) \\[2ex] l(\beta) + \dfrac{\omega}{4\omega - 2} \log |F(\beta)| & (\omega \neq 1/2) . \end{cases} \]

→ The canonical link is the special case ω = 1.
→ With ω = 0, the condition refers to models with identity link.
→ For ω = 1/2 the working weights, and hence F and H, do not depend on β.
→ If ω ∉ [0, 1/2], bias reduction also increases the value of |F(β)|. Thus, approximate confidence ellipsoids, based on asymptotic normality of the estimator, are reduced in volume.

Well-known constant adjustment schemes

Iterative adjustment of the responses and totals

Properties of the bias-reduced estimator for binary regression models

Illustration of the properties of the bias-reduced estimator

Binary regression models

Constant adjustment schemes

Examples: Haldane (1955) & Anscombe (1956), Hitchcock (1962), Gart and Zweifel (1967), Clogg et al. (1991).

Estimation of the model parameters can be conveniently performed by the following procedure:

1. Calculate the value of the adjusted responses and totals.
2. Proceed with usual estimation methods, treating the adjusted data as actual.

Disadvantages of constant adjustment schemes

The resultant estimators are not invariant to different representations of the data (for example, aggregated and disaggregated view).

No constant adjustment scheme can be optimal throughout the parameter space, in terms of improving the asymptotic properties of the estimators.
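The lack of invariance is easy to see in a toy case. The sketch below assumes a constant scheme that adds c to every response and 2c to every total (c = 1/2 is the Haldane and Anscombe choice) and an intercept-only model, for which maximum likelihood on the adjusted data is simply the ratio of summed responses to summed totals. The function name and the data are hypothetical.

```python
def adjusted_ml_probability(responses, totals, c=0.5):
    """ML estimate of a common probability after adding c to each response and 2c to each total."""
    adj_y = [y + c for y in responses]
    adj_m = [m + 2.0 * c for m in totals]
    return sum(adj_y) / sum(adj_m)

# The same 3 successes in 10 trials, recorded in two ways.
aggregated = adjusted_ml_probability([3], [10])
disaggregated = adjusted_ml_probability([1, 1, 1] + [0] * 7, [1] * 10)
print(aggregated, disaggregated)
```

The two representations carry identical information, yet the adjusted estimates differ because the constant is added once in the first case and ten times in the second.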

Parameter-dependent adjustments to the data

Consider the binomial regression model

\[ g(\pi_r) = \eta_r = \sum_{t=1}^{p} \beta_t x_{rt} \quad (r = 1, \ldots, n) , \]

where g : [0, 1] → ℝ.

If h_r d'_r / w_r were known constants, the bias-reduced estimates for A^(E) adjustments would result by maximum likelihood estimation with the actual responses replaced by

\[ y_r^* = y_r + h_r \frac{d'_r}{2 w_r} \quad (r = 1, \ldots, n) . \]

A set of bias-reducing pseudo-data representations

Dropping the subject index r, a pseudo-data representation is the pair {y*, m*}, where y* is the adjusted binomial response and m* the adjusted totals.

By the form of U_t^*,

Bias-reducing pseudo-data representation

\[ \left\{ y + h \frac{d'}{2w} ,\ m \right\} . \]

A set of bias-reducing pseudo-data representations

Actually, the form of U_t^* suggests a countable set of equivalent bias-reducing pseudo-data representations, where any pseudo-data representation in the set can be obtained from any other by the following operations:
→ add and subtract a quantity to either the adjusted responses or totals
→ move summands from the adjusted responses to the adjusted totals after division by −π.

Special case (Firth, 1992): For logistic regressions d'_r / w_r = (1 − 2π_r), thus

\[ U_t^* = \sum_{r=1}^{n} \left\{ y_r + \frac{1}{2} h_r - (m_r + h_r) \pi_r \right\} x_{rt} \quad (t = 1, \ldots, p) . \]

→ a bias-reducing pseudo-data representation is {y + h/2, m + h}.

An appropriate pseudo-data representation

Within this class of pseudo-data representations

Bias-reducing pseudo-data representation with 0 ≤ y* ≤ m*

\[ \left\{ y + \frac{1}{2} h \pi \left( 1 + \frac{m d'}{d^2} I_{d' > 0} \right) ,\ \ m + \frac{1}{2} h \left( 1 + \frac{m d'}{d^2} (\pi - I_{d' \le 0}) \right) \right\} . \]
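The representation above can be evaluated directly for the logit link, where d = mπ(1 − π) and d' = d(1 − 2π). The sketch below (hypothetical function name, illustrative values of y, m, π and h) computes the pair {y*, m*} and compares y* − m*π with the value implied by Firth's representation {y + h/2, m + h}; the two should agree, since both representations yield the same adjusted score.

```python
def pseudo_data_logit(y, m, pi, h):
    """Pseudo-response and pseudo-total with 0 <= y* <= m* for the logit link."""
    d = m * pi * (1.0 - pi)         # d = d mu / d eta
    d_prime = d * (1.0 - 2.0 * pi)  # d' = d^2 mu / d eta^2
    ratio = m * d_prime / d**2
    y_star = y + 0.5 * h * pi * (1.0 + ratio * (1.0 if d_prime > 0 else 0.0))
    m_star = m + 0.5 * h * (1.0 + ratio * (pi - (1.0 if d_prime <= 0 else 0.0)))
    return y_star, m_star

for pi in (0.2, 0.5, 0.9):
    y_star, m_star = pseudo_data_logit(y=0, m=4, pi=pi, h=0.6)
    # Last two printed values: y* - m* pi, and the same quantity from {y + h/2, m + h}.
    print(pi, y_star, m_star, y_star - m_star * pi, 0.5 * 0.6 - (4 + 0.6) * pi)
```

Unlike {y + h d'/(2w), m}, this choice keeps the pseudo-response between 0 and the pseudo-total, so standard binomial fitting software can be applied to the pseudo-data.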

Local maximum likelihood fits on pseudo-data representations

1. Update to {y*_{r,(j+1)}, m*_{r,(j+1)}} (r = 1, ..., n), evaluating all the quantities involved at β̃(j).
2. Use maximum likelihood to fit the model with responses y*_{r,(j+1)} and totals m*_{r,(j+1)} (r = 1, ..., n), using β̃(j) as starting value.

β̃(0): the maximum likelihood estimates after adding c > 0 to the responses and 2c to the binomial totals.

Convergence criterion:

\[ \sum_{t=1}^{p} \left| U_t^*(\tilde\beta_{(j+1)}) \right| \le \epsilon , \quad \epsilon > 0 . \]

Invariance of the estimates to the structure of the data

Equivalent representations of the binomial data

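Aggregated representation:

Response    Total    Covariates
$y_1$       $m_1$    $x_1$
...         ...      ...
$y_n$       $m_n$    $x_n$

Disaggregated representation:

Response       Total          Covariates
$z_{11}$       $l_{11}$       $x_1$
...            ...            ...
$z_{1k_1}$     $l_{1k_1}$     $x_1$
...            ...            ...
$z_{n1}$       $l_{n1}$       $x_n$
...            ...            ...
$z_{nk_n}$     $l_{nk_n}$     $x_n$

The two representations are linked by

$$y_r = \sum_{s=1}^{k_r} z_{rs}, \qquad m_r = \sum_{s=1}^{k_r} l_{rs} \qquad (r = 1, \ldots, n),$$

with $0 \le z_{rs} \le l_{rs}$.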

Adjusted responses and totals:

$$z^*_{rs} = z_{rs} + \tilde h_{rs} q_r \quad \text{and} \quad l^*_{rs} = l_{rs} + \tilde h_{rs} v_r \qquad (s = 1, \ldots, k_r;\ r = 1, \ldots, n),$$

where

→ $q_r \equiv q(\pi_r)$ and $v_r \equiv v(\pi_r)$, and

→ $\tilde h_{rs}$ is the diagonal element of the hat matrix for the disaggregated representation of the data.

Simple algebra gives $\sum_{s=1}^{k_r} \tilde h_{rs} = h_r$ $(r = 1, \ldots, n)$. Hence,

$$y^*_r = \sum_{s=1}^{k_r} z^*_{rs} \quad \text{and} \quad m^*_r = \sum_{s=1}^{k_r} l^*_{rs} \qquad (r = 1, \ldots, n).$$

→ $U^*_t$ results by the replacement of $y$ and $m$ by $y^*$ and $m^*$ in $U_t$. The bias-reduced estimator is invariant to the structure of the binomial data.
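The identity that the disaggregated hat values sum to the aggregated ones can be checked numerically. The sketch below assumes a logit link, so that the working weights are total × π(1 − π), and an arbitrary split of each total of 4 into sub-totals 1 and 3; the helper `hat_values` and the chosen parameter values are illustrative only.

```python
import numpy as np

def hat_values(X, totals, pi):
    """Hat-matrix diagonal for a binomial-logit model with the given totals."""
    w = totals * pi * (1.0 - pi)                   # logit working weights
    F_inv = np.linalg.inv(X.T @ (w[:, None] * X))  # inverse Fisher information
    return w * np.einsum("ij,jk,ik->i", X, F_inv, X)

beta = np.array([-1.0, 1.5])
x = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
m = np.full(5, 4.0)

# Aggregated representation: one row per covariate setting.
X_agg = np.column_stack([np.ones_like(x), x])
pi_agg = 1.0 / (1.0 + np.exp(-X_agg @ beta))
h = hat_values(X_agg, m, pi_agg)

# Disaggregated representation: each m_r = 4 split into l_r1 = 1 and l_r2 = 3.
group = np.repeat(np.arange(5), 2)
l = np.tile([1.0, 3.0], 5)
X_dis = X_agg[group]
h_tilde = hat_values(X_dis, l, pi_agg[group])

# Sum the disaggregated hat values within each covariate setting.
h_summed = np.bincount(group, weights=h_tilde)
print(np.allclose(h_summed, h))
```

The check works because both representations share the same Fisher information, and each $\tilde h_{rs}$ is proportional to its sub-total $l_{rs}$.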

Logistic regression

For logistic regressions, bias-reduction is equivalent to maximizing the penalized log-likelihood

$$l^*(\beta) = l(\beta) + \frac{1}{2} \log |F(\beta)| .$$

Heinze & Schemper (2002) empirically studied bias-reduction for logistic regressions.

Consider estimation by maximization of a penalized log-likelihood of the form

$$l^{(a)}(\beta) = l(\beta) + a \log |F(\beta)| , \quad \text{with fixed } a > 0 .$$

Logistic regression: Finiteness of the bias-reduced estimates

Theorem

If any component of η is infinite-valued, the penalized log-likelihood $l^{(a)}$ has value −∞.

Corollary (Finiteness of the bias-reduced estimates)

The vector $\tilde\beta^{(a)}$ that maximizes $l^{(a)}(\beta)$ has all of its elements finite, for positive $a$.
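The behaviour described by the theorem can be observed numerically on completely separated data. The sketch below assumes a logit link with Fisher information F = X'WX, W = diag{m π(1 − π)}, and moves β along a separating direction t·(−0.5, 1); the function name `penalized_loglik` and the data are illustrative assumptions.

```python
import numpy as np

def penalized_loglik(beta, X, y, m, a=0.5):
    """Return (l, l + a*log|F|) for a binomial-logit model, binomial constant dropped."""
    eta = X @ beta
    loglik = np.sum(y * eta - m * np.logaddexp(0.0, eta))
    pi = 1.0 / (1.0 + np.exp(-eta))
    w = m * pi * (1.0 - pi)
    _, logdet = np.linalg.slogdet(X.T @ (w[:, None] * X))
    return loglik, loglik + a * logdet

x = np.array([-2.0, -1.0, 0.0, 1.0, 2.0])
X = np.column_stack([np.ones_like(x), x])
y = np.array([0.0, 0.0, 0.0, 4.0, 4.0])  # completely separated at x = 0.5
m = np.full(5, 4.0)

direction = np.array([-0.5, 1.0])        # eta = t * (x - 0.5)
results = {}
for t in [5.0, 20.0, 50.0]:
    results[t] = penalized_loglik(t * direction, X, y, m)
    print(f"t = {t:5.1f}   l = {results[t][0]:12.6f}   l + 0.5 log|F| = {results[t][1]:12.6f}")
```

Along this direction the ordinary log-likelihood keeps increasing towards 0, while the penalty term drives the penalized version down, so the latter has its maximum at a finite β.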

Logistic regression: Shrinkage of the bias-reduced estimates towards the origin

Theorem (Maximizer of det I(γ))

If I(γ) is the Fisher information on γ, the function det I(γ) is globally maximized for γ = 0.

Theorem (Log-concavity of |F| on π)

Let π be the n-dimensional vector of binomial probabilities. If F(β) is the Fisher information on β, let $F'(\pi(\beta)) = F(\beta)$. Then, the function $f(\pi) = |F'(\pi)|$ is log-concave.
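Both statements can be spot-checked numerically. The sketch below assumes a logit link, for which the Fisher information is X' diag{m π(1 − π)} X, and uses a randomly generated design and random totals that are purely illustrative. It compares log|F| at the origin with its value at many random parameter vectors, and checks the midpoint form of log-concavity for one random pair of probability vectors.

```python
import numpy as np

rng = np.random.default_rng(0)
n, p = 12, 3
X = np.column_stack([np.ones(n), rng.normal(size=(n, p - 1))])  # illustrative design
m = rng.integers(1, 6, size=n).astype(float)                    # illustrative totals

def logdet_F_from_pi(pi):
    """log|F'(pi)| with F'(pi) = X' diag{m pi (1 - pi)} X (logit link assumed)."""
    w = m * pi * (1.0 - pi)
    return np.linalg.slogdet(X.T @ (w[:, None] * X))[1]

def logdet_F(beta):
    return logdet_F_from_pi(1.0 / (1.0 + np.exp(-X @ beta)))

# Maximizer at the origin: no random beta should beat beta = 0.
at_zero = logdet_F(np.zeros(p))
others = [logdet_F(rng.normal(scale=2.0, size=p)) for _ in range(1000)]
print(at_zero >= max(others))

# Midpoint form of log-concavity in pi.
pi1, pi2 = rng.uniform(0.01, 0.99, size=(2, n))
mid = logdet_F_from_pi(0.5 * (pi1 + pi2))
average = 0.5 * (logdet_F_from_pi(pi1) + logdet_F_from_pi(pi2))
print(mid >= average)
```

Since a penalty of the form a log|F(β)| is largest at the origin, maximizing the penalized log-likelihood pulls the estimates towards zero relative to maximum likelihood.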

A complete enumeration study

Binomial observations $y_1, \ldots, y_5$, each with total $m$, are made independently at the five design points $x = (-2, -1, 0, 1, 2)$.

Binary regression model: $g(\pi_r) = \beta_1 + \beta_2 x_r$ $(r = 1, \ldots, 5)$.

We consider the logit, probit and complementary log-log links.

The “true” parameter values are set to $\beta_1 = -1$ and $\beta_2 = 1.5$:

           π1        π2        π3        π4        π5
logit      0.01799   0.07586   0.26894   0.62246   0.88080
probit     0.00003   0.00621   0.15866   0.69146   0.97725
cloglog    0.01815   0.07881   0.30780   0.80770   0.99938

→ With probabilities this close to 0 and 1 at the extreme design points, there is an increased probability of infinite maximum likelihood estimates.

Through complete enumeration for m = 4, 8, 16 calculate:

bias and mean squared error of the bias-reduced estimator, and

coverage of the nominally 95% Wald-type confidence interval.
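The table of true probabilities follows directly from the inverse link functions evaluated at η = β1 + β2 x. A short sketch that recomputes it with the standard library only (the helper names are illustrative):

```python
import math

def logit_inv(eta):
    return 1.0 / (1.0 + math.exp(-eta))

def probit_inv(eta):
    # Standard normal distribution function via the error function.
    return 0.5 * (1.0 + math.erf(eta / math.sqrt(2.0)))

def cloglog_inv(eta):
    return 1.0 - math.exp(-math.exp(eta))

beta1, beta2 = -1.0, 1.5
design = [-2, -1, 0, 1, 2]
links = {"logit": logit_inv, "probit": probit_inv, "cloglog": cloglog_inv}

# One row of rounded probabilities per link, ordered as pi_1, ..., pi_5.
table = {
    name: [round(inverse(beta1 + beta2 * x), 5) for x in design]
    for name, inverse in links.items()
}
for name, row in table.items():
    print(f"{name:8s}" + "  ".join(f"{value:.5f}" for value in row))
```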

Results

The parenthesized probabilities refer to the event of encountering at least one infinite ML estimate for the corresponding m and link function. All the quantities for the ML estimator are calculated conditionally on the finiteness of its components.

ML: maximum likelihood; BR: bias reduction.

Link      m    (Pr. infinite ML)        Bias (×10²)          MSE (×10)        Coverage
                                        ML        BR         ML      BR       ML      BR
logit     4    (0.1621)          β1     -8.79     0.52       5.84    6.07     0.971   0.972
                                 β2     14.44    -0.13       3.62    4.73     0.960   0.939
          8    (0.0171)          β1    -12.94    -0.68       3.93    3.11     0.972   0.964
                                 β2     20.17     1.11       3.70    2.68     0.972   0.942
          16   (0.0002)          β1     -7.00    -0.19       1.75    1.42     0.961   0.957
                                 β2     10.55     0.29       1.59    1.16     0.960   0.948
probit    4    (0.5475)          β1     17.89    13.54       1.44    2.61     0.968   0.911
                                 β2    -18.84   -16.93       0.98    3.07     0.960   0.897
          8    (0.2296)          β1      0.80     3.24       1.07    1.82     0.964   0.938
                                 β2      6.08    -3.81       1.26    2.13     0.972   0.908
          16   (0.0411)          β1     -7.06     0.24       1.03    1.08     0.974   0.949
                                 β2     12.54    -0.17       1.39    1.22     0.973   0.933
cloglog   4    (0.3732)          β1      2.97     3.18       2.97    3.07     0.959   0.962
                                 β2     -2.93   -12.97       1.35    3.51     0.955   0.880
          8    (0.1000)          β1     -8.42     0.84       2.49    1.89     0.962   0.953
                                 β2     15.63    -5.40       2.33    2.36     0.972   0.906
          16   (0.0071)          β1     -6.45     0.17       1.32    0.98     0.964   0.957
                                 β2     13.13    -1.74       1.60    1.23     0.965   0.921

Shrinkage

Fitted probabilities using bias-reduced estimates versus fitted probabilities using

maximum likelihood estimates for the complementary log-log link and m = 4.

The marked point is (c, c) where c = 1 − exp(−1).

Discussion

A unified conceptual and computational framework for bias-reduction in exponential family models.

λ was assumed known, but this does not restrict the applicability of the results: the dispersion is usually estimated separately from the parameters β.

In categorical response models, bias-reduction appears desirable in terms of the properties of the estimators. However, this is not the case for every model.

→ Reduction in bias can be accompanied by inflation in variance.

Bias and point estimation are, of course, not strong statistical principles:

→ Bias relates to parameterization, so improving the bias violates exact equivariance under reparameterization.

Some current work

We investigate the properties of the bias-reduced estimators that occur with more general adjustments than $A^{(E)}$.

Wald-type approximate confidence intervals for the bias-reduced estimator perform poorly in small samples (mainly because of finiteness and shrinkage).

For logistic regressions, Heinze & Schemper (2002) and Bull et al. (2007) suggested profile penalized likelihood confidence intervals.

The penalized likelihood is not generally available for links other than logit. We examine the use of $U_t^{*T} F^{-1} U_t^{*}$.

References

Bull, S. B., Mak, C. and Greenwood, C. (2002). A modified score function estimator for multinomial logistic regression in small samples. Computational Statistics and Data Analysis, 39, 57–74.

Clogg, C. C., Rubin, D. B., Schenker, N., Schultz, B. and Weidman, L. (1991). Multiple imputation of industry and occupation codes in census public-use samples using Bayesian logistic regression. Journal of the American Statistical Association, 86, 68–78.

Cordeiro, G. M. and McCullagh, P. (1991). Bias correction in generalized linear models. Journal of the Royal Statistical Society, Series B: Methodological, 53, 629–643.

Cox, D. R. and Snell, E. J. (1968). A general definition of residuals (with discussion). Journal of the Royal Statistical Society, Series B: Methodological, 30, 248–275.

Firth, D. (1993). Bias re. In L. Fahrmeir, B. Francis, R. Gilchrist, and G. Tutz (Eds.), Advances in GLIM and Statistical Modelling: Proceedings of the GLIM 92 Conference, Munich, New York, pp. 91–100. Springer.

Gart, J. J. and Zweifel, J. R. (1967). On the bias of various estimators of the logit and its variance with application to quantal bioassay. Biometrika, 72, 179–190.

Heinze, G. and Schemper, M. (2002). A solution to the problem of separation in logistic regression. Statistics in Medicine, 21, 2409–2419.

Hitchcock, S. E. (1962). A note on the estimation of parameters of the logistic function using the minimum logit χ² method. Biometrika, 49, 250–252.

Kosmidis, I. and Firth, D. (2008). Bias reduction in exponential family non-linear models. Technical Report 8-5, CRiSM working paper series, University of Warwick. Accepted for publication in Biometrika.

Wei, B. (1997). Exponential Family Nonlinear Models. New York: Springer-Verlag Inc.