

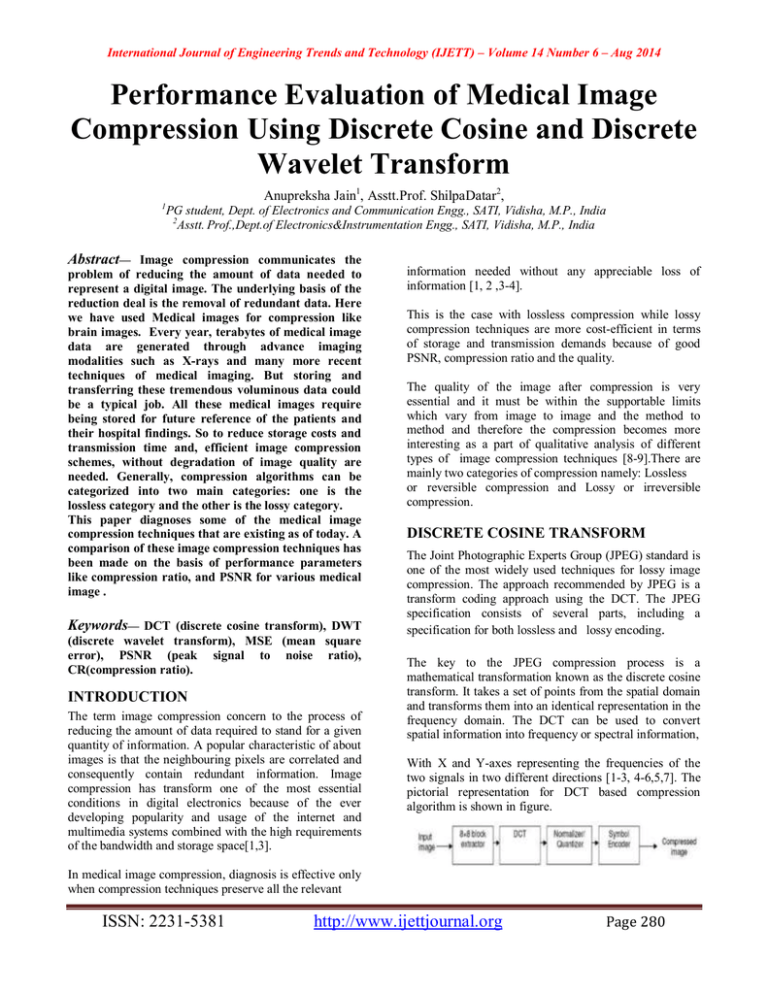

International Journal of Engineering Trends and Technology (IJETT) – Volume 14 Number 6 – Aug 2014

Performance Evaluation of Medical Image Compression Using Discrete Cosine and Discrete Wavelet Transform

Anupreksha Jain1, Asstt. Prof. Shilpa Datar2
1 PG student, Dept. of Electronics and Communication Engg., SATI, Vidisha, M.P., India
2 Asstt. Prof., Dept. of Electronics & Instrumentation Engg., SATI, Vidisha, M.P., India

Abstract— Image compression addresses the problem of reducing the amount of data needed to represent a digital image. The underlying basis of the reduction is the removal of redundant data. Here we compress medical images, such as brain images. Every year, terabytes of medical image data are generated by advanced imaging modalities such as X-ray imaging and many more recent medical imaging techniques. Storing and transferring such voluminous data can be a difficult task, yet all these medical images must be stored for future reference to the patients and their hospital findings. To reduce storage costs and transmission time, efficient image compression schemes that do not degrade image quality are needed. Compression algorithms can generally be divided into two categories: lossless and lossy. This paper examines some of the medical image compression techniques in use today. These techniques are compared on the basis of performance parameters such as compression ratio and PSNR for various medical images.

Keywords— DCT (discrete cosine transform), DWT (discrete wavelet transform), MSE (mean square error), PSNR (peak signal to noise ratio), CR (compression ratio).

INTRODUCTION
The term image compression refers to the process of reducing the amount of data required to represent a given quantity of information.
A common characteristic of most images is that neighbouring pixels are correlated and therefore contain redundant information. Image compression has become one of the most essential technologies in digital electronics because of the ever-growing popularity and usage of the internet and multimedia systems, combined with their high bandwidth and storage requirements [1, 3]. In medical image compression, diagnosis is effective only when compression techniques preserve all the relevant information needed, without any appreciable loss of information [1, 2, 3-4]. This is the case with lossless compression, whereas lossy compression techniques are more cost-efficient in terms of storage and transmission demands because they achieve a high compression ratio while keeping good PSNR and visual quality. The quality of the image after compression is essential and must stay within acceptable limits, which vary from image to image and from method to method; this makes a qualitative analysis of different image compression techniques worthwhile [8-9]. There are two main categories of compression: lossless (reversible) compression and lossy (irreversible) compression.

DISCRETE COSINE TRANSFORM
The Joint Photographic Experts Group (JPEG) standard is one of the most widely used techniques for lossy image compression. The approach recommended by JPEG is transform coding using the DCT. The JPEG specification consists of several parts, including specifications for both lossless and lossy encoding. The key to the JPEG compression process is a mathematical transformation known as the discrete cosine transform. It takes a set of points from the spatial domain and transforms them into an equivalent representation in the frequency domain. The DCT thus converts spatial information into frequency (spectral) information, with the two axes representing the frequencies of the signal in the horizontal and vertical directions [1-7]. The pictorial representation of the DCT-based compression algorithm is shown in Fig. 1.

ISSN: 2231-5381 http://www.ijettjournal.org Page 280

Fig. 1 Schematic diagram of JPEG compression

ALGORITHM FOR JPEG COMPRESSION
1. The input image is first subdivided into non-overlapping pixel blocks of size 8 x 8.
2. The blocks are processed from left to right and top to bottom. As each 8 x 8 block (sub-image) is processed, its 64 pixels are level shifted by subtracting $2^{k-1}$, where $2^k$ is the number of gray levels.
3. The discrete cosine transform of the block is then computed, and the resulting coefficients are simultaneously normalized and quantized.
4. After each block's DCT coefficients are quantized, the elements of T(u, v) are reordered according to a zig-zag pattern. The nonzero coefficients are then coded using a variable-length code generated by Huffman encoding.

DISCRETE WAVELET TRANSFORM
The wavelet transform has become an essential method for image compression. Wavelet-based coding gives a substantial improvement in picture quality at high compression ratios, mainly because of the better energy compaction property of wavelet transforms [1, 4, 8]. With the increasing use of multimedia technologies, image compression requires higher performance as well as new features. At lower bit rates, JPEG shows a distinct degradation in the quality of the reconstructed image. To correct this and other shortcomings, the JPEG committee initiated work on another standard, commonly known as JPEG 2000, which is based on wavelet decomposition.

Fig. 2 Schematic diagram of JPEG 2000 compression

ALGORITHM FOR JPEG 2000 COMPRESSION
1. The discrete wavelet transform is first applied to the input image.
2. The transform coefficients are then quantized.
3. Finally, an entropy coding technique is used to generate the output. In this work, Huffman encoding combined with run-length encoding is used for this purpose; the JPEG 2000 standard itself specifies context-based arithmetic coding.

Orthogonal or biorthogonal wavelet transforms are often used in image processing applications because they allow multiresolution analysis and do not yield redundant information.

DECOMPOSITION LEVEL SELECTION
The number of operations in the computation of the forward and inverse transforms increases with the number of decomposition levels. In applications such as searching image databases or transmitting images for progressive reconstruction, the resolution of the stored or transmitted images and the scale of the lowest useful approximation normally determine the number of transform levels.

SELECTION OF WAVELET
The wavelets chosen as the basis of the forward and inverse transforms affect all aspects of wavelet coding system design and performance. The ability of the wavelet to compact information into a small number of transform coefficients determines its compression and reconstruction performance. The choice of wavelet directly affects the computational complexity of the transforms and the system's ability to compress and reconstruct images with acceptable error. The most widely used expansion functions for wavelet-based compression are the Daubechies wavelets.

HUFFMAN CODING
Huffman coding is an efficient source coding algorithm for source symbols that are not equally probable. In 1952 Huffman suggested a variable-length encoding algorithm based on the source symbol probabilities $P(x_i)$, $i = 1, 2, \ldots, L$. The algorithm is optimal in the sense that the average number of bits required to represent the source symbols is a minimum, provided the prefix condition is met. It is the most popular technique for removing coding redundancy, and it creates variable-length codes whose code words are an integral number of bits long.
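As a rough illustration of this entropy-coding stage, the Python sketch below first run-length codes a sequence of quantized coefficients into (zero_run, value) pairs and then builds a Huffman code over those pairs by repeatedly merging the two least frequent nodes. It is a minimal sketch under stated assumptions: the function names, the (zero_run, value) pairing and the sample coefficients are hypothetical, and it is not the exact coder used in this paper or in the JPEG and JPEG 2000 standards.

```python
import heapq
from collections import Counter
from itertools import count


def run_length_encode(coeffs):
    """Turn a coefficient sequence into (zero_run, value) pairs.

    Hypothetical scheme: each nonzero value is paired with the number of
    zeros before it; a trailing run of zeros becomes (run, 0).
    """
    pairs, run = [], 0
    for c in coeffs:
        if c == 0:
            run += 1
        else:
            pairs.append((run, c))
            run = 0
    if run:
        pairs.append((run, 0))
    return pairs


def huffman_code(symbols):
    """Build a prefix-free Huffman code {symbol: bit string} from symbol counts."""
    freq = Counter(symbols)
    if not freq:
        return {}
    if len(freq) == 1:  # a lone symbol still needs a one-bit code word
        return {next(iter(freq)): "0"}
    tiebreak = count()  # unique ints keep heap tuples comparable on equal weights
    heap = [(w, next(tiebreak), {s: ""}) for s, w in freq.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Merge the two least frequent nodes; prefix their code words with 0 and 1.
        w1, _, c1 = heapq.heappop(heap)
        w2, _, c2 = heapq.heappop(heap)
        merged = {s: "0" + b for s, b in c1.items()}
        merged.update({s: "1" + b for s, b in c2.items()})
        heapq.heappush(heap, (w1 + w2, next(tiebreak), merged))
    return heap[0][2]


def encode(coeffs):
    """Run-length code the coefficients, then Huffman code the resulting pairs."""
    pairs = run_length_encode(coeffs)
    table = huffman_code(pairs)
    return "".join(table[p] for p in pairs), table


def decode(bits, table):
    """Invert encode(): match code words greedily, then expand the zero runs."""
    inverse = {b: s for s, b in table.items()}
    out, cur = [], ""
    for bit in bits:
        cur += bit
        if cur in inverse:  # prefix-free, so the first match is the right one
            run, value = inverse[cur]
            out.extend([0] * run)
            if value != 0:
                out.append(value)
            cur = ""
    return out


if __name__ == "__main__":
    # Hypothetical quantized coefficients in zig-zag order.
    zz = [12, 0, 0, -3, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0]
    bits, table = encode(zz)
    print(run_length_encode(zz))  # [(0, 12), (2, -3), (3, 1), (8, 0)]
    print(len(bits), "bits")      # 8 bits: four equally frequent pairs, 2 bits each
    assert decode(bits, table) == zz
```

Because every code word ends at a leaf of the merge tree, no code word is a prefix of another, which is why decode can emit a symbol as soon as the accumulated bits match a table entry. With a skewed distribution, such as huffman_code("aaaabbc"), the most frequent symbol receives the shortest code word.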
Huffman codes have the unique prefix property, which means that they can be decoded correctly despite being of variable length. Huffman codes are based on two observations regarding optimum prefix codes:
1. In an optimum code, symbols that occur more frequently (have a higher probability of occurrence) have shorter code words than symbols that occur less frequently.
2. In an optimum code, the two symbols that occur least often have code words of the same length.
The first observation is easy to justify. If more frequent symbols had longer code words than less frequent symbols, the average number of bits per symbol would be larger than if the assignment were reversed, so the code could not be optimal [1, 3].

PERFORMANCE PARAMETERS
Image compression research aims to reduce the number of bits required to represent an image by removing spatial and spectral redundancies as much as possible.

1) MSE
The mean squared error (MSE) is the average of the squared differences between the values of corresponding pixels in the two images. For an original image I and a reconstructed image K, both of size M x N, the MSE is given by

$$\mathrm{MSE} = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\bigl[I(i,j) - K(i,j)\bigr]^2$$

2) PSNR
Peak signal-to-noise ratio, often abbreviated PSNR, is an engineering term for the ratio between the maximum possible power of a signal and the power of the corrupting noise that affects the fidelity of its representation. It is expressed in decibels as

$$\mathrm{PSNR} = 10\log_{10}\frac{R^2}{\mathrm{MSE}}$$

where R is the maximum possible pixel value of the image (for example, 255 for 8-bit images).

3) COMPRESSION RATIO
Data redundancy is the central issue in digital image compression. If $n_1$ and $n_2$ denote the number of information-carrying units in the original and encoded images respectively, the compression ratio $C_R$ can be defined as

$$C_R = \frac{n_1}{n_2}$$

and the relative data redundancy of the original image can be defined as

$$R_D = 1 - \frac{1}{C_R}$$

PROPOSED METHODOLOGY
Using various compression techniques, our aim is to achieve approximately the desired compression ratio with sufficient PSNR. The two commonly used techniques, JPEG and JPEG 2000, are good enough for compression, but their performance can be improved by changing the encoding technique. JPEG 2000 gives better PSNR than JPEG. In both techniques, Huffman encoding is generally used. In the proposed work, the coding stage of the existing techniques has been changed: we have added run-length coding to both techniques. We use run-length encoding followed by Huffman encoding, and the significant coefficients are encoded separately using Huffman encoding. This encoding technique improves the PSNR while keeping a sufficient compression ratio.

For thresholding we use a parameter α, which can be selected manually between 0 and 1. The threshold level is determined from α together with Max I, the maximum intensity value present in the image, and Min I, the minimum intensity value present in the image.

In the quantization stage, we have reduced the number of levels to $2^n$, where n is the number of bits corresponding to each pixel of the compressed image (denoted Tbits in the results). We have used n = 4, 5 and 6 in our measurements.

RESULT AND ANALYSIS
The results of the two methods for the different images are shown below in tabular form.
Table 1 Result of image compression using DCT for medical image 1
Table 2 Result of image compression using DWT for medical image 1
Table 3 Result of image compression using DCT for medical image 2
Table 4 Result of image compression using DWT for medical image 2
Each table reports, for Tbits = 4, 5 and 6 (the number of bits corresponding to each pixel of the compressed image), the PSNR of the reconstructed image (dB) and the compression ratio. Tabulated values: 16.4135 7.0252 18.2842 7.0618 17.1680 6.0284 19.9230 6.3243 19.9358 5.0596 21.8884 5.4122 7.8571 27.9549 6.3454 27.3930 6.8094 28.6344 5.1512 28.1381 5.4801 7.4449 8.2631 27.4606 6.0817 6.1520 27.1471 6.6447 4.9325 27.8837 5.0527 27.5361 5.4832 6.9782 20.0251 6.0363 4.9439 7.3144 26.6518 26.3388 6.9301 18.5403 27.1235 26.5946 21.0794 21.6737 21.7731 20.4479

Fig. 3 (a) Original image (medical image 1, brain image); images reconstructed by DCT at (b) Tbits = 4, (c) Tbits = 5 and (d) Tbits = 6.
Fig. 4 (a) Original image (medical image 1, brain image); images reconstructed by DWT at (b) Tbits = 4, (c) Tbits = 5 and (d) Tbits = 6.
Fig. 5 (a) Original image (medical image 2, brain image); images reconstructed by DCT at (b) Tbits = 4, (c) Tbits = 5 and (d) Tbits = 6.
Fig. 6 (a) Original image (medical image 2, brain image); images reconstructed by DWT at (b) Tbits = 4, (c) Tbits = 5 and (d) Tbits = 6.

Another governing factor is the number of bits corresponding to each pixel of the compressed image (Tbits). For a higher value of Tbits, the compressed image has more quantization levels, so the PSNR is higher but the compression ratio is lower.

CONCLUSION
We have examined the compression methods most commonly used for image compression. The JPEG compression scheme is a standard technique that uses the DCT but suffers from blocking effects, whereas JPEG 2000 uses the DWT, gives good results and has no blocking effect. For both JPEG and JPEG 2000, an improved encoding technique can be used to obtain better results. We have used run-length encoding followed by Huffman encoding, with the significant coefficients encoded separately using Huffman encoding. With this encoding technique the compression ratio remains essentially unaffected while the PSNR increases. We have also examined the results for different numbers of bits corresponding to each pixel of the compressed image (Tbits). Lowering Tbits decreases the PSNR but increases the compression ratio, so the desired level of compression can be selected together with its corresponding PSNR. Comparing PSNR values at approximately equal compression ratios, DWT gives better PSNR than DCT, so DWT provides better image quality than DCT. The implemented strategies work sufficiently well to improve the PSNR at the desired compression ratio.

REFERENCES
[1] Smitha Joyce Pinto, Jayanand P. Gawande, "Performance analysis of medical image compression techniques," Proceedings of the Institute of Electrical and Electronics Engineers (IEEE), 2012.
[2] M. Antonini, M. Barlaud, P. Mathieu, I. Daubechies, "Image coding using wavelet transform," IEEE Trans. on Image Processing, vol. 1, no. 2, pp. 205-220, Apr. 1992.
[3] Sumathi Poobal, G. Ravindran, "The performance of fractal image compression on different imaging modalities using objective quality measures," International Journal of Science and Engineering Technology (IJEST), vol. 3, no. 1, pp. 525-530, Jan. 2011.
[4] M. A. Ansari, R. S. Anand, "Comparative analysis of medical image compression techniques and their performance evaluation for telemedicine," Proceedings of the International Conference on Cognition and Recognition, pp. 670-677.
[5] M. A. Ansari, R. S. Anand, "Performance analysis of medical image compression techniques with respect to the quality of compression," IET-UK International Conference on Information and Communication Technology in Electrical Sciences (ICTES 2007), Chennai, Tamil Nadu, India, pp. 743-750, Dec. 2007.
[6] N. Ahmed, T. Natarajan, K. R. Rao, "Discrete cosine transform," IEEE Trans. on Computers, vol. C-23, pp. 90-93, 1974.
[7] G. Wallace, "The JPEG still picture compression standard," vol. 34, pp. 30-44, 1991.
[8] Charilaos Christopoulos, "The JPEG 2000 still image coding system: an overview," IEEE Trans. on Consumer Electronics, vol. 46, no. 4, pp. 1103-1127, Nov. 2000.
[9] Sonja Grgic, Mislav Grgic, Branka Zovko-Cihlar, "Performance analysis of image compression using wavelets," IEEE Trans. on Industrial Electronics, vol. 48, no. 3, pp. 682-695, June 2001.