Video Coding Based on SSIM


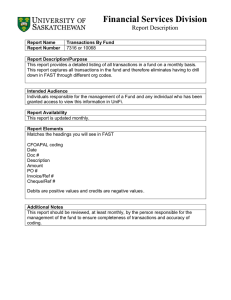

International Journal of Engineering Trends and Technology (IJETT) – Volume 10 Number 10 - Apr 2014
ISSN: 2231-5381, http://www.ijettjournal.org

Roselin Raju¹, Arun P.S²
¹ PG Scholar, ² Assistant Professor, Dept. of Electronics & Communication, Kerala University
Mar Baselios College of Engineering and Technology, Nalanchira, Trivandrum, India

Abstract: This paper proposes a new image quality assessment algorithm, the structural similarity index (SSIM), for video coding applications. It incorporates the sensitivity characteristics of the human visual system into video coding. The method can achieve significant gains at lower bit rates in terms of rate-SSIM performance. The similarity measure is based on three comparisons: luminance, contrast and structure. The structural comparison extracts the structural characteristics of an image, which can improve the quality of the video. Better quality video can be achieved by varying the quantisation parameter, and experimental results show how video quality varies with the quantisation parameter.

Keywords: Video coding, Structural Similarity Index, Peak Signal to Noise Ratio, Mean Square Error

I. INTRODUCTION

For several decades, video coding has been a very popular research area in video processing. It provides efficient solutions for storing and transmitting huge amounts of data. Basically, video coding is equivalent to video compression: its main aim is to reduce the size of the video without reducing its quality. The development of video coding standards such as MPEG-1, MPEG-2, H.263 and H.264/AVC has improved the efficiency of video coding. Of these, H.261, H.263 and H.263+ are used for video conferencing, while MPEG-1 and MPEG-2 are used for video broadcasting and archiving. These standards increase coding efficiency by removing statistical redundancy and temporal redundancy.
A brief overview of statistical and temporal redundancy is given in [1]. Several approaches are used to improve coding efficiency, among which perceptual video coding has found wide application in video processing. In modern video coding methods, temporal redundancy has been extensively exploited using motion compensation and inter-frame prediction. Incorporating the characteristics of the human visual system (HVS) into video coding techniques greatly reduces the bit rate without compromising quality. The main characteristics of the HVS are frequency sensitivity, response to luminance amplitude variations and response to object motion. A study of basic video coding algorithms is given in [5].

The first step in every video coding algorithm is the generation of the frame sequence. Normally, video sequences are in RGB format; converting RGB to YUV makes the coding much simpler. The video sequence is first split into frames, and the quality of each frame is assessed using image quality assessment algorithms. These algorithms are classified into two types: subjective evaluation and objective evaluation. The former is usually time consuming and inconvenient. The latter automatically predicts perceived image quality [4]. Objective quality metrics are further classified into full-reference, reduced-reference and no-reference assessments. Mean square error (MSE) is one of the most commonly used full-reference image quality assessment metrics.

II. RELATED WORKS

H. Chen [2] presented a paper on distributed video coding, a new paradigm of video coding based on temporal correlations between successive frames. C. Sun, H. J. Wang and H. Li [8]-[10] proposed a macroblock-level rate-distortion optimization scheme based on the perceptual features of captured video.
Based on the sensitivity features of the human visual system, that paper proposes three visual distortion sensitivity models: an attention model, a position model and a texture structure model. These models are used to detect the active, central and flat regions.

Zhi-Yi Mai proposed the work discussed in [11], [12], which is based on rate-distortion optimization. A rate-distortion optimization scheme is used to select the best prediction modes in the H.264 I-frame encoder. Rate-distortion optimization is based on the mean squared error (MSE) between the reconstructed blocks and the original blocks. That paper proposes two improved prediction mode selection methods based on the structural similarity index. The first method is used to reduce the error or distortion in the perceived video. The second method is used to improve the compression efficiency.

Dominique Brunet presented a paper on quality assessment, from error sensitivity to structural similarity, as discussed in [6]. Mean square error is simple to calculate and has a clear physical meaning. It is computed by averaging the squared intensity differences between the transmitted (reference) image and the distorted image. Its disadvantage is that it does not incorporate the characteristics of the human visual system (HVS).

In [7], better perceived image quality is achieved through a proper bit allocation process. The psychovisual model consists of a motion attention model and a texture structure model, which are used for bit allocation. The idea behind the bit allocation process is to allocate more bits to the video areas where more distortion occurs and fewer bits to the areas where less distortion occurs.

Much research has gone into developing quality assessment algorithms that incorporate the characteristics of the human visual system.
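The MSE computation described above, together with the PSNR figure derived from it (PSNR is listed in the keywords and reported in the results), can be sketched as follows. This is a minimal illustration and not the authors' MATLAB code: the function names `mse` and `psnr`, the list-of-rows frame representation and the 8-bit peak value of 255 are all assumptions made for the example.

```python
import math


def mse(reference, distorted):
    """Mean squared error between two equally sized frames.

    Each frame is a list of rows, each row a list of pixel intensities
    (a hypothetical representation chosen for this sketch).
    """
    if len(reference) != len(distorted):
        raise ValueError("frames must have the same number of rows")
    total = 0.0
    count = 0
    for ref_row, dist_row in zip(reference, distorted):
        if len(ref_row) != len(dist_row):
            raise ValueError("rows must have the same length")
        for r, d in zip(ref_row, dist_row):
            total += (r - d) ** 2  # squared intensity difference
            count += 1
    if count == 0:
        raise ValueError("frames must not be empty")
    return total / count  # average over all pixels


def psnr(reference, distorted, peak=255.0):
    """Peak signal-to-noise ratio in dB; peak=255 assumes 8-bit samples."""
    err = mse(reference, distorted)
    if err == 0:
        return math.inf  # identical frames: no noise
    return 10.0 * math.log10(peak ** 2 / err)


if __name__ == "__main__":
    ref = [[100, 100], [100, 100]]
    dist = [[110, 90], [100, 100]]
    print("MSE :", mse(ref, dist))
    print("PSNR:", psnr(ref, dist), "dB")
```

Both functions treat every pixel error alike regardless of where it occurs in the frame, which is the limitation that motivates a structure-aware metric.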
Based on the properties of the human visual system, a new paradigm for quality assessment, the structural similarity index, was proposed. Section III summarizes the advantages of the structural similarity index, whose value varies from 0 to 1. The human visual system is sensitive to relative luminance change rather than absolute luminance change.

III. STRUCTURAL SIMILARITY INDEX (SSIM)

Pixels of highly structured images exhibit strong dependencies, and these dependencies carry important information about the structure of the objects. The mean square error metric is independent of image structure; most existing quality measures based on error sensitivity do not depend on the structure of the object. The structural similarity index (SSIM), in contrast, compares the structure of the transmitted image and the distorted image. This metric also incorporates the characteristics of the human visual system, thereby providing a good approximation to perceived image quality. The mean square error approach is a bottom-up approach, whereas the structural similarity index is a top-down approach. This top-down approach also evaluates the structural changes between two complex structured signals.

Each frame in the sequence consists of one luminance and two chrominance components. The human visual system is more sensitive to the luminance component than to the chrominance components. The luminance of an image is the product of its illumination and reflectance components, but the structure of the image is independent of illumination. Consequently, to extract structural information, the influence of the illumination component has to be separated out. Structural information in the image gives the structure of the objects in the image. The system diagram for the structural similarity index is given in Fig. 1. Consider two image signals x and y, one of high quality and the other of lower quality.
Initially, the system separates the luminance, contrast and structure components of the two image signals. First, the luminance of the two signals is compared. Then the mean intensities are removed from the signals and the contrast comparison is performed. After that, the signals are normalized by dividing each signal by its standard deviation. Finally, the structural comparison is performed on the normalized signals. Similarity is measured by combining the three components. Boundedness, uniqueness and symmetry are the properties of SSIM, and the SSIM value of every video sequence must satisfy these properties. The structural similarity index is calculated using the following equation:

$$\mathrm{SSIM}(x,y) = [l(x,y)]^{\alpha}\,[c(x,y)]^{\beta}\,[s(x,y)]^{\gamma} \qquad (1)$$

where l(x,y) represents the luminance comparison, c(x,y) represents the contrast comparison and s(x,y) represents the structural comparison. The exponents α, β and γ adjust the relative importance of the three components. The values of these symbols [...]

Fig. 1 Block Diagram of Structural Similarity Index (SSIM) [6]

IV. PROPOSED SYSTEM

A. Encoder Section

[Block diagram: Input video, DCT, SSIM, Encoder, Bit stream output]

B. Decoder Section

[Block diagram: Inverse DCT, Encoded output, Decoder, Output]

In the encoder section, every frame sequence in RGB format is converted into a YUV sequence. The coefficients obtained after performing the DCT are stored in 8x8 matrices. The transformed coefficients are compared with the coefficients of the pixels in the reference frames, and structural similarity is measured between the reference frame and the distorted frames. The inverse operation is performed in the decoder section.

V. RESULTS AND DISCUSSION

Simulation is performed in MATLAB (2013) on different video sequences in CIF format. Image quality is assessed for each I, P and B frame, and the SSIM value is calculated for each of the video sequences. Performance can be evaluated for each frame.
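The SSIM calculation of Section III (luminance, contrast and structure comparisons combined with exponents α, β, γ) can be sketched as below. This is an illustrative sketch under stated assumptions, not the authors' implementation: the component formulas and the stabilising constants C1 = (K1·L)², C2 = (K2·L)², C3 = C2/2 with K1 = 0.01 and K2 = 0.03 follow the definitions in [6], the exponents default to 1, and the statistics are taken over one whole block, whereas [6] applies the index in local windows and averages the results. The function names are hypothetical.

```python
import math


def ssim_components(x, y, dynamic_range=255.0, k1=0.01, k2=0.03):
    """Return (l, c, s) for two equal-length sequences of pixel values.

    Constants follow [6]; they are assumptions here, since the text
    does not state the values used in the experiments.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    # Unbiased variance and covariance estimates.
    var_x = sum((a - mu_x) ** 2 for a in x) / (n - 1)
    var_y = sum((b - mu_y) ** 2 for b in y) / (n - 1)
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / (n - 1)
    sigma_x = math.sqrt(var_x)
    sigma_y = math.sqrt(var_y)
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    c3 = c2 / 2.0
    lum = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)    # luminance
    con = (2 * sigma_x * sigma_y + c2) / (var_x + var_y + c2)      # contrast
    struct = (cov_xy + c3) / (sigma_x * sigma_y + c3)              # structure
    return lum, con, struct


def ssim(x, y, alpha=1.0, beta=1.0, gamma=1.0,
         dynamic_range=255.0, k1=0.01, k2=0.03):
    """Combine the three comparisons as l^alpha * c^beta * s^gamma."""
    lum, con, struct = ssim_components(x, y, dynamic_range, k1, k2)
    # Note: struct can be negative for anti-correlated signals, so a
    # non-integer gamma would need extra handling by the caller.
    return (lum ** alpha) * (con ** beta) * (struct ** gamma)


if __name__ == "__main__":
    original = [10, 20, 30, 40, 50, 60, 70, 80]
    degraded = [12, 18, 33, 41, 47, 66, 69, 85]
    print("SSIM(original, original):", ssim(original, original))
    print("SSIM(original, degraded):", ssim(original, degraded))
```

Comparing a block with itself gives a value of 1 up to floating-point rounding, and swapping the arguments does not change the result, which matches the uniqueness and symmetry properties listed in Section III.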
Each frame is considered as an image, and each image is divided into macroblocks of variable block size.

Fig. 2 Original frame & DCT transform result

The following graph shows the variation of the image quality of a P frame with the division into macroblocks.

Fig. 3 PSNR versus No. of blocks for P frame (QP-30)

Similarly, PSNR versus number of blocks can also be obtained for P and B frames. The quality of the video can also be varied by varying the quantisation parameter. Experimental results show that, for a quantisation parameter of 36, the proposed approach provides better quality video. Performance evaluation also shows that the proposed approach does not give better quality for quantisation parameters above or below 36. Performance evaluation for different quantisation parameters is shown in the tables below.

| Parameters | I frame | P frame | B frame |
|---|---|---|---|
| No. of blocks | - | 54 | 27 |
| Luma PSNR | 42.803 dB | 34.828 dB | 34.1163 dB |
| Chroma PSNR | 43.0735 dB | 43.8125 dB | 39.7412 dB |
| | 41.7122 dB | 40.3192 dB | 42.0975 dB |
| Total rate | 2.7826 bpp | 0.63585 bpp | 0.18316 bpp |

Table 1 Comparison of different frames for a quantisation parameter of 36

| Parameters | I frame | P frame | B frame |
|---|---|---|---|
| No. of blocks | - | 76 | 36 |
| Luma PSNR | 34.857 dB | 33.9528 dB | 33.0944 dB |
| Chroma PSNR | 37.2297 dB | 38.7243 dB | 37.5946 dB |
| Total rate | 1.1615 bpp | 0.75738 bpp | 0.18535 bpp |

Table 2 Comparison of different frames for a quantisation parameter of 29

| Parameters | I frame | P frame | B frame |
|---|---|---|---|
| No. of blocks | - | 27 | 4 |
| Luma PSNR | 27.9979 dB | 27.3599 dB | 26.8857 dB |
| Chroma PSNR | 33.3316 dB | 33.1308 dB | 33.2892 dB |
| | 34.2128 dB | 34.0324 dB | 34.1167 dB |
| Total rate | 0.3997 bpp | 0.10156 bpp | 0.0665164 bpp |

Table 3 Comparison of different frames for a quantisation parameter of 40

| Video sequence | No. of frames | Resolution | File format | SSIM |
|---|---|---|---|---|
| Foreman | 300 | 352x288 | YUV file | 0.123 |
| Football | 125 | 352x240 | YUV file | 0.1109 |
| Crowd | 125 | 352x288 | YUV file | 0.0079 |
| Garden | 115 | 352x240 | YUV file | 0.0187 |

Table 4 SSIM values for different video sequences

VI. CONCLUSION

We propose an image quality assessment metric, the structural similarity index, for perceptual video coding. The novelty of this scheme lies in the comparison of three components. The proposed scheme demonstrates superior performance compared to other image quality assessment algorithms. Improved visual quality at a low bit rate is also achieved by the proposed scheme.

ACKNOWLEDGMENT

We would like to thank the Almighty for sustaining the enthusiasm with which we plunged into this endeavor. We are grateful to our families for supporting us and praying for us. We take this opportunity to express our sincere and deep gratitude to the professors of MBCET for their valuable guidance in preparing this paper. Our heartfelt thanks go to all our friends who supported us in the successful completion of this paper.

REFERENCES

[1] S. Wang, A. Rehman, and Z. Wang, “Perceptual video coding based on SSIM-inspired divisive normalization,” IEEE Trans. Image Process., vol. 22, no. 4, Apr. 2013.
[2] H. Chen, Y. Huang, P. Su, and T. Ou, “Improving video coding quality by perceptual rate-distortion optimization,” in Proc. IEEE Int. Conf. Multimedia Expo, Jul. 2010, pp. 1287–1292.
[3] S. Channappayya, A. C. Bovik, and R. W. Heath, Jr., “Rate bounds on SSIM index of quantized images,” IEEE Trans. Image Process., vol. 17, no. 9, pp. 1624–1639, Sep. 2008.
[4] J. Chen, J. Zheng, and Y. He, “Macroblock-level adaptive frequency weighting for perceptual video coding,” IEEE Trans. Consum. Electron., vol. 53, no. 2, pp. 775–781, May 2007.
[5] R. J. Clarke, “Image and video compression: A survey,” Department of Computing and Electrical Engineering, Heriot-Watt University, Riccarton, Edinburgh EH14 4AS, Scotland, 1999.
[6] Z. Wang, A. C. Bovik, H. R. Sheikh, and E. P. Simoncelli, “Image quality assessment: From error visibility to structural similarity,” IEEE Trans. Image Process., vol. 13, no. 4, pp. 600–612, Apr. 2004.
[7] C.-W. Tang, C.-H. Chen, Y.-H. Yu, and C.-J. Tsai, “Visual sensitivity guided bit allocation for video coding,” IEEE Trans. Multimedia, vol. 8, no. 1, pp. 11–18, Feb. 2006.
[8] C. Sun, H.-J. Wang, and H. Li, “Macroblock-level rate-distortion optimization with perceptual adjustment for video coding,” in Proc. IEEE Data Compress. Conf., Mar. 2008, p. 546.
[9] J. Chen, J. Zheng, and Y. He, “Macroblock-level adaptive frequency weighting for perceptual video coding,” IEEE Trans. Consum. Electron., vol. 53, no. 2, pp. 775–781, May 2007.
[10] A. Tanizawa and T. Chujoh, “Adaptive quantization matrix selection,” Toshiba, Geneva, Switzerland, Tech. Rep. T05-SG16-060403-D-0266, Apr. 2006.
[11] Z. Mai, C. Yang, L. Po, and S. Xie, “A new rate-distortion optimization using structural information in H.264 I-frame encoder,” in Proc. ACIVS, 2005, pp. 435–441.
[12] X. Zhao, J. Sun, S. Ma, and W. Gao, “Novel statistical modeling, analysis and implementation of rate-distortion estimation for H.264/AVC coders,” IEEE Trans. Circuits Syst. Video Technol., vol. 20, no. 5, pp. 647–660, May 2010.
[13] E. Y. Lam and J. W. Goodman, “A mathematical analysis of the DCT coefficient distributions for images,” IEEE Trans. Image Process., vol. 9, no. 10, pp. 1661–1666, Oct. 2000.
[14] Joint Video Team (JVT) Reference Software. (2010) [Online]. Available: http://iphome.hhi.de/suehring/tml/download/old-jm