
Spatially Distributed Sampling and Reconstruction of High-Dimensional Signals

Cheng Cheng
Department of Mathematics
University of Central Florida
Orlando, FL 32816
Email: cheng.cheng@knights.ucf.edu

Yingchun Jiang
Department of Mathematics
Guilin University of Electronic Technology
Guilin, Guangxi 541004
Email: guilinjiang@126.com

Qiyu Sun
Department of Mathematics
University of Central Florida
Orlando, FL 32816
Email: qiyu.sun@ucf.edu

Abstract—A spatially distributed system for signal sampling

and reconstruction consists of a huge number of small sensing devices with limited computing and telecommunication capabilities. In this paper, we discuss the stability of such a sampling/reconstruction system and develop a distributed algorithm for fast reconstruction of high-dimensional signals.

I. INTRODUCTION

Consider high-dimensional signals with finite rate of innovation,

$$f(t)=\sum_{\lambda\in\Lambda}c_\lambda\,\phi_\lambda(t),\quad t\in\mathbb{R}^n,\tag{I.1}$$

where $c_\lambda$ and $\phi_\lambda$ are, respectively, the amplitude and the impulse response of the signal generating equipment located at the innovative position $\lambda\in\Lambda$ ([1], [2], [3], [4], [5], [6], [7], [8], [9], [10]).

The parametric representation (I.1) retains some of the simplicity and structure of the band-limited (shift-invariant, reproducing kernel) model ([11], [12], [13], [14]), and it is more flexible for approximating real data ([1], [2], [3], [6]). In this paper, we further assume that the set $\Lambda$ of innovative positions is contained in a low-dimensional manifold $M_d\subset\mathbb{R}^n$ with nonnegative Ricci curvature,

$$\Lambda\subset M_d\subset\mathbb{R}^n.$$

Then a signal of the form (I.1) is a superposition of impulse responses of generating equipment at innovative positions that can be described by far fewer parameters than the dimension $n$ of the signal.

Next we describe spatially distributed systems (SDSs) for signal sampling and reconstruction. Let a sensing device be located at every $\gamma\in\Gamma\subset M_d$, and let $\psi_\gamma$ be the impulse response of the sensing device at $\gamma\in\Gamma$. For such an SDS, the signal $f$ in (I.1) generates the sampling data

$$y_\gamma:=\langle f,\psi_\gamma\rangle=\sum_{\lambda\in\Lambda}\langle\psi_\gamma,\phi_\lambda\rangle c_\lambda,\quad\gamma\in\Gamma,\tag{I.2}$$

for every sensing device.

Most well-studied signal reconstruction systems are centralized ([1], [3], [7], [9], [11], [12], [13], [14]). In such systems, all sampling data are sent to the headquarters, where the original signal $f$ is reconstructed by solving a large system of linear equations,

$$Sc=y,\tag{I.3}$$

where $S:=(\langle\psi_\gamma,\phi_\lambda\rangle)_{\gamma\in\Gamma,\lambda\in\Lambda}$ is the sensing matrix of the SDS, $c:=(c_\lambda)_{\lambda\in\Lambda}$ is the amplitude vector of the signal $f$, and $y:=(y_\gamma)_{\gamma\in\Gamma}$ is the sampling data vector in (I.2).

Recent technological advances have opened up possibilities for SDSs consisting of a huge number of sensing devices with some computing and telecommunication capabilities. In an SDS, the device at every sensing location has limited telecommunication ability but some computing power. Unlike in a centralized system, each device communicates only with its neighboring devices, and it can use the sampling data received from them to make a first guess of the signal near its location. The reconstruction procedure is for every device to send its first guess of the signal to its neighboring devices and to continue this predictor-corrector process until the signal is approximately recovered. An SDS would give unprecedented capabilities, especially when creating a reliable communication network is impracticable and/or establishing a global center to collect and process all the information presents formidable obstacles (as in big-data problems). Meanwhile, for an SDS to be practicable, many important and challenging problems need to be solved, such as designing a system for stable signal reconstruction in the presence of sampling noise and failure of some sensing devices, and developing signal reconstruction algorithms that do not exceed the communication and computing capacities of each device.

In this paper, we introduce a local stability criterion for an SDS to recover high-dimensional signals in the presence of sampling noise, and we also develop a distributed signal reconstruction algorithm that fulfills the communication and computing requirements of each device.

978-1-4673-7353-1/15/$31.00 ©2015 IEEE

II. SPATIALLY DISTRIBUTED SYSTEMS FOR SIGNAL SAMPLING AND RECONSTRUCTION

In an SDS for signal sampling and reconstruction, there should not be too many sensing devices in any spatial unit, which means that the set $\Gamma$ of device locations is relatively separated,

$$D(\Gamma):=\sup_{t\in\mathbb{R}^n}\sum_{\gamma\in\Gamma}\chi_{\gamma+[0,1]^n}(t)<\infty.\tag{II.1}$$

Recall from the Bishop-Gromov inequality that balls in a manifold $M_d$ with nonnegative Ricci curvature grow no faster than balls in the Euclidean space $\mathbb{R}^d$ [15]. So we use the Euclidean distance on $\mathbb{R}^n$, instead of the geodesic distance on $M_d$, to measure the distance between two sensing devices located in $\Gamma\subset M_d$. This, together with (II.1), leads to our assumption on the set $\Gamma\subset M_d$ of device locations:

Assumption 1: The counting measure $\mu$ on $\Gamma$ has polynomial growth, i.e., there exists a positive constant $D_1$ such that

$$\mu(B(\gamma,r))\le D_1(1+r)^d\quad\text{for all }\gamma\in\Gamma\text{ and }r\ge0,\tag{II.2}$$

where $B(\gamma,r):=\{\gamma'\in\Gamma:\ |\gamma-\gamma'|\le r\}$ is the ball with center $\gamma$ and radius $r$, and $d$ is the dimension of the manifold $M_d$.

We call the minimal constant $D_1$ for which (II.2) holds the maximal sampling density of the SDS, cf. [3], [11], [12].

For cost-effectiveness of an SDS, sensing devices at different locations could be chosen to have local sensing ability and similar specifications. So we assume the following for the sensing devices:

Assumption 2: The impulse response $\psi_\gamma$ of the sensing device located at $\gamma\in\Gamma$ is enveloped by the $\gamma$-shift of a bounded signal $h$ with finite duration,

$$|\psi_\gamma(t)|\le h(t-\gamma),\quad\gamma\in\Gamma.\tag{II.3}$$

Next, let us discuss the signals being reconstructed from an SDS. As a signal with finite rate of innovation has finitely many innovative positions in any spatial unit, the set $\Lambda$ of innovative positions is relatively separated. In this proceedings paper, we focus on the exact sampling problem:

Assumption 3: There is a sensing device located at every innovative position, i.e.,

$$\Gamma=\Lambda.\tag{II.4}$$

The reader may refer to [16] for general sampling problems.

Recall that an SDS has limited telecommunication capacity and computing ability. Thus, for fast signal reconstruction in an SDS, signal generating equipment far away from a sensing location should not have a strong influence on the sampling data collected there. So we make the following assumption on the impulse responses of signal generating equipment:

Assumption 4: There exists a signal $g$ with polynomial decay at infinity,

$$\|g\|_{\infty,\alpha}:=\sup_{t\in\mathbb{R}^n}(1+|t|)^\alpha|g(t)|<\infty\quad\text{for some }\alpha>d,\tag{II.5}$$

such that the impulse response $\phi_\lambda$ of the signal generating equipment located at the innovative position $\lambda\in\Lambda$ is enveloped by the $\lambda$-shift of the signal $g$,

$$|\phi_\lambda(t)|\le g(t-\lambda),\quad\lambda\in\Lambda.\tag{II.6}$$

By (II.3), (II.4), (II.5) and (II.6), there exists a positive constant $C$ such that

$$|\langle\psi_\gamma,\phi_\lambda\rangle|\le\int_{\mathbb{R}^n}h(t-\gamma)\,g(t-\lambda)\,dt\le C(1+|\lambda-\gamma|)^{-\alpha}\quad\text{for all }\gamma,\lambda\in\Gamma.$$

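Then the sensing matrix $S:=(\langle\psi_\gamma,\phi_\lambda\rangle)_{\gamma,\lambda\in\Gamma}$ of our SDS has polynomial off-diagonal decay. In particular, it belongs to the Jaffard class $J_\alpha(\Gamma)$ of matrices,

$$S\in J_\alpha(\Gamma),\quad\alpha>d,\tag{II.7}$$

where

$$J_\alpha(\Gamma):=\Big\{A=(a(\gamma,\lambda))_{\gamma,\lambda\in\Gamma}:\ \|A\|_{J_\alpha(\Gamma)}<\infty\Big\}$$

and

$$\|A\|_{J_\alpha(\Gamma)}:=\sup_{\gamma,\lambda\in\Gamma}(1+|\gamma-\lambda|)^\alpha|a(\gamma,\lambda)|$$

([17], [18], [19], [20]).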
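To make the Jaffard norm concrete, the following Python/NumPy sketch evaluates $\|A\|_{J_\alpha(\Gamma)}$ for a finite matrix indexed by finitely many points. The function name `jaffard_norm` and the Gaussian test matrix are illustrative assumptions, not part of the paper. Every finite matrix has a finite Jaffard norm, so in practice one would check that the value stays bounded as $\Gamma$ grows.

```python
import numpy as np

def jaffard_norm(A, points, alpha):
    """sup over (gamma, lambda) of (1 + |gamma - lambda|)^alpha * |a(gamma, lambda)|
    for a finite matrix A whose rows/columns are indexed by `points`."""
    points = np.asarray(points, dtype=float)
    if points.ndim == 1:
        points = points[:, None]  # treat a 1-D array as points on the real line
    # Pairwise Euclidean distances |gamma - lambda|.
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    return float(np.max((1.0 + dist) ** alpha * np.abs(A)))

# Hypothetical example: points 0, 1, ..., 19 on the line, Gaussian off-diagonal decay.
pts = np.arange(20.0)
S = np.exp(-np.abs(pts[:, None] - pts[None, :]) ** 2 / 4.0)
print(jaffard_norm(S, pts, alpha=3.0))
```

For this Gaussian example the supremum is attained at distance 2, where $(1+2)^3e^{-1}\approx 9.93$. Enlarging the grid does not change that value, which is the behaviour (II.7) asks for.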

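Let $\ell^p(\Gamma)$, $1\le p\le\infty$, be the space of all $p$-summable sequences on $\Gamma$, with norm denoted by $\|\cdot\|_p$. Observe from the polynomial growth property (II.2) that

$$\begin{aligned}
\sup_{\gamma\in\Gamma}\sum_{\lambda\in\Gamma}(1+|\gamma-\lambda|)^{-\alpha}
&\le\sup_{\gamma\in\Gamma}\sum_{m\ge0}(m+1)^{-\alpha}\sum_{m\le|\gamma-\lambda|<m+1}1\\
&\le\sup_{\gamma\in\Gamma}\sum_{m\ge0}\mu\big(B(\gamma,m+1)\big)\big((m+1)^{-\alpha}-(m+2)^{-\alpha}\big)\\
&\le D_1\sum_{m=0}^{\infty}(m+2)^d\big((m+1)^{-\alpha}-(m+2)^{-\alpha}\big)\\
&=2^dD_1+D_1\lim_{M\to+\infty}\Big(\sum_{m=1}^{M}(m+1)^{-\alpha}(m+2)^d-\sum_{m=1}^{M+1}(m+1)^{d-\alpha}\Big)\\
&=D_1\Big(2^d+\sum_{m=1}^{\infty}(m+1)^{-\alpha}\big((m+2)^d-(m+1)^d\big)\Big)\\
&\le\frac{2^{d-1}(2\alpha-d)D_1}{\alpha-d}.
\end{aligned}\tag{II.8}$$

Here the second inequality follows from summation by parts, since $\#\{\lambda\in\Gamma:\ |\gamma-\lambda|<m+1\}\le\mu(B(\gamma,m+1))$, and the last one from $(m+2)^d-(m+1)^d\le d\,2^{d-1}(m+1)^{d-1}$ and $\sum_{m\ge1}(m+1)^{d-1-\alpha}\le1/(\alpha-d)$.

Then, by Schur's test, a matrix in $J_\alpha(\Gamma)$, $\alpha>d$, defines a bounded operator on $\ell^p(\Gamma)$, $1\le p\le\infty$.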
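The estimate (II.8) can be checked numerically on a small example. The sketch below assumes that $\Gamma$ is a finite window of the integer lattice in $\mathbb{R}^1$ (so $d=1$). It computes the smallest constant $D_1$ in (II.2) for that finite set and compares the left-hand side of (II.8) with its bound. The helper names are hypothetical.

```python
import numpy as np

def pairwise_dist(points):
    pts = np.asarray(points, dtype=float).reshape(len(points), -1)
    return np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)

def growth_constant(points, d):
    """Smallest D1 with mu(B(gamma, r)) <= D1 (1 + r)^d on this finite set.
    The ratio only needs checking at radii equal to pairwise distances, where the
    counting function jumps; with ties, the last tied index carries the full count."""
    best = 0.0
    for row in pairwise_dist(points):
        r = np.sort(row)
        counts = np.arange(1, len(r) + 1)
        best = max(best, float(np.max(counts / (1.0 + r) ** d)))
    return best

def decay_sum(points, alpha):
    """Left-hand side of (II.8): sup_gamma sum_lambda (1 + |gamma - lambda|)^(-alpha)."""
    return float(np.max(np.sum((1.0 + pairwise_dist(points)) ** (-alpha), axis=1)))

d, alpha = 1, 2.0
pts = np.arange(-100, 101)          # a window of the integer lattice
D1 = growth_constant(pts, d)
lhs = decay_sum(pts, alpha)
rhs = 2 ** (d - 1) * (2 * alpha - d) * D1 / (alpha - d)
print(f"D1 = {D1:.3f}, lhs = {lhs:.3f}, bound = {rhs:.3f}")
```

On the full lattice $\mathbb{Z}$ the left-hand side equals $\pi^2/3-1\approx2.29$ for $\alpha=2$, while the bound with $D_1=2$ is $6$. The estimate is therefore not sharp, but it only needs to be finite.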

Proposition II.1. Let $\alpha>d$ and $1\le p\le\infty$. If the counting measure $\mu$ on $\Gamma$ has the polynomial growth property (II.2) and $A\in J_\alpha(\Gamma)$, then

$$\|Ac\|_p\le\frac{2^{d-1}(2\alpha-d)D_1}{\alpha-d}\,\|A\|_{J_\alpha(\Gamma)}\,\|c\|_p,\quad c\in\ell^p(\Gamma).$$

For our SDS, we obtain from (II.7) and Proposition II.1 that a signal with bounded amplitudes at all innovative positions generates bounded sampling data at all sensing locations.

Given a matrix $A=(a(\gamma,\lambda))_{\gamma,\lambda\in\Gamma}$, define its band approximation $A_s=(a_s(\gamma,\lambda))_{\gamma,\lambda\in\Gamma}$, $s\ge0$, by

$$a_s(\gamma,\lambda)=\begin{cases}a(\gamma,\lambda)&\text{if }|\gamma-\lambda|\le s,\\0&\text{if }|\gamma-\lambda|>s.\end{cases}\tag{II.9}$$

We note that matrices in the Jaffard class can be approximated by band matrices.

Proposition II.2. Let $\alpha>d$ and $1\le p\le\infty$. If the counting measure $\mu$ on $\Gamma$ has the polynomial growth property (II.2) and $A\in J_\alpha(\Gamma)$, then

$$\|(A-A_s)c\|_p\le\frac{2^{d-1}(2\alpha-d)D_1}{\alpha-d}\,(s+1)^{-\alpha+d}\,\|A\|_{J_\alpha(\Gamma)}\,\|c\|_p$$

for all $c\in\ell^p(\Gamma)$, where $A_s$, $s\ge0$, are the band matrices in (II.9).

For our SDS, the sensing matrix $S$ is a band matrix if the enveloping signal $g$ in Assumption 4 has finite duration. In general, we obtain from (II.7) and Proposition II.2 that the sensing matrix $S$ can be approximated by band matrices. This approximation property plays a crucial role in our local stability criterion and distributed signal reconstruction algorithm in the next two sections.

III. STABILITY CRITERION OF SPATIALLY DISTRIBUTED SYSTEMS

We say that an SDS has $\ell^p$-stability if its sensing matrix $S$ satisfies

$$A\|c\|_p\le\|Sc\|_p\le B\|c\|_p,\quad c\in\ell^p(\Gamma),\tag{III.1}$$

where $A$ and $B$ are positive constants ([21], [22]). We call the minimal constant $B$ and the maximal constant $A$ for which (III.1) holds the upper bound and the lower bound of the $\ell^p$-stability. Reconstructing a signal from sampling data in the presence of noise is a leading problem in sampling theory ([11], [12], [13], [23], [24], [25], [26], [27]). For an SDS whose sensing matrix has $\ell^p$-stability (III.1), the $\ell^p$-error between the reconstructed signal and the true signal is dominated by a multiple of the $\ell^p$-norm of the sampling error: if $S\tilde c=y+e$ for a sampling error $e$, then $\|\tilde c-c\|_p\le A^{-1}\|e\|_p$.

For our SDS, the upper bound estimate in (III.1) follows from (II.7) and Proposition II.1. Following the argument in [16], we can prove that the left-hand inequality in (III.1) holds if the sensing matrix $S$ has $\ell^2$-stability, cf. [28], [29].

Proposition III.1. If an SDS satisfies (II.2), (II.3), (II.4), (II.5) and (II.6), and it has $\ell^2$-stability, then it has $\ell^p$-stability for all $1\le p\le\infty$.

By Proposition III.1, the $\ell^p$-stability of an SDS reduces to its $\ell^2$-stability. If the size of our SDS is limited, its $\ell^2$-stability can be verified by checking whether the smallest eigenvalue of $S^TS$ is positive. But this procedure is impractical, as the SDS we have in mind may have a huge number of sensing devices.

Denote the identity matrix by $I$. For $\lambda\in\Gamma$ and a positive integer $N$, one may verify that

$$\chi_\lambda^{2N}S\chi_\lambda^N=S\chi_\lambda^N+(I-\chi_\lambda^{2N})(S_N-S)\chi_\lambda^N,\tag{III.2}$$

where $S_N$ is the band approximation of $S$ in (II.9) with $s=N$, and the truncation operator $\chi_\lambda^N$ is defined by

$$\chi_\lambda^N:\ (c(\gamma))_{\gamma\in\Gamma}\longmapsto\big(c(\gamma)\chi_{B(\lambda,N)}(\gamma)\big)_{\gamma\in\Gamma}.$$

Indeed, $(I-\chi_\lambda^{2N})S_N\chi_\lambda^N=0$, since $|\gamma-\lambda|>2N$ and $|\gamma'-\lambda|\le N$ imply $|\gamma-\gamma'|>N$.

For an SDS with $\ell^2$-stability, we obtain from (III.2) and Proposition II.2 that the quasi-main submatrices $\chi_\lambda^{2N}S\chi_\lambda^N$, $\lambda\in\Gamma$, of size $O(N^d)$ have uniform $\ell^2$-stability for large $N$.

Theorem III.2. If an SDS satisfies (II.2), (II.3), (II.4), (II.5) and (II.6), and it has $\ell^2$-stability with lower stability bound $A$, then

$$\|\chi_\lambda^{2N}S\chi_\lambda^Nc\|_2\ge\frac A2\,\|\chi_\lambda^Nc\|_2,\quad c\in\ell^2(\Gamma),$$

for all $\lambda\in\Gamma$ and all integers $N$ satisfying

$$\frac{2^d(2\alpha-d)D_1}{\alpha-d}\,\|S\|_{J_\alpha(\Gamma)}\,(N+1)^{-\alpha+d}\le A.$$

Most importantly, the converse is shown to be true in [16], cf. the local stability criterion in [21] for one-dimensional convolution-dominated matrices.

Theorem III.3. If an SDS satisfies (II.2), (II.3), (II.4), (II.5) and (II.6), and there exist a positive constant $A_0$ and an integer $N_0\ge2$ such that

$$A_0\ge C\|S\|_{J_\alpha(\Gamma)}N_0^{-(\alpha-d)/(\alpha-d+1)}\tag{III.3}$$

and

$$\|\chi_\lambda^{2N_0}S\chi_\lambda^{N_0}c\|_2\ge A_0\|\chi_\lambda^{N_0}c\|_2,\quad c\in\ell^2(\Gamma),\ \lambda\in\Gamma,\tag{III.4}$$

where $C$ is an absolute constant, then the SDS has $\ell^2$-stability.

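The requirements (III.3) and (III.4) can be rewritten as

$$\inf_{\lambda\in\Gamma}\ \inf_{\chi_\lambda^{N_0}c\ne0}\frac{\|\chi_\lambda^{2N_0}S\chi_\lambda^{N_0}c\|_2}{\|\chi_\lambda^{N_0}c\|_2}\ge C\|S\|_{J_\alpha(\Gamma)}N_0^{-(\alpha-d)/(\alpha-d+1)}.\tag{III.5}$$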

Then verifying the $\ell^2$-stability of an SDS of huge size reduces to checking whether the lower stability bounds of the subsystems $\chi_\lambda^{2N_0}S\chi_\lambda^{N_0}$ of size $O(N_0^d)$ satisfy (III.5). This local criterion is well suited to designing a robust SDS and to confirming the $\ell^p$-stability of an SDS when some sensing devices are dysfunctional.
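As an illustration of how the local quantity in (III.5) might be computed, the sketch below evaluates, for each $\lambda$, the smallest singular value of the block of $S$ with rows in $B(\lambda,2N_0)$ and columns in $B(\lambda,N_0)$. Since $\chi_\lambda^{2N_0}S\chi_\lambda^{N_0}c$ depends only on $\chi_\lambda^{N_0}c$, this singular value is the inner infimum in (III.5). The absolute constant $C$ is not specified in the text, so the sketch does not compare against the right-hand side. The jittered one-dimensional grid with a Gaussian sensing matrix is a hypothetical test system, and `local_lower_bounds` is an illustrative name.

```python
import numpy as np

def local_lower_bounds(S, dist, N0):
    """For every lambda, the smallest singular value of the block of S with rows in
    B(lambda, 2 N0) and columns in B(lambda, N0): the inner infimum in (III.5)."""
    bounds = np.empty(S.shape[0])
    for lam in range(S.shape[0]):
        rows = np.flatnonzero(dist[lam] <= 2 * N0)   # support of chi^{2N0}_lambda
        cols = np.flatnonzero(dist[lam] <= N0)       # support of chi^{N0}_lambda
        block = S[np.ix_(rows, cols)]                # tall block: rows contain cols
        bounds[lam] = np.linalg.svd(block, compute_uv=False).min()
    return bounds

rng = np.random.default_rng(0)
L = 100
gamma = np.arange(L) + rng.uniform(-0.25, 0.25, L)   # hypothetical device locations
dist = np.abs(gamma[:, None] - gamma[None, :])
S = np.exp(-dist ** 2 / 2)                           # ideal sampling of Gaussian generators
local = local_lower_bounds(S, dist, N0=4)
print("left-hand side of (III.5):", local.min())
print("global lower bound (smallest singular value of S):",
      np.linalg.svd(S, compute_uv=False).min())
```

Each device only needs the entries of $S$ within distance $2N_0$ of its own location. The global singular value is printed only for comparison and is feasible here because the test system is small.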

IV. DISTRIBUTED SIGNAL RECONSTRUCTION ALGORITHM

In a centralized system, the original signal $f$ of the form (I.1) is reconstructed by solving the linear equations (I.3). For an SDS with $\ell^2$-stability, the least-squares solution of the linear equations (I.3) is given by

$$c=(S^TS)^{-1}S^Ty.$$

But it is expensive to compute the pseudo-inverse $(S^TS)^{-1}S^T$ of the sensing matrix $S$ for an SDS with a huge number of sensing devices.
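For a small system, the centralized route can be sketched directly. The example below uses a hypothetical jittered one-dimensional grid with Gaussian generators and ideal sampling, so $\Gamma=\Lambda$ and $S$ is square. It checks $\ell^2$-stability through the smallest eigenvalue of $S^TS$, as described in Section III, and computes the least-squares solution with `numpy.linalg.lstsq` rather than forming $(S^TS)^{-1}$ explicitly.

```python
import numpy as np

rng = np.random.default_rng(0)
L = 60
gamma = np.arange(L) + rng.uniform(-0.25, 0.25, L)        # hypothetical device locations
S = np.exp(-(gamma[:, None] - gamma[None, :]) ** 2 / 2)   # S[l', l] = phi_l(gamma_l')
c_true = rng.uniform(0, 1, L)                             # amplitudes in [0, 1]
y = S @ c_true                                            # noiseless sampling data, (I.3)

# l^2-stability of a small SDS: the smallest eigenvalue of S^T S must be positive.
lam_min = np.linalg.eigvalsh(S.T @ S).min()

# Least-squares solution c = (S^T S)^{-1} S^T y, computed without forming the inverse.
c_ls, *_ = np.linalg.lstsq(S, y, rcond=None)
print("smallest eigenvalue of S^T S:", lam_min)
print("max amplitude error:", np.max(np.abs(c_ls - c_true)))
```

Both steps act on the full $L\times L$ matrix and need all sampling data in one place. This is exactly the cost that the local approach below avoids.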

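We recall from Theorem III.2 that the quasi-main submatrices $\chi_\lambda^{4N}S\chi_\lambda^{2N}$, $\lambda\in\Gamma$, have uniform $\ell^2$-stability for large $N$. This inspires us to solve the linear equations

$$\chi_\lambda^{4N}S\chi_\lambda^{2N}c_{\lambda,N}=\chi_\lambda^{4N}y,\quad\lambda\in\Gamma,\tag{IV.1}$$

of size $O(N^d)$. Significantly, their least-squares solutions $c_{\lambda,N}$ provide an approximation of the true solution $c$ of the linear equations (I.3) inside the ball $B(\lambda,N)$,

$$\|\chi_\lambda^N(c_{\lambda,N}-c)\|_\infty\le C(N+1)^{-\alpha+d}\|c\|_\infty,\tag{IV.2}$$

where $C$ is an absolute constant. Next we use these local estimates $c_{\lambda,N}$, $\lambda\in\Gamma$, to generate a global estimate

$$c^*=\frac{\sum_{\lambda\in\Gamma}\chi_\lambda^Nc_{\lambda,N}}{\sum_{\lambda\in\Gamma}\chi_{B(\lambda,N)}}\tag{IV.3}$$

of the true solution $c$, where the division is taken componentwise. Since each entry $c^*(\gamma)$ is an average of the values $c_{\lambda,N}(\gamma)$ over those $\lambda$ with $\gamma\in B(\lambda,N)$, we observe from (IV.2) that, for large $N$, the estimate $c^*$ obtained from (IV.1) and (IV.3) provides a good approximation to the true solution $c$,

$$\|c^*-c\|_\infty\le C(N+1)^{-\alpha+d}\|c\|_\infty.\tag{IV.4}$$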
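The local-to-global step (IV.1)-(IV.3) can be sketched in a few lines of NumPy, assuming that the pairwise distances between devices are available as a matrix. Each local problem is solved by least squares, only the entries inside $B(\lambda,N)$ are kept, and overlapping pieces are averaged componentwise. The function name `one_shot_estimate` and the one-dimensional test system are hypothetical.

```python
import numpy as np

def one_shot_estimate(S, dist, y, N):
    """Global estimate c* of (IV.3) built from the local least-squares
    solutions c_{lambda,N} of (IV.1); dist[i, j] = |gamma_i - gamma_j|."""
    L = S.shape[0]
    num = np.zeros(L)  # sum over lambda of chi^N_lambda c_{lambda,N}
    cnt = np.zeros(L)  # sum over lambda of chi_{B(lambda,N)}
    for lam in range(L):
        rows = np.flatnonzero(dist[lam] <= 4 * N)   # chi^{4N}_lambda
        cols = np.flatnonzero(dist[lam] <= 2 * N)   # chi^{2N}_lambda
        c_loc, *_ = np.linalg.lstsq(S[np.ix_(rows, cols)], y[rows], rcond=None)
        keep = dist[lam, cols] <= N                 # chi^N_lambda on those columns
        num[cols[keep]] += c_loc[keep]
        cnt[cols[keep]] += 1
    return num / cnt   # every gamma lies in its own ball, so cnt >= 1

# Hypothetical 1-D test system: jittered grid, Gaussian generators, ideal sampling.
rng = np.random.default_rng(0)
L = 80
gamma = np.arange(L) + rng.uniform(-0.25, 0.25, L)
dist = np.abs(gamma[:, None] - gamma[None, :])
S = np.exp(-dist ** 2 / 2)
c_true = rng.uniform(0, 1, L)
y = S @ c_true
for N in (2, 4, 6):
    print(N, np.max(np.abs(one_shot_estimate(S, dist, y, N) - c_true)))
```

When $N$ exceeds the diameter of $\Gamma$, every local problem is the full system and $c^*$ coincides with the centralized least-squares solution. This gives a simple sanity check of the code.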

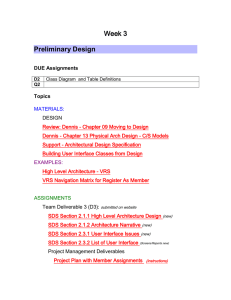

Fig. 1. Signal $f=\sum_{1\le l\le L}c_l\phi_l$ with amplitudes $c_l\in[0,1]$, $1\le l\le L$, randomly selected.

Based on the above observation, we propose the following predictor-corrector algorithm for an SDS with $\ell^2$-stability. Let $c\in\ell^\infty(\Gamma)$, and let $y=Sc$ be the sampling data vector. Set the initial values $c_0=0$ and $y_0=y$, and define $c_n$, $y_n$, $n\ge1$, iteratively by

$$\begin{cases}z_{n;\lambda,N}=R_{\lambda,N}\,y_n,\quad\lambda\in\Gamma,\\[2pt]z_n=\dfrac{\sum_{\lambda\in\Gamma}\chi_\lambda^Nz_{n;\lambda,N}}{\sum_{\lambda\in\Gamma}\chi_{B(\lambda,N)}},\\[2pt]c_{n+1}=c_n+z_n,\\y_{n+1}=y_n-Sz_n,\end{cases}\tag{IV.5}$$

where

$$R_{\lambda,N}=\big(\chi_\lambda^{2N}S^T\chi_\lambda^{4N}S\chi_\lambda^{2N}\big)^{-1}\chi_\lambda^{2N}S^T\chi_\lambda^{4N},$$

with the inverse taken on the range of $\chi_\lambda^{2N}$, so that $z_{n;\lambda,N}$ is the least-squares solution of (IV.1) with $y$ replaced by $y_n$.

Theorem IV.1. If an SDS satisfies (II.2), (II.3), (II.4), (II.5) and (II.6), and it has $\ell^2$-stability, then for large $N$ satisfying

$$r_1:=C(N+1)^{-\alpha+d}<1,\tag{IV.6}$$

the sequence $c_n$, $n\ge0$, in (IV.5) converges to $c$ exponentially,

$$\|c_n-c\|_\infty\le r_1^n\|c\|_\infty.\tag{IV.7}$$

Proof. The reader may refer to [16] for detailed arguments.

The implementation of the algorithm (IV.5) can be distributed among the devices of an SDS in each iteration:
(i) The device located at $\lambda\in\Gamma$ first gets the data $y_n(\gamma)$ from neighboring devices located at $\gamma\in B(\lambda,4N)$, and then generates the local correction $z_{n;\lambda,N}$;
(ii) it then sends the correction $z_{n;\lambda,N}$ to neighboring devices located at $\gamma\in B(\lambda,N)$ and computes its entry of the correction $z_n$ from the corrections it receives;
(iii) next, it adds the correction $z_n$ to the old prediction $c_n$ to create the new prediction $c_{n+1}$;
(iv) finally, it sends $z_n(\lambda)$ to neighboring devices at $\gamma\in B(\lambda,M)$ and computes the new correction $y_{n+1}$ on the sampling data, where $M$ is the bandwidth of the sensing matrix $S$.

Therefore, the predictor-corrector method (IV.5) is a distributed algorithm. Under the assumption that the telecommunication cost between two sensing devices of an SDS is proportional to their distance, each device requires about $O(N^{2d}+M)$ computing capacity and data storage, and spends about $O((N+M)^d)$ on telecommunication to implement (IV.5).

V. NUMERICAL SIMULATIONS

Let the innovative positions be distributed almost uniformly on the circle,

$$\Gamma=\Big\{\gamma_l:=\frac L5\big(\cos\theta_l,\ \sin\theta_l\big),\ 1\le l\le L\Big\},$$

where $\theta_l=2\pi(l+r_l)/L$ and $r_l$, $1\le l\le L$, are random numbers in $[-1/4,1/4]$, and let there be a Gaussian signal generator at every innovative position,

$$\phi_l(x,y)=\exp\big(-|(x,y)-\gamma_l|^2/2\big),\quad1\le l\le L.$$

Illustrated in Figure 1 is a signal of the form (I.1).

Consider an SDS with ideal sampling at every sensing location. Then the distance between two nearest neighboring devices is about one, and the sensing matrix is given by

$$S=\Big(\exp\big(-|\gamma_{l'}-\gamma_l|^2/2\big)\Big)_{1\le l',l\le L}.$$

For our distributed algorithm (IV.5), denote by

$$E_N:=\max_{1\le l\le L}|c_l-\tilde c_l|,\quad N\ge2,$$

the maximal reconstruction error of amplitudes, where $\tilde c_l$, $1\le l\le L$, are the amplitudes reconstructed in the first iteration. For the original signal in Figure 1, we see from Figure 2 that the first iteration of the algorithm (IV.5) with $N=4$ already provides a good approximation.
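The experiment above can be reproduced in outline with the following sketch of the iteration (IV.5). It assumes the circle configuration and Gaussian sensing matrix just described, and it implements $R_{\lambda,N}$ as the pseudo-inverse of the local block, which equals the formula for $R_{\lambda,N}$ when the block has full column rank. It reports $E_N$ for the first iteration together with the errors of later iterations. The random draws differ from those used in the paper, so the numbers will not match Figures 2 to 4, and the helper names are hypothetical.

```python
import numpy as np

def circle_sds(L, rng):
    """gamma_l = (L/5)(cos theta_l, sin theta_l), theta_l = 2 pi (l + r_l)/L with
    r_l uniform in [-1/4, 1/4]; ideal sampling gives S = exp(-|gamma_l' - gamma_l|^2/2)."""
    l = np.arange(1, L + 1)
    theta = 2 * np.pi * (l + rng.uniform(-0.25, 0.25, L)) / L
    pts = (L / 5) * np.column_stack([np.cos(theta), np.sin(theta)])
    dist = np.linalg.norm(pts[:, None, :] - pts[None, :, :], axis=-1)
    return dist, np.exp(-dist ** 2 / 2)

def predictor_corrector(S, dist, y, N, n_iter):
    """Iteration (IV.5) from c_0 = 0, y_0 = y; returns [c_1, ..., c_{n_iter}]."""
    L = S.shape[0]
    local = []
    for lam in range(L):
        rows = np.flatnonzero(dist[lam] <= 4 * N)
        cols = np.flatnonzero(dist[lam] <= 2 * N)
        R = np.linalg.pinv(S[np.ix_(rows, cols)])   # R_{lambda,N} on the local block
        keep = dist[lam, cols] <= N                  # rows of R kept by chi^N_lambda
        local.append((rows, cols[keep], R[keep]))
    cnt = np.zeros(L)
    for _, idx, _ in local:
        cnt[idx] += 1
    c, y_n, history = np.zeros(L), np.array(y, dtype=float), []
    for _ in range(n_iter):
        z = np.zeros(L)
        for rows, idx, R_keep in local:
            z[idx] += R_keep @ y_n[rows]             # chi^N_lambda z_{n;lambda,N}
        z /= cnt                                     # average overlapping corrections
        c = c + z                                    # c_{n+1} = c_n + z_n
        y_n = y_n - S @ z                            # y_{n+1} = y_n - S z_n
        history.append(c.copy())
    return history

rng = np.random.default_rng(0)
L = 250
dist, S = circle_sds(L, rng)
c_true = rng.uniform(0, 1, L)
iterates = predictor_corrector(S, dist, S @ c_true, N=4, n_iter=5)
errors = [np.max(np.abs(c_n - c_true)) for c_n in iterates]
print("E_4 (first iteration):", errors[0])
print("errors over iterations:", errors)
```

The local pseudo-inverses are computed once and reused in every iteration. Each device only touches data within distance $4N$ of its own location.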

Fig. 2. Plotted is the difference between the original signal in Figure 1 and the signal reconstructed by the algorithm (IV.5) with $N=4$. The maximal amplitude error $E_4$ is about 0.2364.

Fig. 3. The maximal amplitude reconstruction error $E_N$ decays almost exponentially in $N\ge2$, a better numerical result than the polynomial decay theoretically guaranteed in Theorem IV.1.

By (IV.6) and (IV.7), the number of iterations required to reach accuracy $\epsilon$ is about $O(\ln(1/\epsilon)/\ln N)$. So we may select a small $N$ for an SDS with very limited computing and telecommunication capabilities, while in general we would like to use a reasonably large $N$ for fast reconstruction, see Figure 3.
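The iteration count follows from (IV.7) by a short computation. This is sketched here under the assumption $0<r_1<1$ in (IV.6), with accuracy measured relative to $\|c\|_\infty$:

$$r_1^n\le\epsilon\iff n\ge\frac{\ln(1/\epsilon)}{\ln(1/r_1)},\qquad\ln\frac1{r_1}=(\alpha-d)\ln(N+1)-\ln C.$$

For large $N$, the required number of iterations is therefore about $\ln(1/\epsilon)/\big((\alpha-d)\ln N\big)=O(\ln(1/\epsilon)/\ln N)$.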

Although we have not discussed the robustness of an SDS, or distributed reconstruction, when some sensing devices are dysfunctional, our numerical simulation shows that the algorithm (IV.5) for signal reconstruction works well in that scenario, see Figure 4.

Fig. 4. Plotted is the difference between the original signal in Figure 1 and the signal reconstructed by the algorithm (IV.5) with $N=4$, $L=250$, and the sensing devices located at $\gamma_l$, $l=51, 57, 68, 79, 85, 98, 113, 131, 153, 166, 176, 188, 200, 208$, being dysfunctional. The maximal amplitude error $E_4$ is about 0.2504.

ACKNOWLEDGMENT

This work is partially supported by the National

Natural Science Foundation of China (Nos. 11201094

and 11161014), Guangxi Natural Science Foundation

(2014GXNSFBA118012), and the National Science

Foundation (DMS-1109063 and 1412413).

REFERENCES

[1] M. Vetterli, P. Marziliano and T. Blu, Sampling signals with finite rate of innovation, IEEE Trans. Signal Process., 50(2002), 1417–1428.
[2] D. Donoho, Compressed sensing, IEEE Trans. Inform. Theory, 52(2006), 1289–1306.
[3] Q. Sun, Non-uniform average sampling and reconstruction of signals with finite rate of innovation, SIAM J. Math. Anal., 38(2006), 1389–1422.
[4] P. L. Dragotti, M. Vetterli and T. Blu, Sampling moments and reconstructing signals of finite rate of innovation: Shannon meets Strang-Fix, IEEE Trans. Signal Process., 55(2007), 1741–1757.
[5] P. Shukla and P. L. Dragotti, Sampling schemes for multidimensional signals with finite rate of innovation, IEEE Trans. Signal Process., 55(2007), 3670–3686.
[6] Q. Sun, Frames in spaces with finite rate of innovations, Adv. Comput. Math., 28(2008), 301–329.
[7] M. Mishali, Y. C. Eldar and A. J. Elron, Xampling: signal acquisition and processing in union of subspaces, IEEE Trans. Signal Process., 59(2011), 4719–4734.
[8] H. Pan, T. Blu and P. L. Dragotti, Sampling curves with finite rate of innovation, IEEE Trans. Signal Process., 62(2014), 458–471.
[9] Q. Sun, Localized nonlinear functional equations and two sampling problems in signal processing, Adv. Comput. Math., 40(2014), 415–458.
[10] C. Cheng, Y. Jiang and Q. Sun, Sampling and Galerkin reconstruction in reproducing kernel spaces, arXiv:1410.1828.
[11] M. Unser, Sampling - 50 years after Shannon, Proc. IEEE, 88(2000), 569–587.
[12] A. Aldroubi and K. Gröchenig, Nonuniform sampling and reconstruction in shift-invariant spaces, SIAM Rev., 43(2001), 585–620.
[13] M. Z. Nashed and Q. Sun, Sampling and reconstruction of signals in a reproducing kernel subspace of $L^p(\mathbb{R}^d)$, J. Funct. Anal., 258(2010), 2422–2452.
[14] B. Adcock, A. C. Hansen and C. Poon, Beyond consistent reconstructions: optimality and sharp bounds for generalized sampling, and application to the uniform resampling problem, SIAM J. Math. Anal., 45(2013), 3114–3131.
[15] P. Petersen, Riemannian Geometry, Springer, 2006.
[16] C. Cheng, Y. Jiang and Q. Sun, Spatially distributed sampling and reconstruction of signals on a graph, in preparation.
[17] S. Jaffard, Propriétés des matrices bien localisées près de leur diagonale et quelques applications, Ann. Inst. Henri Poincaré, 7(1990), 461–476.
[18] Q. Sun, Wiener's lemma for infinite matrices with polynomial off-diagonal decay, C. R. Acad. Sci. Paris Ser. I, 340(2005), 567–570.
[19] K. Gröchenig and M. Leinert, Symmetry and inverse-closedness of matrix algebras and functional calculus for infinite matrices, Trans. Amer. Math. Soc., 358(2006), 2695–2711.
[20] Q. Sun, Wiener's lemma for infinite matrices, Trans. Amer. Math. Soc., 359(2007), 3099–3123.
[21] Q. Sun, Stability criterion for convolution-dominated infinite matrices, Proc. Amer. Math. Soc., 138(2010), 3933–3943.
[22] Q. Sun and J. Xian, Rate of innovation for (non-)periodic signals and optimal lower stability bound for filtering, J. Fourier Anal. Appl., 20(2014), 119–134.
[23] M. Pawlak, E. Rafajlowicz and A. Krzyzak, Postfiltering versus prefiltering for signal recovery from noisy samples, IEEE Trans. Inform. Theory, 49(2003), 3195–3212.
[24] S. Smale and D. X. Zhou, Shannon sampling and function reconstruction from point values, Bull. Amer. Math. Soc., 41(2004), 279–305.
[25] I. Maravic and M. Vetterli, Sampling and reconstruction of signals with finite rate of innovation in the presence of noise, IEEE Trans. Signal Process., 53(2005), 2788–2805.
[26] Y. C. Eldar and M. Unser, Nonideal sampling and interpolation from noisy observations in shift-invariant spaces, IEEE Trans. Signal Process., 54(2006), 2636–2651.
[27] N. Bi, M. Z. Nashed and Q. Sun, Reconstructing signals with finite rate of innovation from noisy samples, Acta Appl. Math., 107(2009), 339–372.
[28] A. Aldroubi, A. Baskakov and I. Krishtal, Slanted matrices, Banach frames, and sampling, J. Funct. Anal., 255(2008), 1667–1691.
[29] C. E. Shin and Q. Sun, Stability of localized operators, J. Funct. Anal., 256(2009), 2417–2439.