A Transformation-based Nonparametric Estimator of

advertisement

A Transformation-based Nonparametric Estimator of

Multivariate Densities with an Application to Global

Financial Markets

Meng-Shiuh Chang∗ and Ximing Wu†

September 25, 2011

Abstract

We propose a probability-integral-transformation-based estimator of multivariate

densities. Given a sample of random vectors, we first transform the data into their corresponding marginal distributions. We then estimate the density of the transformed

data via the Exponential Series Estimator in Wu (2010). The density of the original data is then estimated as the product of the density of the transformed data and

marginal densities of the original data. This construction coincides with the copula

decomposition of multivariate densities. We decompose the Kullback-Leibler Information Criterion (KLIC) between the true density and our estimate into the KLIC of

the marginal densities and that between the true copula density and a variant of the

estimated copula density. This result is of independent interest in itself, and provides

a framework for our asymptotic analysis. We derive the large sample properties of

the proposed estimator, and further propose a stepwise hierarchical method of basis

function selection that features a preliminary subset selection within each candidate

set. Monte Carlo simulations demonstrate the superior performance of the proposed

method. We employ the proposed method to model the joint densities of the US and

UK stock market returns under different Asian market conditions. The estimated copula density function, a by-product of our estimation, provides useful insight into the

conditional dependence structure between the US and UK markets, and suggests a

certain resilience against financial contagions originated from the Asian market.

∗

School of Public Finance and Taxation, Southwestern University of Finance and Economics, Sichuan,

China; Email: mslibretto@hotmail.com

†

Texas A&M University, College Station, TX 77843; Email: xwu@tamu.edu.

We are grateful to David Bessler, Jianhua Huang, Qi Li, Victoria Salin, Robin Sickles and Natalia Sizova

for helpful comments and suggestions. The usual disclaimer applies.

1

Introduction

Estimating probability distributions is one of the most fundamental tasks in many fields of

science. Although many distributions can be conveniently summarized by a number of sample

statistics, such as moments, quantiles or cumulants, a distribution/density function itself is

the ‘complete package’ in the sense that all the aforementioned summary measures can

be calculated from the distribution/density function. In addition, distributions or densities

offer an important extra advantage; researchers may obtain useful insights into the quantities

in question via visual examination of distributions/densities, while these insights might be

elusive even if a set of summary measures are closely scrutinized.

This paper concerns with the estimation of multivariate density functions. Multidimensional analysis has played an increasingly important role in modern economics. For instance,

the recent financial crisis has called for a more comprehensive approach of risk assessment of

the financial market, in which multivariate analysis of the market, especially under extreme

conditions, plays a crucial role. Another example is welfare analysis. Conventional welfare

analysis tends to focus on one single attribute such as income or consumption. However, recent literature has contended that one shall take into account not only income/consumption,

but also some other important attributes such as health, environment and civil rights, to

arrive at more balanced welfare inferences. In either example, estimation of the joint distribution/density of the quantities in question is of independent interest in itself, and constitutes

an important first step that aids in the construction of more definite answers.

Density functions can be estimated by either parametric or nonparametric methods.

Parametric estimators are asymptotically efficient if they are correctly specified, but inconsistent under faulty distributional assumptions. In contrast, nonparametric estimators are

consistent, whereas they converge at a slower-than-root-N rate. The slower convergence rate

is due to the fact that the number of (nuisance) parameters increases with the sample size

in nonparametric estimators to achieve consistence. This so called curse of dimensionality is

particularly severe for multivariate density estimations, in which the number of parameters

increases exponentially with both the sample size and the dimension of the random vectors.

In this paper, we propose a new multivariate density estimator that is shown to mitigate

the curse of dimensionality. Let {X t }nt=1 , where X t = (X1t , . . . , Xdt ), be an iid random

sample from a d-dimensional distribution F with density f . We first transform each margin

of the random sample to F̂j (Xjt ) for j = 1, . . . , d and t = 1, . . . , n, where F̂j is an estimate of

the jth marginal distribution. Let ĉ be an estimate of the joint density of the transformed

2

data. It follows that one can construct a density estimator of the original data as

d

Y

fˆ(x) = ĉ F̂1 (x1 ), . . . , F̂d (xd )

fˆj (xj ),

(1)

j=1

where fˆj ’s, j = 1, . . . , d, are the marginal densities of the original data.

This probability-integral-transformation-based density estimator is used in Ruppert and

Cline (1994) on univariate densities. Their estimator is bias-reducing because the transformed data converge in distribution to the standard uniform distribution, whose derivatives

of all orders are zero. Although bias reduction is a potential benefit of employing this

transformation in multivariate density estimations, our estimator is largely motivated by a

different consideration. Equation (1) indicates that the joint density can be constructed as

the product of marginal densities and the density of the transformed data. As a matter

of fact, this construction coincides with the copula decomposition of multivariate densities

according to the celebrated Sklar’s Theorem (1959), in which the first factor in (1) is termed

the copula density function. This decomposition allows one to assemble a joint density by

first estimating the marginal densities and copula density separately. A valuable by-product

of this estimator is the copula density function, which completely summarizes the dependence structure among the margins and oftentimes provides useful insight into the variables

in questions.

We present a decomposition of the convergence rate of estimator (1), in terms of the

Kullback-Leibler Information Criterion (KLIC), into that of the marginal densities, and

the KLIC between the true copula density and a variant of the estimated copula density.

This result is of independent interest in itself, and provides the necessary framework for

the asymptotic analysis of the proposed estimator. The KLIC, being an expected log ratio

between two densities, arises as a natural metric for our analysis because it conveniently

transforms the product of densities in (1) into a sum of log densities.

We then propose a nonparametric estimator of the empirical copula density using the

Exponential Series Estimator (ESE) of Wu (2010). The ESE is particularly suitable for

copula density estimations since it is defined explicitly on a bounded support and thus does

not suffer from the boundary biases of the usual kernel density estimators, which are particularly severe for copula density estimations because copula densities tend to peak towards

the boundaries of its domain. This estimator has an appealing information-theoretic interpretation and lends itself to asymptotic analysis in terms of the KLIC. We derive the large

sample properties of the transformation-based multivariate density estimator, and discuss

how the convergence rates of the marginal densities and copula density together determine

3

the convergence rate of the joint density.

Inevitably, the number of basis functions in the ESE increases with the dimension and

sample size rapidly. To facilitate the selection of basis functions, we propose a stepwise hierarchical approach for basis function selection, which features a preliminary subset selection

within each group that maximizes the degree of similarity between the candidate set and

a subset of a given size (see, e.g., Cadima et al., 2004 for subset selections). Finally we

use some information criterion such as the Akaike Information Criterion (AIC) or Bayesian

Information Criterion (BIC) to select a preferred model.

To examine the finite sample performance of the transformation-based estimator, we

conduct two sets of Monte Carlo simulations. The first experiment compares the proposed

estimator with a direct density estimator using the kernel method in terms of overall density estimation performance. The second experiment compares the estimations of joint tail

probabilities based on the proposed method, a kernel estimate and the empirical distribution. In both experiments, the proposed method outperforms the alternatives, oftentimes by

substantial margins.

Lastly we apply the proposed method to estimating the joint distribution of the US

and UK stock markets under different Asian market conditions. Our analysis reveals how

fluctuations and extreme movements of the Asian market influenced the western markets.

In particular, the influence is asymmetric in the sense that the western market responded

more strongly when the Asian markets were up than down, indicating a certain degree of

resilience against global financial contagions originated from the Asian market. We note that

the asymmetric relation, albeit obscure in the joint densities of the US and UK markets, is

quite evident in their copula densities – a valuable by-product of our transformation-based

estimator.

This paper proceeds as follows. Section 2 briefly reviews various approaches of density

estimations. Section 3 presents a two-stage transformation-based estimator of multivariate

densities and its large sample properties, followed by a sequential updating method of basis

function selection. Section 4 presents a series of Monte Carlo simulations of the proposed

method. Section 5 provides an empirical application on global financial markets. Some

concluding remarks are given in the last section.

2

Multivariate density estimation

Let {X t }nt=1 be a d-dimensional i.i.d. random sample from an unknown continuous distribution F with density f defined on the real line, d ≥ 2. We are interested in estimating f . There

4

exist two general approaches: parametric and nonparametric. The parametric approach entails functional form assumptions up to a finite set of unknown parameters. Multivariate

normal distributions or more generally, the elliptical families, are commonly used due to

their simplicity. The nonparametric approach provides a flexible alternative that seeks a

functional approximation to the unknown density. Instead of imposing functional form assumptions, this approach allows the number of (nuisance) parameters to increase with sample

size to achieve consistency. One can also combine these two approaches to balance between

parsimony and goodness-of-fit. Below we briefly review various methods for multivariate

density estimation, with a focus on nonparametric estimators.

2.1

Direct estimation

One of the most commonly used density estimators is the kernel density estimator (KDE),

which takes the form

n

1X

Kh (X t − x),

fh (x) =

n t=1

where Kh (x) is a d-dimensional non-negative kernel function that peaks at x = 0 and h,

the so-called bandwidth, controls how fast Kh (x) decays as x moves away from zero. A

popular choice of K is the Gaussian kernel, which is the standard normal density function.

For multivariate densities, the product kernel is commonly used. It is well-known that the

performance of KDE crucially depends on the choice of bandwidth. Data-driven methods,

such as the cross validation, are often used for bandwidth selection (see, e.g., Li and Racine,

2007).

Another popular method for density estimation is the series estimator. Let gi , i =

1, 2, . . . , m, be a series of linearly independent real-valued basis functions defined on Rd .

A series estimator is given by

m

X

fm (x) =

λi gi (x),

i=1

where the number of basis functions m plays a role similar to the bandwidth in kernel

estimation, and is usually determined by some data-driven methods, such as the generalized

cross validation. Examples of basis functions include the power series, trigonometric series,

splines, and wavelets.

For a d-dimensional random variable with a r-times continuously differentiable density,

both the kernel and series estimators can achieve the optimal convergence rate Op (n−r/(2r+d) )

in the L2 norm under some regularity conditions. However, for the kernel estimators to

achieve a convergence rate faster than n−2/(4+d) , one needs to use a higher (than two) order

5

kernel, which may lead to negative density estimates. The optimal series estimator has an

appealing property of automatically adapting to the unknown smoothness of the underlying

distribution, but it does not guarantee the positiveness of density estimates either. One

advantage of these estimators is the linearity, which makes it easy to use cross-validation to

determine their smoothing parameters and relatively straightforward to derive their asymptotic properties. But the linearity is also their weakness in the sense that their likelihood

functions, being a product of a sum, are complicated and have no sufficient statistics.

Alternatively, there exist likelihood based nonparametric density estimators. A regular

exponential family of estimators takes the form

fm (x) = exp(

m

X

λi gi (x) − λ0 ),

(2)

i=1

where gi , i = 1, . . . , m, are a series of bounded linearly independent basis functions, and

R

P

λ0 ≡ exp( m

i=1 λi gi (x))dx < ∞ ensures that fm integrates to unity. The estimation of

a probability density function by sequences of exponential families, which is equivalent to

approximating the logarithm of a density by a series estimator, has long been studied. Earlier

studies on the approximation of log densities using polynomials include Neyman (1937)

and Good (1963). Transforming the polynomial estimate of log-density back to its original

scale yields a density estimator in the exponential family. The maximum likelihood method

provides efficient estimates of this canonical exponential family. Crain (1974) establishes

the existence and consistency of the maximum likelihood estimator. Zellner and Highfield

(1988) and Wu (2003) discuss the numerical aspects of this estimator, which typically requires

nonlinear optimizations.

By letting the number of basis functions increase with sample size, one obtains a nonparametric estimator in (2). Stone (1990) and Kooperberg and Stone (1991) provide in

depth analyses of the log-spline density estimator, which is a special case of (2) with spline

basis functions in its exponent. Barron and Sheu (1991) establish the asymptotic properties

of (2) for general basis functions that include the power series, splines and trigonometric

series in a unified framework. They show that under suitable regularity conditions, this estimator achieves the optimal rate specified in Stone (1982) in terms of the Kullback-Leibler

information criterion. Wu (2010) further generalizes their results to multivariate density

estimations.

Following the spirit of Barron and Sheu (1991), we call this family of density estimator Exponential Series Estimator (ESE) to reflect its nonparametric nature. Like the series

estimator, an optimal ESE adapts to the smoothness of the underlying distribution auto6

matically. On the other hand, it is strictly positive and has a set of sufficient statistics,

P

µ̂i = 1/n nt=1 gi (X t ), i = 1, . . . , m, thanks to its canonical exponential form. In addition,

the ESE has an appealing information theoretic interpretation. It can been derived as the

maximum entropy density by maximizing Shannon’s information entropy subject to given

moment constraints (Jaynes, 1957).

The methods discussed so far are general methods that can be applied to univariate and

multivariate densities alike. However, due to the ‘curse of dimensionality’, the estimations

of multivariate densities are significantly more difficult. Below we discuss transformationbased, multiple-staged estimation methods that in particular facilitate multivariate density

estimations by mitigating the curse of dimensionality.

2.2

Transformation-based estimation

Transformation of variables of interest to facilitate model construction and estimation is

a common practice in statistical and econometric analyses. For example, the logarithm

transformation of a positive dependent variable in regression analysis oftentimes mitigates

heteroskedasticity. More generally, the Box-Cox transformation, which nests the logarithm

transformation as a limiting case, is often used to remedy deviations from normality in

residuals. Although less common, transformations have also been used in density estimations.

In the context of nonparametric density estimation, transformations can be used to reduce

bias. Wand et al. (1991) propose a transformation based kernel density estimator. They

note that the usual kernel estimators with a single global bandwidth work well for densities

that are not far from Gaussian in shape, but can perform quite poorly when the densities

deviate further away from Gaussian. In a spirit close to the Box-Cox transformation, they

propose transformations of the data so that the density of the transformed data is closer

to normal and can be adequately estimated by kernel estimators with a global bandwidth.

In particular, they suggest applying the shifted power transformation to right-skewed data.

They demonstrate that if a transformation is carefully selected, it is then more appropriate

to use the typical kernel estimator with a global bandwidth on the transformed data; the

resulting density estimate of the raw data obtained by back-transformation has a smaller bias.

Yang and Marron (1999) show that multiple families of transformations can be employed at

the same time, and this process can be iterated for further improvements.

Wand et al. (1991) and Yang and Marron (1999) consider only parametric transformations, which reduce biases but do not improve the convergence rate of nonparametric estimators. Ruppert and Cline (1994) propose an estimator, based on the probability-integraltransformation, that not only reduces bias and but also improves the convergence rate for

7

univariate densities. Suppose for now Xt is a scalar. First the data are transformed to

F̂ (Xt ), which is a smooth estimate of the CDF of x. The estimated kernel density of the

raw data then takes the form

n

1X

Kh (F̂ (Xt ) − F̂ (x))fˆ(x),

f˜(x) =

n t=1

where fˆ(x) ≡ dF̂ (x)/dx. Because F̂ converges to the standard uniform distribution whose

density has all derivatives equal to zero, bias of the second stage estimate is asymptotically

negligible. They further show that if the bandwidths of the first and second steps are chosen

to be of order n−1/9 , then the squared error of f˜ is of order Op (n−8/9 ) as n → ∞ rather

than Op (n−4/5 ), the rate of the usual kernel density estimators. This procedure can also

be iterated to obtain further rate improvements, although in practice the benefits may be

rather small.

Intuitively, both parametric and nonparametric transformations achieve bias reduction by

choosing a transformation such that the density of the transformed data is easier to estimate

in terms of, say smaller squared errors or integrated squared error. Motivated by Ruppert and

Cline (1994), we apply the nonparametric transformation approach to multivariate density

estimations. We show that this transformation benefits the estimation by mitigating the

curse of dimensionality. In addition, it provides useful insight into the dependence relation

among variables since the decomposition resulting from the said transformation coincides

with the copula decomposition of multivariate densities.

Let F̂j and fˆj , j = 1, . . . , d, be estimated marginal CDF and PDF for the jth margin

of a d-dimensional data X = [X1 , . . . , Xd ]. The probability-integral-transformation-based

density estimator of X is given by

fˆ(x) = fˆ1 (x1 ) · · · fˆd (xd )ĉ(F̂1 (x1 ), . . . , F̂d (xd )),

(3)

o

n

where ĉ is the estimate of the density of the transformed data F̂1 (x1 ), . . . , F̂d (xd ) .

Interestingly, (3) can also be derived using Sklar’s theorem (Sklar, 1959). Let f be the

density of a d-dimensional random variable, with Fj and fj being its jth marginal CDF and

PDF for j = 1, . . . , d. Sklar (1959) shows that the joint density can be decomposed as

f (x) = f1 (x1 ) · · · fd (xd )c(F1 (x1 ), . . . , Fd (xd )).

(4)

When all marginal distributions are differentiable, the decomposition is unique. The last

factor in (4) is termed the copula density, which completely summarizes the dependence

8

structure of x (see, e.g., Nelsen, 2006 for a general treatment of copula). The copula decomposition allows the separation of marginal distributions and their dependence and thus

facilitates construction of flexible multivariate distributions.

2.3

Entropy of Copula Decompositions

Although it has been suggested that the copula approach helps mitigate the curse of dimensionality in multivariate density estimations (see, e.g., Hall and Neumeyer, 2006), to the

best of our knowledge, this conjecture has not been established formally. Below we provide

a formal characterization of the potential benefit of copula approach in multivariate density

estimations.

discrete entropy is defined with a negative sign

Generally the more features a density has (or the more bumpy a density is), the more

difficult is its estimation. There exist many criteria of the degree of bumpiness. For instance,

R

the integrated squared derivatives, (f (r) (x))2 dx, r = 1, 2, ..., are commonly used for this

purpose. For discrete distributions, Shannon’s information entropy is sometimes used. The

PK

P

discrete entropy is given by H = K

k=1 pk = 1. One can show

k=1 pk ln pk , where pk ≥ 0 and

that the negative entropy attains its maximum when pk = 1/K for all k; namely when the

distribution is uniform across all possible states. In this sense, the negative entropy, which

reflects the discrepancy between a given discrete distribution and the uniform distribution,

can be viewed as an indicator of the degree of difficulty in density estimation.

For a continuous univariate density f , the differential entropy is defined as H(f ) =

R

f (x) ln f (x)dx. The differential entropy of a distribution is not restricted in sign and

generally does not admit the intuitive interpretation of the discrete entropy. Nonetheless if

we impose the restriction that a given distribution has a bounded support, then a similar

interpretation is allowed. To see this, we first need to introduce a closely related concept,

the Kullback-Leibler information criterion (KLIC). The KLIC between two densities, f and

g, is given by

Z

f (x)

D(f ||g) = f (x) log

dx,

g(x)

where g is absolutely continuous with respect to f and D(f ||g) = +∞ otherwise. It is

well known that D(f ||g) ≥ 0 and the equality holds if and only if f = g almost everywhere.

Suppose that the support for f and g is bounded. Without loss of generality, the support can

be assumed to be the unit interval. Under this condition, when g is the standard uniform

distribution, D(f ||g) = H(f ). Thus the entropy of a continuous distribution defined on

a bounded support, like the discrete entropy, captures its discrepancy from the uniform

9

distribution, and therefore reflects the degree of difficulty in its density estimation.

Below we show that for a multivariate density defined on a bounded support, the entropy

of its joint density can be decomposed into the entropies of its margins and its copula density.

R

In particular, let H(f ) = f (x1 , . . . , xd ) ln f (x1 , . . . , xd )dx1 · · · dxd be the entropy for a ddimensional distribution. We establish the following:

Theorem 1 Suppose x is a d-dimensional random variable from a distribution F defined

on [0, 1]d , with density function f . Then

H(f ) =

d

X

H(fj ) + H(c),

(5)

j=1

where H(fj ), j = 1, . . . , d, is the entropy of the jth marginal density, and H(c) is the entropy

of the corresponding copula density c(F1 (x1 ), . . . , Fd (xd )).

Remark 1 The assumption that X is defined on [0, 1]d is no more restrictive than the assumption of a bounded support since one can transform an arbitrary bounded support to the

unit hypercube through, e.g., the probability integral transformation.

Remark 2 We stress that the assumption of bounded support is only imposed to facilitate

our theoretical exploration below. In practical implementation of the transformation-based estimation, the supports for marginal distributions are not required to be bounded. In principle,

any reasonable parametric/nonparametric methods can be used to estimate the marginal densities and distributions. After the first stage probability integral transformation, the domain

for the second stage density, or the copula density, is naturally restricted to [0, 1]d .

Remark 3 The decomposition (5) resembles the structure of additive models in nonparametric estimations.1 The difficult task of estimating the joint density is divided into the

estimation of individual marginal densities and that of the copula density. In regards of the

entropy as an indicator of degree of difficulty in density estimations, each individual task is

at least as easy as the estimation of the joint density since all entropies on the right hand side

are non-negative. Usually multivariate densities are considerably more difficult to estimate

than univariate ones. Theorem (1) suggests that when there are significant features in the

marginal distributions, the two-step estimation approach can be more effective than the direct

estimation since the joint density of the transformed data, or the copula density, is easier

1

There is one subtle difference: the additive structure of general additive models is oftentimes assumed,

while that of the copula decomposition holds exactly.

10

to estimate after the features attributed to the marginal distributions have been ‘removed’.

Obviously, the higher is the dimension d, the more substantial the potential benefit can be.

Remark 4 The copula decomposition of multivariate densities induced by the probability

integral transformation is in spirit close to the so called ‘divide and conquer’ algorithm, as

is dubbed in the computer science literature.

Due to its flexibility, the copula approach has been used widely in multivariate analyses;

see, e.g., Chen and Fan (2006a, 2006b), Chen et al. (2006), Patton (2006) and references

therein. Hall and Neumeyer (2006) show that the copula method can benefit estimation of

joint densities when there are additional data for the margins. Chui and Wu (2009) provide

simulation evidence that two-step estimation via an empirical copula density often outperforms direct estimation of joint densities. Both papers consider only bivariate densities.

Below we present formally a transformation-based estimator for the general d-dimensional

cases and establish its large sample properties.

3

Transformation-based multivariate density estimation

In this section we present a nonparametric transformation-based multivariate density estimator and establish its asymptotic properties. We then propose a method of model specification

for the second stage estimation of the density of the transformed data, which can be viewed

as estimation of empirical copula density functions.

3.1

The estimator

The transformation-based estimator for an iid d-dimensional random vector {X t }nt=1 is constructed in two steps. We first obtain consistent estimates of marginal densities and distributions, denoted by fˆj and F̂j respectively for j = 1, . . . , d. Note that it is not necessary that

fˆj (x) = F̂j0 (x); the densities and distributions of the margins can be estimated separately.

For instance, we can combine smoothed estimates of marginal densities and empirical CDF’s

of corresponding margins in our estimations.

The second step estimates the density of the transformed data F̂t = (F̂1 (X1t ), . . . , F̂d (Xdt )),

t = 1, . . . , n. To ease notation, we define ût = (û1t , . . . , ûdt ) with ûjt = F̂jt for j = 1, . . . , d.

As is discussed above, the density of {ût }nt=1 converges to the copula density as n → ∞.

11

Like an ordinary density function, a copula density can be estimated by parametric or nonparametric methods. Parametric copula density functions are usually parameterized by one

or two parameters. This parsimony in functional forms, however, oftentimes imposes restrictions on the dependence structure among the margins. For example, the popular Gaussian

copula is known to exhibit zero tail dependence. Consequently, it may be inappropriate

to use simple Gaussian copulae to investigate the co-movements of extreme stock returns.

Another limitation of parametric copulas is that they are usually defined only for bivariate

distributions (with the exception of the multivariate Gaussian copula) and extensions to

higher dimensions are not available.

Nonparametric estimation of copula densities provides a flexible alternative that ensures

consistency. However compared with their parametric counterparts, nonparametric estimators are marked for their slower convergence rates. In addition, since copula densities are

defined on a bounded support, treatment of boundary biases warrants special care. Boundary biases are negligible if the curves to estimate vanish at the boundaries, but can pose a

considerable problem otherwise. Although boundary bias problem exists generally in nonparametric estimations, it is particularly severe in copula density estimations. This is because

unlike many densities or curves that vanish at the boundaries, copula densities often peak

at the boundaries and corners. For example consider the joint distribution of two stock returns, their dependence structure is often dominated by the co-movements of their extreme

tails, giving rise to a copula density that peaks at either end of the diagonal of the unit

square. In this case, a nonparametric estimate, say a kernel estimate, of the copula density

without proper boundary bias corrections may significantly underestimate the degree of tail

dependence between the two stocks.

In this study, we adopt the ESE to estimate copula densities. This estimator has some

appealing properties that make it suitable for copula density estimations. The prominent

advantage is that the ESE is explicitly defined on a bounded support. With optimally

selected basis functions (by data-driven methods), the estimators are free of boundary bias.

This is particularly useful for estimation of copula densities that often peak at the boundaries

and corners. In addition, the ESE adapts automatically to the unknown smoothness of

the underlying copula density, and unlike the higher order kernel and series estimators, it

guarantees the strict positiveness of the density estimate.

P

Define a multi-index i = (i1 , i2 , . . . , id ), and |i| = dj=1 ij . Given two multi-indices i and

m, i ≥ m indicates ij ≥ mj elementwise; when m is a scalar, i ≥ m means ij ≥ m for all j.

Recall that the multivariate ESE of a copula density can be derived from the maximization of

Shannon’s entropy of the copula density subject to some given moment conditions. Suppose

12

P

m = (m1 , . . . , md ) and M ≡ {i : |i| > 0 and i ≤ m}. Let {µ̂i = n−1 nt=1 gi (ut ) : i ∈

M} be a set of moment conditions for a copula density, where gi ’s are a sequence of realvalued, bounded and linearly independent basis functions defined on [0, 1]d . The ESE of the

corresponding copula density can be obtained by maximizing Shannon’s entropy

Z

−c(u) log c(u)du,

(6)

H=

[0,1]d

subject to the integration to unity condition

Z

c(u)du = 1

(7)

[0,1]d

and side moment conditions

Z

gi (u) c(u) du = µ̂i ,

i ∈ M,

(8)

[0,1]d

where du = du1 du2 · · · dud for simplicity.

The estimated multivariate copula density is then given by

ĉ(u; λ̂) = exp (

X

λ̂i gi (u) − λ̂0 )

(9)

i∈M

where

Z

λ̂0 = log (

exp (

[0,1]d

X

λ̂i gi (u))du).

(10)

i∈M

Note that λ̂ is finite due to the boundedness of the domain of copula functions and that of

the basis functions. Given the estimated marginal density functions, the multivariate density

of the original data is then estimated by

d

Y

ˆ

f (x) = ( fˆj (xj ))ĉ(F̂ (x); λ̂),

(11)

j=1

where F̂ (x) = (F̂1 (x1 ), . . . , F̂d (xd )).

3.2

Asymptotic Properties of Two-Stage Multivariate ESE

In this section, we present the large sample properties of the proposed transformation-based

estimator of multivariate densities. The copula decomposition transforms a joint density

13

into the product of marginal densities and a copula density. Thus a discrepancy measure

in terms of the logarithm of densities is particularly useful since we can then transform the

said product into a sum of log densities. It transpires that the Kullback-Liebler Information

Criterion (KLIC), discussed in the previous section, is a natural candidate for this task.

Below we shall establish the convergence rates of the transformation-based estimator

in terms of the KLIC. Denote f (x) = f1 (x1 ) · · · fd (xd )cf (F1 (x1 ), . . . , Fd (xd )), where fj and

Fj , j = 1, . . . , d are marginal densities and distributions, and cf is the copula density for

the joint distribution F (x). Similarly, let g(x) = g1 (x1 ) · · · gd (xd )cg (G1 (x1 ), . . . , Gd (xd )),

where gj and Gj , j = 1, . . . , d are marginal densities and distributions, and cg is the copula

density for the joint distribution G(x). We first present a theorem that decomposes the

KLIC between f and g as the sum of KLICs of their components.

Theorem 2 Suppose F and G are continuous distributions defined on a common support

with densities f and g respectively. We then have

D(f ||g) =

d

X

D(fj ||gj ) + D(cf ||c̃g ),

j=1

where c̃g (u1 , . . . , ud ) = cg (G1 (F1−1 (u1 )), . . . , Gd (Fd−1 (ud ))) for (u1 , . . . , ud ) ∈ [0, 1]d .

Remark 5 Analogous to the decomposition of entropy in Theorem 1, Theorem 2 suggests

that the KLIC between two multivarirate densities can be expressed as the sum of the KLICs

between individual marginal distributions and a term that resembles the KLIC between two

copula densities, although c̃g is generally not a copula density function. Only under the

condition that Gj = Fj for all js, c̃g is a copula density. Nonetheless, this distinction is

negligible asymptotically for our analysis below, in which F̂j plays the role of Gj , which

converges to Fj asymptotically so long as F̂j is a consistent estimate of Fj .

Remark 6 There are two note-worthy special cases of Theorem 2. When the two distributions share common marginal distributions, or fj = gj for all j’s, D(fj ||gj ) = 0 and c̃g = cg .

It follows that D(f ||g) = D(cf ||cg ). On the other hand, when the two distributions share

P

a common copula density, or cf = cg , we have D(f ||g) = dj=1 D(fj ||gj ) + D(cf ||c̃f ), with

c̃f (u1 , . . . , ud ) = cf (G1 (F1−1 (u1 )), . . . , Gd (Fd−1 (ud ))). Thus it is seen that the ‘reminder’ term

attributed to the copula densities generally exist even when two distributions share a common

copula function, so long as their marginal distributions differ.

Theorem 2 indicates that one can derive the convergence rate of the estimator given

by (11) as a sum of the convergence rates of its components. To facilitate our asymptotic

analysis in terms of convergence in the KLIC, we focus on the following estimation strategy:

14

1. The marginal densities are estimated by the ESE. For j = 1, . . . , d, we have

fˆj (xj ) = exp

lj

X

λ̂j,i gi (xj ) − λ̂j,0 ,

(12)

i=1

where gi ’s are a series of linearly dependent, bounded real-valued basis functions defined

on the support of xj .

2. The marginal distributions are estimated by their corresponding empirical CDFs. To

ease notation, we denote uj = Fj (xj ) and ûj = F̂j (xj ).

3. The copula density c is estimated by the ESE of the form

!

ĉ(u) = exp

X

λ̂i gi (u) − λ̂0

,

(13)

i∈M

where gi ’s are a series of linearly dependent, bounded real-valued functions defined on

[0, 1]d , and M = {i : |i| > 0, i ≤ m and m = {m1 , m2 , . . . , md }}.

Only the third condition is essential to our analysis. The first two conditions are assumed

to ease our exposition. We stress that the above estimators for the marginal densities and

distributions can be replaced by other suitable estimators in our theoretical analysis if we

examine the convergence in terms of other criteria than the KLIC. As is discussed above, in

practice, the marginal quantities can be estimated by any reasonable estimators.

The following conditions are assumed for the rest of this section.

Assumption 1 The observed data X 1 = [X11 , X21 , . . . , Xd1 ], X 2 = [X12 , X22 , . . . , Xd2 ], . . . ,

X n = [X1n , X2n , . . . , Xdn ] are i.i.d. random samples from a continuous distribution F defined

on a bounded support, with joint density f , marginal density fj and marginal distribution Fj

for j = 1, . . . , d.

(s −1)

Assumption 2 For each j in 1, . . . , d, let qj (xj ) = log fj (xj ). qj j (·) is absolutely conR (s )

tinuous and (qj j (xj ))2 dxj < ∞, where sj is a positive integer greater than 1.

Assumption 3 lj → ∞, lj3 /n → 0 when gi ’s are the power series and lj2 /n → 0 when gi ’s

are the trigonometric series or splines, as n → ∞.

Assumption 4 Let qc (u) = log c(u) . For nonnegative integers rj ’s, j = 1, . . . , d, define

P

(r)

(r−1)

qc (u) = ∂ r c(u)/∂ r1 u1 · · · ∂ rd ud , where r = dj=1 rj . qc

(u) is absolutely continuous and

R (r)

2

(qc (u)) du < ∞ for r > d.

15

Q

Q

Q

Assumption 5 dj=1 mj → ∞, dj=1 m3j /n → 0 when gi ’s are the power series and dj=1 m2j /n →

0 when gi ’s are the trigonometric series or splines, as n → ∞.

The convergence rate of the marginal densities is given by Barron and Sheu (1991). To

ease references and for the sake of completeness, it is given below.

Theorem 3 Under Assumptions 1, 2 and 3, the estimated marginal density fˆj , j = 1, . . . , d,

given by (12) converges to fj in terms of the KLIC such that

−2s

D(fj ||fˆj ) = Op (lj j + lj /n),

as n → ∞.

Next we derive the convergence rate of the ESE of a copula density. Suppose for now that

the marginal distributions are known such that uj,t (Xj,t ) = Fj (xj,t ). We can then establish

the convergence rate of the copula density estimator ĉ(u) based on uj,t ’s:

Theorem 4 Under Assumptions 1, 4 and 5, the copula density estimator given by (13)

converges to the copula density c in terms of the KLIC at rate

D(c||ĉ) = Op

d

Y

−2r

mj j

j=1

+

d

Y

!

mj /n ,

j=1

as n → ∞.

Lastly since uj,t ’s are unknown, they need to be replaced by their corresponding estimates.

Let ûj,t (Xj,t ) = F̂j (Xj,t ), j = 1, . . . , d, t = 1, . . . , n. Denote the ESE copula density estimator

based on û by ĉ(û). Our joint density estimator is then given by

fˆ(x) =

d

Y

fˆj (xj )ĉ(û),

(14)

j=1

where û = {û1 , . . . , ûd } with ûj = F̂j (xj ) for j = 1, . . . , d. Combining the results of Theorems

3 and 4 via the decomposition given in Theorem 2, we obtain the following result for our

transformation-based density estimator:

Theorem 5 Under Assumptions 1, 2, 3, 4 and 5, the joint density estimator fˆ given by

(14) converges to f in terms of the KLIC with rate

D(f ||fˆ) = Op

!

d

d

d

Y

Y

X

−2s

−2r

{lj j + lj /n} +

mj j +

mj /n ,

j=1

j=1

16

j=1

as n → ∞.

Remark 7 Replacing u by û in the estimation of the empirical copula function does not

affect the final convergence rate because û, as the empirical CDF, has an n−1/2 convergence

rate and therefore faster than any nonparametric rate.

Remark 8 The optimal convergency rates for the marginal densities are obtained if we

set lj = O(n1/(2sj +1) ), leading to a convergence rate of Op (n−2sj /(2sj +1) ) in terms of the

KLIC for each j = 1, . . . , d. Similarly, the optimal convergence rate for the copula density is Op (n−2r/(2r+d) ) if we set mj = O(n1/(2r+d) ) for

each j. It follows that the optimal

P

d

−2sj /(2sj +1)

−2r/(2r+d)

=

convergence rate of the joint density is given by Op ( j=1 n

)+n

−2sj /(2sj +1)

−2r/(2r+d)

. Thus the best possible rate of convergence is either

Op maxj (n

)+n

the slowest convergence rate of the marginal densities or that of the copula density. Usually

the convergence rates of multivariate density estimations are slower than those of univariate cases. In our case, the convergence rate of the copula density is the binding rate unless

sj < r/d for at least one j ∈ 1, . . . , d; namely unless the degree of smoothness of at least one

marginal density is especially low (relative to that of the copula density).

3.3

Model specification

The selection of smoothing parameters plays a crucial role in nonparametric estimations. In

series estimations, the number of basis functions is the smoothing parameter. The asymptotic

analysis presented above is invariant to the choice of basis function space; on the other hand,

it does not provide guidance on the actual selection of basis functions within a certain basic

function space. In this subsection, we present a practical strategy of model specification.

One manifestation of the curse of dimensionality in nonparametric estimations is that

the number of nuisance parameters increases rapidly with the dimension of the problem. For

instance, consider the d-dimensional multi-index set M = {i : 0 < |i| ≤ m}, where m is a

positive integer. Denote the number of elements of the set M by #(M). One can show

− 1. A complete subset selection, which entails 2#(M) estimations, is

that #(M) = m+d

d

prohibitively expensive. The computational cost is even more severe for the ESE, which

requires numerical integrations in multiple dimensions.

One practical strategy is to use stepwise algorithms. Denote Gm = {i : |i| = m} , m =

1, . . . , M . We consider a hierarchical basis function selection procedure. Starting with G1 ,

we keep only basis functions whose coefficients are statistically significant in terms of their

17

2

t-statistics.

n Denote

o the significant subset of G1 by Ĝ1 . We next estimate an ESE using basis

functions Ĝ1 , G2 , and eliminate elements of G2 that are not statistically significant, leading

n

o

to a ‘degree-two’ significant set Ĝ1 , Ĝ2 . This procedure is repeated for m = 3, . . . , M ,

n

o

yielding a set of significant basis functions Ĝ1 , . . . , ĜM along the way. Denote by fˆm an

n om

c

ESE using basis functions Mm = Ĝj

, m = 1, . . . , M . One can then select a preferred

j=1

model according to an information criterion, such as the AIC or BIC, or the method of cross

validation or generalized cross validation.

There exists, however, a practical difficulty associated with the above stepwise selection

of basis functions. That is, for d ≥ 2, the number of elements of Gm increases with m (as

well as with d) rapidly. One can show that #(G)m = m+d−1

. For instance, with d = 4,

d−1

#(G)m = 4, 10, 20, and 35 respectively for m = 1, . . . , 4. Thus the stepwise algorithm entails

increasingly bigger candidate sets as the selection proceeds. Incorporating a large number

of basis functions indiscriminately is computationally expensive. At the same time, a more

severe consequence is that it may deflate the t-values of informative basis functions, leading

to their omissions from the significant set.

To tackle this problem, we further propose a refinement to the stepwise algorithm. This

method entails a preliminary selection of significant terms at each stage of the stepwise

selection. Let fˆm be the preferred model at stage m of the estimation. Denote

)

n

1X

gi (X t ) : |i| = m + 1 ,

=

n t=1

Z

ˆ

=

gi (x)fm (x)dx : |i| = m + 1 .

(

µ̄m+1

µ̂m+1

Recall that the sample moments associated with given basis functions are sufficient statistics

of the resultant ESE. If µ̄m+1 are well predicted by the ‘one-step-ahead’ estimates µ̂m+1 ,

one can argue that the moments associated

n with basisofunctions Gm+1 are not informative

given the set of moments associated with Ĝ1 , . . . , Ĝm . Consequently, it is not necessary

to incorporate Gm+1 into the estimation.

n

o

In practice, it is more likely that some elements of Gm+1 are informative given Ĝ1 , . . . , Ĝm .

We call these informative elements of Gm+1 its significant subset, denoted by G̃m+1 . Let ρm+1

2

See Delaigle et al. (2011) on the robustness and accuracy of methods for high dimensional data analysis

based on the t-statistics.

18

be the correlation between µ̄m+1 and µ̂m+1 . We estimate the size of G̃m+1 according to

#(G̃m+1 ) = d

p

1 − ρm+1 × #(Gm+1 )e,

(15)

where dae denotes the smallest integer greater than or equal to a.

After calculating #(G̃m+1 ), we need to select members of G̃m+1 from Gm+1 . For this

purpose, we employ the method of subset selection. This method of identifying a significant

subset of a vector variables is to select subsets which are optimal for a given criterion that

measures how well each subset approximates the whole set (see, e.g., McCabe, 1984, 1986;

Cadima and Jolliffe, 2001; Cadima et al, 2004). In particular, we adopt the RM criterion

that measures the correlation between a n × p matrix Z and its orthogonal projection on to

an n × q submatrix Q, where q ≤ p. This matrix correlation is defined as

s

RM (Z, PQ Z) = cor(Z, PQ Z) =

trace(Z T PQ Z)

,

trace(Z T Z)

(16)

where PQ is the linear orthogonal projection matrix onto Q.3 Thus given #(G̃m+1 ), we then

select the preliminary significant subset G̃m+1 as the one that maximizes the RM criterion

given in (16). This procedure is rather fast since it only involves linear operations (see

Cadima et al. 2004 for details in implementing this method).

We conclude this section with a step-by-step description of the proposed model specification procedure.

1. j = 1: Fit an ESE using G1 ; denote by Ĝ1 the subset of G1 with significant t-values; fit

an ESE using Ĝ1 , denote the resultant estimate by fˆ1 .

2. j = 2:

(a) Estimate the size of the preliminary significant subset #(G̃2 ) according to (15);

(b) Select the preliminary significant subset G̃2 of G2 according to (16);

n

o

(c) Estimate an ESE using Ĝ1 , G̃2 ; denote the significant subset of G̃2 by Ĝ2 ;

n

o

(d) Estimate an ESE using Ĝ1 , Ĝ2 ; denote the resultant estimate by fˆ2 .

3

To deal with the dilemma of dimensionality, a typical way is through a principal component analysis

(PCA). However, dimensionality reduction via PCA still involves all of the moments which may lead to

a worse estimation performance in our case since those trivial moments produce extra noise. The subset

selection method is closely related to the PCA. There exists, however, one key difference: the principal

components are linear combinations of all elements of a candidate set, while the subset selection method

only selects the most informative elements.

19

3. Repeat procedure 2 for j = 3, . . . , M to obtain fˆ3 , . . . , fˆM respectively.

n

o

4. Select the best model among fˆ1 , . . . , fˆM according to a given criterion such as the

AIC, BIC, cross validation or generalized cross validation.

4

Monte Carlo Simulation

In this section, we conduct Monte Carlo simulations to investigate the finite sample performance of the proposed estimator. We consider bivariate and tri-variate distributions

constructed as mixtures of normal distributions. In particular, following Wand and Jones

(1993), we construct multivariate normal mixture distributions characterized as uncorrelated

normal, correlated normal, skewed, kurtotic, bimodal I and bimodal II respectively.4

For the sake of comparison, we also evaluate the performance of a direct estimator of

multivariate densities using the KDE. For the transformation-based-estimator, the marginal

densities and distributions are estimated by the KDE, and the copula densities are estimated

via the ESE, for which the highest degree of basis functions is set to four (i.e., M = 4). We

use the polynomial basis functions, and the actual selection of basis functions is conducted

according to the stepwise algorithm described in the previous section. The best model is

then selected according to the AIC. For all KDEs (the marginal densities and distributions

in the two-step estimation, and the direct estimation), the bandwidths are selected using the

least squares cross validation.

Our first example concerns with the estimation of density functions. The sample sizes are

100, 200 and 500, and each experiment is repeated 300 times. The performance is gauged by

the integrated squared errors (ISE) evaluated on [−3, 3]d , d = 2 and 3, with the increment

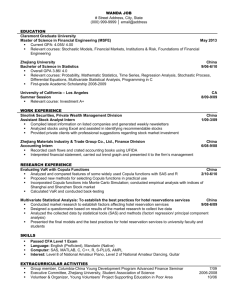

of 0.15 in each dimension. The results are reported in Table 1.

It is seen that in all experiments, the ESE outperforms the KDE. For the bivariate cases

reported in the top panel, the average ratios of the ISE between the ESE and the KDE across

all six distributions are 78%, 75% and 54% respectively when the sample sizes are 100, 200

and 500. The results for the trivariate cases are reported in the bottom panel. The general

pattern of performance remains the same. The corresponding average ISE ratios between

the ESE and the KDE are 69%, 59% and 52%. Overall, the average ISE ratios between

the ESE and the KDE decrease with the sample sizes for a given dimension, and with the

dimension for a given sample size, indicating the superior finite sample performance of the

transformation-based estimator.

4

Details on the distributions investigated in the simulations are given in the Appendix.

20

Table 1: ISE of joint density estimations (KDE: kernel estimator; ESE: exponential series

estimator)

N=100

KDE

ESE

uncorrelated normal

0.0103

(0.0047)

correlated normal 0.0094

(0.0035)

skewed 0.0223

(0.0061)

kurtotic 0.0202

(0.0052)

bimodal I 0.0075

(0.0029)

bimodal II 0.0142

(0.0050)

0.0086

(0.0075)

0.0081

(0.0074)

0.0141

(0.0082)

0.0153

(0.0037)

0.0065

(0.0045)

0.0107

(0.0080)

uncorrelated normal

0.0058

(0.0041)

0.0041

(0.0031)

0.0090

(0.0064)

0.0160

(0.0025)

0.0042

(0.0031)

0.0042

(0.0025)

0.0078

(0.0024)

correlated normal 0.0058

(0.0014)

skewed 0.0132

(0.0032)

kurtotic 0.0218

(0.0027)

bimodal I 0.0059

(0.0015)

bimodal II 0.0072

(0.0018)

N=200

KDE

ESE

d=2

0.0064

0.0052

(0.0026) (0.0050)

0.0058

0.0048

(0.0017) (0.0033)

0.0190

0.0104

(0.0043) (0.0046)

0.0152

0.0129

(0.0042) (0.0023)

0.0049

0.0038

(0.0017) (0.0024)

0.0095

0.0065

(0.0027) (0.0044)

d=3

0.0053

0.0028

(0.0015) (0.0019)

0.0040

0.0024

(0.0008) (0.0011)

0.0098

0.0053

(0.0022) (0.0021)

0.0192

0.0148

(0.0022) (0.0015)

0.0040

0.0023

(0.0008) (0.0014)

0.0051

0.0026

(0.0011) (0.0014)

N=500

KDE

ESE

0.0035

(0.0012)

0.0034

(0.0008)

0.0146

(0.0029)

0.0099

(0.0026)

0.0028

(0.0009)

0.0054

(0.0014)

0.0023

(0.0019)

0.0028

(0.0011)

0.0072

(0.0025)

0.0010

(0.0010)

0.0018

(0.0010)

0.0029

(0.0015)

0.0033

(0.0008)

0.0026

(0.0005)

0.0067

(0.0013)

0.0146

(0.0017)

0.0026

(0.0005)

0.0033

(0.0006)

0.0013

(0.0007)

0.0015

(0.0005)

0.0027

(0.0008)

0.0136

(0.0010)

0.0011

(0.0005)

0.0012

(0.0005)

Our second experiment concerns with the estimation of tail probabilities of multivariate

densities, which is of fundamental importance in many areas of economics, especially in financial economics. In particular, we are interested in the estimation of joint tail probabilities

of multivariate distributions. The tail index of a distribution is given by

Z

T =

f (x)dx,

[−∞,qj ]d

21

where qj ≡ Fj−1 (α), j = 1, · · · , d, and α is a small positive number close to zero. The same

set of distributions investigated in the first experiment are used in the second experiment.

The sample size is 100, and each experiment is repeated 500 times. The 5% and 10% lowertails (i.e., α = 5% and 10%) are considered. The transformation-based estimators and the

KDE’s are estimated in the same manner as in the first experiment. Denote the estimated

densities via the ESE and KDE by fˆESE and fˆKDE respectively. The corresponding estimated

tail indices are given by

Z

T̂ESE =

fˆESE (x)dx,

d

[−∞,qj ]

Z

fˆKDE (x)dx.

T̂KDE =

[−∞,qj ]d

In addition, we also consider an estimator based on the empirical distributions:

T̂EM

( d

)

n

1X Y

=

I(Xt,j ≤ qj ) .

n t=1 j=1

The performance of the tail index estimators is measured by the mean squared errors

(MSE), which is the average squarred difference between the estimated tail index and the

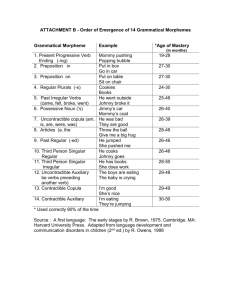

true tail index. The results are reported in Table 2. It is seen that the overall performance

of the ESE is substantially better than that of the other two. For d=2, the average ratio

of the MSE between the ESE and the KDE across six distributions is 37% at 5% marginal

distribution and 53% at 10% marginal distribution respectively, while for d=3 the average

MSE ratio between the ESE and the KDE is 25% and 47% respectively. The average ratio of

MSE between the ESE and the KDE improves with the dimensionality of the sample space.

In addition, the variations of the estimated tail index based on the ESE are considerably

smaller than those of the other two.

5

Empirical Application

In this section, we provide an illustrative example of multivariate analysis using the transformationbased density estimation. We also demonstrate that the copula density, a by-product of the

two-step density estimator, provides useful insight into multivariate dependence structure

that cannot be easily detected by examining the joint densities. We study the joint density of the U.S. and U.K. financial market returns, under various conditions of the Asian

financial markets. Starting from the 1990’s, the Asian financial markets have played an

22

Table 2: MSE of tail probability estimation (EM: empirical estimator; KDE: kernel estimator;

ESE: exponential series estimator)

EM

1.0320

(1.7724)

correlated normal 1.8451

(2.8861)

skewed 1.0076

(1.6747)

kurtotic 1.3930

(2.4307)

bimodal I 0.2045

(0.3951)

bimodal II 0.2095

(0.4327)

α = 5%

KDE

uncorrelated normal

1.7570

(2.3339)

1.3867

(2.4799)

0.7697

(1.2471)

1.1677

(2.0369)

0.3210

(0.5513)

0.3652

(0.5585)

uncorrelated normal

0.1352

(0.2539)

0.2822

(0.6333)

0.1111

(0.2475)

0.3607

(0.6043)

0.0080

(0.0263)

0.0108

(0.0371)

0.1331

(0.4129)

correlated normal 0.5059

(1.2809)

skewed 0.1829

(0.3992)

kurtotic 0.5730

(0.9976)

bimodal I 0.0119

(0.1063)

bimodal II 0.0080

(0.0869)

ESE

EM

d=2

0.2949

2.6780

(0.4981) (4.0155)

0.9333

4.2959

(0.8883) (5.9669)

0.3493

3.0475

(0.4207) (4.2814)

0.6777

2.9170

(0.6613) (4.3901)

0.0483

1.0200

(0.0904) (1.7069)

0.0602

0.9720

(0.0910) (1.4723)

d=3

0.0103

0.4535

(0.0218) (0.8620)

0.0971

1.7460

(0.0616) (2.5603)

0.0296

0.8814

(0.0134) (1.2628)

0.2699

1.8735

(0.0855) (2.8469)

0.0003

0.0996

(0.0007) (0.3228)

0.0004

0.1172

(0.0008) (0.3940)

α = 10%

KDE

ESE

4.6047

(5.7700)

3.2371

(4.5019)

2.7579

(4.0362)

2.5453

(4.2056)

1.5901

(1.9928)

1.5850

(1.9710)

1.1665

(1.9452)

2.7643

(3.0975)

1.8587

(2.1085)

2.3564

(2.1718)

0.3142

(0.5367)

0.3962

(0.7425)

0.6440

(0.8642)

0.9812

(1.7711)

0.5762

(1.0695)

1.2931

(2.1702)

0.0909

(0.1711)

0.0995

(0.2023)

0.0805

(0.1819)

0.5602

(0.5968)

0.4666

(0.2811)

1.4767

(0.9358)

0.0076

(0.0134)

0.0080

(0.0177)

increasingly important role in the global financial system. The purpose of our investigation

is to examine how the Asian markets influence the western markets. The scale and scope

of global financial contagion has been under close scrutiny especially since the 1998 Asian

financial crisis. Therefore we are particularly interested in examining the general pattern of

the western markets under extreme Asian market conditions.

In our investigation, we focus on the monthly stock return indices of S&P 500 (US),

FTSE 100 (UK), Hangseng (HK) and Nikkei 225 (JP) markets. Instead of assuming a

23

specific parametric model, we opt to investigate their relations in a fully nonparametric

manner. We first estimate the joint distribution of the four markets, and then calculate the

joint conditional distribution of the US and UK markets under various conditions of the HK

and JP markets. Our data include the monthly indices of the four markets between February

1978 and May 2006. For each market, we calculate the rate of return Yt by ln Pt − ln Pt−1 .

Following the standard practice, we apply a GARCH(1,1) model to each series and base our

investigation on the standardized residuals. The transformation-based density estimator is

used in our analysis. The marginal densities and distributions are estimated by the kernel

method and the copula density is estimated by the ESE.

Denote the returns for the US, UK, HK and JP markets by Yj , j = 1, . . . , 4 respectively.

After obtaining the joint density, we calculate the joint density of the US and UK markets

conditional on the HK and JP markets:

fˆ (y1 , y2 |(y3 , y4 ) ∈ ∆) ,

where ∆ refers to a given region of the HK and JP distribution. In particular, we consider

three scenarios of the Asian markets:

∆L = {(y3 , y4 ) : Fj−1 (0) ≤ yj ≤ Fj−1 (15%), j = 3, 4},

∆M = {(y3 , y4 ) : Fj−1 (40%) ≤ yj ≤ Fj−1 (60%), j = 3, 4},

∆H = {(y3 , y4 ) : Fj−1 (85%) ≤ yj ≤ Fj−1 (100%), j = 3, 4},

where Fj , j = 3, 4 are the marginal distributions of the HK and JP markets. Thus our analysis

focuses on the joint distributions of the US and UK markets when the Asian markets are in

the low, middle and high regions of their distributions respectively. The conditional copula

density, ĉ(u1 , u2 |(y3 , y4 ) ∈ ∆), of the US and UK markets is obtained in a similar manner.

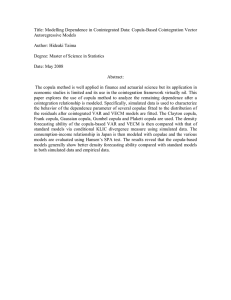

Figure 1 reports the estimated conditional joint densities of the US and UK markets under

various conditions of the Asian markets. For comparison, the unconditional joint density is

also reported. The positive dependence between the two markets is evident under all market

conditions. There is little difference between the unconditional density and the conditional

one when the Asian markets are in the middle region. When the Asian markets are low, the

bulk of US and UK distribution moves visibly to the lower left corner; in contrast, when the

Asian markets are high, the entire distribution shifts toward the upper right corner.

The overall pictures of the joint densities are consistent with the general consensus that

the western markets are influenced by fluctuations in the Asian markets and they tend to

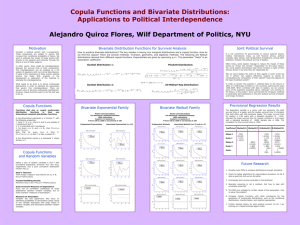

move in similar directions. Next we report in Figure 2 the conditional copula densities of

24

Unconditional density of US and UK

Conditional density of US and UK given distributions of HK and JP between (0, 0.15]

2.5e−05

0.00030

2

2

0.00025

2.0e−05

1

1

0.00020

UK

UK

1.5e−05

0

0

0.00015

1.0e−05

−1

−1

0.00010

5.0e−06

−2

−2

0.00005

−3

−3

0.0e+00

−2

−1

0

1

2

3

0.00000

−2

−1

0

1

2

3

US

US

Conditional density of US and UK given distributions of HK and JP between (0.40, 0.60]

Conditional density of US and UK given distributions of HK and JP between (0.85, 1]

0.00025

2

0.00025

2

0.00020

1

0.00020

0

0.00015

−1

0.00010

0.00005

−2

0.00005

0.00000

−3

1

UK

UK

0.00015

0

0.00010

−1

−2

−3

−2

−1

0

1

2

3

0.00000

−2

US

−1

0

1

2

3

US

Figure 1: Estimated US and UK joint densities (Top left: unconditional; Top right: Asian

market low; Bottom left: Asian market middle; Bottom right: Asian market high)

the US and UK markets under various Asian market conditions and show that additional

insight can be obtained by examining the copula densities. The top left figure reports the

unconditional copula density, which has a saddle shape with a ridge along the diagonal. It

is seen that the positive dependency between the US and UK markets is largely driven by

the co-movements of their tails, and the relationship appears to be symmetric. The bottom

left figure is the conditional copula when the Asian markets are in the middle. The saddle

shape is still visible, with an elated peak on the upper right corner. This result suggests

that when there are little actions in the Asian markets, the US and UK markets are more

likely to perform simultaneously above the average. The upper right figure reports the

conditional copula when the Asian markets are low. The copula density clearly peaks at

the lower left corner, indicating that the US and UK markets have a high joint probability

25

of underperformance. In contrast as indicated by the lower right figure, when the Asian

markets are high, the US and UK markets have a high joint probability of above the average

performance. In addition, what is not clearly visible from the figures is that the peak of the

copula density when the Asian markets are high is considerably higher than that when the

Asian markets are low. Therefore, the copula densities suggest that although the US and

UK markets tend to move together with the Asian markets, the dependence relation between

the western and Asian markets is not symmetric: the relation is stronger when the Asian

markets are high. In this sense, the western market is somewhat resilient against extremely

bad Asian markets.

Unconditional copula of US and UK

Conditional copula of US and UK given distributions of HK and JP between (0, 0.15]

1.0

1.0

0.00035

0.00020

0.00030

0.8

0.8

0.00015

0.00025

0.6

0.6

UK

UK

0.00020

0.00010

0.4

0.00015

0.4

0.00010

0.00005

0.2

0.2

0.00005

0.0

0.00000

0.0

0.2

0.4

0.6

0.8

0.0

1.0

0.00000

0.0

0.2

0.4

US

0.6

0.8

1.0

US

Conditional copula of US and UK given distributions of HK and JP between (0.40, 0.60]

Conditional copula of US and UK given distributions of HK and JP between (0.85, 1]

1.0

1.0

0.00020

8e−04

0.8

0.8

0.00015

6e−04

UK

0.6

UK

0.6

0.00010

4e−04

0.4

0.4

0.00005

2e−04

0.2

0.2

0.0

0.00000

0.0

0.2

0.4

0.6

0.8

0.0

1.0

0e+00

0.0

US

0.2

0.4

0.6

0.8

1.0

US

Figure 2: Estimated US and UK copula densities (Top left: unconditional; Top right: Asian

market low; Bottom left: Asian market middle; Bottom right: Asian market high)

The asymmetric relation between the western and Asian markets revealed in our analysis

of the copula densities prompts us to look into this issue more carefully. Below we calculate

26

some dependence indices that can be obtained readily from the estimated copula densities.

The first one is Kendall’s τ , a rank-based dependence index. This index can be calculated

from a copula distribution as follows:

Z

τ =4

C(u, v)dC(u, v) − 1.

[0,1]2

Although the Kendall’s τ offers some advantages over the linear correlation coefficient, it

does not directly capture the dependence structures at the tails of a distribution, which is

of critical importance in financial economics. Nor does it discriminate between symmetric

and asymmetric dependence. Therefore we also explore the tail dependence structure based

on the tail dependence coefficients (TDC). The upper and lower TDC between two random

variables X and Y are given by

UTD = lim− P r[X > FX−1 (α)|Y > FY−1 (α)] = lim−

α→1

α→1

1 − 2α + C(α, α)

1−α

and

LTD = lim+ P r[X < FX−1 (α)|Y < FY−1 (α)] = lim+

α→0

α→0

C(α, α)

,

α

provided that these limits exist and fall into [0, 1].

We report in Table 3 the estimated Kendall’s τ and tail dependence index of the US

market relative to the UK market, given various conditions of the Asian markets.5 The

Kendall’s τ is higher when the Asian markets are in the middle than in the tails. What

is particularly interesting is the comparison between the lower tail dependence index when

the Asian markets are low and the upper tail dependence index when the Asian markets are

high. The estimated numbers are respectively 0.1057 and 0.2248 when α = 3%, and 0.1746

and 0.3511 respectively α = 5%. These results confirm our visual inspection of the copula

densities that the dependence is stronger when the Asian markets are high. Therefore the

global financial contagions originated from the Asian markets are weaker when the Asian

markets are low relative to when the Asian markets are high.

6

Concluding remarks

Our numerical experiments and empirical examples demonstrate the usefulness of the proposed method, and that valuable insight can be obtained from the estimated copula density,

5

Similar patterns are observed regarding the tail dependence index of the UK market relative to the US

market.

27

Table 3: Conditional Dependence Measures between US and UK under different Asian market conditions

α = 3%

α = 5%

Asian Markets

τ

LTD

UTD

LTD

UTD

0-15%

0.2917 0.1057 0.0111 0.1746 0.0190

40-60%

0.3324 0.0499 0.0479 0.0834 0.0781

85-100%

0.2928 0.0149 0.2248 0.0249 0.3511

a by-product of our transform-based estimator. We expect that the proposed method will

find many useful applications in multivariate analysis, especially in financial economics. We

consider only iid case in this paper. Although it is beyond the scope of the current paper,

extensions of the current paper to accommodate dependent time series will be an interesting

subject for future studies.

28

References

[1] Barron, A.R. and C.H. Sheu, 1991,Approximation of Density Functions by Sequences

of Exponential Families, Annals of Statistics, 19, 1347–1369.

[2] Cadima, J., J. O. Cerdeira, and M. Minhoto, 2004, Computational Aspects of Algorithms for Variable Selection in the Context of Principal Components, Computational

Statistics and Data Analysis, 47, 225–236.

[3] Cadima, J. and I. T. Jollie, 2001, Variable Selection and the Interpretation of Principal

Subspaces, Journal of Agricultural, Biological, and Environmental Statistics, 6, 62–79.

[4] Chen, X. and Y. Fan, 2006a, Estimation of Copula-based Semiparametric Time Series

Models, Journal of Econometrics, 130, 307–335.

[5] Chen, X. and Y. Fan, 2006b, Estimation and Model Selection of Semiparametric Copulabased Multivariate Dynamic Models under Copula Misspecification, Journal of Econometrics, 135, 125–154.

[6] Chen, X., Y. Fan and V. Tsyrennikov, 2006, Efficient Estimation of Semiparametric Multivariate Copula Models, Journal of the American Statistical Association, 101,

1228–1240.

[7] Chui, C. and X. Wu, 2009, Exponential Series Estimation of Empirical Copulas with

Application to Financial Returns, Advances in Econometrics, 25, 263–290.

[8] Csiszár, I., 1975, I-divergence geometry of probability distributions and minimization

problems. Annals of Probability, 3, 146-158.

[9] Crain, B. R., 1974, Estimation of Distributions Using Orthogonal Expansion, Annals of

Statistics, 2, 454–463.

[10] Delaigle, A., P. Hall and J. Jin, 2011, Robustness and Accuracy of Methods for High Dimensional Data Analysis Based on Student’s t-statistic, Journal of the Royal Statistical

Society, Series B, 73, 283–301.

[11] Good, I. J., 1963, Maximum Entropy for Hypothesis Formulation, Especially for Multidimensional Contingency Tables, Annals of Mathematical Statistics, 34, 911–934.

[12] Hall, P. and N. Neumeyer, 2006, Estimating a Bivariate Density When There Are Extra

Data on One or Both Components, Biometrika, 93, 439–450.

29

[13] Jaynes, E. E., 1957, Information Theory and Statistical Mechanics, Physical Review,

106, 620–630.

[14] Kooperberg, C. and C. J. Stone, 1991, A Study of Logspline Density Estimation, Computational Statistics and Data Analysis, 12, 327–347.

[15] Li, Q. and J. Racine, 2007, Nonparametric Econometrics: Theory and Practice, Princeton University Press, New Jersey.

[16] McCabe, G. P., 1984, Principal variables, Technometrics, 26, 137–144.

[17] McCabe, G. P., 1986, Prediction of principal components by variables subsets, Technical

Report, 86-19, Department of Statistics, Purdue University.

[18] Nelsen, R. B., 2006, An Introduction to Copulas, 2nd Edition, Springer-Verlag, New

York.

[19] Neyman, J., 1937, Smooth Test for Goodness of Fit, Scandinavian Aktuarial, 20, 149–

199.

[20] Patton, A. J., 2006, Estimation of Multivariate Models for Time Series of Possibly

Different Lengths, Journal of Applied Econometrics, 21, 147–173.

[21] Ruppert, D., and D. B. H. Cline, 1994, Bias Reduction in Kernel Density-Estimation

by Smoothed Empirical Transformations, Annals of Statistics, 22, 185–210.

[22] Sklar, A., 1959, Fonctions De Repartition a n Dimensionset Leurs Mrges, Publ. Inst.

Statis. Univ. Paris, 8, 229–231.

[23] Stone, C. J., 1982, Optimal Global Rates of Convergence for Nonparametric Regression,

Annals of Statistics, 10, 1040–1053.

[24] Stone, C. J., 1990, Large-sample Inference for Log-spline Models, Annals of Statistics,

18, 717–741.

[25] Wand, M. P., J. S. Marron, and D. Ruppert, 1991, Transformations in Density Estimation, Journal of the American Statistical Association, 86, 343–361.

[26] Wand, M. P. and M. C. Jones, 1993, Comparison of Smoothing Parameterizations in

Bivariate Kernel Density Estimation, Journal of the American Statistical Association,

88, 520–528.

30

[27] Wu, X., 2003, Calculation of Maximum Entropy Densities with Application to Income

Distribution, Journal of Econometrics, 115, 347–354.

[28] Wu, X., 2010, Exponential Series Estimator of Multivariate Density, Journal of Econometrics, 156, 354–366.

[29] Yang, L. and J. S. Marron, 1999, Iterated Transformation-kernel Density Estimation,

Journal of the American Statistical Association, 94, 580–589.

[30] Zellner, A., and R. A. Highfield, 1988, Calculation of Maximum Entropy Distribution

and Approximation of Marginal Posterior Distributions, Journal of Econometrics, 37,

195–209.

31

Appendix A: Technical Proofs

Proof of Theorem 1. For simplicity, below we only present the proof for the case of

d = 2. Extensions to the more general d > 2 cases follow immediately.

Given f (x) = f1 (x1 )f2 (x2 )c(F1 (x1 ), F2 (x2 )), through the copula decomposition, we have

Z

H(f ) =

f (x1 , x2 ) log f1 (x1 )f2 (x2 )c (F1 (x1 ), F2 (x2 )) dx1 dx2

Z

Z

= f (x1 , x2 )dx2 log f1 (x1 )dx1 + f (x1 , x2 )dx1 log f2 (x2 )dx2

Z

+ f1 (x1 )f2 (x2 )c (F1 (x1 ), F2 (x2 )) log c (F1 (x1 ), F2 (x2 )) dx1 dx2 .

The first term on the right hand side simplifies to

Z

f1 (x1 ) log f1 (x1 )dx1 = H(f1 ).

Similarly, the second term equals H(f2 ). By change of variables u = F1 (x1 ) and v = F2 (x2 ),

the third term can be written as

Z

f1 (F1−1 (u))f2 (F2−1 (v))c(u, v) log c(u, v)dF1−1 (u)dF2−1 (v)

Z

= c(u, v) log c(u, v)dudv

=H(c),

which completes the proof.

Proof of Theorem 2. For simplicity, we only present the proof for the case of d = 2.

Extensions to the more general d > 2 cases follow immediately. By definition,

Z

f1 (x1 )f2 (x2 )cf (F1 (x1 ), F2 (x2 ))

dx1 dx2

g1 (x1 )g2 (x2 )cg (G1 (x1 ), G2 (x2 ))

Z

Z

f1 (x1 )

f2 (x2 )

= f (x1 , x2 ) log

dx1 dx2 + f (x1 , x2 ) log

dx1 dx2

g1 (x1 )

g2 (x2 )

Z

cf (F1 (x1 ), F2 (x2 ))

+ f (x1 , x2 ) log

dx1 dx2 .

cg (G1 (x1 ), G2 (x2 ))

D(f ||g) =

f (x1 , x2 ) log