DATA QUALITY PROBLEMS IN ARMY LOGISTICS: Classification, Examples,

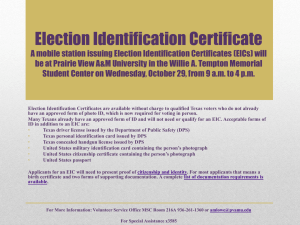
