R Concepts for Enhancing Critical Infrastructure Protection

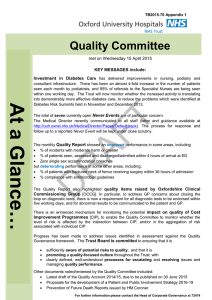
