Objective Bayesian Survival Analysis Using Shape Mixtures of Log-Normal Distributions


Catalina A. Vallejos and Mark F.J. Steel

Department of Statistics, University of Warwick ∗

Abstract

Survival models such as the Weibull or log-normal lead to inference that is not robust to

the presence of outliers. They also assume that all heterogeneity between individuals can be

modelled through covariates. This article considers the use of infinite mixtures of lifetime

distributions as a solution for these two issues. This can be interpreted as the introduction of

a random effect in the survival distribution. We introduce the family of Shape Mixtures of

Log-Normal distributions, which covers a wide range of shapes. Bayesian inference under

non-subjective priors based on the Jeffreys rule is examined and conditions for posterior

propriety are established. The existence of the posterior distribution on the basis of a sample of point observations is not always guaranteed and a solution through set observations is

implemented. This also accounts for censored observations. In addition, a method for outlier detection based on the mixture structure is proposed. Finally, the analysis is illustrated

using a real dataset.

Keywords: Jeffreys prior; Outlier detection; Posterior existence; Set observations

∗ Corresponding author: Mark Steel, Department of Statistics, University of Warwick, Coventry, CV4 7AL, UK; email: M.F.Steel@stats.warwick.ac.uk. Catalina Vallejos acknowledges funding through a Warwick Postgraduate Research Scholarship of the University of Warwick and a grant from the Department of Statistics of the Pontificia Universidad Católica de Chile.

CRiSM Paper No. 13-01, www.warwick.ac.uk/go/crism

1 Introduction

Standard survival models can be criticized in two aspects. Firstly, they often assign low probabilities to “rare” or “tail” events. In real datasets such outcomes typically occur more often than predicted by standard models. In fact, as was argued in Barros et al.

(2008), models such as Weibull or log-normal do not produce inference that is robust to outlying observations. This situation implies the need to develop distributions with more flexible

tails. The second, related, criticism is concerned with the typical assumption that the survival

times correspond to realizations of random variables T1 , . . . , Tn which have the same distribution (possibly depending on a set of covariates). However, as was argued by Marshall and Olkin

(2007), this is not always realistic because there are often specific individual factors that result

in heterogeneity of the survival times which cannot be captured by covariates.

We consider the use of infinite mixtures of lifetime distributions as a solution for these two

issues. This idea has been mentioned by previous authors (e.g. Marshall and Olkin, 2007), but

is not yet much used in applied work. Mixture modeling can be interpreted as the introduction

of a random effect on the survival distribution. In particular, this article explores the Shape

Mixture of Log-Normal (SMLN) distributions for which the shape parameter is assumed to be a

random quantity. This new class covers a wide range of shapes, in particular cases with fatter

tail behaviour than the log-normal. It includes the already studied log-Student t, log-Laplace,

log-exponential power and log-logistic distributions among others. This paper puts the earlier

literature into a common framework (which is more general, as other distributions can also be

defined) and provides a basis for the exploration of properties and the development of objective

Bayesian inference methods, which should be attractive for practitioners.

Section 2 introduces the use of mixture families of distributions with particular focus on the

SMLN family. Covariates are introduced via an Accelerated Failure Times model. Section 3

analyzes aspects of Bayesian inference for models in the SMLN family. This addresses the existence of censored observations. Non-subjective priors based on the Jeffreys rule are proposed

and conditions for the existence of the posterior distribution are provided. In addition, we highlight that the existence of the posterior distribution of the parameters on the basis of a sample

of point observations is not always guaranteed and a solution based on set observations is

proposed. Section 3 also discusses some implementation details and proposes a method for

outlier detection that exploits the mixture structure. Bayesian inference with the SMLN family

is illustrated in Section 4 through the use of a real dataset. Finally, Section 5 concludes. All the

proofs are contained in Appendix A without mention in the text.

2 Mixtures of life distributions

Let T1 , . . . , Tn be positive continuous random variables representing the survival times of n

independent individuals. Usually T1 , . . . , Tn are assumed to have the same distribution (possibly


depending on a set of covariates). However, this is not appropriate in the face of unobserved

heterogeneity between the survival times. This heterogeneity can be interpreted as the existence

of specific factors for each individual that cannot be observed. The lack of homogeneity on the

survival times can also be related to the presence of outlying observations. This paper considers

the use of mixtures of life distributions in order to represent unobserved heterogeneity and add

robustness to the presence of outliers. The distribution of Ti is defined as a mixture of life

distributions, if and only if its density function is given by

$$ f(t_i \mid \psi, \theta) \equiv \int_{L} f(t_i \mid \psi, \Lambda_i = \lambda_i) \, dP_{\Lambda_i}(\lambda_i \mid \theta), \tag{1} $$

where f (·|ψ, Λi = λi ) represents the density function associated with a lifetime distribution

which depends on the value of ψ and λi . This can be interpreted as the underlying distribution

of the survival time. Moreover, λi can be understood as a random effect associated with each

individual, which in the context of survival analysis is often called a frailty term. The mixing distribution has cumulative distribution function PΛi (·|θ) with support L and depends on a parameter

θ. If L is a finite set of values, the distribution of Ti is a finite mixture of life distributions. However, here we focus on the case in which Λi is a continuous positive random variable (usually

L = R+ ), in which case f (·|ψ, θ) can be interpreted as an infinite mixture of densities.

The intuition behind traditional parametric models carries over to these mixture models. In

fact, conditional on the mixing parameters the underlying distribution applies. The latter may

be underpinned by theoretical or practical reasons. For instance, the log-normal distribution

arises as the limiting distribution when additive cumulative damage is the cause of the death or

failure. Whereas we focus on mixtures generated from log-normal distributions, a large number

of mixture families can be generated by (1). For example, Jewell (1982) explores mixtures

of exponential and Weibull distributions. Barros et al. (2008) and Patriota (2012) consider an

extended class of Birnbaum-Saunders distributions that can be represented as in (1). The latter

family is motivated by models for crack extensions where the mixing distribution accounts for

dependence between the cracks.

2.1 The family of Shape Mixtures of Log-Normals

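Definition 1. A random variable $T_i$ has a distribution in the family of Shape Mixtures of Log-Normals (SMLN) if and only if its density can be represented as

$$ f(t_i \mid \mu, \sigma^2, \theta) = \int_{L} f_{LN}\!\left(t_i \,\Big|\, \mu, \frac{\sigma^2}{\lambda_i}\right) dP_{\Lambda_i}(\lambda_i \mid \theta), \quad t_i > 0,\ \mu \in \mathbb{R},\ \sigma^2 > 0,\ \theta \in \Theta, \tag{2} $$

where $f_{LN}(\cdot \mid \mu, \sigma^2/\lambda_i)$ corresponds to the density of a log-normal distribution with parameters $\mu$ and $\sigma^2/\lambda_i$, and $\lambda_i$ is an observed value of a random variable $\Lambda_i$ which has distribution function $P_{\Lambda_i}(\cdot \mid \theta)$ defined on $L \subseteq \mathbb{R}_+$ (possibly discrete). Denote $T_i \sim SMLN_P(\mu, \sigma^2, \theta)$. Alternatively, (2) can be expressed as a hierarchical representation which corresponds to

$$ T_i \mid \mu, \sigma^2, \Lambda_i = \lambda_i \sim LN\!\left(\mu, \frac{\sigma^2}{\lambda_i}\right), \qquad \Lambda_i \mid \theta \sim P_{\Lambda_i}(\cdot \mid \theta). \tag{3} $$

Table 1: Some distributions included in the SMLN family. $f_{PS}(\cdot \mid \delta)$ denotes the density of a positive stable random variable with parameter $\delta$.

| Distribution | Density $f(t_i)$ | Mixing density |
|---|---|---|
| Discrete mixture | $\sum_{j=1}^{q} p_j f_{LN}\left(t_i \mid \mu, \sigma^2/\lambda_j\right)$, $\sum_{j=1}^{q} p_j = 1$, $0 \le p_j \le 1$ | Discrete with finite support |
| Log-Student t | $\frac{\Gamma(\nu/2+1/2)}{\Gamma(\nu/2)} \frac{1}{\sqrt{\pi \sigma^2 \nu}} \frac{1}{t_i} \left[1 + \frac{(\log(t_i)-\mu)^2}{\sigma^2 \nu}\right]^{-\left(\frac{\nu}{2}+\frac{1}{2}\right)}$, $\nu > 0$ | Gamma($\nu/2$, $\nu/2$) |
| Log-Laplace | $\frac{1}{2\sigma} \frac{1}{t_i} \exp\left\{-\frac{\lvert \log(t_i)-\mu \rvert}{\sigma}\right\}$ | Inv-Gamma(1, 1/2) |
| Log-exponential power | $\frac{\alpha}{2\sigma\Gamma(1/\alpha)} \frac{1}{t_i} \exp\left\{-\left(\frac{\lvert \log(t_i)-\mu \rvert}{\sigma}\right)^{\alpha}\right\}$, $\alpha \in (1, 2)$ | $\frac{\Gamma(3/2)}{\Gamma(1+1/\alpha)} \lambda_i^{-1/2} \times f_{PS}(\lambda_i \mid \alpha/2)$ |
| Log-logistic | $\frac{1}{\sigma e^{\mu}} \frac{(t_i/e^{\mu})^{1/\sigma - 1}}{\left[1 + (t_i/e^{\mu})^{1/\sigma}\right]^2}$ | $\lambda_i^{-2} \sum_{k=0}^{\infty} (-1)^k (1+k)^2 e^{-\frac{(1+k)^2}{2\lambda_i}}$ |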
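The hierarchical representation (3) translates directly into a simulation recipe: draw the mixing variable, then draw a log-normal with shape $\sigma^2/\lambda_i$. The following is a minimal sketch under stated assumptions, not code from the paper. The function names (`sample_smln`, `gamma_mixing`, `inv_gamma_mixing`) are hypothetical, and only the Gamma($\nu/2$, $\nu/2$) and Inv-Gamma(1, 1/2) mixing distributions of Table 1 are covered.

```python
import math
import random


def sample_smln(mu, sigma2, draw_lambda, rng):
    """Draw one T from (3): Lambda ~ P, then T | Lambda = lam ~ LN(mu, sigma2 / lam)."""
    lam = draw_lambda(rng)
    z = rng.gauss(0.0, 1.0)
    return math.exp(mu + math.sqrt(sigma2 / lam) * z)


def gamma_mixing(nu):
    # Gamma(nu/2, nu/2) in shape/rate form gives the log-Student t (Table 1).
    # random.gammavariate takes shape and *scale*, hence scale = 2 / nu.
    return lambda rng: rng.gammavariate(nu / 2.0, 2.0 / nu)


def inv_gamma_mixing():
    # Inv-Gamma(1, 1/2): reciprocal of a Gamma(shape=1, rate=1/2) draw,
    # which gives the log-Laplace (Table 1).
    return lambda rng: 1.0 / rng.gammavariate(1.0, 2.0)


# Illustrative run with nu = 5. For very small nu the tails are so heavy
# that math.exp may overflow, so such values are avoided here.
rng = random.Random(1)
draws = sorted(sample_smln(0.0, 1.0, gamma_mixing(5.0), rng) for _ in range(20000))
sample_median = draws[len(draws) // 2]  # should be close to exp(mu) = 1
```

Because log(T) is symmetric about µ for any mixing distribution, the sample median of the draws should sit near exp(µ), which gives a quick sanity check on the sampler.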

The SMLN family can be interpreted as an infinite mixture of log-normal distributions with

random shape parameter or as the exponential transformation of a random variable distributed

as a scale mixture of normals. This family includes a number of distributions that have been

proposed in the context of survival analysis. Table 1 summarizes some of them. In particular,

the log-Student t distribution was introduced by Hogg and Klugman (1983) and used in e.g. McDonald and Butler (1987) and Cassidy et al. (2009). However, we are not aware of any previous

work that explored theoretical results and properties. In the special case of ν = 1, Lindsey

et al. (2000) applied it in the context of pharmacokinetic data. The log-Laplace appeared in

Uppuluri (1981) and the log-exponential power was introduced in Vianelli (1983) and was used

in Martín and Pérez (2009) and Singh et al. (2012). Martín and Pérez (2009) use a mixture of

uniforms representation, which also allows for thinner tails than the log-normal (α < 1). Here,

however, we only consider distributions with fatter tails, in line with most of the empirical evidence in survival analysis. Finally, the log-logistic distribution was introduced by Shah and

Dave (1963) and is used regularly in survival analysis, hydrology and economics. This list can

be increased by varying the mixing distribution. For example, all the mixing distributions used

for scale mixtures of normals listed in Fernández and Steel (2000) can be used in this context.

For identifiability reasons, the mixing distribution must not have unknown scale parameters. As

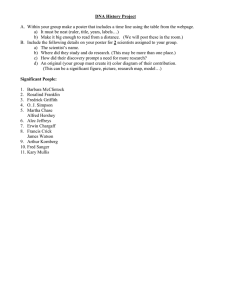

illustrated in Figure 1, the SMLN family allows for a wide variety of shapes for the density.

The hazard function can be interpreted as the instantaneous rate of failure given that the event

4

CRiSM Paper No. 13-01, www.warwick.ac.uk/go/crism

(a)

(b)

f(t)

0.4

0.6

0.8

µ=0,σ2=0.5

µ=0,σ2=1

µ=0,σ2=4

0.0

0.2

0.4

0.0

0.2

f(t)

0.6

0.8

µ=0,σ2=1,ν=1

µ=0,σ2=1,ν=5

0

1

2

3

4

0

1

t

2

3

(c)

(d)

µ=0,σ =0.5,α=1.2

µ=0,σ2=2,α=1.2

µ=0,σ2=2,α=1.6

µ=0,σ2=0.5

µ=0,σ2=2

0.8

0.6

f(t)

0.0

0.2

0.4

0.0

0.2

0.4

0.6

0.8

2

f(t)

4

t

0

1

2

3

4

0

t

1

2

3

4

t

Figure 1: Density function of some models in the SMLN family. (a) Log-Student t, (b) log-Laplace, (c)

log-exponential power, (d) log-logistic. The solid line corresponds to a log-normal(0, 1) density.

did not happen before. For the SMLN family it is generally not possible to obtain an analytic

formula for the hazard function, but an approximation can be obtained using numerical integration. Figure 2 illustrates that the shape of the hazard rates is also flexible. For example, while

the hazard rate of the log-normal distribution has an increasing initial phase, the log-Laplace

and log-logistic distributions produce a monotone decreasing hazard rate for some values of σ 2 .
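The numerical approximation of the hazard mentioned above can be sketched for the log-Student t case, where the density is available in closed form (Table 1) and only the survival function needs integration. This is an illustrative sketch, not the authors' implementation. The helper names and the choice of a composite Simpson rule are assumptions made here.

```python
import math


def student_t_pdf(z, nu):
    """Standard Student t density with nu degrees of freedom."""
    log_c = (math.lgamma((nu + 1) / 2) - math.lgamma(nu / 2)
             - 0.5 * math.log(nu * math.pi))
    return math.exp(log_c - (nu + 1) / 2 * math.log1p(z * z / nu))


def simpson(f, a, b, n=2000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if a == b:
        return 0.0
    h = (b - a) / n  # negative when b < a, which yields a signed integral
    total = f(a) + f(b)
    for j in range(1, n):
        total += (4 if j % 2 else 2) * f(a + j * h)
    return total * h / 3


def log_student_t_hazard(t, mu, sigma2, nu):
    """h(t) = f(t) / S(t) for T ~ log-Student t(mu, sigma2, nu)."""
    sigma = math.sqrt(sigma2)
    z = (math.log(t) - mu) / sigma
    density = student_t_pdf(z, nu) / (sigma * t)
    # By symmetry of the t density, S(t) = 1/2 - int_0^z pdf(u) du.
    survival = 0.5 - simpson(lambda u: student_t_pdf(u, nu), 0.0, z)
    return density / survival
```

For ν = 1 the survival function has the closed form 1/2 − arctan(z)/π, which can be used to check the integration. For very large t the subtraction 1/2 − ∫ loses precision, so a tail-specific integration rule would be preferable there.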

Table 2 groups some properties of the log-normal and various SMLN distributions. It is

clear that the tails of all the examples of SMLN with continuous mixing distributions are fatter

than those of the log-normal distribution, and we also see that behaviour of the density function

close to zero can be quite different. Whereas all positive moments exist for the log-normal, this

is not necessarily the case for the shape mixtures: in particular, we can show that no positive

moments exist for the log-Student t for any finite value of ν. The log-Laplace and log-logistic

models only allow for moments up to 1/σ. The log-exponential power distribution with α > 1

does possess all moments (see Angeletti et al., 2011 and Singh et al., 2012).

Figure 2: Hazard function of some models in the SMLN family. (a) Log-Student t, (b) log-Laplace, (c) log-exponential power, (d) log-logistic. The solid line corresponds to a log-normal(0,1) hazard rate.

[Each panel plots h(t) against t. Legend entries: (a) µ=0, σ²=1, ν=1 and ν=5; (b) µ=0, σ²=0.5, 1 and 4; (c) µ=0, (σ², α) = (0.5, 1.2), (2, 1.2) and (2, 1.6); (d) µ=0, σ²=0.5 and 2.]

Table 2: Properties of some distributions in the SMLN family. B(·, ·) is the Beta function and sign(x) = 1 if x > 0 and sign(x) = −1 for x < 0.

| Distribution | Tails | $\lim_{t_i \to 0} f(t_i)$ | $E(t_i^r)$, $r > 0$ |
|---|---|---|---|
| Log-normal | $t_i^{-1} \exp\{-\frac{1}{2\sigma^2} [\log t_i]^2\}$ | 0 | $\exp(r\mu + \frac{1}{2} r^2 \sigma^2)$ |
| Log-Student t | $t_i^{-1} \lvert \log t_i \rvert^{-(\nu+1)}$ | ∞ | ∞ |
| Log-Laplace | $t_i^{-1} \exp\{-\frac{1}{\sigma} \lvert \log t_i \rvert\}$ | 0 for σ < 1; $\frac{1}{2}\exp(-\mu)$ for σ = 1; ∞ for σ > 1 | $\frac{\exp(r\mu)}{1 - r^2\sigma^2}$, $r < 1/\sigma$ |
| Log-exponential power | $t_i^{-1} \exp\{-\frac{1}{\sigma^{\alpha}} \lvert \log t_i \rvert^{\alpha}\}$ | 0 for α > 1 | see Singh et al. (2012), α > 1 |
| Log-logistic | $t_i^{-\frac{\mathrm{sign}\{\log(t_i)\}}{\sigma} - 1}$ | 0 for σ < 1; $\exp(-\mu)$ for σ = 1; ∞ for σ > 1 | $\exp(r\mu) B(1 - r\sigma, 1 + r\sigma)$, $r < 1/\sigma$ |

An important aspect of survival modelling is the inclusion of covariates. Throughout, we will condition on the covariates, which are assumed to be constant in time. Based on the closure under scale-power transformations of the SMLN family (if $T \sim SMLN_P(\mu, \sigma^2, \theta)$, then $T' = aT^b \sim SMLN_P(\log(a) + \mu b, \sigma^2 b^2, \theta)$ for $a > 0$, $b \neq 0$), an Accelerated Failure Times (AFT) model is introduced. The AFT-SMLN model expresses the dependence between the covariates and the survival time by replacing the parameter $\mu$ with $x_i'\beta$, so that

$$ T_i \mid x_i, \beta, \sigma^2, \theta \overset{ind}{\sim} SMLN_P(x_i'\beta, \sigma^2, \theta), \quad i = 1, 2, \ldots, n, \tag{4} $$

where $x_i$ is a vector containing the value of k covariates associated with survival time i and $\beta \in \mathbb{R}^k$ is a vector of parameters. This can also be interpreted as a linear regression model for the logarithm of the survival times with error term distributed as a scale mixture of normals. As the median of $T_i$ in (4) is given by $e^{x_i'\beta}$, $e^{\beta_j}$ is interpreted as the (proportional) marginal change of the median survival time as a consequence of a unitary change in covariate j.
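The interpretation of $e^{\beta_j}$ can be checked in one line. The notation $e_j$ for the j-th unit vector is introduced here only for illustration, and the step uses the fact that $\log(T_i)$ is symmetric about $x_i'\beta$ for any mixing distribution, so that the median of $T_i$ is $\exp\{x_i'\beta\}$:

```latex
\frac{\operatorname{median}(T_i \mid x_i + e_j)}{\operatorname{median}(T_i \mid x_i)}
  = \frac{\exp\{(x_i + e_j)'\beta\}}{\exp\{x_i'\beta\}}
  = e^{\beta_j}.
```

For instance, a coefficient $\beta_j = 0.1$ would correspond to a median survival time roughly 10.5% longer per unit increase in covariate j, since $e^{0.1} \approx 1.105$.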

3 Bayesian inference for the SMLN family

3.1 The prior

Bayesian inference will be conducted using objective priors that are generated by Jeffreys rule.

This is one of the most common choices in the absence of prior information and has interesting

invariance and information-theoretic properties (Bernardo and Smith, 1994). The next theorem

presents the Fisher information matrix for the AFT-SMLN model which is the basis for the

Jeffreys-style priors.

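Theorem 1. Let $T_1, \ldots, T_n$ be independent random variables with $T_i$ distributed according to (4). Then the Fisher information matrix corresponds to

$$ I(\beta, \sigma^2, \theta) = \begin{pmatrix} \frac{1}{\sigma^2} k_1(\theta) \sum_{i=1}^{n} x_i x_i' & 0 & 0 \\ 0 & \frac{1}{\sigma^4} k_2(\theta) & \frac{1}{\sigma^2} k_3(\theta) \\ 0 & \frac{1}{\sigma^2} k_3(\theta) & k_4(\theta) \end{pmatrix}, \tag{5} $$

where $k_1(\theta)$, $k_2(\theta)$, $k_3(\theta)$ and $k_4(\theta)$ are functions depending only on θ.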

The expressions involved in k1 (θ), k2 (θ), k3 (θ) and k4 (θ) are complicated (see the proof)

and thus I(β, σ 2 , θ) is not easily obtained from this theorem for any arbitrary mixing distribution. Indeed, it is usually more efficient to compute I(β, σ 2 , θ) directly from f (·|β, σ 2 , θ).

However, this structure facilitates a general representation of the Jeffreys-style priors:

Corollary 1. Under the same assumptions as in Theorem 1, it follows that

(i) The Jeffreys prior for the AFT-SMLN model is given by

$$ \pi^{J}(\beta, \sigma^2, \theta) \propto \frac{1}{(\sigma^2)^{1+\frac{k}{2}}} \sqrt{[k_1(\theta)]^k \, [k_2(\theta) k_4(\theta) - k_3^2(\theta)]}, \tag{6} $$

(ii) The Type I Independence Jeffreys prior, which deals separately with the blocks for β and (σ², θ), is given by

$$ \pi^{II}(\beta, \sigma^2, \theta) \propto \frac{1}{\sigma^2} \sqrt{k_2(\theta) k_4(\theta) - k_3^2(\theta)}, \tag{7} $$

(iii) The Independence Jeffreys prior, which deals separately with β, σ² and θ, is given by

$$ \pi^{I}(\beta, \sigma^2, \theta) \propto \frac{1}{\sigma^2} \sqrt{k_4(\theta)}. \tag{8} $$

The three non-subjective priors presented here can be written as

$$ \pi(\beta, \sigma^2, \theta) \propto \frac{1}{(\sigma^2)^{p}} \, \pi(\theta), \tag{9} $$

where π(θ) is the factor of the prior that depends on θ. The value of p is equal to 1+(k/2) for the

Jeffreys prior and p is equal to 1 for the Type I Independence Jeffreys and Independence Jeffreys

priors. In the cases in which the parameter θ does not appear (e.g. log-normal, log-Laplace and

log-logistic distributions) this prior simplifies to π(β, σ 2 ) ∝ (σ 2 )−p .
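As a worked check of the value p = 1 + k/2 for the Jeffreys prior, the determinant of the Fisher information can be computed from its block structure in Theorem 1: a k × k block $\sigma^{-2} k_1(\theta) \sum_i x_i x_i'$ for β, and a 2 × 2 block for (σ², θ) with diagonal entries $\sigma^{-4} k_2(\theta)$ and $k_4(\theta)$ and off-diagonal entry $\sigma^{-2} k_3(\theta)$. The following lines are a sketch of that calculation:

```latex
\begin{align*}
\det I(\beta, \sigma^2, \theta)
  &= \det\!\Big(\tfrac{1}{\sigma^2} k_1(\theta) \textstyle\sum_{i=1}^{n} x_i x_i'\Big)
     \times \Big[\tfrac{1}{\sigma^4} k_2(\theta) k_4(\theta) - \tfrac{1}{\sigma^4} k_3^2(\theta)\Big] \\
  &= (\sigma^2)^{-(k+2)} \, [k_1(\theta)]^k \,
     \det\!\Big(\textstyle\sum_{i=1}^{n} x_i x_i'\Big) \,
     [k_2(\theta) k_4(\theta) - k_3^2(\theta)], \\
\sqrt{\det I(\beta, \sigma^2, \theta)}
  &\propto \frac{1}{(\sigma^2)^{1 + \frac{k}{2}}}
     \sqrt{[k_1(\theta)]^k \, [k_2(\theta) k_4(\theta) - k_3^2(\theta)]}.
\end{align*}
```

The factor $\det(\sum_i x_i x_i')$ does not depend on the parameters and is absorbed into the proportionality constant. The two independence priors take p = 1 because the β block is then handled separately from σ².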

Note that the result in Corollary 1 also specifies the prior for θ. The implied priors for

the special cases of the log-Student t and the log-exponential power (derived directly from the

specific likelihood functions) are explicitly presented in the proof of Theorem 3.

3.2 The posterior distribution

The three priors presented in Corollary 1 do not correspond to proper probability distributions

and therefore the propriety of the posterior distribution must be verified. At this stage we

also discuss the existence of censored observations. We assume here that censoring is noninformative. Censoring must be taken into account when conducting inference. However, as is

shown in the following proposition, adding censored observations cannot destroy the propriety

of the posterior distribution.

Proposition 1. Let T1 , . . . , Tn be the survival times of n independent individuals. Let nc

(nc ≤ n) be the number of individuals for which the actual survival time was not observed

but a censored observation was recorded. Then, the propriety of the posterior distribution of

(β, σ 2 , θ) on the basis of the n − nc observed survival times implies that the posterior distribution of (β, σ 2 , θ) given the complete sample is proper.

Throughout the rest of this paper, the results regarding posterior propriety will be presented

on the basis of the non-censored observations (using n instead of n − nc for ease of notation).

The following theorem and its corollary cover posterior propriety for the AFT-SMLN model

under the priors in Corollary 1.


Theorem 2. Let $T_1, \ldots, T_n > 0$ be the survival times of n independent individuals which have distribution given by (4). Assume that the observed survival times are $t_1, \ldots, t_n$. Define $D = \mathrm{diag}(\lambda_1, \ldots, \lambda_n)$ and $X = (x_1, \ldots, x_n)'$. Suppose that r(X) = k (full rank) and that the prior for (β, σ², θ) is given by (9). Then, the posterior distribution is proper if and only if n > max{k, k − 2p + 2} and

$$ \int_{\Theta} \int_{L^n} \prod_{i=1}^{n} \lambda_i^{\frac{1}{2}} \, [\det(X'DX)]^{-\frac{1}{2}} \, [S^2(D, y)]^{-\frac{n+2p-k-2}{2}} \, \pi(\theta) \prod_{i=1}^{n} dP(\lambda_i \mid \theta) \, d\theta < \infty, \tag{10} $$

where $S^2(D, y) = y'Dy - y'DX(X'DX)^{-1}X'Dy$ and $y = (\log(t_1), \ldots, \log(t_n))'$.

Corollary 2. Under the same assumptions as in Theorem 2, it follows that

(i) If p = 1, a sufficient condition for the propriety of the posterior is n > k and π(θ) being a proper density function for θ,

(ii) If p = 1 + k/2, a sufficient condition for the propriety of the posterior is n > k and

$$ \int_{\Theta} E\big(\Lambda_i^{-\frac{k}{2}} \mid \theta\big) \, \pi(\theta) \, d\theta < \infty, \tag{11} $$

(iii) For any value of p, a sufficient condition for the non-existence of a proper posterior distribution is

$$ \int_{\Theta} dP(\lambda_i \mid \theta) \, \pi(\theta) \, d\theta = \infty, \tag{12} $$

for $\lambda_i \in B$, where B is a set of positive Lebesgue measure with respect to P(·|θ).

The previous results provide some general conditions for the SMLN family, which immediately lead to posterior propriety of the log-normal AFT model (where no θ appears and each

λi = 1) when n > k under any of the priors in Corollary 1. The following theorem summarizes

the analysis for the AFT models based on the other distributions presented in Table 2.
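The log-normal case mentioned above can be verified directly from the condition in Theorem 2. With every $\lambda_i = 1$ the matrix D is the identity $I_n$, the mixing distribution is a point mass and there is no θ to integrate over, so the integrand reduces to a constant. The display below is a sketch of that reduction:

```latex
\begin{align*}
D = I_n:\quad
& \prod_{i=1}^{n} \lambda_i^{1/2} \, [\det(X'DX)]^{-1/2} \, [S^2(D, y)]^{-\frac{n+2p-k-2}{2}}
  = [\det(X'X)]^{-1/2} \, [S^2(I_n, y)]^{-\frac{n+2p-k-2}{2}}, \\
& S^2(I_n, y) = y'y - y'X(X'X)^{-1}X'y = \min_{\beta} \, (y - X\beta)'(y - X\beta), \\
& \max\{k, \; k - 2p + 2\} = k \quad \text{for } p = 1 \text{ and } p = 1 + k/2.
\end{align*}
```

The constant is finite whenever the residual sum of squares $S^2(I_n, y)$ is strictly positive, that is, whenever y is not an exact linear combination of the columns of X. With n > k this fails only on a set of zero Lebesgue measure.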

Theorem 3. Under the same assumptions as in Theorem 2 and provided that n > k, it follows

that

(i) For the log-Student t AFT model, the posterior is proper under the Jeffreys or Type I

Independence Jeffreys prior. However, the posterior is improper for the Independence

Jeffreys prior.

(ii) For the log-Laplace AFT model, log-exponential power AFT model and log-logistic AFT

model, the propriety of the posterior can be verified with any of the three proposed priors.


Theorem 3 tells us that the log-Student t AFT model does not lead to valid Bayesian inference in combination with the Independence Jeffreys prior. The other models can be combined

with all priors considered here; of course, the absence of θ in the log-Laplace and log-logistic

models implies that in those cases the Type I Independence Jeffreys and Independence Jeffreys

priors coincide.

3.3 The problem with using point observations

Most statistical models assume that the observations come from continuous distributions which

assign probability zero to a random variable taking any particular (point) value. In spite of

this, the standard statistical analysis is based on point observations. This situation can cause

problems in the context of Bayesian inference because the assessment of the propriety of the

posterior distribution is usually conducted without taking into account events that have zero

probability. Hence, the propriety of the posterior on the basis of a sample of point observations

cannot always be guaranteed. As was argued in Fernández and Steel (1998), this problem

introduces the risk of having senseless inference. The following theorem illustrates the problem

of the use of point observations in the context of the AFT log-Student t model.

Theorem 4. Adopt the same assumptions as in Theorem 2 and assume that n > k. If the mixing distribution is Gamma(ν/2, ν/2) and s (k ≤ s < n) is defined as the largest number of observations that can be represented as an exact linear combination of their covariates (i.e. $\log(t_i) = x_i'\beta$ for some fixed β), a necessary condition for the propriety of the posterior distribution of (β, σ², ν) is

$$ \int_{0}^{m} \pi(\nu) \, d\nu = 0, \quad \text{where } m = \frac{n - k + (2p - 2)}{n - s} - 1. \tag{13} $$

This result indicates that it is possible to have samples of point observations for which no

Bayesian inference can be conducted, unless π(ν) induces a positive lower bound for ν. In

particular, this condition is not satisfied for the Jeffreys-style priors that were presented in this

report. Theorem 4 highlights the need for considering sets of zero Lebesgue measure when

checking the propriety of the posterior distribution based on point observations. When no covariates are taken into account (k = 1), s coincides with the largest number of observations that

take the same value.
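The bound m in (13) is easy to evaluate for a given sample, which shows how quickly ties among the observations start to matter. The function below is a small illustrative sketch. Its name and the example values of n, k and s are hypothetical.

```python
def nu_lower_bound(n, k, p, s):
    """Return m from (13); when m > 0 the prior must put zero mass on (0, m)."""
    if not (k <= s < n):
        raise ValueError("Theorem 4 assumes k <= s < n")
    return (n - k + 2 * p - 2) / (n - s) - 1


# Hypothetical sample: n = 10 observations, intercept only (k = 1),
# with s = 3 observations sharing the same recorded value.
m_indep = nu_lower_bound(n=10, k=1, p=1, s=3)              # p = 1
m_jeffreys = nu_lower_bound(n=10, k=1, p=1 + 1 / 2, s=3)   # p = 1 + k/2
```

In this example m equals 2/7 under p = 1 and 3/7 under p = 1 + k/2, so a prior with positive mass near ν = 0 violates the necessary condition. With p = 1 and s = k the bound is m = 0 and the condition imposes no restriction.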

In the context of scale mixture of normals, Fernández and Steel (1998, 1999) proposed the

use of set observations as a solution to this problem. We now extend this to the SMLN family.

The use of set observations is based on the fact that, in practice, it is impossible to record observations from continuous random variables with total precision and every observation can only


be considered as a label of a set of positive Lebesgue measure. For instance, if the recorded

value for the survival time is t0 , it really means that the actual survival time is between t0 − l

and t0 + r where l and r represent the level of imprecision with which the data was recorded.

This is equivalent to considering the observation as interval censored. As explained in Section

3.4, the implementation is done through data augmentation which does not involve a large increase of the computational cost. This procedure also naturally deals with left or right censored

observations by taking respectively l or r equal to infinity. The following theorem indicates

that the use of set observations provides a proper posterior distribution in situations where point

observations might not.

Theorem 5. Let T1, ..., Tn > 0 be the survival times of n independent individuals which have distribution given by (4). Assume set observations (t1 − l, t1 + r), ..., (tn − l, tn + r) (0 < l, r < ∞) are recorded. Define D = diag(λ1, ..., λn) and X = (x1 · · · xn)′. Suppose that r(X) = k (full rank) and that the prior for (β, σ², θ) is given by (9). Define E = (t1 − l, t1 + r) × (t2 − l, t2 + r) × · · · × (tn − l, tn + r). The posterior distribution of (β, σ², θ) given the sample is proper if and only if the conditions in Theorem 2 are valid for any sample of point observations in E, except for a set of zero Lebesgue measure.

3.4 Implementation

Bayesian inference will be implemented using the hierarchical representation (3) of the SMLN

family. This can be seen as an application of the data augmentation idea of Tanner and Wong

(1987). Throughout, we use the prior presented in (9). A Gibbs Sampling algorithm is used for

the implementation, where both censored and set observations are also accommodated through

data augmentation (as in Fernández and Steel, 1999, 2000). This introduces an additional step

in the sampler in which, given the current value of the parameters and mixing variables, point

values of the survival times in line with the censored observations are simulated. In this case,

this can be easily done by sampling log(ti ) from a truncated normal distribution. Given these

simulated values, the other steps can be treated as if there were no censoring nor set observations. Regardless of the mixing distribution and provided that n > 2 − 2p, the full conditionals

for β and σ 2 are normal and inverted gamma distributions. However, the full conditionals for

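λ and θ are generally not of a known form for arbitrary mixing distributions. For the Λi's we have $\pi(\lambda_1, \ldots, \lambda_n \mid \beta, \sigma^2, \theta, t) = \prod_{i=1}^{n} \pi(\lambda_i \mid \beta, \sigma^2, \theta, t)$, where $t = (t_1, \ldots, t_n)'$ are the simulated survival times and

$$ \pi(\lambda_i \mid \beta, \sigma^2, \theta, t) \propto \lambda_i^{\frac{1}{2}} \exp\left\{-\frac{1}{2\sigma^2} \lambda_i (\log(t_i) - x_i'\beta)^2\right\} dP(\lambda_i \mid \theta), \quad i = 1, \ldots, n. \tag{14} $$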
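The data augmentation step for censored and set observations described above draws log(t_i) from a normal distribution with mean $x_i'\beta$ and variance $\sigma^2/\lambda_i$, truncated to the recorded set. A minimal sketch using inverse-CDF sampling follows. The function name and the clamping constants are assumptions made here, and the inverse-CDF approach is only one of several ways to sample a truncated normal.

```python
import math
import random
from statistics import NormalDist


def draw_log_time(lower, upper, mean, sd, rng):
    """Draw log(t) from N(mean, sd^2) truncated to (lower, upper) by inverse CDF."""
    dist = NormalDist(mean, sd)
    p_lo = dist.cdf(lower) if lower > -math.inf else 0.0
    p_hi = dist.cdf(upper) if upper < math.inf else 1.0
    u = p_lo + (p_hi - p_lo) * rng.random()
    u = min(max(u, 1e-12), 1.0 - 1e-12)  # inv_cdf requires 0 < u < 1
    x = dist.inv_cdf(u)
    # Guard against rounding at the boundaries of the truncation set.
    return min(max(x, lower), upper)


rng = random.Random(0)
# Set observation: recorded value 5.0 with imprecision l = r = 0.5.
y_set = draw_log_time(math.log(4.5), math.log(5.5), mean=1.0, sd=0.8, rng=rng)
# Right-censored observation at 3.0: the upper limit is infinite.
y_cens = draw_log_time(math.log(3.0), math.inf, mean=1.0, sd=0.8, rng=rng)
```

Inside a sampler, `mean` would be the current value of $x_i'\beta$ and `sd` the current value of $\sqrt{\sigma^2/\lambda_i}$. The inverse-CDF method loses accuracy when the truncation set lies far in the tails, where a dedicated rejection sampler would be safer.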


The full conditional of Λi for the log-Student t and log-Laplace models has a known form.

In the first case it is a Gamma$\left((\nu + 1)/2, \ \tfrac{1}{2}\left[\{(\log(t_i) - x_i'\beta)^2/\sigma^2\} + \nu\right]\right)$. In the second, it

corresponds to an Inverse Gaussian$(\sigma/\lvert \log(t_i) - x_i'\beta \rvert, 1)$. When these full conditionals have

no known form, a Metropolis-Hastings (MH) step with a Gaussian Random Walk proposal

can be implemented. However, if the mixing distribution has no closed form (as e.g. for the

log-exponential power and log-logistic distributions), the acceptance probability in the MH is

not easily computable. In these cases, we need to carefully consider alternative solutions. For

instance, the mixing density of the log-logistic distribution corresponds to an infinite series. In

this case we implement the Rejection Sampling algorithm proposed in Holmes and Held (2006, p.163), using the fact that $(2\sqrt{\Lambda_i})^{-1}$ has the asymptotic Kolmogorov-Smirnov distribution.
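The full conditionals described above can be assembled into a Gibbs sampler. The sketch below covers the log-Student t AFT model under several simplifying assumptions that are not part of the paper: a single covariate (k = 1), a fixed and known ν (so the step for θ is omitted), and point observations without censoring. The function name and default values are hypothetical. The conditionals for β and σ² follow from the prior (9) combined with representation (3).

```python
import math
import random


def gibbs_log_student_t(times, x, nu, p=1.0, n_iter=2000, seed=0):
    """Gibbs sampler sketch: one covariate, fixed nu, prior (9) with exponent p."""
    rng = random.Random(seed)
    y = [math.log(t) for t in times]
    n = len(y)
    sigma2 = 1.0
    lam = [1.0] * n
    draws = []
    for _ in range(n_iter):
        # beta | sigma2, lambda ~ N(sxy / sxx, sigma2 / sxx)
        sxx = sum(l * xi * xi for l, xi in zip(lam, x))
        sxy = sum(l * xi * yi for l, xi, yi in zip(lam, x, y))
        beta = rng.gauss(sxy / sxx, math.sqrt(sigma2 / sxx))
        # sigma2 | beta, lambda ~ Inv-Gamma(n/2 + p - 1, q/2); needs n > 2 - 2p
        resid2 = [(yi - xi * beta) ** 2 for xi, yi in zip(x, y)]
        q = sum(l * r for l, r in zip(lam, resid2))
        sigma2 = (q / 2.0) / rng.gammavariate(n / 2.0 + p - 1.0, 1.0)
        # lambda_i | ... ~ Gamma((nu+1)/2, rate = (resid_i^2 / sigma2 + nu) / 2);
        # gammavariate uses the scale, i.e. the reciprocal of the rate.
        lam = [rng.gammavariate((nu + 1) / 2.0, 2.0 / (r / sigma2 + nu))
               for r in resid2]
        draws.append((beta, sigma2))
    return draws, lam


# Hypothetical data: intercept-only model with true beta = 2.
data_rng = random.Random(42)
times = [math.exp(2.0 + 0.5 * data_rng.gauss(0.0, 1.0)) for _ in range(100)]
draws, lam = gibbs_log_student_t(times, [1.0] * 100, nu=5.0, n_iter=1000, seed=1)
beta_mean = sum(b for b, _ in draws[200:]) / len(draws[200:])
```

Small posterior values of λi flag observations that the model treats as having inflated variance, which is the mechanism later exploited for outlier detection. Extending the sketch to k > 1 would require a multivariate normal draw for β.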

The mixing distribution of the log-exponential power model has no known analytical form

either. One possible approach to this is to use the hierarchical representation proposed by Ibragimov and Chernin (1959) for the positive stable distributions which requires the use of n extra

augmenting variables. However, as argued in Tsionas (1999), this is not appropriate when

the value of α is unknown. Instead, we implement Bayesian inference through the mixture

of uniforms representation used in Martín and Pérez (2009). This replaces the use of $\Lambda_i$ by $U_i$ (i = 1, ..., n), with $U_i \overset{iid}{\sim} \text{Gamma}(1 + 1/\alpha, 1)$ and $\log(t_i) \mid U_i = u_i, \beta, \sigma^2, \alpha \sim U(x_i'\beta - \sigma u_i^{1/\alpha}, \ x_i'\beta + \sigma u_i^{1/\alpha})$. We restrict the range of α to (1, 2) in order to obtain fatter tails than the log-normal. The case α = 1 is excluded but it is covered by the log-Laplace model. We decompose the posterior distribution $\pi(\beta, \sigma^2, \alpha, u_1, \ldots, u_n \mid t)$ as $\prod_{i=1}^{n} \pi(u_i \mid t, \beta, \sigma^2, \alpha) \times \pi(\beta, \sigma^2, \alpha \mid t)$ and the full conditionals for the $U_i$'s are

$$ \pi(u_i \mid t, \beta, \sigma^2, \alpha) \propto e^{-u_i}, \quad u_i > \left(\frac{\lvert \log(t_i) - x_i'\beta \rvert}{\sigma}\right)^{\alpha}, \quad i = 1, \ldots, n. \tag{15} $$

Additionally, for censored and set observations log(ti ) is sampled from a truncated uniform

distribution. In terms of setting up a sampler, it might be easier to simply start directly from

the log-exponential power or log-logistic distribution, rather than its interpretation as a scale

mixture. However, we would lose the inference on the mixing variables Λi or Ui and this is

particularly important in identifying outlying observations (see e.g. Lange et al., 1989 and

Fernández and Steel, 1999), as further discussed in Section 3.6. Also, the use of these mixing

representations facilitates dealing with censored and set observations.
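The mixture of uniforms representation and the full conditional (15) are both simple to simulate: (15) is a unit-rate exponential distribution shifted to start at the truncation point. The sketch below illustrates both steps. The function names are hypothetical, and `mean` stands for the current value of $x_i'\beta$.

```python
import math
import random


def simulate_log_exp_power(mean, sigma, alpha, rng):
    """Draw T via U ~ Gamma(1 + 1/alpha, 1), then log(T) | U = u uniform
    on (mean - sigma * u**(1/alpha), mean + sigma * u**(1/alpha))."""
    u = rng.gammavariate(1.0 + 1.0 / alpha, 1.0)
    half_width = sigma * u ** (1.0 / alpha)
    return math.exp(rng.uniform(mean - half_width, mean + half_width))


def draw_u_full_conditional(log_t, mean, sigma, alpha, rng):
    """Draw from (15): Exp(1) shifted to start at (|log t - mean| / sigma)**alpha."""
    threshold = (abs(log_t - mean) / sigma) ** alpha
    return threshold + rng.expovariate(1.0)


rng = random.Random(5)
t_draw = simulate_log_exp_power(mean=0.0, sigma=1.0, alpha=1.5, rng=rng)
u_draw = draw_u_full_conditional(math.log(t_draw), 0.0, 1.0, 1.5, rng)
```

Because the support of (15) depends on the current residual, every $U_i$ has to be consistent with the latest values of β and σ, which is why these variables cannot be left unchanged between iterations.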

The samplers presented previously update n mixing parameters at each step of the chain.

This results in long running times and low efficiency of the algorithms (especially in situations

in which sampling from the mixing variables is cumbersome). In order to avoid this problem,

the Λi ’s will be sampled only every Q iterations of the chain. The value of Q is chosen in order

to optimize the efficiency of the sampler which is measured as the ratio between the Effective

Sample Size (ESS) of the chain and the CPU time required. This simplification is not applicable


to the log-exponential power model because the truncation of the full conditionals of the Ui ’s

requires the update of these mixing parameters at each iteration of the algorithm.
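The efficiency criterion used to choose Q, the ratio of Effective Sample Size to CPU time, can be sketched as follows. The text does not specify which ESS estimator is used, so the one below is an assumption: it divides the chain length by one plus twice the sum of sample autocorrelations, truncated at the first non-positive lag. Function names are hypothetical.

```python
def effective_sample_size(chain):
    """ESS = N / (1 + 2 * sum of autocorrelations up to the first non-positive lag)."""
    n = len(chain)
    mean = sum(chain) / n
    centred = [c - mean for c in chain]
    var = sum(c * c for c in centred) / n
    if var == 0.0:
        return float(n)
    rho_sum = 0.0
    for lag in range(1, n):
        rho = sum(centred[i] * centred[i + lag] for i in range(n - lag)) / (n * var)
        if rho <= 0.0:
            break
        rho_sum += rho
    return n / (1.0 + 2.0 * rho_sum)


def efficiency(chain, cpu_seconds):
    """ESS per second of CPU time, the quantity compared across values of Q."""
    return effective_sample_size(chain) / cpu_seconds
```

In practice one would run the sampler for a few candidate values of Q, record the CPU time of each run (for example with `time.process_time()`), and keep the value of Q with the highest efficiency for the parameter of interest.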

3.5 Model comparison

In order to compare different models, we will use Bayes factors. These will be computed using

the methodology proposed by Chib (1995) and Chib and Jeliazkov (2001). For comparison,

the Deviance Information Criterion (DIC) developed by Spiegelhalter et al. (2002) will also be

provided. Additionally, models will be compared by the quality of their predictions. We use

the log-predictive score (LPS) introduced by Good (1952). For this, we randomly divide the

original sample into an inference sample $(t_1^*, \ldots, t_{n-q}^*)$ and a predictive sample $(t_{n-q+1}^*, \ldots, t_n^*)$.

Lower values of the LPS indicate better predictive accuracy. The LPS is defined as

$$ LPS = -\frac{1}{q} \sum_{r=n-q+1}^{n} \log\big(f(t_r^* \mid t_1^*, \ldots, t_{n-q}^*)\big). \tag{16} $$

3.6 Outlier detection

The existence of outlying observations will be assessed using the posterior distribution of the

mixing variables. Extreme values (with respect to a reference value, λref or uref ) of the mixing

variables are associated with outliers (see also West, 1984). Let us first consider mixing through

Λi . Formally, evidence of outlying observations will be assessed by contrasting the hypothesis

$H_0: \Lambda_i = \lambda_{ref}$ versus $H_1: \Lambda_i \neq \lambda_{ref}$ (with all other $\Lambda_j$, $j \neq i$, free). Evidence in favour of

each of these hypotheses will be measured using Bayes factors, which can be computed as the

generalized Savage-Dickey density ratio proposed in Verdinelli and Wasserman (1995). The

evidence in favour of H0 versus H1 is given by

1

BF01 = π(λi |t)E

,

(17)

dP (λi |θ) λi =λref

where the expectation is with respect to π(θ|t, Λi = λref ). Note that when the parameter θ

does not appear in the model, this expression can be simplified as the original version of the

Savage-Dickey density ratio

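\[
BF_{01} = \frac{\pi(\lambda_i \mid t)}{dP(\lambda_i)} \Bigg|_{\lambda_i = \lambda_{ref}} = E\left[\frac{\pi(t_i \mid \beta, \sigma^2, \lambda_i)}{\pi(t_i \mid \beta, \sigma^2)}\right] \Bigg|_{\lambda_i = \lambda_{ref}}, \tag{18}
\]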

where the expectation is with respect to π(β, σ 2 |t). The main challenge of this approach is the

choice of λref . Intuitively, if there is no unobserved heterogeneity, the posterior distribution

of the mixing parameters should not be much affected by the data. Therefore, the mixing

distribution (which can be interpreted as a prior distribution for Λi ) will then be close to the

posterior distribution of Λi . Following this intuition, we propose to use E(Λi |θ) (if it exists) as

λref . Using this rule, λref = 1 for the log-Student t model and λref = 0.4 for the log-logistic

model. When the value of θ is unknown, we estimate it using the MCMC sample obtained

during the implementation. In particular, we consider the median of the posterior distribution of

θ. The performance of these choices has been validated using simulated datasets. Cases in which E(Λi |θ) is not finite require a more detailed analysis. For example, the expectation of the

mixing distribution that generates the log-Laplace distribution does not exist. Using simulated

datasets, we note a large heterogeneity between the posterior distributions of the Λi ’s and the

existence of a unique reference value is not clear (even in the absence of outlying observations).

However, the average of the posterior medians of the mixing distribution is close to one for all

simulated data sets we have tried. In this calculation, we discard the lowest 25% of λi values

in order to remove the influence of any possible outliers. Hence, we propose λref = 1 for

the log-Laplace model. Figure 3 shows the performance of the reference values by plotting the Bayes factor in (17) for the log-Student t, log-Laplace and log-logistic models in terms of the standardized log survival time observations z (given β, σ 2 and θ). The standardized observations are defined as log(t) minus its mean, divided by its standard deviation (i.e. σ √(EΛ (1/Λ|θ))). For the log-Student t, log-Laplace and log-logistic models, they are

\[
z = \frac{\log(t) - x'\beta}{\sigma} \sqrt{\frac{\nu - 2}{\nu}} \ \ (\text{for } \nu > 2), \qquad
z = \frac{\log(t) - x'\beta}{\sigma} \, \frac{1}{\sqrt{2}} \qquad \text{and} \qquad
z = \frac{\log(t) - x'\beta}{\sigma} \, \frac{\sqrt{3}}{\pi},
\]

respectively. As expected, large values of |z| lead to evidence in favour of an outlier. The log-Student t model with a very large number of degrees of freedom requires an exceptionally large |z| to distinguish it from the log-normal case.
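The Savage-Dickey calculation for the log-Student t case can be sketched numerically. This is a minimal sketch, not the authors' implementation: it assumes the mixing distribution is Gamma(ν/2, ν/2) (consistent with λref = E(Λi |ν) = 1), holds β, σ 2 and ν fixed as in Figure 3 rather than averaging over MCMC draws, and uses the hypothetical function names `log_gamma_pdf`, `two_log_bf_outlier` and `standardize`.

```python
import math


def log_gamma_pdf(x, shape, rate):
    """Log density of a Gamma(shape, rate) distribution at x > 0."""
    return (shape * math.log(rate) - math.lgamma(shape)
            + (shape - 1.0) * math.log(x) - rate * x)


def two_log_bf_outlier(resid, nu, lam_ref=1.0):
    """2*log(BF10) for H1: Lambda_i != lam_ref versus H0: Lambda_i = lam_ref.

    resid is (log(t) - x'beta) / sigma, with beta, sigma and nu held fixed.
    """
    # Assumed mixing distribution (prior of Lambda_i): Gamma(nu/2, nu/2).
    log_prior = log_gamma_pdf(lam_ref, nu / 2.0, nu / 2.0)
    # Conditional posterior of Lambda_i: Gamma((nu+1)/2, (nu + resid^2)/2).
    log_post = log_gamma_pdf(lam_ref, (nu + 1.0) / 2.0,
                             (nu + resid ** 2) / 2.0)
    log_bf01 = log_post - log_prior  # Savage-Dickey ratio at lam_ref
    return -2.0 * log_bf01


def standardize(resid, nu):
    """Standardized z for the log-Student t model (requires nu > 2)."""
    return resid * math.sqrt((nu - 2.0) / nu)
```

Under these assumptions the evidence for an outlier grows with the residual once it exceeds one in absolute value, and for a fixed residual it is weaker when ν is large, which matches the qualitative behaviour described for the log-Student t panel.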

The log-exponential power model is a very special case. The implementation of Bayesian

inference for this model did not consider the SMLN representation. A mixture of uniform

distributions representation was used instead. Under this representation, the mixing parameters

are denoted by Ui . The hypotheses associated with outlier detection can be re-written in terms of Ui as H0 : Ui = uref versus H1 : Ui ≠ uref . The expectation of Ui given α is 1 + 1/α

and, according to the intuition presented previously, we might use this value as uref . With this

rule, uref is a function of α which lies in (1.5, 2). In practice, this choice overestimated the

number of outliers. Even for datasets generated from the log-normal model, several outliers

were detected. In fact, we estimate π(uref |t) by averaging π(uref |t, β, σ 2 , α) in (15) using an MCMC sample from the posterior distribution of (β, σ 2 , α). If the value of (β, σ 2 , α) is such that uref ≤ (| log(ti ) − x′i β|/σ)^α , π(uref |t, β, σ 2 , α) is equal to zero and the Bayes factor in favour of observation i being an outlier is computed as infinity. Simulated datasets indicated that the means and medians of (| log(ti ) − x′i β|/σ)^α (i = 1, . . . , n) are around 0.6, regardless of the model from which the data was generated. We use this in order to adjust the reference value to

[Figure 3, panels (a) to (d): x-axis |z| (0 to 6), y-axis 2*log(BF). Legend entries: ν = 10, 30, 200; λref = 0.8, 1, 1.2; α = 1.1, 1.5, 1.9.]

Figure 3: Bayes factor for outlier detection as a function of |z| for: (a) log-Student t, (b) log-Laplace

(c) log-exponential power and (d) log-logistic models. The log Bayes factor has been re-scaled by 2 in

order to apply the interpretation rule proposed in Kass and Raftery (1995). The dotted horizontal line is

the threshold for which observations will be detected as outliers.

uref = 1 + 1/α + 0.6. This choice performed much better with simulated datasets. The resulting Bayes factors as a function of $z = \frac{\log(t) - x'\beta}{\sigma} \sqrt{\frac{\Gamma(1/\alpha)}{\Gamma(3/\alpha)}}$ (see Figure 3) are not much affected by the value of α. Also, when simulating a log-normal dataset, no outliers were detected.

4

Application: Veterans administration lung cancer trial

We use a dataset (presented in Kalbfleisch and Prentice, 2002) relating to a trial in which a therapy (standard or test chemotherapy) was randomly applied to 137 patients who were diagnosed with inoperable lung cancer. The survival times of the patients were measured in days since treatment and, as previous studies suggested some heterogeneity between the patients, several covariates were also recorded. The covariates include the treatment that is applied to the patient

(0: standard, 1: test); the histological type of the tumor (squamous, small cell, adeno, large

cell); a continuous index representing the status of the patient at the moment of the treatment

(the higher the index, the better the patient’s condition); the time between the diagnosis and the

treatment (in months); age (in years); and a binary indicator of prior therapy (0: no, 1: yes).

The data contains 9 right censored observations. This dataset has been previously analyzed

from a frequentist point of view using traditional models such as the Weibull, log-normal and

log-logistic regressions (see Lee and Wang, 2003). These models coincide in that the status of

the patient at the moment of treatment and the histological type of the tumor are the relevant

explanatory variables for the survival time. Nevertheless, as was argued in Barros et al. (2008),

the existence of influential observations affects the inference produced under some models. In

fact, they illustrated that the inference produced by a log-Birnbaum-Saunders model is greatly

modified when dropping observations 77, 85 and 100. They proposed a log-Birnbaum-Saunders

Student t distribution as a more robust alternative for this dataset because it allows for fatter tails

and accommodates heterogeneity in the data. This distribution can also be represented through

a mixture family as in (1), so our methodology could also be extended to this distribution.

Table 3 presents a summary of the posterior distribution of (β, σ 2 ) when a log-normal AFT

model is fitted. This is based on 10,000 draws, recorded from a total of 400,000 iterations with

a burn-in period of 200,000 and a thinning of 20. The use of different starting points and the

usual convergence criteria strongly suggest convergence of the chains. The Jeffreys and the

Independence Jeffreys prior produced similar results, making the choice between one of these

two priors not too critical. In addition, Bayesian inference was conducted on the basis of set

observations, using l = r = 0.5 for non-censored observations. Censored observations are

necessarily set observations, e.g. l = 0 and r = ∞ for right censored observations. We use this

treatment of the censored observations for both point and set observations. In this application,

this did not produce very different results from those obtained on the basis of point observations.

The use of point observations does not produce problems for the log-normal model. However,

we know that set observations avoid potential problems with the inference for other models (see

Subsection 3.3), so we will focus on the analysis with set observations in the rest of the paper.

Results suggest that the main covariate effects are due to the tumour type and patient status,

and possibly age (which has a somewhat surprising largely positive effect) and are roughly in

line with the maximum likelihood results for the log-Birnbaum Saunders model in Barros et al.

(2008), although the effect of the test treatment is less clearly negative.
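The likelihood contribution of a set observation under the log-normal model can be sketched as follows. This is only an illustration: it assumes that the set attached to a recorded time t is the interval (t − l, t + r), which is how the values l = r = 0.5 and l = 0, r = ∞ quoted above are read here (Subsection 3.3 holds the actual definition), and the function names are hypothetical. The argument `mu` plays the role of x′β.

```python
import math


def lognormal_cdf(t, mu, sigma):
    """CDF of a log-normal distribution with log-scale mean mu and sd sigma."""
    if t <= 0.0:
        return 0.0
    if math.isinf(t):
        return 1.0
    return 0.5 * (1.0 + math.erf((math.log(t) - mu) / (sigma * math.sqrt(2.0))))


def set_obs_loglik(t, mu, sigma, l=0.5, r=0.5):
    """Log-probability that the survival time falls in (t - l, t + r).

    Assumed convention: l = r = 0.5 for an uncensored time recorded in days,
    l = 0 and r = infinity for a right-censored time.
    """
    lower = max(t - l, 0.0)
    upper = t + r
    prob = lognormal_cdf(upper, mu, sigma) - lognormal_cdf(lower, mu, sigma)
    return math.log(prob)
```

With l = 0 and r = ∞ the function returns the log survival function at t, and for a narrow interval it is close to the log density at t, which is consistent with the remark that point and set observations gave similar results in this application.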

We then use the model (4) with the mixing distributions presented in Table 1. Bayesian

inference is conducted under the Jeffreys-type priors introduced in Corollary 1. We adopted

the same total number of iterations, burn in and thinning period as in the log-normal case. In

order to improve the efficiency of the algorithm we choose Q = 10 for the log-Student t and

log-Laplace models and Q = 20 for the log-logistic model. The Bayes factors of these models with respect to the log-normal are computed. Additionally, the LPS and DIC performance of each model is reported. Figure 4 and Table 4 summarize this information. For the LPS criterion, the original sample was randomly partitioned into a prediction sample and an inference sample of sizes 34 and 103, respectively (i.e. 25% and 75% of the original sample). This was repeated 50 times. Clearly, Bayes factors, LPS and DIC provide evidence in favour of these mixture models. For the log-Student t model, this evidence is also supported by the fact that inference on ν favours relatively small values. Similarly, the log-exponential power model suggests values

Table 3: Summary of the posterior distribution of the parameters of the log-normal AFT model. β0 : Intercept, β1 : Treat (test), β2 : Type (squamous), β3 : Type (small cell), β4 : Type (adeno), β5 : Status, β6 : Time from diagnosis, β7 : Age, β8 : Prior therapy (yes).

Jeffreys prior
        Point Observations          Set Observations
        Median   HPD 95%            Median   HPD 95%
β0       1.81    [ 0.52, 3.11]       1.79    [ 0.38, 3.06]
β1      -0.17    [-0.53, 0.21]      -0.17    [-0.53, 0.21]
β2      -0.11    [-0.63, 0.45]      -0.12    [-0.67, 0.43]
β3      -0.73    [-1.25,-0.19]      -0.73    [-1.24,-0.19]
β4      -0.77    [-1.37,-0.17]      -0.78    [-1.37,-0.19]
β5       0.04    [ 0.03, 0.05]       0.04    [ 0.03, 0.05]
β6       0.00    [-0.02, 0.02]       0.00    [-0.02, 0.02]
β7       0.01    [-0.01, 0.03]       0.01    [-0.01, 0.03]
β8      -0.10    [-0.54, 0.35]      -0.11    [-0.55, 0.33]
σ2       1.12    [ 0.86, 1.42]       1.13    [ 0.88, 1.44]

Independence Jeffreys prior
        Point Observations          Set Observations
        Median   HPD 95%            Median   HPD 95%
β0       1.82    [ 0.44, 3.17]       1.80    [ 0.38, 3.09]
β1      -0.17    [-0.56, 0.21]      -0.17    [-0.57, 0.22]
β2      -0.11    [-0.68, 0.47]      -0.11    [-0.66, 0.47]
β3      -0.72    [-1.28,-0.18]      -0.72    [-1.27,-0.16]
β4      -0.76    [-1.39,-0.16]      -0.77    [-1.39,-0.17]
β5       0.04    [ 0.03, 0.05]       0.04    [ 0.03, 0.05]
β6       0.00    [-0.02, 0.02]       0.00    [-0.02, 0.02]
β7       0.01    [-0.01, 0.03]       0.01    [-0.01, 0.03]
β8      -0.11    [-0.57, 0.36]      -0.10    [-0.58, 0.35]
σ2       1.21    [ 0.93, 1.54]       1.21    [ 0.92, 1.54]

of α far from 2. Figures 5 and 6 contrast the prior and the posterior distributions of ν and α under the log-Student t and log-exponential power models. They reflect how the prior and posterior distributions differ and that the latter is strongly driven by the data itself. For example, in spite of the fact that the Jeffreys prior for ν has a thicker right tail, the posterior distribution of ν looks somewhat similar under both priors. The contrast between the Jeffreys prior for α and its

posterior is somewhat surprising, in view of the results for the other priors. This is explained

by the fact that σ 2 and α are highly (positively) correlated a posteriori. For k = 9, the Jeffreys

prior assigns high probabilities to low values of σ 2 (much higher in comparison with the other

two priors) and therefore the Jeffreys prior is implicitly driving the posterior of α towards 1.

Using a modification of the Jeffreys prior where p = 1, we find indeed that the posterior of α is

shifted much to the right, with a mode around 1.5.
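The repeated random partition used for the LPS comparison above (103 inference and 34 prediction observations out of 137) and the score in (16) can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, and the predictive densities f(t∗r | t∗1 , . . . , t∗n−q ) are taken as given inputs, since their computation depends on the fitted model.

```python
import math
import random


def split_sample(n, q, rng):
    """Randomly split indices 0..n-1 into an inference part (n - q) and a prediction part (q)."""
    idx = list(range(n))
    rng.shuffle(idx)
    return idx[: n - q], idx[n - q:]


def log_predictive_score(pred_densities):
    """LPS as in (16): minus the average log predictive density over the prediction sample."""
    q = len(pred_densities)
    return -sum(math.log(d) for d in pred_densities) / q


# One of the repetitions; the seed is arbitrary and only makes the split reproducible.
rng = random.Random(0)
inference_idx, prediction_idx = split_sample(137, 34, rng)
```

Repeating the split with different seeds and recording which model attains the lower score in each repetition gives the kind of "better than log-normal" percentage reported in Table 4.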

Combining these results, the log-logistic model appears the best candidate for fitting this

dataset. This is in line with the results in Lee and Wang (2003) in which, using a frequentist

approach, the log-logistic model is preferred to the log-normal and other standard models. The

posterior distribution of β is somewhat different from that for the log-normal model (see Table

5). In particular, the effect of the test treatment is less pronounced and the effect of age is also

less prominent and more in line with our expectations. The results on β are relatively close to

the classical ones reported in Barros et al. (2008) using the log-Birnbaum Saunders Student t

model and to the ones in Lee and Wang (2003) using the log-logistic model. The estimates of

σ 2 cannot be compared across models because it has a different interpretation for each model.

Table 4: Comparison of the fitted models by DIC and the percentage of repetitions (out of 50) for which the model performs better than the log-normal model in terms of the LPS score.

                  Jeffreys              Type I Ind. Jeffreys    Ind. Jeffreys
Model             DIC       Better      DIC       Better        DIC       Better
                            than LN               than LN                 than LN
Log-normal        1449.10               1449.51                 1449.51
Log-Student t     1446.08   60%         1445.66   74%
Log-Laplace       1444.25   66%         1444.28   66%           1444.28   66%
Log-exp. power    1444.03   74%         1444.33   84%           1444.33   84%
Log-logistic      1444.53   80%         1444.24   88%           1444.24   88%

[Figure 4, panels (a) to (c): axes are log-Bayes factors and log-predictive score; points are labelled LN, LST, LLAP, LEP and LLOG.]

Figure 4: Bayes Factor of each model with respect to the log-normal and average of the LPS score obtained for the 50 repetitions. (a) Jeffreys prior, (b) Type I Ind. Jeffreys prior, (c) Ind. Jeffreys prior.

[Figure 5, panels (a) and (b): x-axis ν (0 to 30), y-axis relative frequency / density.]

Figure 5: Histogram of the posterior for ν with the log-Student t model. The solid line corresponds to π(ν) defined by (a) Jeffreys prior and (b) Type I Independence Jeffreys prior.

[Figure 6, panels (a) to (c): x-axis α (1.0 to 2.0), y-axis relative frequency / density.]

Figure 6: Histogram of the posterior for α with the log-exponential power model. The solid line corresponds to π(α) defined by (a) Jeffreys prior, (b) Type I Ind. Jeffreys prior and (c) Ind. Jeffreys prior.

Table 5: Summary of the posterior distribution of the parameters of the model under the Jeffreys prior for the log-Student t, log-Laplace, log-logistic and log-exponential power models. Results are presented on the basis of set observations. β0 : Intercept, β1 : Treat (test), β2 : Type (squamous), β3 : Type (small cell), β4 : Type (adeno), β5 : Status, β6 : Time from diagnosis, β7 : Age, β8 : Prior therapy (yes).

        Log-Student t               Log-Laplace
        Median   HPD 95%            Median   HPD 95%
β0       2.12    [ 0.79, 3.36]       2.08    [ 0.82, 3.29]
β1      -0.07    [-0.43, 0.26]      -0.06    [-0.42, 0.25]
β2       0.03    [-0.50, 0.57]      -0.04    [-0.55, 0.51]
β3      -0.73    [-1.23,-0.28]      -0.73    [-1.20,-0.26]
β4      -0.74    [-1.26,-0.26]      -0.67    [-1.14,-0.21]
β5       0.04    [ 0.03, 0.04]       0.04    [ 0.03, 0.04]
β6       0.00    [-0.02, 0.02]       0.01    [-0.02, 0.02]
β7       0.01    [-0.01, 0.03]       0.01    [-0.01, 0.03]
β8      -0.09    [-0.49, 0.33]      -0.10    [-0.48, 0.31]
σ2       0.66    [ 0.37, 1.01]       0.60    [ 0.41, 0.81]
θ        4.28    [ 1.45,12.61]       -       -

        Log-exp. power              Log-logistic
        Median   HPD 95%            Median   HPD 95%
β0       2.00    [ 0.70, 3.27]       2.06    [ 0.74, 3.31]
β1      -0.08    [-0.41, 0.28]      -0.10    [-0.46, 0.24]
β2      -0.04    [-0.55, 0.52]      -0.02    [-0.55, 0.51]
β3      -0.72    [-1.19,-0.22]      -0.73    [-1.23,-0.26]
β4      -0.69    [-1.20,-0.20]      -0.76    [-1.31,-0.26]
β5       0.04    [ 0.03, 0.04]       0.04    [ 0.03, 0.05]
β6       0.00    [-0.02, 0.02]       0.00    [-0.02, 0.02]
β7       0.01    [-0.01, 0.03]       0.01    [-0.01, 0.03]
β8      -0.10    [-0.50, 0.30]      -0.10    [-0.52, 0.30]
σ2       0.87    [ 0.45, 1.64]       0.33    [ 0.24, 0.43]
θ        1.15    [ 1.00, 1.54]       -       -

The posterior distributions of the mixing parameters (not reported) vary substantially between the patients, suggesting heterogeneity in the data. Figure 7 formalizes this by presenting the Bayes factor in favour of being an outlier for each of the 137 observations. There is clear evidence for the existence of outlying observations under the three considered priors for all models. Although all priors present similar results, this evidence is slightly stronger for the Jeffreys prior. Moreover, the choice of the mixture model does not greatly affect the conclusions. The analysis suggests that observations 77 and 85 are very clear outliers, while 44 and 100 are also obvious outliers. Patients 77 and 85 had an uncensored survival time of 1 day (lower than the

2nd percentile of the survival times), were under the standard treatment and had a squamous

type of tumor. Observations 15, 17, 21, 75 and 95 are also detected as outlying observations

for some of these models. With the exception of patient 21, they all correspond to uncensored

observations. While observations 15, 95 and 100 have a small survival time (8, 2 and 11 days respectively), the survival times associated with patients 17, 21, 44 and 75 are larger (384, 123, 392 and 991 days respectively). In particular, the survival time of patient 75 is above the 99th percentile of the survival times of patients with the same type of tumor (squamous). Similarly, the survival times of patients 17 and 44 are above the 98th percentile for patients that had the same type of tumor (small cell). None of the patients detected as possible outliers had tumors of type adeno or large cell. Additionally, patient 95 has a considerably larger number of months

from diagnosis than other patients with the same type of tumor (small cell).

The previous results are roughly in line with the results in Barros et al. (2008), where

observations 77, 85 and 100 were also identified as possible influential observations when fitting

a log-Birnbaum-Saunders model. However, using a log-Birnbaum-Saunders-t model they also

label observations 12, 77, 95 and 106 as influential observations, whereas our models would

point to observations 44 and possibly 15 and 95 as additional outliers. This illustrates that

different models might detect different outliers.
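The interpretation rule of Kass and Raftery (1995) applied to 2 log(BF) in Figures 3 and 7 can be encoded as a small lookup. This is a sketch with a hypothetical function name; the category boundaries are those of the Kass and Raftery scale, and which of them is drawn as the detection threshold in the figures is not restated in this section.

```python
def kass_raftery_category(two_log_bf):
    """Evidence category for 2*log(BF10) on the Kass and Raftery (1995) scale."""
    if two_log_bf < 0.0:
        # Negative values mean the Bayes factor favours H0 (no outlier).
        return "favours H0"
    if two_log_bf < 2.0:
        return "not worth more than a bare mention"
    if two_log_bf < 6.0:
        return "positive"
    if two_log_bf <= 10.0:
        return "strong"
    return "very strong"
```

Applied to the per-observation values of 2 log(BF), a function of this kind turns the plotted evidence into the verbal labels used in the discussion of the detected outliers.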

5

Concluding remarks

We recommend the use of mixtures of life distributions defined in Section 2 as a convenient

framework for survival analysis, particularly when standard models such as the Weibull or log-normal are not able to capture some features of the data. This approach intuitively leads to flexible distributions on the basis of a known distribution by mixing with a random quantity. This

can also be interpreted as random effects or frailty terms. Specifically, the use of these mixture

families can be justified in the presence of unobserved heterogeneity or outlying observations.

In particular, the SMLN family is presented as an example of mixtures of life distributions. This

[Figure 7, panels (a) to (d): x-axis observation index (0 to 140), y-axis 2log(BF); the labelled points include observations 15, 17, 21, 44, 75, 77, 85, 95 and 100.]

Figure 7: Under Jeffreys prior. 2 × log(BF ) in favour of the hypothesis H1 : λi ≠ λref (ui ≠ uref ) versus H0 : λi = λref (ui = uref ). For (a) log-Student t, (b) log-Laplace, (c) log-exp. power and (d) log-logistic model. Horizontal lines reflect the interpretation rule of Kass and Raftery (1995).

family, while based on the log-normal model, naturally allows us to fit data with a variety of tail

behavior. The mixing is applied to the shape parameter of the log-normal distribution and the

resultant distribution is quite flexible and can be adjusted by choosing the mixing distribution.

This makes this family applicable in a wide range of situations. Covariates are included through

an accelerated failure time (AFT) specification.

We consider objective Bayesian inference under the AFT-SMLN model. We propose three

different Jeffreys-type priors. These prior distributions are not proper and therefore the propriety of the posterior distribution cannot be guaranteed. Theorem 2 provides necessary and

sufficient conditions for the propriety of the posterior. As these conditions are not always easy

to check in practice, Corollary 2 and Theorem 3 provide some extra guidance and results for

specific mixing distributions. Additionally, the problem associated with the use of point observations was explored. The use of set observations was considered as a solution of this problem.

This solution can easily be implemented using Gibbs Sampling. We recommend their use in

situations in which it is not easy to verify the existence of problems associated with point observations. Additionally, we propose a methodology for outlier detection that is based on the mixing

structure. It is intuitively clear that outlying observations are associated with extreme values for

their corresponding mixing variable and the evidence of outlying observations is formalized by

means of Bayes Factors. The choice of a reference value is critical and is not always trivial. We

provide a general rule for situations in which the mixing distribution has a finite expectation.

We also provide guidance in those examples where this rule cannot be applied.

The mixing framework proposed here can be used with any proper mixing distribution,

whether the induced survival time distribution has a closed-form density function or not. The

proposed MCMC inference scheme does not rely on a closed form expression for the survival

density with the mixing variables integrated out, so our Bayesian inference can be used much

more widely than in the examples illustrated here. The challenge then may be that the Jeffreys-type prior for the parameter(s) of the mixing distribution needs to be derived from Theorem 1

(rather than from the integrated survival density) and this may not be trivial in general.

A

Proofs

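Theorem 1. Taking the negative expectation of the second derivatives of the log likelihood, the expressions k1 (θ), k2 (θ), k3 (θ) and k4 (θ) are given by

\[
k_1(\theta) = n E_{T_i}\left[ \left(\frac{\log(t_i) - x_i'\beta}{\sigma}\right)^{2} \left( \frac{E_{\Lambda_i}\left[\Lambda_i f_{LN}\left(t_i \mid x_i'\beta, \frac{\sigma^2}{\Lambda_i}\right)\right]}{f(t_i)} \right)^{2} \right], \tag{19}
\]

\[
k_2(\theta) = \frac{n}{4} E_{T_i}\left[ \left(\frac{\log(t_i) - x_i'\beta}{\sigma}\right)^{4} \left( \frac{E_{\Lambda_i}\left[\Lambda_i f_{LN}\left(t_i \mid x_i'\beta, \frac{\sigma^2}{\Lambda_i}\right)\right]}{f(t_i)} \right)^{2} - 1 \right], \tag{20}
\]

\[
k_3(\theta) = \frac{n}{2} E_{T_i}\left[ \left(\frac{\log(t_i) - x_i'\beta}{\sigma}\right)^{2} \frac{E_{\Lambda_i}\left[\Lambda_i f_{LN}\left(t_i \mid x_i'\beta, \frac{\sigma^2}{\Lambda_i}\right)\right]}{f^2(t_i)} \int_0^\infty f_{LN}\left(t_i \mid x_i'\beta, \frac{\sigma^2}{\lambda_i}\right) \frac{d}{d\theta} dP_{\Lambda_i}(\lambda_i \mid \theta) \right] - \frac{1}{2} \sum_{i=1}^{n} \int_0^\infty \frac{d}{d\theta} dP_{\Lambda_i}(\lambda_i \mid \theta), \tag{21}
\]

\[
k_4(\theta) = n E_{T_i}\left[ \left( \frac{\int_0^\infty f_{LN}\left(t_i \mid x_i'\beta, \frac{\sigma^2}{\lambda_i}\right) \frac{d}{d\theta} dP_{\Lambda_i}(\lambda_i \mid \theta)}{f(t_i)} \right)^{2} \right] - \sum_{i=1}^{n} \int_0^\infty \frac{d^2}{d\theta^2} dP_{\Lambda_i}(\lambda_i \mid \theta). \tag{22}
\]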

Corollary 1. The proof follows directly from Theorem 1 using the structure of the determinant of the Fisher information matrix and its sub-matrices.

Proposition 1. The contribution of the censored observations to the likelihood function

is given respectively by 1 − FT (ti ), FT (ti ) or FT (ti2 ) − FT (ti1 ) in the case of right, left or

interval censoring. These three quantities have an upper bound of 1 and using the independence

of the survival times, the likelihood of the data is bounded by the likelihood of the n − nc

observed survival times. Therefore, the marginal likelihood of the complete data is bounded by

the marginal likelihood of these n − nc observations and the result follows.

Theorem 2. (Based on Fernández and Steel, 2000). Let T = (T1 , . . . , Tn )′ . It follows that

\[
f_T(t \mid \beta, \sigma^2, \theta) = \int_{\mathbb{R}_+^n} \prod_{i=1}^{n} t_i^{-1} \, (2\pi\sigma^2)^{-\frac{n}{2}} \prod_{i=1}^{n} \lambda_i^{\frac{1}{2}} \, e^{-\frac{1}{2\sigma^2}\left[(\beta - a)'A(\beta - a) + S^2(D, y)\right]} \prod_{i=1}^{n} dP(\lambda_i \mid \theta), \tag{23}
\]

where y = (log(t1 ), . . . , log(tn ))′ , a = (X′DX)^{−1} X′Dy, A = X′DX and S 2 (D, y) = y′Dy − y′DX(X′DX)^{−1} X′Dy. The posterior distribution of (β, σ 2 , θ) is proper if and only if the marginal density of T , fT (t), is finite. In the following, we use Fubini's theorem in order to exchange the order of the integrals. Integrating with respect to β, we have

\[
f_T(t) \propto \int_{\mathbb{R}_+} \int_{\Theta} \int_{\mathbb{R}_+^n} \prod_{i=1}^{n} t_i^{-1} \, (\sigma^2)^{-\frac{n + 2p - k}{2}} \, \frac{\prod_{i=1}^{n} \lambda_i^{\frac{1}{2}}}{\sqrt{\det(X'DX)}} \, e^{-\frac{S^2(D, y)}{2\sigma^2}} \, \pi(\theta) \prod_{i=1}^{n} dP(\lambda_i \mid \theta) \, d\theta \, d\sigma^2. \tag{24}
\]

After integrating with respect to σ 2 , it follows that

\[
f_T(t) \propto \prod_{i=1}^{n} t_i^{-1} \int_{\Theta} \pi(\theta) \int_{\mathbb{R}_+^n} \prod_{i=1}^{n} \lambda_i^{\frac{1}{2}} \, \left(\det(X'DX)\right)^{-\frac{1}{2}} \left[S^2(D, y)\right]^{-\frac{n + 2p - k - 2}{2}} \prod_{i=1}^{n} dP(\lambda_i \mid \theta) \, d\theta, \tag{25}
\]

provided that n + 2p − k − 2 > 0. As X has full rank, n > k is also required. Finally, provided that ti ≠ 0 for all i ∈ {1, . . . , n}, the theorem holds.
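The two integration steps that lead from (23) to (24) and from (24) to (25) can be written out explicitly. The sketch below assumes, as the exponents in (24) suggest, a prior of the form π(β, σ 2 , θ) ∝ (σ 2 )^{−p} π(θ); that form is inferred here from the exponents rather than quoted from the section that defines the priors, and the symbol m is introduced only for this illustration.

```latex
% Step 1: Gaussian integral over \beta \in \mathbb{R}^k, with A = X'DX
\int_{\mathbb{R}^k} \exp\left\{ -\frac{(\beta - a)'A(\beta - a)}{2\sigma^2} \right\} d\beta
  = (2\pi\sigma^2)^{k/2} \, \det(X'DX)^{-1/2},
% so the powers of \sigma^2 from the likelihood, the assumed prior and Step 1 combine as
(\sigma^2)^{-n/2} \, (\sigma^2)^{-p} \, (\sigma^2)^{k/2} = (\sigma^2)^{-\frac{n + 2p - k}{2}}.

% Step 2: inverse-gamma type integral over \sigma^2, with m = (n + 2p - k - 2)/2
\int_0^\infty (\sigma^2)^{-(m + 1)} \exp\left\{ -\frac{S^2(D, y)}{2\sigma^2} \right\} d\sigma^2
  = \Gamma(m) \left[ \frac{S^2(D, y)}{2} \right]^{-m}, \qquad m > 0.
```

The requirement m > 0 in the second step is exactly the condition n + 2p − k − 2 > 0 stated in the proof.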

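Lemma 1. Under the same assumptions as in Theorem 2, the posterior distribution of (β, σ 2 , θ) given the data is proper if and only if n > max{k, k − 2p + 2} and

\[
\int_{\Theta} \int_{0 < \lambda_1 < \cdots < \lambda_n < \infty} \prod_{i \notin \{m_1, \ldots, m_k, m_{k+1}\}} \lambda_i^{\frac{1}{2}} \; \lambda_{m_{k+1}}^{-\frac{n + 2p - k - 3}{2}} \, \pi(\theta) \prod_{i=1}^{n} dP(\lambda_i \mid \theta) \, d\theta < \infty, \tag{26}
\]

where

\[
\prod_{i=1}^{k} \lambda_{m_i} \equiv \max\left\{ \prod_{i=1}^{k} \lambda_{l_i} : \det\left( (x_{l_1} \cdots x_{l_k}) \right) \neq 0 \right\}, \tag{27}
\]

\[
\prod_{i=1}^{k+1} \lambda_{m_i} \equiv \max\left\{ \prod_{i=1}^{k+1} \lambda_{l_i} : \det\begin{pmatrix} x_{l_1} & \cdots & x_{l_{k+1}} \\ \log(t_{l_1}) & \cdots & \log(t_{l_{k+1}}) \end{pmatrix} \neq 0 \right\}. \tag{28}
\]

Proof: It can be shown that det(X′DX) has upper and lower bounds that are proportional to $\prod_{i=1}^{k} \lambda_{m_i}$ and S 2 (D, Y ) has upper and lower bounds that are proportional to λmk+1 (see Fernández and Steel, 1999). Combined with (25), this lemma is immediate.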

Corollary 2. Excluding a set of zero Lebesgue measure, it follows that λmk+1 in Lemma 1 is equal to λb = max{λi : i ≠ m1 , . . . , mk }.

(i) For p = 1. By Lemma 1, the integral in (26) is bounded by $\int_{\Theta} \pi(\theta) \, d\theta$. Hence, a sufficient condition for the propriety of the posterior distribution is that π(θ) is proper.

(ii) For p = 1 + k/2. By Lemma 1, (26) is bounded by $\int_{\Theta} E(\lambda_b^{-k/2} \mid \theta) \, \pi(\theta) \, d\theta$. However, $E(\lambda_b^{-k/2} \mid \theta) \le E(\lambda_{(1)}^{-k/2} \mid \theta)$ where λ(1) = min{λ1 , . . . , λn }. Using the density of the first order statistic it follows that $E(\lambda_{(1)}^{-k/2} \mid \theta) \le n E(\lambda_i^{-k/2} \mid \theta)$ ∀i = 1, . . . , n and hence, as the λi 's are iid, a sufficient condition for the propriety of the posterior distribution is

\[
\int_{\Theta} E(\lambda_i^{-k/2} \mid \theta) \, \pi(\theta) \, d\theta < \infty. \tag{29}
\]

(iii) The proof is immediate when using Fubini's theorem in (25).

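Theorem 3.

(i) It can be shown that the Fisher information matrix corresponds to

\[
\begin{pmatrix}
\frac{1}{\sigma^2} \frac{\nu + 1}{\nu + 3} \sum_{i=1}^{n} x_i x_i' & 0 & 0 \\
0 & \frac{n}{2\sigma^4} \frac{\nu}{\nu + 3} & -\frac{n}{\sigma^2} \frac{1}{(\nu + 1)(\nu + 3)} \\
0 & -\frac{n}{\sigma^2} \frac{1}{(\nu + 1)(\nu + 3)} & \frac{n}{4} \left[ \Psi'\left(\frac{\nu}{2}\right) - \Psi'\left(\frac{\nu + 1}{2}\right) - \frac{2(\nu + 5)}{\nu(\nu + 1)(\nu + 3)} \right]
\end{pmatrix}. \tag{30}
\]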

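Therefore, the components depending on ν of the Jeffreys, Type I Independence Jeffreys and Independence Jeffreys prior are, respectively

\[
\pi^{J}(\nu) = \sqrt{\frac{\nu (\nu + 1)^{k}}{(\nu + 3)^{(k+1)}}} \, \sqrt{\Psi'\left(\frac{\nu}{2}\right) - \Psi'\left(\frac{\nu + 1}{2}\right) - \frac{2(\nu + 3)}{\nu(\nu + 1)^2}}, \tag{31}
\]

\[
\pi^{II}(\nu) = \sqrt{\frac{\nu}{\nu + 3}} \, \sqrt{\Psi'\left(\frac{\nu}{2}\right) - \Psi'\left(\frac{\nu + 1}{2}\right) - \frac{2(\nu + 3)}{\nu(\nu + 1)^2}}, \tag{32}
\]

\[
\pi^{I}(\nu) = \sqrt{\Psi'\left(\frac{\nu}{2}\right) - \Psi'\left(\frac{\nu + 1}{2}\right) - \frac{2(\nu + 5)}{\nu(\nu + 1)(\nu + 3)}}. \tag{33}
\]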

It can be shown that π J (ν) and π II (ν) are proper priors for ν (using Fonseca et al., 2008). Therefore, Corollary 2 part (i) implies the propriety of the posterior distribution for these two priors. Corollary 2 part (iii) indicates that the Independence Jeffreys prior does not produce a proper posterior. In fact, using Stirling's approximation for the gamma function, it follows that

\[
\prod_{i=1}^{n} p(\lambda_i \mid \nu) \approx \left( \frac{\nu/2}{\nu/2 - 1} \right)^{n\nu/2} \left( \frac{1}{\nu/2 - 1} \right)^{n/2} \left( \prod_{i=1}^{n} \lambda_i \right)^{\nu/2 - 1} e^{-\frac{\nu}{2}\left( \sum_{i=1}^{n} \lambda_i - n \right)} e^{-n}, \tag{34}
\]

as ν goes to infinity. Moreover, by Corollary 1 in Fonseca et al. (2008), π I (ν) is of order ν^{−2} when ν → ∞. Therefore, $\int_0^\infty \pi(\nu) \prod_{i=1}^{n} p(\lambda_i \mid \nu) \, d\nu = \infty$ for any value of λ in A, where $A = \{\lambda = (\lambda_1, \ldots, \lambda_n)' : \sum_{i=1}^{n} \lambda_i < n\}$.

(ii) For the Jeffreys prior, $E(\lambda_1^{-k/2})$ is finite and therefore the posterior is proper. As the parameter θ is not required, the Independence Jeffreys and Type I Independence Jeffreys coincide and Corollary 2 part (i) indicates that the posterior is proper under these priors.

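(iii) The Fisher Information matrix was derived by Martı́n and Pérez (2009) and is given by

\[
\begin{pmatrix}
\frac{\alpha(\alpha - 1)\Gamma(1 - \frac{1}{\alpha})}{\sigma^2 \Gamma(\frac{1}{\alpha})} \sum_{i=1}^{n} x_i x_i' & 0 & 0 \\
0 & \frac{n\alpha}{\sigma^2} & -\frac{n\left(1 + \Psi(1 + \frac{1}{\alpha})\right)}{\sigma\alpha} \\
0 & -\frac{n\left(1 + \Psi(1 + \frac{1}{\alpha})\right)}{\sigma\alpha} & \frac{n}{\alpha^3} \left[ \left(1 + \frac{1}{\alpha}\right) \Psi'\left(1 + \frac{1}{\alpha}\right) + \left(1 + \Psi\left(1 + \frac{1}{\alpha}\right)\right)^2 - 1 \right]
\end{pmatrix}.
\]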

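Therefore, the components depending on α of the Jeffreys, Type I Independence Jeffreys and Independence Jeffreys prior are, respectively

\[
\pi^{J}(\alpha) = \left[ \frac{\alpha(\alpha - 1)\Gamma(1 - 1/\alpha)}{\Gamma(1/\alpha)} \right]^{k/2} \frac{1}{\alpha} \sqrt{\left(1 + \frac{1}{\alpha}\right) \Psi'\left(1 + \frac{1}{\alpha}\right) - 1}, \tag{35}
\]

\[
\pi^{II}(\alpha) = \frac{1}{\alpha} \sqrt{\left(1 + \frac{1}{\alpha}\right) \Psi'\left(1 + \frac{1}{\alpha}\right) - 1}, \tag{36}
\]

\[
\pi^{I}(\alpha) = \frac{1}{\alpha^{3/2}} \sqrt{\left(1 + \frac{1}{\alpha}\right) \Psi'\left(1 + \frac{1}{\alpha}\right) + \left(1 + \Psi\left(1 + \frac{1}{\alpha}\right)\right)^2 - 1}. \tag{37}
\]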

As the previous components are bounded continuous functions of α in (1, 2), they are proper priors for α. Corollary 2 part (i) implies the propriety of the posterior distribution under the Type I Independence Jeffreys and Independence Jeffreys prior. The propriety of the posterior under the Jeffreys prior can be verified using Corollary 2 part (ii) because $E(\lambda_1^{-k/2} \mid \alpha)$ is a continuous bounded function for α ∈ (1, 2). In fact,

\[
E(\lambda_1^{-k/2} \mid \alpha) = \frac{\Gamma(3/2)}{\Gamma(1 + 1/\alpha)} \, \frac{E(W^{\frac{k+1}{2}} \mid \alpha)}{E(Z^{\frac{k+1}{2}} \mid \alpha)} = \frac{\Gamma(3/2)}{\Gamma(1 + 1/\alpha)} \, \frac{\Gamma((k + 1)/\alpha + 1)}{\Gamma((k + 3)/2)}, \tag{38}
\]

where W ∼ Weibull(α/2, 1) and Z ∼ Exponential(1). The latter uses the lemma in Meintanis (1998) which states that a Weibull(a, 1) random variable can be represented as the ratio of an Exponential(1) and an independent positive stable(a) random variable.

(iv) As the parameter θ is not required, we can conclude the same as in the log-Laplace model for the Independence Jeffreys and Type I Independence Jeffreys prior. For the Jeffreys prior, Corollary 2 part (ii) is used. It can be shown that $\Omega_i = \sqrt{1/(4\Lambda_i)}$ has an Asymptotic Kolmogorov distribution with density function $g(\omega_i) = 8\omega_i \sum_{k=1}^{\infty} (-1)^{k+1} k^2 e^{-2k^2\omega_i^2}$, for ωi > 0. Therefore, $E(\lambda_1^{-k/2}) = 2^k E(\omega_i^k)$ which is finite because

k+2

Z ∞

∞

∞

X

X

1

1

k+1 2

k+1 −2k2 ω12

k+1 2

(−1) k

ω1 e

dω1 =

(−1) k √

(39)

k+1

2k

0

k=1

k=1

is finite (it has the form $\sum_{k=1}^{\infty} (-1)^{k+1} a_k$ with ak → 0 as k → ∞).
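The closed form on the right-hand side of (38) in part (iii) is easy to evaluate numerically, which gives a quick check that the moment stays finite over α ∈ (1, 2). The sketch below uses a hypothetical function name and simply evaluates that expression on the log scale; k = 9 is the number of regression coefficients in the application.

```python
import math


def neg_half_k_moment(alpha, k):
    """Right-hand side of (38): E(lambda_1^(-k/2) | alpha) for the log-exponential power model."""
    log_val = (math.lgamma(1.5) + math.lgamma((k + 1) / alpha + 1.0)
               - math.lgamma(1.0 + 1.0 / alpha) - math.lgamma((k + 3) / 2.0))
    return math.exp(log_val)


# Evaluate on a grid of alpha values strictly inside (1, 2).
grid_values = [neg_half_k_moment(1.0 + j / 100.0, 9) for j in range(1, 100)]
```

At α = 2 the expression reduces to one, which is what one would expect when the model collapses to the log-normal case with no mixing.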

Theorem 4. This proof requires Lemma 1. As was argued in Fernández and Steel (1999),

if s is the largest number of observations that can be written as an exact linear combination

of their covariates, it follows that λmk+1 in Lemma 1 corresponds to λ(n−s) which represent the

(n−s)-th order statistic of λ1 , . . . , λn . The rest of the proof is obtained by iteratively integrating

(26). We use the following inequality (see Fernández and Steel, 1999)

Z λi+1

λvi+1

λvi+1 −rλi+1

−rλi

e

≤

λv−1

e

dλ

≤

,

r, v > 0.

(40)

i

i

v

v

0

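Note that the integral in (40) is infinite for v ≤ 0. After integrating with respect to the n − s − 1 smallest λ’s, (26) has an upper bound and a lower bound given respectively by

\[
\int_0^{\infty} \int_{0 < \lambda_{(n-s)} < \cdots < \lambda_{(n)} < \infty} \left[ \frac{(\nu/2)^{\nu/2}}{\Gamma(\nu/2)} \right]^{n-s} \frac{\left( \frac{\nu+1}{2} \right)^{-(n-s-1)}}{(n-s-1)!} \, \lambda_{(n-s)}^{a-1} \, e^{-\lambda_{(n-s)} \frac{\nu}{2}} \prod_{i=n-s+1}^{n} dP(\lambda_{(i)} \mid \nu) \, \pi(\nu) \, d\nu \tag{41}
\]

and

\[
\int_0^{\infty} \int_{0 < \lambda_{(n-s)} < \cdots < \lambda_{(n)} < \infty} \left[ \frac{(\nu/2)^{\nu/2}}{\Gamma(\nu/2)} \right]^{n-s} \frac{\left( \frac{\nu+1}{2} \right)^{-(n-s-1)}}{(n-s-1)!} \, \lambda_{(n-s)}^{a-1} \, e^{-\frac{(n-s)\nu}{2} \lambda_{(n-s)}} \prod_{i=n-s+1}^{n} dP(\lambda_{(i)} \mid \nu) \, \pi(\nu) \, d\nu. \tag{42}
\]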

Here, $a = -\frac{n+2p-k-3}{2} + \frac{\nu}{2} + \frac{(n-s-1)(\nu+1)}{2}$. The integral with respect to $\lambda_{(n-s)}$ in (41) and (42) is finite only if a > 0, which is equivalent to ν(n − s) > s + 2p − k − 2. Hence, the propriety of the posterior distribution requires $\nu > \frac{n-k+(2p-2)}{n-s} - 1$.
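
The condition a > 0 and the resulting bound on ν can be verified with a few lines of code. The sketch below assumes hypothetical values of n, k, p and s, chosen only for illustration, and the function names are not from the paper.

```python
def exponent_a(nu, n, k, p, s):
    """Exponent a of lambda_(n-s) in the bounds (41) and (42)."""
    return -(n + 2 * p - k - 3) / 2.0 + nu / 2.0 + (n - s - 1) * (nu + 1) / 2.0

def nu_threshold(n, k, p, s):
    """Lower bound on nu implied by the requirement a > 0."""
    return (n - k + (2 * p - 2)) / (n - s) - 1.0

# Hypothetical values chosen for illustration, not taken from the paper.
n, k, p, s = 20, 3, 4, 5
nu_star = nu_threshold(n, k, p, s)

# a vanishes at the threshold and is increasing in nu.
print(nu_star, exponent_a(nu_star, n, k, p, s))
print(exponent_a(nu_star + 0.1, n, k, p, s) > 0)
```

Because a is linear and increasing in ν, the sign change at the threshold confirms that a > 0 holds exactly when ν exceeds the stated bound.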

Theorem 5. The proof follows from Fubini’s theorem and the proof of Theorem 2. Define

I(·) as the integral in (25) as a function of the observables. As E is bounded, $\int_E I(s)\,ds$ is

bounded as long as I(·) is finite except on a set of zero Lebesgue measure.

References

Angeletti, F., E. Bertin, and P. Abry (2011). Critical moment definition and estimation, for finite

size observation of log-exponential-power law random variables. arXiv:1103.5033.

Barros, M., G. Paula, and V. Leiva (2008). A new class of survival regression models with

heavy-tailed errors: robustness and diagnostics. Lifetime Data Analysis 14, 316–332.

Bernardo, J. and A. Smith (1994). Bayesian Theory. Chichester: Wiley.

Cassidy, D., M. Hamp, and R. Ouyed (2009). Pricing European options with a log Student’s

t-distribution: a Gosset formula. Physica A: Statistical Mechanics and its Applications 389,

5736–5748.

Chib, S. (1995). Marginal likelihood from the Gibbs output. Journal of the American Statistical

Association 90, 1313–1321.

Chib, S. and I. Jeliazkov (2001). Marginal likelihood from the Metropolis-Hastings output.

Journal of the American Statistical Association 96, 270–281.

Fernández, C. and M. Steel (1998). On the dangers of modelling through continuous distributions: A Bayesian perspective. Bayesian Statistics 6, J.M. Bernardo, J.O. Berger, A.P. Dawid,

and A.F.M. Smith (eds.), Oxford University Press, 213–238.

Fernández, C. and M. Steel (1999). Multivariate Student-t regression models: Pitfalls and

inference. Biometrika 86, 153–167.

Fernández, C. and M. Steel (2000). Bayesian regression analysis with scale mixtures of normals.

Econometric Theory 16, 80–101.

Fonseca, T., M. Ferreira, and H. Migon (2008). Objective Bayesian analysis for the Student-t

regression model. Biometrika 95, 325–333.

Good, I. (1952). Rational decisions. Journal of the Royal Statistical Society. Series B (Methodological) 14, 107–114.

Hogg, R. V. and S. A. Klugman (1983). On the estimation of long tailed skewed distributions

with actuarial applications. Journal of Econometrics 23, 91–102.

Holmes, C. and L. Held (2006). Bayesian auxiliary variable models for binary and multinomial

regression. Bayesian Analysis 1, 145–168.

Ibragimov, I. and K. Chernin (1959). On the unimodality of stable laws. Theory of Probability

and Its Applications 4, 417–419.

Jewell, N. (1982). Mixtures of exponential distributions. The Annals of Statistics 10, 479–484.

Kalbfleisch, J. and R. Prentice (2002). The Statistical Analysis of Failure Time Data (2nd ed.).

Wiley.

Kass, R. and A. Raftery (1995). Bayes factors. Journal of the American Statistical Association 90, 773–795.

Lange, K., R. Little, and J. Taylor (1989). Robust statistical modelling using the t distribution.

Journal of the American Statistical Association 84, 881–896.

Lee, E. and J. Wang (2003). Statistical Methods for Survival Data Analysis (3rd ed.). Wiley.

Lindsey, J., W. Byrom, J. Wang, P. Jarvis, and B. Jones (2000). Generalized nonlinear models

for pharmacokinetic data. Biometrics 56, 81–88.

Marshall, A. W. and I. Olkin (2007). Life Distributions. Springer.

Martín, J. and C. Pérez (2009). Bayesian analysis of a generalized lognormal distribution.

Computational Statistics and Data Analysis 53, 1377–1387.

McDonald, J. and R. Butler (1987). Some generalized mixture distributions with an application

to unemployment duration. The Review of Economics and Statistics 69, 232–240.