Capability Computing. HPCx: Going out in a blaze of glory


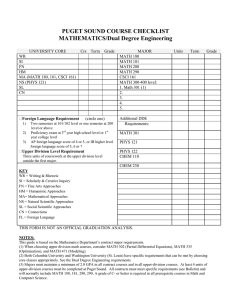

Capability Computing: The newsletter of the HPCx community [ISSUE 13, Autumn 2009]

HPCx: Going out in a blaze of glory. We celebrate seven years of supporting world-class research.

Image courtesy London Fire Brigade. See FireGrid and Urgent Computing on page 12.

Contents
2 Editorial
Some words from the HPCx service providers
3 Moving the capability boundaries
6 Computational materials chemistry on HPCx
9 Modelling enzyme-catalysed reactions
12 FireGrid and Urgent Computing
14 Interactive biomolecular modelling with VMD and NAMD
15 CPMD simulations of modifier ion migration in bioactive glasses
17 Searching for gene-gene interactions in colorectal cancer
18 Simulations of antihydrogen formation
20 New EPCC Research Collaboration webpages
The Sixth DEISA Extreme Computing Initiative
The Seventh HPCx Annual Seminar

Editorial
Alan Gray, EPCC, The University of Edinburgh

At the end of January, after 7 years of tireless number crunching, the HPCx service will close its virtual doors and move into a well-earned retirement. HPCx has enabled a wealth of results across a wide range of disciplines, and in turn has facilitated progress which otherwise would not have been possible. I urge you not only to read this issue of Capability Computing, but also to take some time to revisit some of the many successes highlighted in previous issues, available at www.hpcx.ac.uk/about/newsletter.

It will be sad to lose HPCx, but of course HECToR (which took over HPCx’s role as the UK’s main high-end computing resource in 2007) will continue. In managing both systems simultaneously, we have been able to make the most of a tremendous opportunity. Over the last 2 years we have operated HECToR and HPCx in a complementary fashion: HECToR as the top-end “Leadership Facility”, and HPCx as our “National Supercomputer”, trading overall utilisation in favour of a more flexible service (see “HPCx Phase 4 and complementary capability computing” in issue 11 of Capability Computing).
This has not only enabled a much richer overall service than was previously possible, but has also provided valuable experience to the benefit of future services. On the opposite page, a few words are presented from those responsible for delivering the HPCx service. Following this, two of the most active projects over the years, in the areas of materials research and environmental modelling, describe how HPCx has helped further their research. The remainder of the issue is dedicated to showcasing the fruits of the complementarity initiative: several projects, each of which has benefited from the enhanced flexibility, describe their recent work on HPCx. The articles encompass a wide range of areas: the use of “Urgent Computing” to assist firefighting with real-time simulations; analysis of the catalysis of enzymes (important for drug discovery); the use of “Computational Steering” to further the understanding of proteins within the nervous system; research into the structure of bioactive glasses (which have applications in biomedicine); the search for genetic markers of colorectal cancer; and simulations of the formation of antihydrogen, which aim to further our understanding of fundamental physical theories.

The first point which strikes me is that the presented research is not only important in furthering our theoretical understanding, but in many cases directly applicable to improving our everyday lives. Secondly, it is evident that the problems which we wish to tackle (and the resulting computational applications) continue to increase in complexity, and so too do the computing architectures on which these problems must be executed. It is therefore of paramount importance that all those involved in the process (experimentalists, theoreticians, computational experts and all those in between) strive to work as closely together as possible to enable further breakthroughs. I sincerely hope that you enjoy this issue of Capability Computing, that HPCx has served you well, and that you continue to benefit from the national HPC facilities.

EPCC and Daresbury at SC’2009
14-20 November, Portland, OR, USA. EPCC will exhibit in booth 658; STFC Daresbury Laboratory will be in booth 659. If you’re going to Supercomputing 2009, please drop by and say hello.

Figure: The excellent reliability of HPCx.

Some words from the Service Providers...
Arthur Trew, HPCx Service Director and EPCC Director, The University of Edinburgh
Caroline Isaac, IBM UK HPC Executive

While it is true that computers are transitory and it is the knowledge that they create which persists, it is equally true that the end of the HPCx service in January 2010 will mark the termination of one of the most productive and trouble-free HPC facilities in the world. HPCx was born in, and owes much of its success to, a close collaboration between EPCC, Daresbury Laboratory and IBM. While the science it has produced is represented by the articles in this newsletter, no such report can do full justice to the many papers produced over the past six years by groups in fields as disparate as climate modelling, design of novel materials, computational fluid dynamics, atomic physics and fusion research. HPCx has also undertaken commercially oriented research work for companies, notably in oil reservoir detection and characterisation.

To quote a cliché, it seems like yesterday that we were deep in the bits and bytes of bidding for and installing the HPCx system at Daresbury. The system has come to represent one of the most successful academic services for HPC in the world, entering the Top 500 straight in at position 9 in November 2002. I must mention a few names as ‘blasts from the past’: John Harvey, Paul Gozzard and Jonathan Follows all contributed in those early days.
And of course, where would we have been without the services of Terry Davis, who sadly passed away earlier in 2009? His robust stance on a number of issues has undoubtedly underpinned the success of HPCx and its availability statistics. I know we all miss him. To quote from the HPCx Annual Report in 2007, the availability of HPCx ‘…is unprecedented for any national service in the UK (and to our knowledge elsewhere). This is a great credit to both the Systems Team and to IBM.’

For the past two years, since the HECToR service started, HPCx has also taken on the role of testing new methods of using HPC facilities: the complementary computing initiative. This has given us valuable lessons in the way that services can, and must, respond to the changing needs of computational researchers using national HPC facilities without unduly affecting utilisation or diminishing a service’s capability computing focus. Our aim must be to ensure that these service innovations transfer to successor facilities.

In closing, I must note the single most impressive feature of HPCx: its reliability. Of course, I may be tempting fate in so doing, but it is now some three years since we last had a failure resulting in a loss of service. That is a record unrivalled at any similarly sized facility in the world and is testament to IBM’s build quality and maintenance. But services are more than machines, and I would like to thank all staff who have worked on HPCx over the years, and also you, the users, for the energy and understanding that you have brought to the project.

I am always heartened to hear the success stories around the science that has been achieved on HPCx. From the extreme large-scale simulations with DL_POLY to the visualisation of POLCOMS output and a myriad of other projects, the sheer breadth of science achieved on HPCx has been truly astonishing.
In conjunction with the Computational Science and Engineering Department at STFC Daresbury Laboratory (then CCLRC) and EPCC, IBM has for many years worked closely with research groups throughout the UK on many projects. This unmatched expertise in porting and optimising large amounts of scientific code has without doubt contributed significantly to the scientific success of the service. In addition, the cross-disciplinary nature of many projects, especially in the later years, has encouraged a real ‘pushing out of the scientific envelope’ and bodes well for the real challenges ahead as we move into the world of exascale computing.

Figure 1: The rate of forecast error growth of the coupled UM atmosphere-ocean ensemble with prediction lead time, for different ensemble sizes.
Figure 2: The scalability of different UM atmosphere model resolutions on HPCx.

Moving the capability boundaries
Lois Steenman-Clark, University of Reading

When HPCx was first labelled as a ‘capability computing service’ there was extensive debate within the environmental modelling community about the precise definition of capability. If capability was about thinking big, then big could be applied to many different aspects of environmental modelling experiments. For climate modelling experiments the ‘thinking big’ challenges are many, including:
• running long enough time slices to achieve statistical significance
• increasing the temporal frequency of model diagnostic output
• using large ensembles of experiments to study variability and predictability
• increasing model spatial resolution
• increasing the complexity of processes within the Earth system modelling system

But some of these challenges demand big changes in throughput or input/output performance, that is, in model efficiency, while others need more processors, which was the initial definition of capability computing on HPCx.
Figure 3: A composite of sea surface temperature anomalies associated with El Niño events from: a) an observational climatology; b) the HIGEM control run; c) standard climate-resolution UM experiments.

Within NCAS, the National Centre for Atmospheric Science, two climate projects running on HPCx successfully addressed both a major scientific challenge and the capability challenge, that of exploiting a larger number of processors.

RAPID is a six-year NERC (Natural Environment Research Council) programme which aims to improve our ability to quantify the probability and magnitude of future rapid climate change, focusing principally on the role of the Atlantic Ocean thermohaline circulation. A RAPID-funded project within NCAS proposed to use ensembles of modest-resolution coupled atmosphere-ocean experiments to sample the ocean domain, especially the Atlantic, in order to explore the maximum benefit for climate predictions of enhanced ocean observations. Ensembles of climate experiments essentially enable us to average out weather ‘noise’ to isolate a climate signal. There are many different ways to run ensemble experiments:
• run the jobs serially and independently
• run them using scripts to control the runs
• run them as one MPI pool, effectively running the ensemble as one job

The NCAS CMS (Computational Modelling Services) group developed an ensemble framework for the Unified Model (UM) that exploited the third method. The principal benefit of this method is efficient throughput, as creating, changing, stopping and starting the ensemble are significantly easier using the framework. The other benefit is the use of more processors. The modest-sized UM experiment, with a 270 km (N48) atmosphere and 1° ocean, normally runs efficiently on some 16 processors on HPCx, as shown in figure 2, but with the ensemble framework, jobs of up to 256 processors were run for the 16-member ensembles.
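The third approach, running the whole ensemble as one MPI pool, amounts to partitioning the ranks of a single job among the ensemble members. The Python sketch below is illustrative only: it assumes each member gets an equal, contiguous block of ranks (16 members × 16 processes = 256, matching the figures quoted above), and the function names are hypothetical, not part of the NCAS ensemble framework. In a real MPI code the member index would typically be passed as the colour argument to MPI_Comm_split (in mpi4py, `comm.Split(color, key)`), giving each member its own communicator.

```python
"""Hypothetical sketch: partition the ranks of one MPI job among ensemble members.

Assumption: every member uses the same number of ranks, laid out contiguously.
This is not the NCAS CMS framework, only an illustration of the idea.
"""
from typing import Dict, List, Tuple


def member_for_rank(rank: int, procs_per_member: int) -> Tuple[int, int]:
    """Return (member index, rank within that member) for a global rank."""
    # In MPI the member index would be the 'color' given to MPI_Comm_split,
    # and the local rank is what the member's own communicator would report.
    return divmod(rank, procs_per_member)


def ensemble_layout(n_members: int, procs_per_member: int) -> Dict[int, List[int]]:
    """Map each member index to the global ranks it owns."""
    layout: Dict[int, List[int]] = {}
    for rank in range(n_members * procs_per_member):
        member, _ = member_for_rank(rank, procs_per_member)
        layout.setdefault(member, []).append(rank)
    return layout


if __name__ == "__main__":
    layout = ensemble_layout(n_members=16, procs_per_member=16)  # one 256-rank job
    print(len(layout), "members;", "member 1 owns ranks", layout[1][0], "to", layout[1][-1])
```

Because all members live inside one job, starting, stopping or resubmitting the ensemble becomes a single scheduler operation, which is one way to read the throughput benefit described above.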
This RAPID project was able to run ensembles of ensembles to find the optimum number of ensemble members, that is, the number which gave convergence in the rate of growth of forecast error for at least 10 model years, as shown in figure 1. The project has successfully identified critical regions of the Atlantic Ocean where enhanced ocean observations would optimally improve decadal climate predictions. Ensemble experiments are fundamental to improving predictability and understanding the variability of models, so the ensemble framework is being exploited by a wide range of application areas.

HIGEM was a NERC-funded partnership of seven UK academic groups and the Met Office, with the goal of achieving a major advance in developing an Earth System model of unprecedented resolution, capable of performing multi-century simulations. The HIGEM model development was based on the Met Office Unified Model (UM) family of models. Increasing the horizontal resolution of these Earth System models allows climate processes and weather systems to be captured in much greater detail. However, increasing resolution is scientifically challenging. The resolution was to be increased from the current climate resolution, approximately 135 km (N96) for the atmosphere with 1° in the ocean and sea-ice, to nearer 90 km (N144) for the atmosphere with 1/3° in the ocean and sea-ice. New input and model forcing files had to be created and tested. The new model needed to be tested, analysed, assessed and tuned against observational climatology data. Then the new model had to be optimised by the NCAS CMS group to ensure that multi-decadal experiments were feasible. While the atmosphere UM code scaled well with horizontal resolution, as can be seen in figure 2, the ocean code had many more scalability issues, so the optimum performance for HIGEM was on 256 processors of HPCx.
The control experiment, 115 model years of HIGEM, took over 6 months to run but nearly 12 months to complete because of queue wait time. Results from the analysis of this experiment, shown in figure 3, demonstrate the benefits of enhanced resolution. The HIGEM model is now regularly used in current research projects and will be used for some very high resolution CMIP5 (Coupled Model Intercomparison Project Phase 5) experiments as part of the input to the next IPCC (Intergovernmental Panel on Climate Change) report, due in 2013.

Figure 1: Meetings held by the Materials Chemistry Consortium.
Figure 2: Damage created by a 50 keV recoil atom in quartz.

Computational materials chemistry on HPCx
Richard Catlow and Scott Woodley, Dept of Chemistry, University College London

The EPSRC-funded Materials Chemistry Consortium, which comprises over 25 research groups based in 13 of the UK’s universities and RCUK research laboratories, has, since its inception in 1994, exploited the latest generation of high performance computing technology in a wide-ranging programme of simulation studies of complex materials. The consortium meets every six months to share experience, present the latest results and allocate resources, albeit often crammed into a small room (Figure 1). The programme of the consortium has embraced code development and optimisation and an extensive applications portfolio, which has included energy and environmental materials, catalysis and surface science, quantum devices, nano-science and biomaterials. The HPCx service provided exciting new opportunities for the consortium, and this article highlights some of the many scientific achievements of the consortium using the facility.

Energy and Environmental Materials
The work of the consortium has included extensive modelling studies of solid-state battery and fuel cell materials.
For example, considering rechargeable Li-ion batteries, Koudriachova and Harrison have investigated the phase stability and electronic structure of Zr-doped Li-anatase [1], whereas Islam et al. [2] have elucidated the various mechanisms for ion diffusion in fuel cell materials. Modelling radiation damage in materials using molecular dynamics is another key area that demands national HPC resources, and one where advances have been achieved by two of our members using the DL_POLY code, which has effectively exploited the massively parallel architecture of HPCx. Duffy et al. [3] have included, and implemented within the DL_POLY code, the effects of electronic stopping and electron–ion interactions in radiation damage simulations of metals, whereas Dove et al. [4] have investigated the evolution of the damage on annealing for SiO2, GeO2, TiO2, Al2O3 and MgO. Figure 2 illustrates the simulated fission track resulting from a 50 keV U recoil in quartz, where the simulation box contains 5,133,528 atoms. The knowledge obtained from these simulations, i.e. the fundamental atomistic processes occurring during damage, is vitally important in the assessment and design of materials for use in nuclear reactors.

Catalysis
Computer simulation is now a key tool in catalytic science, and the consortium has made notable progress in advancing our understanding of catalysis at the molecular level in several key areas, including oxide-supported metals (see, for example, the work of Willock et al. [5, 6]) and microporous catalysts. The work of To et al. [7] on Ti-substituted microporous silica catalysts nicely illustrates the latter field. These materials are widely used as catalysts for selective oxidation, a key class of reaction in the chemicals industry. To’s work elucidated both the nature of the active sites (as shown in Figure 3) and the catalytic cycle in this catalyst (Figure 4).
The work ties in closely with recent in situ spectroscopic studies of the material using synchrotron radiation techniques. Switching our interest from industry to nature, and in particular to reactions on stardust, Goumans et al. [8] have investigated silica grain catalysis of methanol formation.

Figure 3: Formation of the active intermediate in microporous titanosilicate catalysts by reaction with H2O2.
Figure 4: Epoxidation mechanisms in titanosilicate catalysts.

Biomaterials
An important new departure for the consortium in recent years has been the extension of its programme to include biomaterials science. In particular, the work of de Leeuw and co-workers [9] has explored fundamental factors relating to the structure of bone, notably the interface between apatite and collagen. Figure 5 illustrates the results of a molecular dynamics simulation of the nucleation of hydroxyapatite in an aqueous environment at a collagen template, showing the clustering of the calcium and phosphate ions around the collagen functional groups.

Nano-Chemistry and Nucleation
One of the most rapidly expanding areas of computational materials science is the use of computational tools to develop models for the structures, properties and reactivities of nanoparticulate matter. Woodley et al. [10-12] have been particularly active in the field of oxide, nitride and carbide nano-science. They have explored the possible structures and properties of such nanoparticles, as well as how particularly stable particles can be employed as building blocks (see Figure 6). Different aspects of nanochemistry have been examined in the work of Tay and Bresme [13], who have modelled water around passivated gold nanoparticles approximately 3 nm in diameter, and of Martsinovich and Kantorovich [14], who have modelled the process of pulling a C60 molecule on a Si(001) surface with an STM tip. In nucleation and crystal growth, Parker et al.
[15] have investigated the influence of pH on the crystal growth of silica, and Hu and Michaelides [16] have investigated the formation of ice on kaolinite, microscopic dust particles of which reside in the upper atmosphere and play an important role in the creation of snow crystals. The examples provided here are by no means an exhaustive list of the innovative research conducted by members of the Materials Chemistry Consortium; indeed, the work of the consortium is very broad, including, for example, exciting new studies of magnetic oxides [17] and transparent conductors [18]. We hope, however, that this illustrative set of examples indicates the wide range and impact of the work achieved within e05 on HPCx, which is funded via the EPSRC grants GR/S 13422 and EP/D504872 (under the portfolio scheme). The Materials Chemistry Consortium is still very much active on HPCx, thanks to the portfolio grant, and on HECToR via the EPSRC grant EP/F067496 held by Richard Catlow (UCL) and Nic Harrison (Imperial College). We would like to thank the staff within the HPCx User Administration and Helpdesk for their efficient and friendly help and advice.

Figure 5: Molecular dynamics simulation of the nucleation of hydroxyapatite in an aqueous environment at a collagen template.
Figure 6: Stable octahedral clusters are connected to create microporous crystals.

References
1. Li sites and phase stability in TiO2-anatase and Zr-doped TiO2-anatase, M V Koudriachova, N M Harrison, J. Mater. Chem., 2006, 16, 1973.
2. Cooperative mechanisms of fast-ion conduction in gallium-based oxides with tetrahedral moieties, K E Kendrick, J Kendrick, K S Knight, M S Islam, P R Slater, Nat. Mater., 2007, 6, 11, 871.
3. Including the effects of electronic stopping and electron–ion interactions in radiation damage simulations, D M Duffy, A M Rutherford, J. Phys.: Condens. Matter, 2007, 19, 016207.
4. Atomistic simulations of resistance to amorphization by radiation damage, K Trachenko, M T Dove, E Artacho, I T Todorov, W Smith, Phys. Rev. B, 2006, 73, 174207.
5. Theory and simulation in heterogeneous gold catalysis, R Coquet, K L Howard, D J Willock, Chem. Soc. Rev., 2008, 37, 9, 2046.
6. Calculations on the adsorption of Au to MgO surfaces using SIESTA, R Coquet, G J Hutchings, S H Taylor, D J Willock, J. Mater. Chem., 2006, 16, 20, 1978.
7. Hybrid QM/MM investigations into the structure and properties of oxygen-donating species in TS-1, J To, A A Sokol, S A French, C R A Catlow, J. Phys. Chem. C, 2008, 112, 18, 7173.
8. Silica grain catalysis of methanol formation, T P M Goumans, A Wander, C R A Catlow, W A Brown, Mon. Not. R. Astron. Soc., 2007, 382, 1829.
9. Density Functional Theory calculations and Molecular Dynamics simulations of the interaction of bio-molecules with hydroxyapatite surfaces in an aqueous environment, N Almora-Barrios, N H de Leeuw, Mater. Res. Soc. Symp. Proc., 2009, 1131E, 1131-Y01-06.
10. Properties of small TiO2, ZrO2 and HfO2 nanoparticles, S M Woodley, S Hamad, J A Mejias, C R A Catlow, J. Mater. Chem., 2006, 16, 20, 1927.
11. Structure, optical properties and defects in nitride (III–V) nanoscale cage clusters, S A Shevlin, Z X Guo, H J J van Dam, P Sherwood, C R A Catlow, A A Sokol, S M Woodley, Phys. Chem. Chem. Phys., 2008, 10, 14, 1944.
12. Bubbles and microporous frameworks of silicon carbide, M B Watkins, S A Shevlin, A A Sokol, B Slater, C R A Catlow, S M Woodley, Phys. Chem. Chem. Phys., 2009, 11, 17, 3186.
13. Hydrogen bond structure and vibrational spectrum of water at a passivated metal nanoparticle, K Tay, F Bresme, J. Mater. Chem., 2006, 16, 20, 1956.
14. Pulling the C60 molecule on a Si(001) surface with an STM tip: A theoretical study, N Martsinovich, L Kantorovich, Phys. Rev. B, 2008, 77, 115429.
15. Atomistic simulations of zeolite surfaces and water-zeolite interface, W Gren, S C Parker, Geochim. Cosmochim. Acta, 2008, 72, 12, A328.
16. Ice formation on kaolinite: Lattice match or amphoterism?, X L Hu, A Michaelides, Surf. Sci., 2007, 601, 23, 5378.
17. Magnetic moment and coupling mechanism of iron-doped rutile TiO2 from first principles, G Mallia, N M Harrison, Phys. Rev. B, 2007, 75, 165201.
18. Hopping and optical absorption of electrons in nano-porous crystal 12CaO.7Al2O3, P V Sushko, A L Shluger, K Hayashi, M Hirano, H Hosono, Thin Solid Films, 2003, 445, 2, 161.

Using high performance computing to model enzyme-catalysed reactions
Adrian Mulholland and Christopher Woods, University of Bristol

Introduction
HPC resources are increasingly helping to illuminate and analyse the fundamental mechanisms of reactions catalysed by enzymes.[1] Enzymes are very efficient natural catalysts. Understanding how they work is a vital first step towards harnessing their power for industrial and pharmaceutical applications. For example, many drugs work by stopping enzymes from functioning. Endocannabinoids[2] can reduce pain and anxiety; these are molecules produced by our own bodies that are similar to the active ingredient of cannabis. The enzyme fatty acid amide hydrolase (FAAH, figure 1) catalyses the breakdown of the endocannabinoid anandamide. Blocking the activity of FAAH is therefore a promising strategy for drugs designed to treat pain, anxiety and depression.

Atomically detailed computer models of enzyme-catalysed reactions can provide insight into the source of an enzyme's catalytic power.[2,3] Due to the large size of these biological macromolecules, simplified classical models of atomic interactions are used. These molecular mechanics (MM) models can be used successfully to study the motions and interactions of proteins.[3] However, MM can provide only a low-quality model of a chemical reaction. In contrast, computational chemistry methods based on quantum mechanics (QM) can model reactions well. QM calculations are highly computationally expensive, though, making it impractical to model an entire enzyme system with them.
One solution is to use multiscale methods[4,5] that embed a QM representation of the reactive region of the enzyme within an MM model of the rest of the system. Such multilevel simulations of biological systems typically scale poorly over the many processors available on modern HPC resources. New multiscale modelling methods,[6] which split a single calculation into an ensemble of loosely coupled simulations, are therefore a promising new direction for exploiting the maximum available computing power. The aim is to make best use of large numbers of processors by effectively coupling multiple individual simulations into a single supra-simulation. This method, applied on an HPC resource, promises to lead to a step change in the quality of the modelling of enzyme-catalysed reactions, and so to provide new insights into these remarkable biological catalysts.

Simulation Methodology
To understand an enzyme-catalysed reaction we need to know how much energy is needed to make and break chemical bonds in the process, i.e. how the energy changes during the reaction: the ‘energy profile’ of the reaction. This shows the change in free energy as the reaction progresses from the reactant, through a transition state, to the final product. The difference in free energy between the reactant and the transition state is the energy barrier to reaction, which is central to determining how fast the reaction will happen. By comparing it with the barrier for the uncatalysed reaction, it is possible to gauge (or predict) the catalytic efficiency of an enzyme. Energy barriers are difficult to calculate because they involve intensive simulations using detailed quantum mechanics models. To facilitate such calculations, we are developing methodology to calculate enzyme reaction energy profiles by combining a simplified (and thus computationally less demanding) model with corrections calculated using a more sophisticated model.
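The idea of correcting a cheap energy profile with a more expensive model can be illustrated with a minimal Python sketch. The article does not name its estimator, so we assume here the standard Zwanzig free energy perturbation (exponential averaging) formula, ΔG = −RT ln⟨exp(−ΔE/RT)⟩, where ΔE is the detailed-minus-simplified energy for configurations sampled with the simplified model. Shifting the barrier by the transition-state correction minus the reactant correction, and using the transition-state-theory rate ratio exp(−ΔΔG‡/RT) to gauge catalytic efficiency, are likewise textbook assumptions; all function names are hypothetical.

```python
"""Hypothetical sketch: correct a simplified-model barrier with free energy perturbation.

Assumptions (not taken from the article): the Zwanzig exponential-averaging
estimator, energies in kJ/mol, and transition state theory for rate ratios.
"""
import math
from typing import Sequence

R_KJ = 8.314462618e-3  # gas constant in kJ mol^-1 K^-1


def zwanzig_delta_g(delta_e: Sequence[float], temperature: float = 300.0) -> float:
    """Free energy of switching from the simplified to the detailed model.

    delta_e holds E_detailed - E_simplified (kJ/mol) for configurations
    sampled with the simplified model.
    """
    if not delta_e:
        raise ValueError("need at least one energy difference")
    beta = 1.0 / (R_KJ * temperature)
    exponents = [-beta * de for de in delta_e]
    shift = max(exponents)  # log-sum-exp shift avoids overflow in exp()
    log_mean = (shift
                + math.log(sum(math.exp(x - shift) for x in exponents))
                - math.log(len(exponents)))
    return -log_mean / beta


def corrected_barrier(barrier_simplified: float,
                      de_reactant: Sequence[float],
                      de_transition_state: Sequence[float],
                      temperature: float = 300.0) -> float:
    """Shift the simplified-model barrier by the difference in model corrections."""
    return (barrier_simplified
            + zwanzig_delta_g(de_transition_state, temperature)
            - zwanzig_delta_g(de_reactant, temperature))


def rate_enhancement(barrier_uncatalysed: float, barrier_catalysed: float,
                     temperature: float = 298.15) -> float:
    """k_cat / k_uncat from transition state theory; the prefactors cancel."""
    return math.exp((barrier_uncatalysed - barrier_catalysed) / (R_KJ * temperature))


if __name__ == "__main__":
    # Made-up numbers, purely to show the call pattern.
    barrier = corrected_barrier(60.0, de_reactant=[1.0, 1.5, 0.5],
                                de_transition_state=[4.0, 5.0, 3.0])
    print(f"corrected barrier: {barrier:.2f} kJ/mol")
    print(f"rate ratio for a 5.7 kJ/mol lower barrier: {rate_enhancement(80.0, 74.3):.1f}")
```

The log-sum-exp form matters in practice: QM and MM energies can differ by hundreds of kJ/mol, and a naive `exp(-beta * de)` would underflow or overflow. Because the exponential average is dominated by the lowest ΔE values, the correction only converges if the simplified model samples the configurations the detailed model favours, one reason many sampled configurations (and hence many QM calculations) are needed.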
To achieve this, we must calculate the free energy change of moving from the simplified to the detailed model at several points along the reaction coordinate. This involves running an ensemble of sub-simulations in parallel, with each sub-simulation periodically exchanging information (e.g. coordinates of atoms) with other members of the ensemble. Each sub-simulation involves running two programs:
• A master program (Sire[7]), which is used to communicate with the other sub-simulations in the ensemble, and which performs the molecular mechanics (MM) parts of the calculation.
• A slave program (Molpro[8]), which is controlled by the master, and is used to perform the quantum mechanics (QM) parts of the calculation.

One instance of Sire is run per node, with the instances communicating with one another using MPI. A separate instance of Molpro must be run for each of the ~100 thousand QM calculations required during the simulation. Each instance of Sire must thus be capable of running shell scripts that start, control, and then process the output of each Molpro job. The bulk of the computational time is spent running these Molpro jobs, so we have adapted Molpro so that it can use OpenMP to make use of the multiple processor cores available on each node.[9]

Program Design
Both Sire and the OpenMP-adapted version of Molpro present new program designs that respond to the challenges posed by modern algorithms for biomolecular simulation.

Figure 1: Fatty Acid Amide Hydrolase (FAAH), an important target for the development of drugs to treat pain, anxiety and depression.

Sire
Sire is a new simulation program that provides a toolkit of objects that can be assembled to run different types of molecular simulation. Sire is designed as a collection of related C++ class libraries, which are then wrapped up and exposed as Python modules.
Python is a dynamic scripting language,[10] and is used to glue the C++ objects together to create the desired simulation. Python thus provides a highly flexible and configurable front end to Sire, yet because all of the simulation objects are compiled C++, the code is highly efficient. Sire Python scripts can be run via a custom MPI-enabled version of Python, which is capable of running a sequence of Python scripts across any of the nodes in the MPI cluster.

All Sire C++ objects (which include complete simulations) can be serialised to platform-independent binary streams, which can be saved to disk (as restart or checkpoint files) or communicated between nodes using MPI. In addition, Sire has a strong concept of program state, and is able to recover from detected errors by rolling back to a previous state. This is important for enzymology applications, as it is not acceptable for the failure of one of the hundreds of thousands of Molpro jobs to cause the entire simulation to crash or exit. Sire is capable of detecting the failure of a Molpro job (or indeed of an entire sub-simulation), and will automatically restore the pre-error state and then resubmit the parts of the calculation that need to be re-run.

OpenMP-enabled Molpro
Molpro is a quantum chemistry (QM) program with a long and successful heritage. It is written predominantly in a range of versions of Fortran. Molpro is mainly a serial application, and while parts of it can be run in parallel, the algorithms we needed for our calculations were serial only. We thus set about parallelising those algorithms using OpenMP. To make the code efficient, we decided to rewrite the necessary parts of Molpro cleanly in C++. This allowed modern coding techniques to be used to control the allocation, deallocation and sharing of memory between threads. The OpenMP code was originally developed on Intel and AMD processors and used custom vector instructions (SSE2) to gain extra performance.
The C++ code was linked to Molpro, and can be called conditionally in place of the original Fortran code. Benchmarks of the new code demonstrated high performance and near-linear scaling up to 16 threads (16 was the maximum tested, as Intel/AMD machines with more than 16 processor cores were not available to us at the time of benchmarking).

Application on HPC systems
Both Sire and OpenMP-Molpro were originally developed and deployed on multicore/multisocket Linux clusters. Both codes had been successfully compiled on Intel and AMD platforms using a range of different C++ and Fortran compilers, on both Linux and Mac OS X. Adapting this setup to run on an HPC resource presented many challenges. For example, a university-level Linux cluster is open and permissive, allowing both SSH and arbitrary network connections to be made between compute nodes. Most importantly, as each node in the cluster runs a complete (Linux) operating system and has full access to the shared disk, it is trivial for the main program to start and control sub-programs by dynamically writing and running shell scripts. This last ability is particularly important for our application, as each instance of Sire on each of the compute nodes must be able to launch a series of Molpro jobs via shell scripts. HPCx, a tightly coupled HPC system comprising IBM p5-575 compute nodes, was able to satisfy this requirement, so a port of Sire/Molpro to HPCx was undertaken. The port was non-trivial, as the two codes involved multiple shared dynamic libraries, mixed MPI with OpenMP, and required multiple programming languages (C++, various flavours of Fortran, and Python). All of this had to be recompiled using the IBM C++ and Fortran compilers, which required many changes to the source code, as well as a port of the custom Intel/AMD SSE2 vector code to PowerPC. Porting of the wrapper code that exposes the Sire C++ classes to Python was particularly challenging.
The Python wrappers involve around 100,000 lines of auto-generated C++ code that makes heavy use of templates (the wrappers were written using boost::python, and were auto-generated using Py++). As parts of the auto-generated wrappers could not be compiled using the IBM C++ compiler, the generator had to be modified to include auto-generated workarounds. In addition, the way the IBM compiler handled C++ templates led to very long (>24 hour) compilation times and to unacceptably large wrapper libraries (e.g. the wrapper for the Sire molecular mechanics library built with gcc 4.2 on Linux and OS X is 6 MB, while on XLC/AIX it is 227 MB, which is too large to load dynamically from Python). Changes to wrapper generation and compilation had to be investigated to find ways of reducing library size. Finally, the Sire libraries are dynamic shared libraries, which are loaded by the MPI-Python executable using dlopen (in response to Python “import” commands). The Python wrapper library is dynamically linked to the shared C++ libraries on which it depends, and loads them automatically when it is dlopened. Subtle differences were found in the way that AIX (the operating system of HPCx) handles the loading and symbol resolution of dynamic libraries compared to Linux and OS X (the two operating systems currently supported by Sire), and these required workarounds in parts of the code that involved statically allocated objects and shared symbols. This was a particular problem when the same template instantiation occurred in different shared libraries, and parts of the code had to be rewritten. Porting the code has been highly challenging, but most issues have now been resolved, and a large proportion of the original codes’ functionality is now available on HPCx in a developmental form.
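The job-launching and error-recovery behaviour described for Sire (writing shell scripts to launch Molpro jobs, detecting a failed job, restoring the pre-error state and resubmitting) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Sire's API: `run_job_via_script` and `run_with_rollback` are hypothetical names, the job commands are arbitrary shell commands standing in for Molpro runs, and the simulation state is a plain dictionary serialised with `pickle` in place of Sire's own binary streams.

```python
import os
import pickle
import subprocess
import tempfile


def run_job_via_script(command, workdir):
    """Write `command` into a throwaway shell script in `workdir` and run it.

    Returns (exit_code, stdout). Hypothetical helper, not part of Sire.
    """
    script = os.path.join(workdir, "job.sh")
    with open(script, "w") as fh:
        fh.write("#!/bin/sh\n" + command + "\n")
    result = subprocess.run(["/bin/sh", script], cwd=workdir,
                            capture_output=True, text=True)
    return result.returncode, result.stdout


def run_with_rollback(state, commands, max_retries=3):
    """Run each job in turn; on failure restore the pre-job state and resubmit."""
    for command in commands:
        for _ in range(max_retries):
            checkpoint = pickle.dumps(state)      # serialise the pre-job state
            state["pending"] = command            # mark the job as in flight
            with tempfile.TemporaryDirectory() as workdir:
                code, out = run_job_via_script(command, workdir)
            if code == 0:
                del state["pending"]
                state["results"].append(out.strip())
                break
            state = pickle.loads(checkpoint)      # roll back to the pre-error state
        else:
            raise RuntimeError(f"job failed {max_retries} times: {command!r}")
    return state
```

For example, `run_with_rollback({"results": []}, ["echo 0.25", "echo -1.5"])` returns `{"results": ["0.25", "-1.5"]}`. Taking the checkpoint before each job means a failure never leaves a half-updated state behind, which is the property the text emphasises.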
Conclusion Computer simulations of enzyme-catalysed reactions have the potential to transform our understanding of these natural catalysts. They will help harness the power of enzymes for industrial and pharmaceutical applications. Computational enzymology simulations, like many biomolecular simulations,[3] use complicated workflows to couple together multiple programs, and have been developed predominantly on commodity computing resources. Despite their commodity computing origins, these calculations require large amounts of resource, in many cases on the scale of that provided by HPC capability computing platforms. While the benefits of porting these workflows to HPC are significant, the differences between a commodity system and an HPC system can be subtle, and can present significant challenges. A major investment of time and resources, a deep understanding of the target HPC platform, and an intimate knowledge of the program(s) to be ported and the underlying scientific goals are all necessary to achieve success. These developments should allow detailed and reliable investigation of enzyme catalytic mechanisms by utilising HPC resources effectively. Acknowledgements A.J.M. is an EPSRC Advanced Research Fellow and thanks EPSRC for support. Both authors also thank the EPSRC for funding this work (grant number EP/G042853/1), and thank EPCC and the HPCx consortium for providing support and computing resources. References: [1] Lodola, A., Woods, C.J., and Mulholland, A.J., Ann. Reports Comput. Chem., 4, 155-169, 2008 [2] Lodola, A., Mor, M., Rivara, S., Christov, C., Tarzia, G., Piomelli, D., and Mulholland, A.J., Chem. Commun., 214-216, 2008 [3] van der Kamp, M.W., Shaw, K.E., Woods, C.J., and Mulholland, A.J., J. Royal. Soc. Int., 5, 173-190, 2008 [4] Woods, C.J., and Mulholland, A.J., "Multiscale modelling of biological systems" in RSC Special Periodicals Report: Chemical Modelling, Applications and Theory, Volume 5, 2008 [5] Sherwood, P., Brooks, B.R., and Sansom, M.S.P., Curr. Opin. Struct. Biol. 18, 630-640, 2008 [6] Woods, C.J., Manby, F.R., and Mulholland, A.J., J. Chem. Phys. 123, 014109, 2008 [7] Sire http://siremol.org [8] Molpro http://www.molpro.net/ [9] Woods, C.J., Brown, P.S., and Manby, F.R., J. Chem. Theory Comput., 5, 1776-1784, 2009 [10] Python http://www.python.org
FireGrid and Urgent Computing Gavin J. Pringle, EPCC, The University of Edinburgh FireGrid began with a three-year project, which ended in April 2009. It established a cross-disciplinary collaborative community to pursue fundamental research for developing real-time emergency response systems, using the Grid, beginning with fire emergencies.
The FireGrid community consists of seven partners: the University of Edinburgh (including EPCC, the Institute for Infrastructure and Environment, the Institute for Digital Communication, the National e-Science Centre, and the Artificial Intelligence Applications Institute) provided the majority contribution to R&D for all areas of the project; BRE (Building Research Establishment) was the project leader and also provided the state-of-the-art experimental facilities that housed the fire; Ove Arup was the overall project manager; ABAQUS UK Limited and ANSYS-CFX contributed advanced structural mechanics and CFD modelling software; Xtralis provided both expertise on active fire protection systems and sensor equipment in support of experiments; and the London Fire Brigade was the principal user and guided the development of the command and control interface. FireGrid employs Grid technologies to integrate distributed resources, such as databases of real-time data, building plans and scenarios, HPC platforms and mobile emergency responders. Because these expensive resources are distributed, they are reusable and, most importantly, cannot be damaged by the fire itself. Last October, FireGrid conducted a large-scale experiment to demonstrate its viability. We set fire to a three-room apartment rig within the burn hall of BRE Watford. This rig bristled with more than 125 sensors of many different types. These sensors pushed data, graded in real time, into a database in Edinburgh. This database was monitored by autonomous agents running in Watford. Once a fire was detected, a faster-than-real-time simulation was automatically launched on a remote HPC resource. At a prescribed frequency, the latest data was staged to the HPC resource and assimilated into the simulation. The results were then pulled back to Watford, where they were used to predict the course of the fire, the state of the building and the actions of those potentially trapped inside.
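The detect, launch, then stage-at-a-fixed-frequency loop described above can be sketched as a toy monitoring agent. This is a hypothetical illustration, not FireGrid's agent code: the temperature threshold (`threshold_c`), the staging interval (`staging_interval`) and the use of a single temperature stream are all assumptions, and the returned actions "launch" and "stage" merely stand in for submitting the urgent HPC job and transferring new data to it.

```python
from dataclasses import dataclass, field


@dataclass
class FireMonitor:
    """Toy monitoring agent: detect a fire, then stage new data periodically."""
    threshold_c: float = 60.0     # hypothetical detection threshold in deg C
    staging_interval: int = 3     # hypothetical: stage after every N new readings
    launched: bool = False
    staged_batches: list = field(default_factory=list)
    buffer: list = field(default_factory=list)

    def ingest(self, reading_c):
        """Handle one graded sensor reading and return the action taken."""
        if not self.launched:
            if reading_c >= self.threshold_c:
                self.launched = True          # stand-in for launching the HPC job
                return "launch"
            return "idle"
        self.buffer.append(reading_c)
        if len(self.buffer) >= self.staging_interval:
            self.staged_batches.append(self.buffer)   # stand-in for data staging
            self.buffer = []
            return "stage"
        return "buffer"
```

Feeding the readings `[20, 25, 75, 80, 90, 100, 110]` gives the actions idle, idle, launch, buffer, buffer, stage, buffer, with one staged batch `[80, 90, 100]`.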
For a general overview of the experiment, see EPCC News, issue 65. Of particular note for FireGrid is the requirement for access to the HPC resource in urgent computing mode. This ensures that a fire model can be launched as quickly as practically possible from the time of request, irrespective of other computational loads on the resource, as any delay in launching the job translates into a delay in delivering results. Urgent Computing refers to a branch of Grid-enabled High Performance Computing where the time between job submission and job execution is of great importance. For FireGrid, we require time-critical results from faster-than-real-time predictive simulations. These applications are designed to give decision makers predictions during life-threatening emergencies and must run immediately. Urgent Computing can also serve commercial interests, where any delay in starting simulations costs money. Applications for life-threatening scenarios are typically Grid-enabled, as they use real data (gathered from remote sources in real time) to enhance the quality of predictions. Such simulations include severe weather prediction, forest fires, flood modelling and coastal hazard prediction, earthquakes, medical simulations such as influenza modelling and, in the case of FireGrid, how fires, buildings and people will interact. If such an application were to submit its batch job to a shared HPC resource using the normal procedures, it might be hours before the application began to run. Urgent Computing aims to significantly reduce this turnaround time. Several levels of immediacy can be considered: running jobs may be killed or, with less urgency, swapped to disk so that the urgent job runs immediately; or the urgent job may merely be placed at the head of the queue. There are a number of ways to offer the urgent computing model. A user might purchase their own HPC platform to perform their jobs and their jobs alone.
This gives them immediate access, but the cost of running and maintaining such a system will be high, and many cycles will be wasted. Cycles could, of course, be sold on the proviso that running jobs would be at risk; such jobs would therefore need to possess restart capabilities. Urgent computing can also be offered on shared resources. This approach means we can reuse existing infrastructure, with its associated continual, system-wide monitoring by a dedicated team. Further, in contrast to dedicated platforms, the system may well have an associated SLA. However, the use of shared resources introduces issues of resource contention, scheduling and authorisation. To address such issues, the US TeraGrid project has developed the Special PRiority Urgent Computing Environment (SPRUCE). This is “a system to support urgent or event-driven computing on both traditional supercomputers and distributed Grids”. Scientists are provided with transferable Right-of-Way tokens with varying urgency levels. During an emergency, a token has to be activated at the SPRUCE portal, and jobs can then request urgent access. An alternative to SPRUCE is to repeatedly submit pilot, or “prospector”, jobs to a large number of platforms such that at any one time there is at least one prospector job running, which checks for any pending urgent computing tasks. Pilot jobs are employed within PanDA, which was developed as part of the ATLAS particle physics experiment at CERN. For FireGrid, the HPC simulation clearly has to run immediately and thus falls into the category of Urgent Computing. Indeed, for the large-scale experiment, FireGrid decided that such a prediction justified not only Urgent Computing on a single HPC resource, but also a backup, fail-over HPC platform. We therefore employed two HPC systems: HPCx and ECDF, the University of Edinburgh’s research compute cluster.
Warehouse fire. Pic courtesy London Fire Brigade.
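The levels of immediacy discussed earlier (kill a running job, suspend it, or simply jump the queue) can be illustrated with a toy scheduler. This is a sketch under simplifying assumptions: every job needs exactly one node, only normal-priority jobs can be displaced, and the `Urgency` levels only loosely mirror the idea of graded urgency tokens; they are not SPRUCE's interface, and `ToyScheduler` is a hypothetical name.

```python
import heapq
import itertools
from enum import IntEnum


class Urgency(IntEnum):
    NORMAL = 0
    HEAD_OF_QUEUE = 1   # placed at the front of the queue, waits for a free node
    SUSPEND = 2         # a normal running job is suspended (swapped out)
    PREEMPT = 3         # a normal running job is killed


class ToyScheduler:
    """One-node-per-job scheduler illustrating levels of immediacy."""

    def __init__(self, nodes):
        self.free = nodes
        self.running = []                  # list of (name, urgency)
        self.suspended, self.killed = [], []
        self._queue = []                   # heap of (-urgency, arrival, name)
        self._arrival = itertools.count()

    def submit(self, name, urgency=Urgency.NORMAL):
        if self.free == 0 and urgency >= Urgency.SUSPEND:
            victim = next((job for job in self.running
                           if job[1] == Urgency.NORMAL), None)
            if victim is not None:
                self.running.remove(victim)
                displaced = self.suspended if urgency == Urgency.SUSPEND else self.killed
                displaced.append(victim[0])
                self.free += 1
        heapq.heappush(self._queue, (-int(urgency), next(self._arrival), name))
        self._dispatch()

    def finish(self, name):
        before = len(self.running)
        self.running = [job for job in self.running if job[0] != name]
        self.free += before - len(self.running)
        self._dispatch()

    def _dispatch(self):
        # Highest urgency first; ties are broken by arrival order.
        while self.free > 0 and self._queue:
            neg_urgency, _, name = heapq.heappop(self._queue)
            self.running.append((name, Urgency(-neg_urgency)))
            self.free -= 1

    def running_names(self):
        return [name for name, _ in self.running]
```

A real urgent-computing service must also handle authorisation, accounting and restart of displaced jobs, none of which this toy attempts.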
By happy coincidence, last year EPSRC announced its Complementary Capability Computing programme, through which HPCx caters for more unusual jobs; three of its categories are relevant to FireGrid: ‘Urgent computing’, ‘Interactive and near-interactive computing’ (which supports more flexible access patterns to exploit grid technologies) and ‘Advance Reservation’. As SPRUCE was not available at the time, we employed the advance reservation mechanism on HPCx, implemented using the Highly-Available Robust Co-allocator (HARC) software. On ECDF, the batch system was configured to take any job from FireGrid and place it at the top of the queue. Even though a single node was assigned for the exclusive use of FireGrid’s experiment, there was a delay of up to 2 minutes between submission and execution, because the batch system processed its queue only every 2 minutes. This was solved by running a separate instance of the batch system on the dedicated node, which processed its own batch queue at a higher frequency. The large-scale FireGrid experiment was deemed a success. Present at the experiment was Paul Jenkins, of the London Fire Brigade, who said that “… the demonstrator proves that grid-based sensors and [HPC] fire models can be used together predictively, it showed that simple but accurate predictions of fire performance can be made, dynamically; and was a remarkable collaborative achievement.” Paul went on to say that, in time, FireGrid may form part of an emergency response. Overall, this was regarded as a highly successful demonstrator, illustrating the great potential of the FireGrid system. Acknowledgements The work reported in this paper forms part of the FireGrid project. This project is co-funded by the UK Technology Strategy Board’s Collaborative Research and Development programme, following an open competition.
This work made use of the facilities of HPCx, the UK’s national high-performance computing service, which is provided by EPCC at the University of Edinburgh and by STFC Daresbury Laboratory, and funded by the Department for Innovation, Universities and Skills through EPSRC’s High End Computing Programme. This work has made use of the resources provided by the Edinburgh Compute and Data Facility (ECDF): www.ecdf.ed.ac.uk/. The ECDF is partially supported by the eDIKT initiative: www.edikt.org/. For more information on FireGrid, email firegrid-enquiries@epcc.ed.ac.uk or visit www.firegrid.org. References FireGrid: www.firegrid.org SPRUCE: spruce.teragrid.org ATLAS: http://atlasexperiment.org HARC: www.cct.lsu.edu/site54.php ECDF: www.ecdf.ed.ac.uk HPCx: www.hpcx.ac.uk EPSRC Complementary computing: www.epsrc.ac.uk/ResearchFunding/FacilitiesAndServices/HighPerformanceComputing/CCCGuidance.htm Figure 1. Representative transmembrane domain of a K+ channel and its selectivity filter. The MD simulation system containing a K+ channel is shown. K+ ions are rendered as green spheres, Cl- as cyan spheres, and water oxygen and hydrogen as red and white spheres, respectively. On the right, for visual clarity, atomic detail is given for only two of the four subunits comprising the selectivity filter. Only waters in the selectivity filter and those in the cavity are shown. Interactive biomolecular modelling with VMD and NAMD on HPCx Carmen Domene, Department of Chemistry, University of Oxford Ion channels are specialized pore-forming proteins which, by regulating the flow of ions through the cellular membrane, control electrical signals in cells. They are therefore an indispensable component of the nervous system and play a crucial role in regulating cardiac, skeletal, and smooth muscle contraction.
Despite their high rate of transport, some channels are highly selective; for example, K+ channels are about 10,000 times more selective for K+ than for Na+ ions, even though the K+ ion is larger than the Na+ ion. Channels do not stay open all the time: they can either be open and conduct ions, or be closed. The mechanism by which ion channels open and close is referred to as ‘gating’, and is believed to involve large conformational changes. From a medical point of view, membrane protein dysfunction, and ion channel dysfunction in particular, can cause diseases in many tissues, affecting muscles, kidney, heart or bones. This group of diseases has been termed channelopathies. Interest in ion channels as pharmaceutical drug targets therefore continues to grow. For example, many psychoactive drugs potently block some of these proteins. By altering the normal mechanism of transport, the drugs lead to chemical imbalances in the brain that can have profound physiological effects. The links between proteins, drugs, disease and treatment are numerous and extremely complex, but they make for a fascinating field of research. The selectivity filter is the essential component of the permeation and selectivity mechanisms in these protein architectures (see Figure 1). It is composed of a conserved signature sequence, the TVGYG motif, and the carbonyl oxygens of its residues point towards the pore of the channel, orchestrating the movements of ions in and out of biological membranes. Thousands of millions of K+ ions per second can diffuse down their electrochemical gradient across the membrane under physiological conditions, and the largest free energy barrier of the process is of the order of 2-3 kcal mol⁻¹ [1,2]. Potassium ions and water molecules move through potassium channels in a concerted way. The accepted view is that water and ions move through the channels in single file, as exemplified by the few crystal structures available and numerous molecular dynamics simulations.
On average, and under physiological conditions, two ions occupy the selectivity filter at a given time, with a water molecule between them (see Figure 1). Using Interactive Molecular Dynamics (IMD) as a starting point, we have arrived at an alternative conduction mechanism for ions in K+ channels that involves site vacancies, and we have proposed that the coexistence of several ion permeation mechanisms is energetically possible. Conduction can thus be described as a more anarchic phenomenon than the concerted K+-water-K+ translocations previously characterised. Continues opposite. CPMD simulations of modifier ion migration in bioactive glasses Antonio Tilocca, Department of Chemistry, University College London Implanted phosphosilicate glass materials containing variable amounts of Na2O and CaO promote the repair and regeneration of bone and muscle tissue through a complex sequence of inorganic and cellular steps, which begins with the partial dissolution of the glass network and the release of ions into the physiological environment surrounding the implant-tissue interface [1,2]. The marked correlation between initial dissolution rate and glass bioactivity highlights the importance of investigating the structural and dynamical factors that affect the durability of the glass in an aqueous medium, such as the fragmentation of the bulk glass network and the surface structure [2–4]. Another critical factor determining the chemical durability of the glass in solution is the mobility of the modifier cations, namely Na+ and Ca2+: the rate at which these species are released depends on their rate of transport from the bulk to the glass surface. Compared to crystalline materials, identifying the ion migration pathways and mechanism in a glass is much less straightforward, owing to the diverse and complex nature of the ion coordination environments found in a multicomponent glass.
The atomistic resolution of Molecular Dynamics (MD) simulations has proven very powerful for gaining insight into the migration of alkali ions in silicate glasses [5]; here we apply ab initio (Car-Parrinello [6]) MD to investigate the diffusion of modifier cations in glass compositions with biomedical applications, such as 45S5 Bioglass®. The CPMD calculations are carried out on HPCx using the CPMD module of the Quantum-Espresso (Q-E) package [7]. Q-E is an open-source software package for parallel calculations on extended (periodic and disordered) systems, based on density functional theory and a plane-wave/pseudopotential approach. In particular, the CPMD module of Q-E is highly optimised for efficient parallel calculations employing ultrasoft (US) pseudopotentials, Continued on p16. We have used the VMD [3] and NAMD [4] packages in this project. VMD (Visual Molecular Dynamics) is a molecular visualisation program with an interactive environment for displaying, animating and analysing large biomolecular systems. NAMD is a parallel Molecular Dynamics code specialised for high-performance simulation of large biomolecular systems. IMD refers to connecting VMD to NAMD so that the MD simulation can be run interactively: VMD can communicate steering forces to a remote molecular dynamics program such as NAMD, which allows one to interact with MD simulations as they progress. In principle, any molecular dynamics simulation that runs in NAMD can be used for IMD, and only a small modification to the NAMD configuration file is required. NAMD can be run on HPCx and viewed and controlled from VMD running on a local machine, using a program called Proxycontrol for the connection. The performance of this setup has been optimised by the EPCC team, and a very useful tutorial on using IMD with VMD and NAMD on HPCx is available on the HPCx website (http://www.hpcx.ac.uk/support/documentation/UserGuide/HPCxuser/Tools.html).
Energetic analysis of two permeation mechanisms demonstrates that pathways for ion conduction other than the one already proposed in the literature are possible [5]. The presence of a water molecule separating the ions inside the selectivity filter (SF) does not seem to be as compulsory as previously thought. On energetic grounds, therefore, it is legitimate to conceive of alternative patterns of permeation. Crystallographers tend to assume in their refinements that a site not occupied by an ion is occupied by a water molecule, and this may not always be the case. In light of these results, it would also be interesting to revisit those kinetic models of ion conduction in low- and high-conductance ion channels proposed in the literature that did not agree with experimental data for conductance and ion occupancies. Undoubtedly, computational approaches have contributed substantially to our understanding of membrane proteins, and of ion channels in particular. Although there have been considerable advances in molecular dynamics simulations of biological systems, much remains to be done. Interactive MD has proved to be a useful complementary technique, and the results of the calculations performed on the HPCx facility, with the help of the EPCC team, have provided mechanistic information on how this family of ion channels performs one of its functions. Acknowledgments I would like to thank The Royal Society for a University Research Fellowship, and of course the HPCx team, in particular Dr Joachim Hein, Dr Eilidh Grant, Mr Qi Huangfu and Dr Iain Bethune. This work was supported by grants from The Leverhulme Trust and the EPSRC. References (1) Berneche, S.; Roux, B. Nature 2001, 414, 73-77. (2) Aqvist, J.; Luzhkov, V. Nature 2000, 404, 881-884. (3) Humphrey, W.; Dalke, A.; Schulten, K. J. Mol. Graphics 1996, 14, 33-38. (4) Phillips, J.
C.; Braun, R.; Wang, W.; Gumbart, J.; Tajkhorshid, E.; Villa, E.; Chipot, C.; Skeel, R. D.; Kale, L.; Schulten, K. J. Comput. Chem. 2005, 26, 1781-1802. (5) Furini, S.; Domene, C. Proc. Natl. Acad. Sci. USA (in press). CPMD simulations... continued from p15: ...making use of special techniques (double grid and augmentation boxes) to reduce the computational cost and improve numerical accuracy [8,9]. The smaller wavefunction cutoff made possible by US pseudopotentials (25-30 Ry vs. 70-80 Ry for standard, norm-conserving pseudopotentials) is critical to speeding up CPMD calculations of oxide-based materials such as silicates, especially in cases like the present one, where relatively long trajectories are needed to sample diffusive events with reasonable statistical accuracy. The cubic simulation cell (~11-12 Å side) typically includes around 100-120 atoms and 700-800 electrons; a 100 ps CPMD trajectory requires around 150,000 AUs on HPCx. The motion of selected sodium atoms at ~1000 K is visualised in Figure 2: the traces confirm that sodium migrates through short jumps between coordination sites such as the one highlighted in Figure 1. We are currently analysing the migration mechanism in order to identify in detail what triggers a hop from one site to another: we anticipate using CP-based chain-of-states methods to identify the migration paths and energy barriers with high accuracy [12]. Figure 1: Octahedral coordination shell of a sodium ion (green), extracted from a configuration of the CPMD run of 45S5 Bioglass®. Si and O atoms are coloured blue and red, respectively. Only the atoms in the immediate proximity of the central ion are shown. Figure 2: Traces of the CPMD trajectory of three selected Na ions (green) in the 45S5 Bioglass®. Si, P, O, Ca atoms are coloured blue, yellow, red and white, respectively.
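The picture of sodium spending most of its time in coordination sites and occasionally jumping between them suggests a simple way to count hops in a trajectory. The sketch below is a hypothetical post-processing step, not the authors' analysis (which, as noted, is still in progress): candidate site positions, the capture radius `r_cut` and the cubic box side `box` are assumed inputs. Each frame is labelled with the nearest site within `r_cut`, frames outside every site count as "in transit" (label -1), and only transitions between distinct sites are counted, so an ion that leaves a site and falls back into it does not register a hop.

```python
import math


def min_image_distance(a, b, box):
    """Distance between points a and b in a cubic periodic cell of side `box`."""
    d2 = 0.0
    for x, y in zip(a, b):
        d = x - y
        d -= box * round(d / box)        # minimum-image convention
        d2 += d * d
    return math.sqrt(d2)


def assign_sites(trajectory, sites, box, r_cut):
    """Label each frame with its nearest site index, or -1 if no site is within r_cut."""
    labels = []
    for pos in trajectory:
        dists = [min_image_distance(pos, s, box) for s in sites]
        best = min(range(len(sites)), key=dists.__getitem__)
        labels.append(best if dists[best] <= r_cut else -1)
    return labels


def count_hops(labels):
    """Count transitions between different sites, skipping in-transit frames."""
    hops, current = 0, None
    for lab in labels:
        if lab < 0:                      # between sites
            continue
        if current is not None and lab != current:
            hops += 1
        current = lab
    return hops
```

With three sites on a 12 Å cell and a trajectory that moves from the first site, through a gap, to the second and then across the periodic boundary to the third, the labels come out as `[0, 0, -1, 1, 1, 2]` and `count_hops` returns 2.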
Initial glass structures were generated using a standard quench-from-the-melt MD approach, by continuously cooling an initial random mixture of the appropriate composition and density [10]. Each structure was then heated to, and held at, temperatures of around 500-1000 K (always below the melting point) using a Nosé thermostat, and a CPMD run of up to 100 ps was carried out in the canonical ensemble at each temperature. The migration of modifier cations in silicate glasses is thought to proceed through discrete hops between stable sites: most of the trajectory is spent in these sites, with occasional rapid jumps in which the ion leaves its initial coordination polyhedron and moves to a new one linked to the original site [11]. An important initial issue is therefore to identify the characteristic coordination shell of the modifier cations: ion migration can occur along preferential diffusion pathways (“channels”) formed by coordination polyhedra sharing corners or edges [5, 11]. The typical coordination shell of sodium is illustrated in Figure 1: the ion lies at the centre of a distorted octahedron formed by both bridging and non-bridging oxygen atoms bonded to silicon (and occasionally to phosphorus) network formers. This HPCx project is supported under the EPSRC Complementary Capability Challenge, grant EP/G041156/1 “Modelling Ion Migration in Bioactive Glasses”. A. Tilocca thanks the Royal Society for financial support (University Research Fellowship). References 1. L. L. Hench and J. Wilson, Science (1984) 226, 630 2. A. Tilocca, Proc. R. Soc. A (2009) 465, 1003 3. A. Tilocca and A. N. Cormack, J. Phys. Chem. B (2007) 111, 14256 4. A. Tilocca and A. N. Cormack, J. Phys. Chem. C (2008) 112, 11936 5. A. Meyer, J. Horbach, W. Kob, F. Kargl and H. Schober, Phys. Rev. Lett. (2004) 93, 027801 6. R. Car and M. Parrinello, Phys. Rev. Lett. (1985) 55, 2471 7. http://www.quantum-espresso.org 8. K. Laasonen, A. Pasquarello, R. Car, C. Lee, and D.
Vanderbilt, Phys. Rev. B (1993) 47, 10142 9. P. Giannozzi, F. De Angelis, and R. Car, J. Chem. Phys. (2004) 120, 5903 10. A. Tilocca, Phys. Rev. B (2007) 76, 224202 11. A. N. Cormack, J. Du and T. R. Zeitler, Phys. Chem. Chem. Phys. (2002) 4, 3193 12. Y. Kanai, A. Tilocca, A. Selloni and R. Car, J. Chem. Phys. (2004) 121, 3359 Overcoming computational barriers: the search for gene–gene interactions in colorectal cancer Florian Scharinger and Paul Graham, EPCC, The University of Edinburgh National Cancer Registration data indicate that some 35,000 people each year are diagnosed with colorectal cancer (cancer of the large bowel and rectum) and 16,000 die from the disease [1]. Excluding skin cancer, this makes it one of the most common forms of cancer in the UK in both men (after prostate and lung cancer) and women (after breast cancer). While the development of effective treatments is clearly important, early identification of patients at risk and prevention are primary objectives of all major cancer agencies and of National Health Service policy. Armed with first access to an unprecedented set of genomic data in colorectal cancer, the University of Edinburgh Colon Cancer Genetics Group (CCGG) and EPCC teamed up to investigate the relationship between genetic markers and colorectal cancer. Following on from a previous project that examined individual genes, the current study looks at gene–gene interactions (GxG) as a possible contributor to colorectal cancer risk. The scale of the programme is substantial. It aims to use a significant portion of the largest genotypic data set for large bowel cancer compiled anywhere in the world to date: a unique and extensive set of 560,000 genetic markers with real data from 1000 cancer cases and 1000 matched controls. For every pair of markers, the analysis software calculates the probability that the observed interaction arose by chance.
To calculate these probability values, every single marker needs to be compared to every other marker: a total of around 150 billion comparisons! On a standard PC, this analysis, using the existing software, would have taken about 400 days and required over 3 TB of memory and hard disk space. Clearly, this is not practically feasible: the way forward was to optimise and parallelise the code, spreading the gene marker comparisons across multiple processors and hence reducing the calculation time. This work used three different machines in a complementary fashion. HECToR was chosen for the main analysis because of its large-scale computational capability. A local parallel cluster (with similar processors to HECToR) was used for development. The sorting of the result data was not computationally demanding, but did require access to large amounts of memory, and hence was well suited to HPCx. First, serial optimisations were performed, resulting in a threefold speed-up, and the code was modularised and thoroughly tested. Then, the code was parallelised using a 2D decomposition to split the data into manageable “chunks”, each sized to fit the memory of a single processor. A task farm approach was used to distribute the chunks to the processors on a “first come, first served” basis, since the individual processors do not need to exchange any data during processing. The analysis took approximately 5 hours on HECToR (using 512 cores): a vast improvement on 400 days! The resulting 200 GB of output data then needed to be sorted in order to identify the pairs of gene markers with the highest probability of interacting with each other. As with the analysis itself, sorting such a large amount of data on a single PC was not feasible. A parallel sorting algorithm was identified, developed and run on HPCx to perform this task. The sorted data is now undergoing further study, with results expected in Q4 2009. Image of a normal colon.
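The number of comparisons follows from counting unordered pairs, n(n-1)/2, which for n = 560,000 markers is about 1.57 × 10¹¹. The sketch below illustrates the decomposition described above under stated assumptions: the upper triangle of the marker-pair matrix is cut into square tiles (the 2D "chunks"), and a pool of workers takes tiles on a first-come, first-served basis. A thread pool stands in for the MPI task farm run on HECToR, the tile size `block` is arbitrary, and `test` is a hypothetical placeholder for the real per-pair statistical test.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def n_pairs(n_markers):
    """Number of unordered marker pairs, n(n-1)/2."""
    return n_markers * (n_markers - 1) // 2


def make_tiles(n_markers, block):
    """2D decomposition: square tiles covering the upper triangle of the pair matrix."""
    starts = range(0, n_markers, block)
    return [(i0, j0) for i0 in starts for j0 in starts if j0 >= i0]


def process_tile(i0, j0, n_markers, block, test):
    """Apply test(a, b) to every pair a < b that falls inside one tile."""
    results = []
    for a in range(i0, min(i0 + block, n_markers)):
        for b in range(max(j0, a + 1), min(j0 + block, n_markers)):
            results.append((a, b, test(a, b)))
    return results


def task_farm(n_markers, block, test, workers=4):
    """Idle workers pick up the next pending tile (first come, first served)."""
    out = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(process_tile, i0, j0, n_markers, block, test)
                   for i0, j0 in make_tiles(n_markers, block)]
        for fut in as_completed(futures):    # gather results as tiles finish
            out.extend(fut.result())
    return out


print(n_pairs(560_000))   # 156799720000, i.e. about 1.57e11
```

Because tiles share no data, workers never need to communicate, which is what makes a task farm a natural fit. In this single-process sketch the ranking step reduces to `sorted(out, key=lambda r: r[2])`; at 200 GB scale that is where the parallel sort on HPCx came in.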
This project has enabled the exploration of new territory for genetic marker analysis in colorectal cancer, and plans are underway to extend this study by analysing even larger datasets. The primary research work, including patient recruitment and genetic analysis, is funded by Cancer Research UK, the Medical Research Council (MRC), the Scottish Executive and CORE. EPCC acknowledges the help of the HPCx support team. The CCGG is a University of Edinburgh research group based at the MRC Human Genetics Unit at the Western General Hospital in Edinburgh. [1] Cancer Research UK, CancerStats Key Facts on Bowel Cancer: http://info.cancerresearchuk.org/cancerstats/types/bowel/ Figure 2 Part of the trajectory of an antiproton, showing helical motion inside the positron plasma (right) and reflection by the electric field at the end of the Penning trap (left). The scale of the cyclotron motion is too small to be resolved in this figure. The scales on the axes are given in cm. Simulations of antihydrogen formation Svante Jonsell, Department of Physics, Swansea University The world, as we know it, is made of matter. This may not seem very surprising, but in fact the present abundance of matter is an unsolved mystery in physics. According to the most fundamental symmetries of nature, every matter particle has a corresponding antimatter counterpart. The particle and antiparticle have opposite charges but are, as far as we know, identical in all other respects. When a particle and antiparticle meet, the pair is destroyed through annihilation. If the universe was created with matter and antimatter in equal proportions, and the symmetry is indeed perfect, there is no reason why any matter should have been left over to form the present universe. The symmetry of matter and antimatter is dictated by a fundamental theorem of theoretical physics, known as the CPT theorem.
According to this theorem, nature is invariant under the combination of three operations: C for charge conjugation (converting matter to antimatter), P for parity (taking a mirror image), and T for time reversal. Although it is widely believed that nature obeys this theorem, one could imagine a very small violation, which could possibly explain the excess of matter in the universe. Such surprises have occurred before in the history of physics. For instance, physicists believed that P and the combination CP were symmetries of nature, only to be proven wrong by experiments. The CPT theorem rests on much firmer theoretical foundations than P or CP conservation, but, as always, the final verdict can only be given by experiments. An experimental test of CPT can be provided through the study of atoms made of antimatter. According to the CPT theorem, atoms and anti-atoms have identical structures of energy levels, and hence identical spectral lines. Spectral lines can be measured with very high accuracy. In particular, the 1S-2S line in hydrogen has been measured with an accuracy of close to 1 part in 10¹⁴. If this measurement could be compared to a similar measurement of the 1S-2S line in antihydrogen, even very small violations of the CPT theorem could be detected. This is the experimental programme of two collaborations, ALPHA and ATRAP, working at the Antiproton Decelerator at CERN. As early as 1930, Paul Dirac predicted the existence of positrons, the antimatter mirror image of the ordinary electron. His prediction was soon confirmed by the discovery of positrons created by cosmic rays hitting the Earth's atmosphere. Discoveries of antimatter counterparts to other particles, e.g. the antiproton, followed. Antihydrogen atoms were first produced in the 1990s, but at relativistic energies that made any spectroscopy impossible [1,2]. Low-energy antihydrogen was first produced in 2002 by the ATHENA [3] and then the ATRAP [4] collaborations.
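To put the quoted precision in absolute terms: the hydrogen 1S-2S transition frequency is approximately 2.466 × 10¹⁵ Hz (a value not stated in the article), so a relative accuracy of 1 part in 10¹⁴ corresponds to an absolute uncertainty of roughly 25 Hz:

```latex
\Delta f \approx f_{1S\text{-}2S} \times 10^{-14}
        \approx 2.466 \times 10^{15}\,\mathrm{Hz} \times 10^{-14}
        \approx 25\,\mathrm{Hz}
```

A CPT-violating shift between hydrogen and antihydrogen smaller than this would be invisible even to a measurement of matching quality.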
In our work, we support the experimental efforts with large-scale Monte Carlo simulations of antihydrogen formation under experimental conditions. The formation process is a complex interplay between many different processes acting on very different time scales. The shortest time scale is set by the rapid cyclotron oscillations of the positrons around the magnetic field lines, with a period of about 10⁻¹¹ s. The longest time scale is set by the typical time before the antiprotons are either detected as antihydrogen or permanently trapped out of reach of the positrons, which, depending on the density and temperature of the plasma, can take up to several seconds. Moreover, for each set of parameters a large number of antihydrogen trajectories is required for good statistics. A study of this kind is therefore only possible using the resources of HPCx.
Figure 1 The ATHENA experiment at CERN, where the first cold antihydrogen atoms were created. The antiprotons enter the apparatus from the Antiproton Decelerator on the left and the positrons enter from an accumulator on the right.
The antihydrogen experiments catch antiprotons with kinetic energies of around 5 keV from the Antiproton Decelerator. These are trapped at energies of a few eV in a configuration of electric and magnetic fields called a nested Penning trap. In the same trap, the oppositely charged positrons, obtained from a radioactive isotope of sodium, are accumulated. The positrons form a charged plasma, which rotates around its axis due to the interaction with the magnetic field in the trap. A few thousand antiprotons are released into the plasma, pass through it, and are then reflected back by an electric field. In this way the antiprotons bounce back and forth, passing through the positron plasma on each bounce. While inside the plasma, the antiprotons and positrons can form antihydrogen. The dominant formation process is three-body recombination.
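The quoted period of about 10⁻¹¹ s follows from the cyclotron formula T = 2πm/(eB). The sketch below evaluates it for a positron and compares it with a formation time of order one second, to show how many cyclotron periods a single trajectory can span. The 3 T field and the 1 s time scale are illustrative assumptions rather than parameters given in the article, and this is not the simulation code that was run on HPCx.

```python
import math

# Physical constants in SI units (CODATA values).
ELECTRON_MASS_KG = 9.1093837015e-31   # the positron has the same mass as the electron
ELEMENTARY_CHARGE_C = 1.602176634e-19


def cyclotron_period_s(mass_kg: float, charge_c: float, b_field_t: float) -> float:
    """Period of circular motion of a charged particle in a uniform magnetic field."""
    return 2.0 * math.pi * mass_kg / (charge_c * b_field_t)


if __name__ == "__main__":
    b_field = 3.0        # assumed trap field in tesla (illustrative)
    longest_time = 1.0   # assumed formation time scale in seconds (illustrative)
    period = cyclotron_period_s(ELECTRON_MASS_KG, ELEMENTARY_CHARGE_C, b_field)
    print(f"Positron cyclotron period at {b_field} T: {period:.2e} s")
    print(f"Cyclotron periods in {longest_time} s: {longest_time / period:.1e}")
```

Under these assumptions one trajectory spans of order 10¹¹ cyclotron periods, and many trajectories are needed per parameter set. Simulations can reduce this cost, for example with guiding-centre approximations, but the article does not say which approach was used here.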
In this process an antiproton interacts with two positrons at once: one positron is captured, while the other carries away the excess binding energy. Since the final state must contain a second body to take away this energy, recombination of a single positron with an antiproton (two-body recombination) is only possible if a photon is emitted instead; this is known as radiative recombination. At the temperatures and densities of the experiments, radiative recombination is considerably slower than three-body recombination. However, at higher temperatures and lower densities it might give an important contribution. Radiative recombination has the advantage that it produces more tightly bound, and hence more stable, antihydrogen.
Once formed, the neutral antihydrogen atom is no longer confined by the Penning trap, and it will therefore drift towards the wall of the trap. Most of the time it will be ionized again, either through a collision with another positron or through field ionization. The antihydrogen atoms that reach the wall intact are detected through the simultaneous annihilation of their positron and antiproton. However, for spectroscopic studies the neutral atoms must also be trapped, in sufficiently large numbers. Trapping atoms around a minimum of the magnetic field is a well-established technique, but such traps are very shallow: for ground-state anti-atoms to stay in the trap, their kinetic energy must be below 1 kelvin (expressed as a temperature), something which so far has not been achieved in the experiments.
Our simulations have already given many interesting results. For instance, it turns out that optimizing the antihydrogen formation rate and optimizing the trapping conditions require conflicting experimental conditions. This is because the antiprotons need time to cool down: if formation is too efficient, the antiprotons form antihydrogen while they are still hot. We are currently investigating the antihydrogen formation rate as a function of positron temperature. Due to the non-equilibrium nature of the process, the temperature scaling deviates strongly from simpler theoretical predictions, something that has also been observed experimentally [5,6].
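Two back-of-the-envelope estimates may help with this passage. The first uses simple equilibrium scaling laws often quoted for recombination in a positron plasma of density n and temperature T: three-body recombination roughly proportional to n^2 T^(-9/2), and radiative recombination roughly proportional to n T^(-1/2). Both are assumptions made for illustration (the radiative exponent in particular is approximate and depends on which final states are counted), and they are the kind of simplified prediction that, as the text notes, real formation deviates from. The second converts an assumed magnetic well depth ΔB into a trap depth in kelvin as μ_B ΔB / k_B for a ground-state atom; the 1 T well depth is a hypothetical example value.

```python
from typing import Callable

# Physical constants in SI units (CODATA values).
BOHR_MAGNETON_J_PER_T = 9.2740100783e-24
BOLTZMANN_J_PER_K = 1.380649e-23


def three_body_rate(n: float, t: float) -> float:
    """Assumed equilibrium scaling of three-body recombination, arbitrary units."""
    return n**2 * t**-4.5


def radiative_rate(n: float, t: float) -> float:
    """Assumed approximate scaling of radiative recombination, arbitrary units."""
    return n * t**-0.5


def relative_change(rate: Callable[[float, float], float],
                    n0: float, t0: float, n1: float, t1: float) -> float:
    """Factor by which a rate changes when (n, T) goes from (n0, t0) to (n1, t1)."""
    return rate(n1, t1) / rate(n0, t0)


def magnetic_trap_depth_k(delta_b_t: float) -> float:
    """Trap depth in kelvin for a ground-state (anti)hydrogen atom: mu_B * dB / k_B."""
    return BOHR_MAGNETON_J_PER_T * delta_b_t / BOLTZMANN_J_PER_K


if __name__ == "__main__":
    # Illustrative change: halve the density and double the temperature.
    change = (1.0, 1.0, 0.5, 2.0)
    print(f"three-body rate changes by {relative_change(three_body_rate, *change):.4f}")
    print(f"radiative rate changes by  {relative_change(radiative_rate, *change):.4f}")
    print(f"trap depth for a 1 T well: {magnetic_trap_depth_k(1.0):.2f} K")
```

Under these assumed scalings, a hotter, more dilute plasma suppresses three-body recombination far more strongly than radiative recombination (in this example the balance shifts by a factor of 32 towards radiative recombination), which is why the latter may matter at higher temperatures and lower densities. Likewise, a well depth of order 1 T corresponds to a trap depth of well under 1 K, consistent with the requirement that trapped anti-atoms be colder than about 1 kelvin.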
References
[1] G. Baur et al., Production of antihydrogen, Phys. Lett. B 368 (1996), pp. 251–258.
[2] G. Blanford et al., Observation of atomic antihydrogen, Phys. Rev. Lett. 80 (1998), pp. 3037–3040.
[3] M. Amoretti et al., Production and detection of cold antihydrogen atoms, Nature 419 (2002), pp. 456–459.
[4] G. Gabrielse et al., Driven production of cold antihydrogen and the first measured distribution of antihydrogen states, Phys. Rev. Lett. 89 (2002), 0213401.
[5] M. Amoretti et al., Antihydrogen production temperature dependence, Phys. Lett. B 583 (2004), pp. 59–67.
[6] M. C. Fujiwara et al., Temporally Controlled Modulation of Antihydrogen Production and the Temperature Scaling of Antiproton-Positron Recombination, Phys. Rev. Lett. 101 (2008), 053401.
Launch of EPCC Research Collaboration web pages
EPCC is committed to making the power of advanced computing available to all areas of research. Our staff work on collaborative projects across a wide range of disciplines, and are enthusiastic about forming new research relationships and taking on new technical challenges. Please take some time to browse our new-look Research Collaboration web pages, which describe our current activities and give details on how to get involved in collaborating with EPCC. www.epcc.ed.ac.uk/research-collaborations
A snapshot from an ab-initio molecular dynamics simulation of an accurate model of the functionalized solid/liquid interface in dye-sensitized solar cells. For this system (1300 atoms), one BO MD step takes 1.2 min on 512 cores. Image courtesy F. Schiffmann and J. VandeVondele.
The Sixth DEISA Extreme Computing Initiative
Call for proposals for the 6th DEISA Extreme Computing Initiative. Opens November 2009.
The DEISA Extreme Computing Initiative, or DECI, is an excellent way to gain access to a large number of HPC cycles, for projects which are too large to run on a single National Resource.
DEISA also offers manpower and middleware to harness its grid of 15 different HPC systems distributed across Europe. The 5th DECI Call closed in May 2009 and received 74 proposals from across the world. The UK-based proposals alone requested a total of over 15 million core hours.
Further information: www.deisa.eu or contact gavin@epcc.ed.ac.uk
Seventh HPCx Annual Seminar
3rd December 2009, STFC Daresbury Laboratory
The seventh HPCx Annual Seminar will be held at STFC Daresbury Laboratory on Thursday 3rd December 2009. The seminar will be the final event of a highly successful series organised by the HPCx service providers. There will be presentations from HPCx users and staff which look back on research achievements enabled by HPCx, describe current work exploiting the flexibility offered under the complementarity initiative, and look forward to future HPC services. Attendance at this event is free for all academics. Further details and registration information can be found at: http://www.hpcx.ac.uk/about/events/annual2009/