

The Santa Claus Problem

[Extended Abstract]

Nikhil Bansal

Maxim Sviridenko

IBM T.J. Watson Research Center

P.O. Box 218

Yorktown Heights, NY 10598

IBM T.J. Watson Research Center

P.O. Box 218

Yorktown Heights, NY 10598

bansal@us.ibm.com

sviri@us.ibm.com

ABSTRACT

We consider the following problem: Santa Claus has $n$ presents that he wants to distribute among $m$ kids. Each kid has an arbitrary value for each present. Let $p_{ij}$ be the value that kid $i$ has for present $j$. Santa's goal is to distribute the presents in such a way that the least lucky kid is as happy as possible, i.e., he tries to maximize $\min_{i=1,\dots,m} \sum_{j \in S_i} p_{ij}$, where $S_i$ is the set of presents received by the $i$-th kid.

Our main result is an $O(\log\log m/\log\log\log m)$-approximation algorithm for the restricted assignment case of the problem, when $p_{ij} \in \{p_j, 0\}$ (i.e., when present $j$ has either value $p_j$ or 0 for each kid). Our algorithm is based on rounding a certain natural exponentially large linear programming relaxation usually referred to as the configuration LP. We also show that the configuration LP has an integrality gap of $\Omega(m^{1/2})$ in the general case, when $p_{ij}$ can be arbitrary.

1. INTRODUCTION

We consider the following maximin resource allocation problem. Santa Claus has $n$ presents that he wants to distribute among $m$ kids. Each kid has an arbitrary value for each present; let $p_{ij}$ denote the value of the $j$-th present for the $i$-th kid. Santa's goal is to distribute the presents in such a way that the least lucky kid is as happy as possible, i.e., he tries to maximize $\min_{i=1,\dots,m} \sum_{j \in S_i} p_{ij}$, where $S_i$ is the set of presents received by the $i$-th kid.

This problem is closely related to the classic problem of makespan minimization on unrelated parallel machines [9]. Our setting is similar, but the objective function is different. In the makespan problem, the goal is to distribute all the presents in a way that minimizes the value of presents received by the luckiest kid. Lenstra, Shmoys and Tardos [9] designed a 2-approximation algorithm for that problem and proved that there is no approximation algorithm with performance guarantee better than 3/2 unless P = NP.

The Santa Claus problem that we consider here has been studied in the literature, albeit under different names. The special case of “parallel machines”, or the case when all children place the same value on each present, was studied in the sequence of papers [2, 4, 5, 13]. Woeginger [13] obtains a polynomial time approximation scheme for that problem. The case of “uniform machines”, i.e., when the value $p_{ij} = p_j/s_i$, was studied in [2, 12] in the online setting. The general case was first considered in [10] in the game theoretic context of fairly allocating goods. Using techniques from [9, 11], Bezakova and Dani [3] give an algorithm that obtains a solution with value at least $\mathrm{Opt} - p_{\max}$, where $\mathrm{Opt}$ is the value of the optimal assignment and $p_{\max} = \max_{i,j} p_{ij}$. Unfortunately, when $p_{\max} \approx \mathrm{Opt}$ this does not provide any provable performance guarantee. Bezakova and Dani [3] also design two algorithms with performance guarantee $n - m + 1$ (note that wlog we may assume that $n \ge m$). Recently, Golovin [6] gave an algorithm which guarantees that at least a $(1 - 1/k)$ fraction of the kids receive presents with value at least $\mathrm{Opt}/k$, for any integer $k$. Golovin [6] also gave an $O(\sqrt{n})$-approximation for the so called “Big Goods/Small Goods” case where all $p_{ij} \in \{p_j, 0\}$ and moreover each $p_j \in \{1, X\}$. Finally, modifying the hardness result of [9] for unrelated parallel machine scheduling, [3] show that there is no approximation algorithm for the Santa Claus problem with performance guarantee better than 2 unless P = NP, even in the restricted assignment case when $p_{ij} \in \{p_j, 0\}$.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. STOC’06, May 21–23, 2006, Seattle, Washington, USA. Copyright 2006 ACM 1-59593-134-1/06/0005 ...$5.00.

In the rest of the paper we will use the machine scheduling terminology, i.e. presents correspond to jobs, kids correspond to machines, and the value of a present to a kid

corresponds to the processing time of a job on a machine.

The goal is to find an assignment of jobs to machines that

maximizes the minimal machine load. In the general unrelated machines case, pij can be arbitrary. The case when

pij ∈ {pj , 0} is referred to as the restricted assignment case.

As we shall see in Section 2, the natural linear programming relaxation for this problem, even when combined with the binary search and job size truncation technique of [9] (defined in Section 2), has a large $\Omega(m)$ integrality gap even for the restricted assignment case. In Section 2 we consider a stronger LP that we call “the configuration LP”. This linear program has a variable for every possible way to feasibly pack jobs on each machine and resembles the classical configuration LP used for the bin packing problem (see for example [7]). Unfortunately, it turns out that this stronger LP has a large $\Omega(\sqrt{m})$ integrality gap (the instance is given in Section 3) for the general Santa Claus problem. The main result of this paper is an $O(\log\log m/\log\log\log m)$-approximation algorithm for the restricted assignment case of the Santa Claus problem. This is described in Section 6. Section 4 gives a high level overview of our techniques and we conclude in Section 7.

2. LINEAR PROGRAMMING FORMULATIONS

The following linear programming formulation is perhaps

the most natural linear program associated with the Santa

Claus problem.

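$$
\begin{aligned}
\max\ & T \\
\text{s.t.}\ & \sum_{i=1}^{m} y_{ij} = 1, && j = 1, \dots, n, \\
& \sum_{j=1}^{n} p_{ij}\, y_{ij} \ge T, && i = 1, \dots, m, \\
& y_{ij} \ge 0, && \forall i, j.
\end{aligned}
$$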

However, the integrality gap of this formulation is unbounded even for an instance with a single job that has identical size $p$ on all machines (i.e., $p_{i1} = p$ for $i = 1, \dots, m$). This follows as the LP can distribute the one job fractionally among the $m$ machines, giving value $T = p/m$, while assigning it integrally leaves $m - 1$ machines empty, so the integral optimum is 0.
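The gap on the one-job instance can be checked numerically. The following is a minimal sketch, not part of the original paper: the helper names `integral_optimum` and `uniform_split_value` are hypothetical, the integral optimum is found by brute force (only sensible for tiny instances), and the fractional value is that of the particular feasible solution $y_{ij} = 1/m$ rather than the output of an LP solver.

```python
from fractions import Fraction
from itertools import product

def integral_optimum(p):
    """Brute-force max-min value; p[i][j] is the size of job j on machine i."""
    m, n = len(p), len(p[0])
    best = 0
    for assignment in product(range(m), repeat=n):  # assignment[j] = machine of job j
        loads = [0] * m
        for j, i in enumerate(assignment):
            loads[i] += p[i][j]
        best = max(best, min(loads))
    return best

def uniform_split_value(p):
    """Value T achieved by the feasible fractional solution y_ij = 1/m for all i, j."""
    m = len(p)
    return min(sum(Fraction(pij, m) for pij in row) for row in p)

# One job of size 7 on each of 4 machines (illustrative numbers).
m, size = 4, 7
single_job = [[size] for _ in range(m)]
print(uniform_split_value(single_job))  # fractional value p/m
print(integral_optimum(single_job))     # integral optimum
```

The fractional value is $p/m > 0$ while the integral optimum is 0, so no finite ratio bounds the gap.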

A natural way to deal with the problem above is to use binary search combined with the job truncation idea due to [9]. Fix a number $T$ that is our target value of the objective function. Define a new instance of the problem with sizes $p'_{ij} = \min\{p_{ij}, T\}$. The crucial observation is that any integral solution with value at least $T$ for the original sizes still has value at least $T$ for the instance with truncated sizes. Thus we consider the algorithm that performs a binary search to find the maximum value $T$ of the objective function for which the following linear program has a feasible solution:

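$$
\begin{aligned}
\sum_{i=1}^{m} y_{ij} &= 1, && j = 1, \dots, n, && (1) \\
\sum_{j=1}^{n} p'_{ij}\, y_{ij} &\ge T, && i = 1, \dots, m, && (2) \\
y_{ij} &\ge 0, && \forall i, j. && (3)
\end{aligned}
$$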

This technique eliminates a lot of bad instances (for example the one considered above), and in particular works

well when the job sizes are small compared to T . In fact,

using a rounding approach similar to that considered by [9,

11], Bezakova and Dani [3] proved the following result.

Lemma 1. Given a fractional solution to the assignment LP (1)-(3) with value $T$, it can be converted to an integral solution with value at least $T - p'_{\max}$, where $p'_{\max} = \max_{i,j} p'_{ij}$.

This implies a good approximation in the case when p′max

is not too close to T . However, in the case when p′max is

close to T , the integrality gap can be as large as Ω(m) even

in the restricted assignment case.

Consider the following instance with n = 2m − 1 jobs.

There are m small jobs labeled j = 1, . . . , m and m − 1 big

jobs labeled j = m + 1, . . . , 2m − 1. Each big job has size

exactly m on every machine. For i = 1, . . . , m, each small

job i has size 1 on machine i and 0 everywhere else (i.e.

pij = 1 if i = j and 0 otherwise). Note that this instance is

actually that of the restricted assignment case. For T = m

we have that p′ij = pij . Assigning job i to machine i for

i = 1, . . . , m and assigning each big job to an extent of

1/m on each machine, the fractional load on each machine

is exactly 1 + (m − 1) · (1/m) · m = m. Thus the LP above

has a feasible solution for T = m. On the other hand, as

there are only m − 1 big jobs, in any integral solution there

is some machine that is not assigned any big job. As exactly one small job has non-zero size on each machine, this implies that any integral solution has value at most 1.
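The instance above is small enough to check directly for a fixed $m$. The sketch below is an illustration under stated assumptions rather than the authors' code: `gap_instance`, `fractional_loads` and `integral_optimum` are hypothetical helper names, and the integral optimum is found by exhaustive search, which is only practical for very small $m$.

```python
from fractions import Fraction
from itertools import product

def gap_instance(m):
    """p[i][j]: m small jobs (job i has size 1 only on machine i), then m - 1 big jobs of size m."""
    return [[1 if i == j else 0 for j in range(m)] + [m] * (m - 1) for i in range(m)]

def fractional_loads(m):
    """Loads under the fractional solution from the text:
    small job i goes fully to machine i, every big job is split 1/m per machine."""
    p = gap_instance(m)
    loads = []
    for i in range(m):
        load = Fraction(p[i][i])
        load += sum(Fraction(1, m) * p[i][j] for j in range(m, 2 * m - 1))
        loads.append(load)
    return loads

def integral_optimum(p):
    """Brute-force max-min value over all integral assignments."""
    m, n = len(p), len(p[0])
    best = 0
    for assignment in product(range(m), repeat=n):
        loads = [0] * m
        for j, i in enumerate(assignment):
            loads[i] += p[i][j]
        best = max(best, min(loads))
    return best

m = 3
print(fractional_loads(m))                # every fractional load equals m
print(integral_optimum(gap_instance(m)))  # integral optimum
```

For $m = 3$ the fractional loads are all 3 while the best integral assignment reaches only 1, matching the ratio $m$ claimed in the text.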

We now define a stronger LP relaxation of the Santa Claus problem. A configuration is a subset of jobs. We say that configuration $C$ has size $p$ on machine $i$ if the total size of the jobs in $C$ on machine $i$ is $p$, i.e., $p = \sum_{j \in C} p_{ij}$. We use $p(C, i)$ to denote the size of $C$ on $i$. As usual, we assume that the value of the objective $T$ is known by doing a binary search. A configuration $C$ is valid for machine $i$ if $p(C, i) \ge T$. Let $\mathcal{C}(i, T)$ denote the set of all valid configurations for machine $i$. Sometimes, for notational convenience, we will write $\mathcal{C}(i)$ when the dependence on $T$ is clear.

The configuration LP is defined as follows. There is a

variable xi,C for each valid configuration C on machine i.

There could possibly be an exponential number of variables.

The constraints are:

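$$
\begin{aligned}
\sum_{C \in \mathcal{C}(i,T)} x_{i,C} &\ge 1, && \forall i, && (4) \\
\sum_{C \ni j} \sum_{i} x_{i,C} &\le 1, && \forall j, && (5) \\
x_{i,C} &\ge 0, && \forall i,\ \forall C \in \mathcal{C}(i,T). && (6)
\end{aligned}
$$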

The first set of constraints ensures that each machine is assigned at least one unit of configurations, and the second set of constraints ensures that no job is used in more than one unit of configurations.

We view the primal as a minimization problem whose objective function has all coefficients equal to zero. We define dual variables $y_i$ for $1 \le i \le m$ corresponding to the first set of constraints, and variables $z_j$ for $1 \le j \le n$ corresponding to the second set of constraints. Thus the dual of the program above is

$$
\begin{aligned}
\max\ & \sum_{i=1}^{m} y_i - \sum_{j=1}^{n} z_j \\
\text{s.t.}\ & \sum_{j \in C} z_j \ge y_i, && \forall i,\ \forall C \in \mathcal{C}(i,T), \\
& z_j \ge 0, && \forall j.
\end{aligned}
$$

We claim that the separation problem for this dual program is the regular minimum knapsack problem. Indeed, given a candidate solution $(y^*, z^*)$, finding a violated constraint consists of finding a machine $i$ and a set of jobs $J'$ such that $\sum_{j \in J'} z^*_j < y^*_i$ and $\sum_{j \in J'} p_{ij} \ge T$.

As a preprocessing step we can round down the size of each job to the nearest integer multiple of $\epsilon T/n$. Since there can be at most $n$ jobs on any machine, this affects the solution by at most $\epsilon T$. Thus, after rescaling, we can assume that all jobs have integral sizes in the range $[0, n/\epsilon]$, and the knapsack problem above can be solved in polynomial time using the standard dynamic program. Thus the dual can be solved up to arbitrary precision using the ellipsoid method in polynomial time. Solving the primal problem exactly on the subset of variables (columns) that correspond to the inequalities of the dual considered by the ellipsoid method, we can find a feasible solution for the linear program (4)-(6) to any desired accuracy in polynomial time.
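The separation step described above can be sketched as a small dynamic program. This is an illustrative sketch only: the function names `min_cost_cover` and `find_violated_constraint` are hypothetical, sizes are assumed to be already rounded to non-negative integers, and the ellipsoid method that would call this oracle is not shown.

```python
from fractions import Fraction

def min_cost_cover(sizes, costs, target):
    """Cheapest subset of jobs whose total size is at least target (minimum knapsack).

    sizes: non-negative integers; costs: non-negative numbers; target: non-negative integer.
    Returns (cost, jobs), or (None, None) if the target cannot be reached.
    """
    INF = float("inf")
    # best[s] = (cheapest cost, job list) with covered size s, where s is capped at target
    best = [(INF, None)] * (target + 1)
    best[0] = (0, [])
    for j, (size, cost) in enumerate(zip(sizes, costs)):
        new_best = list(best)            # copy so that each job is used at most once
        for s in range(target + 1):
            c, jobs = best[s]
            if jobs is None:
                continue
            t = min(target, s + size)
            if c + cost < new_best[t][0]:
                new_best[t] = (c + cost, jobs + [j])
        best = new_best
    cost, jobs = best[target]
    return (None, None) if jobs is None else (cost, jobs)

def find_violated_constraint(p, y, z, T):
    """Return (i, C) with sum_{j in C} z_j < y_i and p(C, i) >= T, or None if no such pair exists."""
    for i, row in enumerate(p):
        cost, jobs = min_cost_cover(row, z, T)
        if jobs is not None and cost < y[i]:
            return i, jobs
    return None

z = [Fraction(1, 2), Fraction(1, 2), Fraction(1, 4)]
print(find_violated_constraint([[2, 2, 3]], [1], z, 4))
```

In the example, jobs 0 and 2 form a valid configuration of size 5 with dual cost 3/4, which is below $y_0 = 1$, so the corresponding dual constraint is reported as violated.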

Note that any feasible solution to the configuration LP induces a fractional assignment $y_{ij}$ such that the load on each machine is at least $T$. Moreover, the configuration LP is significantly stronger than the assignment LP defined by (1)-(3). It is instructive to consider the behavior of the configuration LP on the $\Omega(m)$ integrality gap instance (described above) for the LP (1)-(3). On this instance, the configuration LP is infeasible for any $T > 1$ and hence gives an exact solution. Unfortunately, as we show next, even the configuration LP has a large integrality gap in the general case of arbitrary $p_{ij}$.
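The infeasibility claim for $T > 1$ is stated without proof; one possible argument, given here as a sketch, goes as follows. Let $B$ denote the set of $m - 1$ big jobs (a symbol introduced here only for illustration). On machine $i$ the small jobs contribute at most 1 in total, so for $T > 1$ every valid configuration must contain at least one big job, i.e. $|C \cap B| \ge 1$. Summing constraint (4) over all machines and constraint (5) over the big jobs then gives a contradiction:

```latex
\begin{align*}
m \;\le\; \sum_{i=1}^{m} \sum_{C \in \mathcal{C}(i,T)} x_{i,C}
  \;\le\; \sum_{i=1}^{m} \sum_{C \in \mathcal{C}(i,T)} |C \cap B| \, x_{i,C}
  \;=\; \sum_{j \in B} \sum_{i} \sum_{C \ni j} x_{i,C}
  \;\le\; |B| \;=\; m - 1 .
\end{align*}
```

The first inequality is (4), the second uses $|C \cap B| \ge 1$, the equality exchanges the order of summation, and the last inequality is (5).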

3. THE INTEGRALITY GAP OF CONFIGURATION LP

In this section we give a family of instances of the general Santa Claus problem for which the configuration LP has an unbounded integrality gap. In particular, for an arbitrary parameter $k$, we give an instance with $O(k^2)$ jobs and $O(k^2)$ machines such that the configuration LP has a solution with value $\Omega(k)$ whereas the optimum integral solution has value 1. Moreover, all the job sizes in the instance are either 0, 1 or $k$.

Let $k$ be an arbitrary integer. The instance consists of a set of machines $M$ and a set of jobs $J$. The set $M$ consists of $k + 1$ groups $M_0, \dots, M_k$ where each group $M_i$ has $k$ machines $m_{i,1}, \dots, m_{i,k}$.

The set of jobs J consists of three types of jobs, big, small

and dummy denoted by Jb , Js and Jd respectively. The set

Jb has k − 1 “big” jobs jb,1 , . . . , jb,k−1 , each of which has

size exactly k on the machines in M0 and size 0 everywhere

else. The set Js consists of k groups of jobs Js,i , where i =

1, . . . , k. Each set Js,i contains k jobs js,i,1 , . . . , js,i,k . The

job js,i,l has size k on machine mi,l , has size 1 on machine

m0,i and has size 0 everywhere else. In other words, the lth

job in group Js,i has only two non-zero sizes: It has size k

on the lth machine in group Mi and has size 1 on the ith

machine in M0 . Finally, for each i = 1, . . . , k there is a

dummy job jd,i that has size exactly k on the machines in

the set Mi and 0 everywhere else. We refer the reader to

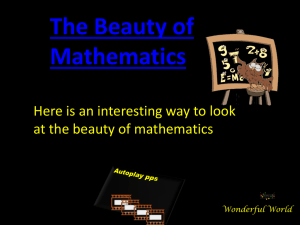

Figure 1 which gives a (partial) configuration LP solution

for the case of k = 3. Here each machine is depicted as a bin

of width 1 and each configuration is depicted as rectangular

slab of height greater than or equal T . The width of the slab

denotes the amount of configuration (xi,C ) in the solution,

and the height of each piece in the slab is the size of job in

that configuration.

Lemma 2. For the instance (J, M ) defined above, there

exists a fractional feasible solution to the configuration LP

defined by (4)-(6) with T = k.

Proof. Consider the following fractional solution (partially depicted in Figure 1 for the case $k = 3$). The $k - 1$ jobs in $J_b$ are distributed equally among the $k$ machines in $M_0$. That is, each machine $m_{0,i} \in M_0$ is assigned a configuration consisting of the singleton job $j_{b,l}$ to the extent of $1/k$ for each $l = 1, \dots, k - 1$. Next, for each $i = 1, \dots, k$, the machine $m_{0,i}$ is assigned the configuration consisting of the $k$ jobs from $J_{s,i}$ to the extent of $1/k$. This completes the description on $M_0$. We now describe the assignment of configurations for machines in $M_1, \dots, M_k$. For $i = 1, \dots, k$ and $l = 1, \dots, k$, the machine $m_{i,l}$ is assigned $1 - 1/k$ units of the configuration consisting of the singleton job $j_{s,i,l}$ and $1/k$ of the configuration consisting of the singleton job $j_{d,i}$. Figure 1 shows the assignment to machines in $M_1$. It is easy to verify that the assignment is a valid one. In particular, each configuration has load $k$, each machine is assigned 1 unit of configurations and each job occurs in at most 1 unit of configurations.

Figure 1: A partial fractional solution to machines $M_0 = \{m_{0,1}, m_{0,2}, m_{0,3}\}$ and machines in $M_1 = \{m_{1,1}, m_{1,2}, m_{1,3}\}$ corresponding to the case $k = 3$. The big jobs $j_{b,1}$ and $j_{b,2}$ are distributed among $M_0$, and jobs in $J_{s,1}$ are distributed among $m_{0,1}$ and $M_1$.
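The verification that the proof of Lemma 2 calls easy can also be done mechanically. The following sketch rebuilds the Section 3 instance and the fractional solution described in the proof, then checks validity of every configuration together with constraints (4) and (5). All identifiers (`gap_instance_sizes`, `lemma2_solution`, `is_feasible`, and the tuple encoding of machines and jobs) are assumptions made for this illustration.

```python
from collections import defaultdict
from fractions import Fraction

def gap_instance_sizes(k):
    """size[(machine, job)] for the Section 3 instance; pairs that are absent have size 0."""
    size = {}
    for i in range(1, k + 1):
        for l in range(1, k):                        # big jobs j_{b,1..k-1} on machines of M_0
            size[("m", 0, i), ("b", l)] = k
        for l in range(1, k + 1):
            size[("m", i, l), ("s", i, l)] = k       # j_{s,i,l} on m_{i,l}
            size[("m", 0, i), ("s", i, l)] = 1       # j_{s,i,l} on m_{0,i}
            size[("m", i, l), ("d", i)] = k          # dummy j_{d,i} on machines of M_i
    return size

def lemma2_solution(k):
    """(machine, configuration, amount) triples following the proof of Lemma 2."""
    x = []
    for i in range(1, k + 1):
        for l in range(1, k):
            x.append((("m", 0, i), frozenset([("b", l)]), Fraction(1, k)))
        small = frozenset(("s", i, l) for l in range(1, k + 1))
        x.append((("m", 0, i), small, Fraction(1, k)))
        for l in range(1, k + 1):
            x.append((("m", i, l), frozenset([("s", i, l)]), 1 - Fraction(1, k)))
            x.append((("m", i, l), frozenset([("d", i)]), Fraction(1, k)))
    return x

def is_feasible(k, T):
    """Check validity of every configuration and constraints (4) and (5) for target T."""
    size, x = gap_instance_sizes(k), lemma2_solution(k)
    per_machine, per_job = defaultdict(Fraction), defaultdict(Fraction)
    for machine, config, amount in x:
        if sum(size.get((machine, job), 0) for job in config) < T:
            return False                             # configuration is not valid for T
        per_machine[machine] += amount
        for job in config:
            per_job[job] += amount
    machines = {machine for (machine, _job) in size}
    return (all(per_machine[machine] >= 1 for machine in machines)   # constraint (4)
            and all(total <= 1 for total in per_job.values()))       # constraint (5)

print(is_feasible(3, 3))
```

With exact rational arithmetic the check succeeds for $T = k$ and fails for any larger $T$, since every configuration used has load exactly $k$.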

Lemma 3. The value of any integral solution on the instance (J, M ) described above is at most 1.

Proof. Consider an arbitrary integral solution for the

instance (J, M ). Since there are k − 1 jobs in Jb and k

machines in M0 , there is some machine m0,i in M0 that is

not assigned any job in Jb . By our construction, other than

jobs in Jb , only jobs from Js,i have nonzero processing times

on the machine m0,i , and each of these jobs has size 1 on

m0,i . If one or fewer jobs from the set Js,i are assigned to

the machine m0,i , then clearly it has load at most 1 and the

result follows. Thus, suppose that there are at least two jobs

from Js,i that are assigned to m0,i . In this case we will show

that there is some machine to which no job is assigned. As

the set Js,i contains exactly k jobs, and at least two of these

are assigned to m0,i , there are at most k − 2 jobs in Js,i that

can be assigned to machines in $M_i$. As the only other job (other than jobs in $J_{s,i}$) that can be assigned to a machine in $M_i$ is the dummy job $j_{d,i}$, it follows that there are at most $k - 1$ jobs available to be assigned to the $k$ machines in $M_i$, which implies that some machine in $M_i$ must be empty.
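For very small $k$ the bound of Lemma 3 can be confirmed by exhaustive search. The sketch below is an illustration and not the paper's method: the function name `section3_integral_optimum` is hypothetical, and each job is only ever placed on a machine where it has non-zero size, which loses nothing because placing a job elsewhere adds no load.

```python
from itertools import product

def section3_integral_optimum(k):
    """Brute-force max-min load for the Section 3 instance with parameter k."""
    machines = [(g, l) for g in range(k + 1) for l in range(1, k + 1)]
    jobs = []                                         # each job: {machine: non-zero size}
    for _ in range(1, k):                             # k - 1 big jobs, size k on M_0
        jobs.append({(0, i): k for i in range(1, k + 1)})
    for i in range(1, k + 1):
        for l in range(1, k + 1):                     # small job j_{s,i,l}
            jobs.append({(i, l): k, (0, i): 1})
        jobs.append({(i, l): k for l in range(1, k + 1)})   # dummy job j_{d,i}
    best = 0
    for choice in product(*[list(job.items()) for job in jobs]):
        loads = dict.fromkeys(machines, 0)
        for machine, size in choice:
            loads[machine] += size
        best = max(best, min(loads.values()))
    return best

print(section3_integral_optimum(2))
```

The search space has 128 assignments for $k = 2$ and 124,416 for $k = 3$, so this only serves as a sanity check on tiny cases; in both, the optimum is 1 while Lemma 2 gives a fractional value of $k$.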

4. ALGORITHM OVERVIEW AND ROADMAP

Given Lemma 1, it follows that the hard case is when some jobs have size close to the optimum solution $T$, and hence the load on some machines can essentially be due to a single job. We call such jobs big. Throughout this paper, the reader may focus only on the special case where the job sizes are either $T$ (which is a big number), or 1 (small). We make the reason for this precise in Section 6.1. In this case, the main problem reduces to determining the subset of machines on which big jobs should be placed. Clearly, this choice of machines must be such that it should be possible to load each of the remaining machines reasonably well using only small jobs. Consider for example the bad integrality gap instance for the LP defined by (1)-(3). Here, there is a group of $m$ machines and $m - 1$ big jobs with the property that these jobs can be placed on any $m - 1$ machines. Thus one machine needs to be loaded using small jobs. However, the problem in this instance was that no machine could be assigned more than one small job.

Our proof is organized into two parts. In Sections 5.1 and 5.2, we make the underlying structure of the problem (mentioned above) explicit. Starting with a feasible solution of the configuration LP, we give a transformation procedure which makes the LP solution more structured at the loss of an $O(1)$ factor in the approximation ratio. After this procedure, the machines and big jobs can be clustered into groups $M_1, \dots, M_p$ and $J_1, \dots, J_p$ respectively with the following property. For each $i = 1, \dots, p$, the group $J_i$ contains exactly $|M_i| - 1$ big jobs such that they can be assigned to any subset of $|M_i| - 1$ machines in $M_i$. More precisely, for each $i$, the big jobs in $J_i$ make up $|M_i| - 1$ units of configurations that are distributed among machines in $M_i$. The remaining one unit of configuration in $M_i$ consists of various small-job configurations that are distributed across the different machines in $M_i$. This transformation allows us to completely ignore the big jobs. In particular, the problem reduces to choosing exactly one machine $m_i$ from each $M_i$ on which small jobs must be placed (the machines in $M_i \setminus \{m_i\}$ can be filled with $J_i$). The group of machines $M_0$ and jobs $J_b$ in Figure 1 is a good example of a cluster.

Given the above structural properties, an $O(\log n/\log\log n)$ approximation follows easily by randomized rounding (this is shown in Section 5.3). For each cluster $M_i$, there is one unit of small-job configurations distributed among machines in $M_i$ by the configuration LP solution $x^*$. We choose one of these configurations at random according to the probability determined by $x^*$ and assign it to the corresponding machine $m(i) \in M_i$. This gives a pseudo-assignment where each machine has load $T$, and by standard Chernoff bounds, each small job is assigned to no more than $O(\log n/\log\log n)$ machines (or equivalently, the worst case congestion for small jobs is $O(\log n/\log\log n)$). Since the multiply assigned jobs are small, a simple argument (Lemma 4) shows that this pseudo-solution can be used to obtain a proper solution where each machine has load $\Omega(T \log\log n/\log n)$.

In Section 6, we improve the approximation ratio to $O(\log\log m/\log\log\log m)$. Note that here we have a guarantee in $m$ (instead of that in $n$ above), which is only better as $m \le n$. The main idea for the improved approximation is that we can relax the “worst case congestion” requirement for small jobs. In particular we show that for each cluster $M_i$, we can choose a configuration $C_i$ that can be assigned to some machine in $M_i$ with the property that after we throw away some constant fraction of jobs from each chosen $C_i$, no job occurs in more than $O(\log\log m/\log\log\log m)$ sets $C_i$. We call this the congestion with outliers property. This gives us a pseudo-schedule where each machine has load $\Omega(T)$ and no job is assigned to more than $O(\log\log m/\log\log\log m)$ machines, which implies the desired approximation. We show that the “congestion with outliers” property on arbitrary configurations is closely related to the “worst case congestion” property in an instance where each configuration has only poly-logarithmically many jobs. This allows us to use the known results that give better guarantees than randomized rounding for worst case congestion when the configurations have few jobs.

5. SIMPLIFICATION OF THE RESTRICTED ASSIGNMENT CASE

As all $p_{ij} \in \{0, p_j\}$ in the restricted assignment case, without any ambiguity, we will call $p_j$ the size of job $j$. Throughout, we use $m$ to denote the number of machines and $n$ for the number of jobs.

5.1 Rounding Big Jobs and Relaxing Feasibility

Let $I$ be an instance of the problem. Let $T$ denote the largest value for which the configuration LP has a feasible solution, and we assume (by doing a binary search) that our algorithm knows $T$. Clearly, $T$ is an upper bound on the optimum integral solution. We modify the instance $I$ as follows. Consider a parameter $\alpha > 1$, which will be related to the desired approximation ratio (it is convenient to think of $\alpha$ as a large constant, say 10, though in Sections 5.3 and 6, we will choose super-constant values of $\alpha$). We round all $p_j \ge T/\alpha$ to $T$, and leave all $p_j < T/\alpha$ unchanged. A job $j$ with $p_j = T$ is called big, otherwise we call it a small job. We call this modified instance $I'$ an $\alpha$-gap instance.

Since the sizes are rounded up, the instance $I'$ has an optimal value at least as large as that of $I$. Moreover, as any big job has size $T$, we can assume that in any solution to $I'$, a machine is either assigned a single big job, or else it is assigned multiple small jobs.

We claim that if there is a solution $S$ with value at least $T/\alpha$ for $I'$, then the same solution when considered on the instance $I$ will guarantee that each machine has load at least $T/\alpha$. To see this, if $S$ assigns a big job to a machine, then that machine has load at least $T/\alpha$ for $I$ (as each big job has size at least $T/\alpha$ in $I$), and if $S$ assigns small jobs with total load $T'$ to a machine, then that machine also has load $T'$ in $I$ (as the size of small jobs is the same in $I$ and $I'$). Henceforth, we will assume that the input instance is an $\alpha$-gap instance with largest job size $T$ and is guaranteed to have a feasible solution to the configuration LP with load $T$.

Consider the assignment LP defined by (1)-(3). For $\beta \ge 1$, suppose we relax the condition (1) for small jobs to

$$
\sum_{i=1}^{m} y_{ij} \le \beta .
$$

Let $y^*$ be the solution to this relaxed LP. We call $y^*$ a $\beta$-relaxed assignment. Note that we do not allow a big job to occur more than once. Lemma 4 below gives a simple but useful property of $\beta$-relaxed solutions.
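As a small illustration of the $\alpha$-gap rounding defined earlier in this subsection, the sketch below rounds a list of job sizes and compares the minimum load of one fixed assignment on the rounded and on the original sizes. The helper names `alpha_gap_sizes` and `min_load` and the example numbers are assumptions made for the illustration; the eligibility sets of the restricted assignment case are not modelled and the assignment is simply taken as given.

```python
from fractions import Fraction

def alpha_gap_sizes(sizes, T, alpha):
    """Set every p_j >= T/alpha to T and leave smaller sizes unchanged."""
    threshold = Fraction(T) / alpha
    return [T if p >= threshold else p for p in sizes]

def min_load(assignment, sizes, m):
    """Minimum machine load when job j goes to machine assignment[j]."""
    loads = [0] * m
    for j, i in enumerate(assignment):
        loads[i] += sizes[j]
    return min(loads)

T, alpha = 10, 4
original = [9, 4, 2, 1, 1]
rounded = alpha_gap_sizes(original, T, alpha)   # jobs of size 9 and 4 become big
assignment = [0, 1, 2, 2, 2]                    # one big job each on machines 0 and 1

print(rounded)
print(min_load(assignment, rounded, 3), min_load(assignment, original, 3))
```

Here the assignment has value 4 on both instances, which is at least $T/\alpha = 2.5$, in line with the claim that a solution of value at least $T/\alpha$ on $I'$ keeps that guarantee on $I$.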

Lemma 4. Let $I$ be an $\alpha$-gap instance, and $S$ be a $\beta$-relaxed assignment for $I$ with value at least $T/\gamma$, for some $\gamma > 1$. If $\beta\gamma < \alpha$, then there exists a proper integral solution (where each job can be assigned at most once) with value at least $T/(\gamma\beta) - T/\alpha$.

Proof. As $I$ is an $\alpha$-gap instance, each machine is assigned either a single big job, or many small jobs. Let $M_s$ denote the set of machines which are assigned small jobs by $S$, and let $J_s$ denote the set of all small jobs. By definition, $S$ yields a feasible solution $y^*$ to

$$
\begin{aligned}
\sum_{j \in J_s} p_{ij}\, y_{ij} &\ge T/\gamma, && \forall i \in M_s, \\
\sum_{i \in M_s} y_{ij} &\le \beta, && \forall j \in J_s, \\
0 \le y_{ij} &\le 1.
\end{aligned}
$$

Scaling each $y^*_{ij}$ down by $\beta$ gives a feasible solution to

$$
\begin{aligned}
\sum_{j \in J_s} p_{ij}\, y_{ij} &\ge T/(\gamma\beta), && \forall i \in M_s, \\
\sum_{i \in M_s} y_{ij} &\le 1, && \forall j \in J_s, \\
0 \le y_{ij} &\le 1.
\end{aligned}
$$

Since each job in $J_s$ has size at most $T/\alpha$, by Lemma 1, this solution can be transformed to an integral assignment of jobs in $J_s$ to machines in $M_s$ with value at least $T/(\beta\gamma) - T/\alpha$.

The algorithm begins by solving the configuration LP for the $\alpha$-gap instance. Let $x^* = \{\dots, x_{i,C}, \dots\}$ be a fractional assignment of configurations to machines. We call a configuration on a machine big if it consists of a single big job, and small otherwise. Let $B(i)$ denote the set of valid big configurations for machine $i$. Given the solution $x^*$, consider the induced assignment $y_{ij}$. For job $j$, let $y(j) = \sum_{i=1}^{m} y_{ij}$ denote the cumulative amount of job $j$ assigned in the solution. Clearly, the constraints (5) in the configuration LP ensure that $y(j) \le 1$ for each job $j$. In the next section, we will modify the solution $x^*$ in various ways. In the process the value of $y(j)$ will be allowed to increase up to $\eta = 3$ for small jobs. However, we will ensure that $y(j)$ never exceeds 1 for big jobs.

5.2 Transforming the Solution

In this section we show that any solution $x^*$ can be transformed into another 3-relaxed solution $x'$ with certain “good” structural properties (stated in Lemma 6).

We begin by simplifying the assignment of big jobs in $x^*$. Let $J_b$ denote the set of big jobs. Consider the bipartite graph $G$ with machines $M$ on the left and the big jobs $J_b$ on the right. We put an edge of weight $y_{ij}$ between machine $i$ and big job $j$ if $y_{ij} > 0$.

Lemma 5. The solution $x^*$ can be transformed into another (1-relaxed) configuration LP solution where the graph $G$ is a forest.

Proof. We apply the following procedure until the graph $G$ becomes acyclic. Consider an arbitrary cycle $C$ in $G$. Since $G$ is bipartite, $C$ has even length. We decompose $C$ into two matchings $P_1$ and $P_2$. We continuously and simultaneously increase the weights $y_{ij}$ on the edges of $P_1$ and decrease the weights on the edges of $P_2$ at the same rate until some $y_{ij}$ is 0 or 1. If some $y_{ij}$ reaches 0, we remove the edge $(i, j)$ from the graph. If $y_{ij} = 1$ for some big job $j$, we assign job $j$ to machine $i$ permanently and delete both $i$ and $j$ from the instance.

Note that no big job is assigned to more than one unit of machines in total. Similarly, the amount of small configurations assigned to a machine can only decrease during this procedure (this happens only in the step when we assign a big job to a machine permanently, since in this case we might throw away some small job configurations).

Let G′ denote the forest obtained after the above procedure,

and let x∗ (abusing the notation slightly) denote the corresponding configuration LP solution. We now show how this

forest structure can be exploited to form clusters of machines

and big jobs.
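The cycle-cancelling step in the proof of Lemma 5 can be sketched on the weighted bipartite graph alone. This is a simplified illustration, not the authors' procedure in full: it works on edge weights $y_{ij}$ rather than on configurations, it assumes every job has total weight at most 1, and it leaves a pair whose weight reaches 1 in place instead of deleting it. The names `find_cycle` and `cancel_cycles` are hypothetical.

```python
from fractions import Fraction

def find_cycle(edges):
    """Return the nodes of some cycle of the bipartite graph, or None if it is a forest.

    edges: iterable of (machine, job) pairs; nodes are tagged ("m", machine) or ("j", job).
    """
    adj = {}
    for machine, job in edges:
        adj.setdefault(("m", machine), []).append(("j", job))
        adj.setdefault(("j", job), []).append(("m", machine))
    parent = {}

    def path_to_root(node):
        path = [node]
        while parent[path[-1]] is not None:
            path.append(parent[path[-1]])
        return path

    for root in adj:
        if root in parent:
            continue
        parent[root] = None
        stack = [root]
        while stack:
            u = stack.pop()
            for v in adj[u]:
                if v == parent[u]:
                    continue
                if v not in parent:
                    parent[v] = u
                    stack.append(v)
                    continue
                # Non-tree edge (u, v): close the cycle through the two tree paths.
                pu, pv = path_to_root(u), path_to_root(v)
                on_pu = set(pu)
                meet = next(node for node in pv if node in on_pu)
                return pu[:pu.index(meet) + 1] + pv[:pv.index(meet)][::-1]
    return None

def cancel_cycles(y):
    """y: {(machine, job): weight}, every job's total weight at most 1. Returns a forest."""
    y = {e: Fraction(w) for e, w in y.items() if w > 0}
    while True:
        cycle = find_cycle(y)
        if cycle is None:
            return y
        t = len(cycle)
        cycle_edges = []
        for a in range(t):
            u, v = cycle[a], cycle[(a + 1) % t]
            cycle_edges.append((u[1], v[1]) if u[0] == "m" else (v[1], u[1]))
        p1, p2 = cycle_edges[0::2], cycle_edges[1::2]   # the two matchings of the even cycle
        delta = min(min(y[e] for e in p2), min(1 - y[e] for e in p1))
        for e in p1:
            y[e] += delta
        for e in p2:
            y[e] -= delta
            if y[e] == 0:
                del y[e]                                # edge weight reached 0: remove it

half = Fraction(1, 2)
forest = cancel_cycles({(0, "a"): half, (1, "a"): half, (0, "b"): half, (1, "b"): half})
print(forest)
```

Because each node on the cycle touches one edge of each matching, the total weight at every machine and every job is unchanged, and each round removes at least one edge, so the loop terminates with an acyclic graph.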

Lemma 6. The solution x∗ can be transformed into another (relaxed) configuration LP solution x′ and the machines and big jobs can be clustered into groups M1 , . . . , Mp

and J1 , . . . , Jp respectively with the following properties:

1. For each i = 1, . . . , p, the number of jobs in group Ji is

exactly |Mi | − 1. The group Ji could be possibly empty.

2. Within a group the assignment of big jobs is completely

flexible in the sense that they can be placed feasibly on

any |Mi | − 1 out of the |Mi | machines.

3. For each group Mi , the solution x′ assigns exactly 1

unit of small configurations to machines in Mi and all

the |Mi | − 1 units of configurations corresponding to

big jobs in Ji . The assignment LP solution induced by

x′ is 2-relaxed.

Proof. Consider the forest G′ obtained at the end of

Lemma 5. We apply the following procedure to each tree in

this forest.

1. Any isolated machine node in G′ is a machine that

has only small job configurations assigned to it by x∗ .

Such a node trivially forms a cluster by itself with the

corresponding job cluster being the empty set.

2. If a big job j is a leaf, assign it to its parent node (that

is a machine) m and delete both m and j from G′

(i.e. the job j is assigned to machine m permanently).

Observe that this step keeps the solution 1-relaxed.

Continue applying this step until no job node is a leaf

(and hence each job node has degree at least two).

3. If each job node has degree exactly two, form a machine cluster Mi consisting of machines in this tree and

another job cluster Ji consisting of big jobs in this tree.

Note that by the degree property, |Ji | = |Mi | − 1.

4. Otherwise, root the tree arbitrarily at some machine node. Consider some job node $j^*$ with at least two children, and with the minimality property that every other job node in the subtree rooted at $j^*$ has exactly one child. Suppose $j^*$ has $l \ge 2$ children and let $m_1, m_2, \dots, m_l$ denote the machine nodes corresponding to these children. In the solution $x^*$, at most one unit of $j^*$ is assigned to $m_1, \dots, m_l$. As $l \ge 2$, at least one of these machine nodes (call it $m_1$) must have at most 0.5 units of $j^*$ assigned to it. Consider the subtree $R$ rooted at $m_1$. By the minimality property of $j^*$, each job node in $R$ has exactly one machine node child. Let $M(R)$ and $J(R)$ be the set of machine nodes and job nodes in $R$ respectively. Clearly, $|J(R)| = |M(R)| - 1$. The machines in $M(R)$ together receive at least $|M(R)|$ units of configurations. As each job in $J(R)$ is assigned at most one unit to nodes in $M(R)$ and as $j^*$ is assigned at most 0.5 units to $M(R)$, it follows that the cumulative amount of small configurations assigned by $x^*$ to $M(R)$ is at least 0.5. We form the cluster $M(R)$ and corresponding cluster $J(R)$, remove the subtree $R$ from $G'$ and repeat the procedure.

During the above procedure, some big jobs get assigned

to machines permanently (step 2). At the end, we are left

with a collection of groups M1 , . . . , Mp and J1 , . . . , Jp .

By construction we have that |Ji | = |Mi | − 1, and hence

they satisfy the first property of the lemma. The second

property follows by observing that for any node m ∈ Mi ,

we can root the tree corresponding to Mi ∪ Ji at m and

assign each job node j ∈ Ji to its child machine (this can

always be done as no job j is a leaf). The third property

follows as the cumulative amount of small configurations

assigned by the solution x∗ to the machine nodes in Mi

is at least 0.5. For each machine m ∈ Mi we can scale

the small configurations xi,C uniformly (possibly adjusting

the assignment of big configurations, to ensure constraints

(4) in the configuration LP) such that the amount of small

configurations in Mi is exactly 1. The resulting solution is

clearly 2-relaxed.

Lemma 6 implies that the assignment of big jobs to machines within a group is completely flexible and can be ignored. Henceforth given a group Mi , we will only care about

the small configurations assigned to machines in Mi by the

configuration LP. We will call Mi a super-machine. If the

solution x′ assigns xm,C amount of small configuration C to

machine m ∈ Mi, we say that Mi contains a configuration-machine pair (C, m) to an extent xm,C. Finally, we can

make another (minor) simplification.

Lemma 7. We can round the solution x′ such that each

xm,C is an integral multiple of 1/(n + m), and the resulting

solution is 3-relaxed.

Proof. The total number of nonzero variables xi,C in

any basic feasible solution to the configuration LP (4)-(6) is at

most n + m. Thus we can round each xi,C either up or down

to the nearest multiple of 1/(n + m) while keeping the total

of small configurations for each super-machine equal to 1.

Since each variable xi,C is increased by at most 1/(n + m)

the new solution is 3-relaxed.

5.3 The Algorithmic Framework and a Simple Algorithm

The underlying idea of our algorithms is the following.

Suppose we could find a (relaxed) assignment of small jobs

to super-machines with the following properties:

1. For each super-machine Mi, there is some machine m(i) ∈ Mi that is assigned small jobs with total load at least T/γ.

2. No job is assigned to more than β machines (i.e. the

assignment is β-relaxed).

Then, by Lemma 6, for each super-machine Mi we can assign the big jobs in Ji to machines other than m(i). For the machines m(i), by Lemma 4, there is a proper assignment where each machine has load at least T/(βγ) − T/α. So, if βγ < α/2, we are guaranteed that each machine has load at least T/α. This will give us an α = O(βγ) approximation. In Section 6.5, we will show that we can always choose γ = O(1) and β = O(log log m/ log log log m), which will imply our main result.
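To make the arithmetic in this framework explicit: whenever βγ ≤ α/2 we have T/(βγ) ≥ 2T/α, so the load bound from Lemma 4 simplifies as shown below. This is only a restatement of the inequality in the text, not an additional claim.

```latex
\frac{T}{\beta\gamma} - \frac{T}{\alpha}
  \;\ge\; \frac{2T}{\alpha} - \frac{T}{\alpha}
  \;=\; \frac{T}{\alpha}
\qquad \text{whenever } \beta\gamma \le \frac{\alpha}{2}.
```

In particular, choosing α = 2βγ already suffices, which is why the approximation factor is O(βγ).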

In fact, we will always ensure a somewhat stronger guarantee than property (1) above: all the small jobs assigned to Mi will belong to some single configuration-machine pair (m, C) where m ∈ Mi.

We begin by showing a simple O(log n/ log log n) approximation.

Theorem 1. A simple randomized rounding based algorithm has an approximation guarantee of O(log n/ log log n).

Proof. For each super-machine Mi, by Lemma 6 we have that exactly one unit of small configurations is assigned to Mi. We choose exactly one configuration-machine pair (m, C) with probability xm,C and assign the jobs in configuration C to machine m. Since the solution x′ is 3-relaxed, a direct application of Chernoff bounds (Lemma 13) shows that no job is assigned to more than O(log n/ log log n) machines with high probability. Since each configuration has load T, whp this gives a β-relaxed assignment with γ = 1 and β = O(log n/ log log n). By the framework discussed above this implies the claimed approximation.
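The rounding step in this proof can be sketched as below. It is a minimal illustration, assuming each super-machine is given as a list of (machine, configuration, weight) triples whose weights sum to 1; the function name `round_super_machines` and this input layout are assumptions made here, not part of the paper.

```python
import random
from collections import Counter

def round_super_machines(super_machines, rng=random):
    """Pick one configuration-machine pair per super-machine.

    super_machines: list of lists of (machine, config, weight) triples, where
    config is a frozenset of small jobs and the weights in each inner list
    sum to 1 (the fractional amounts x_{m,C}).
    Returns (chosen, beta): chosen[i] is the selected (machine, config) pair,
    and beta is the largest number of chosen configurations sharing one job.
    """
    chosen = []
    usage = Counter()
    for pairs in super_machines:
        weights = [w for _, _, w in pairs]
        # Sample one pair with probability proportional to its weight.
        machine, config, _ = rng.choices(pairs, weights=weights, k=1)[0]
        chosen.append((machine, config))
        usage.update(config)
    beta = max(usage.values(), default=0)
    return chosen, beta
```

The returned `beta` is the relaxation parameter actually realised by one random draw; the proof bounds it by O(log n/ log log n) with high probability.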

6. IMPROVING THE GUARANTEE

The goal in the rest of the paper will be to improve the

approximation guarantee to O(log log m/ log log log m). In

the proof of Theorem 1, choosing β = O(log n/ log log n)

ensured that with high probability no job is assigned to more

than β machines and hence γ = 1. In this section, we will

show how to reduce β significantly by allowing γ to be a

constant larger than 1.

We begin by showing that at the expense of an O(γ) =

O(1) factor loss in the approximation ratio, we can assume

that every small job has the same size. This will allow us to

recast our problem as that about certain set-systems. We

will define this problem formally in Section 6.2 and study it

in Sections 6.3-6.5.

6.1 Making small job sizes uniform

Lemma 8. Given our algorithmic framework described in Section 5.3, at the loss of an O(γ) factor in the approximation ratio, we can assume that all small jobs have an identical size of εT/n.

Proof. First, we can assume that the size of each job is an integral multiple of εT/n for a small enough ε. This affects the load on any machine by at most εT. We now split each job into atoms, each of which has size εT/n, and treat each atom as a separate job.

Now suppose we are given a β-relaxed assignment of atoms to super-machines, and some machine in each super-machine is assigned atoms with total load at least T/γ. To map this solution back to the original instance, we assign a job j to machine m if m contains more than a 1/(2γ) fraction of the atoms of j. Clearly, no job j is assigned to more than 2γβ machines, as otherwise there would be some atom of j that is assigned to more than β machines, contradicting our assumption that we had a β-relaxed assignment of atoms. Moreover, if a machine m is assigned atoms with cumulative load at least T/γ, a simple averaging argument shows that at least T/(2γ) of the load on m will be made up by atoms of jobs j which have more than a 1/(2γ) fraction of their atoms assigned to m. Thus the procedure for assigning jobs above gives a 2βγ-relaxed assignment of jobs where each machine has load at least T/(2γ). Thus by the framework in Section 5.3, this solution can be converted to a valid assignment with value T/(2γβ · 2γ) − T/α, which implies an O(γ²β) approximation.
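The mapping from atoms back to jobs used in this proof can be sketched as follows. This is an illustration under assumed data structures: the function name `atoms_to_jobs` and the representation of an atom assignment as a list of job identifiers per machine are hypothetical.

```python
from collections import Counter

def atoms_to_jobs(atom_assignment, atoms_per_job, gamma):
    """Map an assignment of atoms back to an assignment of whole jobs.

    atom_assignment: dict machine -> list of job ids, one entry per atom of
                     that job placed on the machine
    atoms_per_job  : dict job id -> total number of atoms the job was split into
    gamma          : the parameter gamma >= 1 of the framework
    A job j goes to machine m if m holds more than a 1/(2*gamma) fraction
    of the atoms of j.
    """
    job_assignment = {}
    for machine, atoms in atom_assignment.items():
        counts = Counter(atoms)
        job_assignment[machine] = sorted(
            j for j, c in counts.items()
            if 2 * gamma * c > atoms_per_job[j]
        )
    return job_assignment
```

With γ = 1, a job is kept on a machine only if that machine holds more than half of its atoms.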

6.2 Auxiliary Problem

Given Lemma 8 above, each small job has the same size

and hence we will ignore its size and treat it as an element

of some set. We will now recast the problem above as one about certain set systems. Once we complete this description, we will completely abandon the notion of machines, jobs and configurations.

Let k, l, p and η be positive integers. Let Si,j be a collection of sets indexed by i and j, where i = 1, . . . , p and j = 1, . . . , l, over some ground set U of elements. We say that these sets define a (k, l, p, η)-system if the following properties hold:

1. Each set Si,j has cardinality k.

2. The sets are partitioned into p collections C1, . . . , Cp, where Ci = {Si,1, . . . , Si,l}.

3. Each element u ∈ U occurs in at most ηl of the sets S1,1, . . . , Sp,l.
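The three properties can be checked mechanically. The sketch below reads the occurrence bound in property 3 as η·l, as the name (k, l, p, η)-system suggests; the function name `is_system` and the list-of-lists input format are assumptions made for illustration.

```python
from collections import Counter

def is_system(collections, k, l, eta):
    """Check the three properties of a (k, l, p, eta)-system.

    collections: list of p collections, each a list of l sets over the
    ground set; collections[i][j] plays the role of S_{i,j}.
    """
    occurrences = Counter()
    for sets in collections:
        if len(sets) != l:          # property 2: l sets per collection
            return False
        for s in sets:
            if len(s) != k:         # property 1: every set has size k
                return False
            occurrences.update(s)
    # property 3: each element lies in at most eta * l of the sets
    return all(c <= eta * l for c in occurrences.values())
```

Here p is implicit as `len(collections)`.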

We claim that the configuration LP solution corresponding to super-machines can be viewed as a (k, l, p, η) system.

Observation 1. The configuration LP solution corresponding to super-machines can be viewed as a (k, l, p, η)-system with k = n/ε, l = n + m, p equal to the number of super-machines and η = 3.

Proof. We view each small job as an element u of the

ground set U , each super-machine Mi as the collection Ci ,

and each configuration-machine pair that occurs to an extent

of 1/(n + m) (by Lemma 7) in Mi as a set Si,j. That η = 3 also follows from Lemma 7.

We now recast our problem.

Definition 1 ((γ, β)-good function). Let f be a function from {1, . . . , p} to {1, . . . , l}. For γ ≥ 1 and β ≥ 1, we say that f is (γ, β)-good if it satisfies the following properties:

1. For each i = 1, . . . , p, there is a subset $S'_{i,f(i)}$ of $S_{i,f(i)}$ such that $|S'_{i,f(i)}| \ge k/\gamma$.

2. No element u ∈ U appears in more than β of the sets in the collection $\{S'_{1,f(1)}, S'_{2,f(2)}, \ldots, S'_{p,f(p)}\}$.

Note that by Lemma 8 and the framework in Section 5.3, finding a (γ, β)-good function f and the associated sets $S'_{i,f(i)}$ gives an $O(\gamma^2\beta)$ approximation to the Santa Claus problem. Our main theorem (proved later) is the following:

Theorem 2. Given any (k, l, p, η)-system and any ε > 0, there exists a $(1/(1-\epsilon),\ O(\eta \log\log p/\log\log\log p))$-good function f. Moreover, f and the subsets $S'_{i,f(i)}$ for i = 1, . . . , p can be found in polynomial time with high probability.

In our setting, the number of super-machines p is no more than the number of machines m and η = 3, and hence the theorem implies an O(log log m/ log log log m) approximation for the restricted assignment case.

6.3 Certifying (γ, β)-good functions and their properties

Note that to determine if a function f is (γ, β)-good, we need to find the subsets $S'_{i,f(i)}$ that satisfy the properties in Definition 1. In this section, we first show that this can be done in polynomial time by solving a max-flow problem. In particular,

Lemma 9. Given a function f : {1, . . . , p} → {1, . . . , l}, and parameters γ and β, there is a polynomial time algorithm to determine whether f is (γ, β)-good. Moreover, we can determine the sets $S'_{i,f(i)}$ in polynomial time.

Proof. We construct the following network (please see

Figure 2) corresponding to f and the set system: We have a

directed bipartite graph with the vertex sets V = {1, . . . , p}

corresponding to C1 , . . . , Cp and U = {1, . . . , |U |} corresponding to the ground elements. There is a directed edge

with capacity 1 from v ∈ V to u ∈ U if u ∈ Sv,f (v) . There is

a source vertex s and sink vertex t. For each vertex v ∈ V ,

there is a directed edge (s, v) with capacity k/γ and for each vertex

u ∈ U there is a directed edge (u, t) with capacity β.

Consider the maximum flow from s to t subject to the

edge capacities. We claim that this network has a maximum

flow of value exactly kp/γ if and only if f is (γ, β)-good. If f is (γ, β)-good, then consider the solution that transmits one unit of flow on each edge (v, u) such that v ∈ V and $u \in S'_{v,f(v)}$ (taking each $S'_{v,f(v)}$ to have exactly k/γ elements, by discarding extra elements if necessary). The flows on the sets of edges (s, v) and (u, t) are determined by the flow conservation constraints. Since f is (γ, β)-good, by definition, it follows that no edge (u, t) has flow more than β and that each edge (s, v) has flow exactly k/γ, and hence the flow is feasible.

Conversely, consider a flow with value kp/γ. As there are p edges of the type (s, v), each edge (s, v) must have flow exactly equal to its capacity k/γ. Since the flow is integral, for each v there are exactly k/γ edges corresponding to elements of $S_{v,f(v)}$ with flow 1. Let $S'_{v,f(v)}$ be the subset corresponding to these edges. The capacity constraints on the edges (u, t) ensure that no ground element u appears more than β times in $\{S'_{1,f(1)}, S'_{2,f(2)}, \ldots, S'_{p,f(p)}\}$.
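The network construction in this proof can be turned into a small certification routine. The sketch below is illustrative only: it assumes that k/γ is an integer (passed as `need`), uses a plain breadth-first augmenting-path max-flow rather than any particular algorithm from the paper, and the name `certify_good` is hypothetical.

```python
from collections import deque

def certify_good(chosen_sets, need, beta):
    """Decide whether subsets of size `need` with congestion <= beta exist.

    chosen_sets: list of p sets; chosen_sets[v] plays the role of S_{v,f(v)}
    need       : integer source capacity (k/gamma, assumed integral here)
    beta       : integer sink capacity
    Returns the list of subsets S'_{v,f(v)} if f is good, otherwise None.
    """
    p = len(chosen_sets)
    elems = sorted(set().union(*chosen_sets))
    index = {u: p + 1 + i for i, u in enumerate(elems)}
    source, sink = 0, p + 1 + len(elems)
    cap = [dict() for _ in range(sink + 1)]   # residual capacities

    def add_edge(a, b, c):
        cap[a][b] = cap[a].get(b, 0) + c
        cap[b].setdefault(a, 0)               # reverse residual edge

    for v, s in enumerate(chosen_sets):
        add_edge(source, v + 1, need)
        for u in s:
            add_edge(v + 1, index[u], 1)
    for u in elems:
        add_edge(index[u], sink, beta)

    flow = 0
    while True:
        # Breadth-first search for an augmenting path in the residual graph.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            a = queue.popleft()
            for b, c in cap[a].items():
                if c > 0 and b not in parent:
                    parent[b] = a
                    queue.append(b)
        if sink not in parent:
            break
        # Capacities are integers, so pushing one unit along the path is valid.
        b = sink
        while parent[b] is not None:
            a = parent[b]
            cap[a][b] -= 1
            cap[b][a] += 1
            b = a
        flow += 1

    if flow < p * need:
        return None
    # An element u belongs to S'_v exactly when the edge (v, u) is saturated.
    return [{u for u in s if cap[v + 1][index[u]] == 0}
            for v, s in enumerate(chosen_sets)]
```

For example, with sets {1, 2} and {2, 3}, requiring both full sets (`need = 2`) fails for `beta = 1` because element 2 would be used twice, but succeeds for `beta = 2`.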

The network characterization in the proof of Lemma 9

above also gives the following important characterization of

functions that are not (γ, β)-good.

Lemma 10. Let f be a function from {1, . . . , p} to {1, . . . , l}. If f is not (γ, β)-good, then there exists a subset of configurations Ṽ ⊂ V = {1, . . . , p} and a subset Ũ of ground elements such that

1. Each element in Ũ occurs at least (1 − 1/γ)β/2 times in {Si,f(i) : i ∈ Ṽ}.

2. The size of Ũ is at least $(1-1/\gamma)^2 \beta k |\tilde V| / (2\eta^2 l^2)$. In particular, the number of vertices in Ũ is linear in the number of elements in Ṽ.

Proof. Consider the network graph in Lemma 9 corresponding to γ, β and f. Since f is not (γ, β)-good, by Lemma 9 the maximum flow in the network above is strictly less than kp/γ. Thus there is some mincut $(C, \bar C)$ with value strictly less than kp/γ. Let C be the side of the cut that contains the source s. Let V′ = V ∩ C and U′ = U ∩ C. We refer the reader to Figure 2 for clarity. Note that V′ ≠ ∅, as otherwise each of the edges (s, v) must lie in the cut and hence the cut has size at least kp/γ, which contradicts that C is a mincut. Similarly, U′ ≠ ∅, as otherwise any such cut must have capacity at least kp/γ (because each vertex v contributes the edge (s, v) or all the edges (v, u), both of which contribute at least k/γ to the cut size).

Let v be an arbitrary vertex in V′. Suppose we remove v from C and move it to $\bar C$. This adds k/γ capacity due to the edge (s, v) to the cut and removes e(v, U \ U′) capacity from the cut. Here e(v, U \ U′) denotes the number of edges from v to the set U \ U′. As $(C, \bar C)$ is a mincut, it must be that k/γ − e(v, U \ U′) ≥ 0. Summing over all v in V′, it follows that

$$k|V'|/\gamma - e(V', U \setminus U') \ge 0.$$

Here e(V′, U′) denotes the number of edges from V′ to U′. As each v ∈ V has exactly k edges to U it follows that e(v, U \ U′) = k − e(v, U′) and hence the above inequality can be written as

$$e(V', U') \ge (1 - 1/\gamma)\,k\,|V'| \qquad (7)$$

Moreover as any vertex in U has in-degree at most ηl, it

follows that

$$|U'| \ge \frac{e(V', U')}{\eta l} \ge \frac{(1 - 1/\gamma)\,k\,|V'|}{\eta l} \qquad (8)$$

We now apply a similar argument to U ′ . Let u be an

arbitrary vertex in U ′ and suppose we remove u from C.

This reduces the capacity by β as the edge (u, t) is no longer

in the cut, and it increases the cut capacity by e(V ′ , u). As

C is a mincut, it must be that β − e(V′, u) ≤ 0. Summing

over all u ∈ U ′ , we get that

β|U ′ | − e(V ′ , U ′ ) ≤ 0

As each v ∈ V has k outgoing edges to U , it follows that

e(V ′ , U ′ ) ≤ k|V ′ | and hence

$$|U'| \le e(V', U')/\beta \le k|V'|/\beta \qquad (9)$$

By equations (7) and (9) it follows that $e(V', U')/|U'| \ge \beta(1 - 1/\gamma)$ and hence a vertex in U′ has on average at least β(1 − 1/γ) incoming edges from V′. Since the maximum in-degree of any vertex in U is at most ηl, by a simple averaging argument it follows that there are at least (1 − 1/γ)β|U′|/(2ηl) vertices in U′ that have at least (1 − 1/γ)β/2 incoming edges from V′. By equation (8) the number of such vertices is at least $(1-1/\gamma)^2 \beta k |V'|/(2\eta^2 l^2)$. The desired claim follows by choosing Ũ to be the set of these vertices and choosing Ṽ = V′.
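The averaging step at the end of this proof can be spelled out as follows. Write d = β(1 − 1/γ) for the lower bound on the average in-degree, and N for the number of vertices in U′ with at least d/2 incoming edges from V′ (both symbols are introduced here only for illustration). Using that every in-degree is at most ηl:

```latex
d\,|U'| \;\le\; e(V', U')
\;\le\; N \cdot \eta l + \bigl(|U'| - N\bigr)\cdot \frac{d}{2}
\;\le\; N\,\eta l + \frac{d\,|U'|}{2}
\quad\Longrightarrow\quad
N \;\ge\; \frac{d\,|U'|}{2\eta l}
  \;=\; \frac{(1 - 1/\gamma)\,\beta\,|U'|}{2\eta l}.
```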

6.4 Further properties of the auxiliary problem

Before we can prove our main result, we need two other

facts.

Figure 2: The network corresponding to the proofs of Lemmas 9 and 10.

Lemma 11. Let ε < 1/2. Without loss of generality, we can assume that l = O(log p). In particular, given a (k, l, p, η)-system G, we can construct a $((1-\epsilon)k,\ \log p/\epsilon^2,\ p,\ 6\eta)$-system G′ with high probability.

Proof. For each $C_i$, choose $\log p/\epsilon^2$ sets $S_{i,j}$ randomly with repetition. Let these sampled sets be denoted by $S'_{i,j}$ and call this set system G′. Consider an element u ∈ U. The expected number of occurrences of u in the sets $S'_{i,j}$ is at most $\eta \log p/\epsilon^2$. We call u bad if it lies in more than $6\eta \log p/\epsilon^2$ sets $S'_{i,j}$. By the Chernoff bound of Lemma 14, the probability that u is bad is at most $e^{-5\eta \log p/\epsilon^2 \cdot 1/5} = e^{-\eta \log p/\epsilon^2} < 1/p^4$.

Consider a particular set $S'_{i,j}$ in G′. As $|S'_{i,j}| = k$, the expected number of bad elements in $S'_{i,j}$ is at most $k \cdot (1/p^4)$. Thus by Markov's inequality the probability that $S'_{i,j}$ contains more than εk bad elements is at most $1/(\epsilon p^4)$. As there are at most $p \log p/\epsilon^2$ sets $S'_{i,j}$, it follows that with high probability (inversely polynomial in p) each set $S'_{i,j}$ contains at least (1 − ε)k non-bad elements. By the properties above, G′ is a $((1-\epsilon)k,\ \log p/\epsilon^2,\ p,\ 6\eta)$-system.
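The sampling argument can be sketched in code as follows. This is an illustration under assumptions: the function name `sparsify` is hypothetical, natural logarithms are used, the number of samples is rounded up to an integer, and the final trimming of every set to exactly (1 − ε)k elements is omitted.

```python
import math
import random
from collections import Counter

def sparsify(collections, eta, eps, rng=random):
    """Sample ceil(log p / eps^2) sets per collection and drop bad elements.

    collections: list of p lists of sets (the sets S_{i,j} of a system)
    Returns (cleaned, bad): cleaned[i] is the list of sampled sets with bad
    elements removed, and bad is the set of elements that occurred in more
    than 6 * eta * log p / eps^2 sampled sets.
    """
    p = len(collections)
    l_new = max(1, math.ceil(math.log(p) / eps ** 2))
    # Sample with repetition, independently for each collection.
    sampled = [[rng.choice(sets) for _ in range(l_new)] for sets in collections]
    counts = Counter(u for sets in sampled for s in sets for u in s)
    threshold = 6 * eta * math.log(p) / eps ** 2
    bad = {u for u, c in counts.items() if c > threshold}
    cleaned = [[set(s) - bad for s in sets] for sets in sampled]
    return cleaned, bad
```

The lemma guarantees that, with high probability, removing the bad elements costs each sampled set at most an ε fraction of its elements.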

Next we state a result due to Leighton et al. [8] about

good functions with γ = 1. This result is useful when k is

small (for large k the guarantee is essentially similar to that

obtained by randomized rounding). The existential statement of this result follows by applying the Lovász Local Lemma

to the set system where we define two sets Si,j and Sk,l to

be dependent if they share an element. [8] showed how this

can be derandomized to give a polynomial time algorithm.

Their result was originally stated in the context of low congestion routings, but it can be rephrased as the following in

our context.

Theorem 3 (Leighton et al., Theorem 3.1 in [8]). For every (k, l, p, η)-system, there exists a polynomial time algorithm to find a (1, O(η log kl/ log log kl))-good function f.

6.5 The Algorithm

We are now ready to prove Theorem 2. Let G be a

(k, l, p, η)-system. By Lemma 11, we can assume that l =

O(log p). We also note that the interesting case is only when

η ≤ log p; for η > log p the result easily follows by randomized rounding. Recall that for our application η = 3.

Our algorithm chooses a small random sample Us of the

ground elements U , such that if we restrict G to Us , we

obtain a (k′ , l, p, η) system where k′ is polylogarithmic in

p. We apply Theorem 3 to obtain a good function f with

γ = 1. We then show that with high probability (over the

random choice of Us ), the function f is also good for G with

γ = 1/(1 − ε).

Our algorithm is the following:

1. Obtain a random set Us by sampling each element u ∈ U with probability $\eta^2 l^2 \log^2 p/k$. Let Gs be the system obtained by restricting G to Us. For each set Si,j, the expected size of Si,j ∩ Us is $\eta^2 l^2 \log^2 p$. Thus, with high probability Gs is a (ks, l, p, η)-system where ks is polylogarithmic in p.

2. Apply the algorithm of Leighton et al. (Theorem 3) to the system Gs. Since ks = polylog p, this gives a (1, dη log log p/ log log log p)-good function f for Gs, where d is some constant.

3. Using the algorithm in Lemma 9, check whether the function f is (1/(1 − ε), 2dη log log p/(ε log log log p))-good for G. If yes, obtain the corresponding sets $S'_{i,f(i)}$ by applying Lemma 9. Otherwise reject.

We show that the above procedure succeeds with high probability, which implies Theorem 2.

Lemma 12. The algorithm above succeeds with high probability.

Proof. Let F denote the set of all functions f from {1, . . . , p} to {1, . . . , l}. Let β ≥ 1 be an arbitrary parameter. It suffices to show that with high probability, every f ∈ F that is not (1/(1 − ε), 2β/ε)-good for G is also not (1, β)-good for the random instance Gs.

If some f is not (1/(1 − ε), 2β/ε)-good for G, then by Lemma 10 (applied with 1 − 1/γ = ε), there is a subset Ṽ of V and a subset Ũ of U, such that $|\tilde U| \ge \epsilon^2 (2\beta/\epsilon) k |\tilde V|/(2\eta^2 l^2) = \epsilon\beta k|\tilde V|/(\eta^2 l^2)$ and each element in Ũ appears in at least (ε/2) · (2β/ε) = β sets in {Sv,f(v) : v ∈ Ṽ}. We call the collection S̃ = {Si,f(i) : i ∈ Ṽ} a bad witness for f, and the set Ũ the bad elements corresponding to this witness. We also say that the size of the bad witness S̃ is |Ṽ|. Note that the bad elements Ũ are completely determined by S̃.

The crucial observation is the following: Suppose f is not (1/(1 − ε), 2β/ε)-good for G, but is (1, β)-good for Gs (and hence the algorithm might reject and fail). Consider some bad witness S̃ for f; then it must be the case that none of the bad elements Ũ corresponding to S̃ were chosen in the sample Us. This is because, if some bad element in Ũ was chosen in the sample Us, then it would appear in more than β sets in {Sv,f(v) ∩ Us : v ∈ Ṽ}, and hence would ensure that f is not (1, β)-good for Gs.

We will show that with high probability, the event stated above does not happen. In particular, with high probability, for every possible bad witness S̃, some bad element corresponding to S̃ appears in Us.

Consider a particular bad witness S̃ of size x. By Lemma 10, the witness S̃ has at least $\epsilon\beta k x/(\eta^2 l^2)$ bad elements Ũ. Since Us is obtained by sampling each of these elements independently with probability $q = \eta^2 l^2 \log^2 p/k$ from U, the probability that none of the elements in Ũ is chosen is at most

$$(1-q)^{\epsilon\beta k x/(\eta^2 l^2)} = \left(1 - \eta^2 l^2 \log^2 p/k\right)^{\epsilon\beta k x/(\eta^2 l^2)} \le e^{-\epsilon\beta x \log^2 p}.$$

Since there are at most pl sets Si,j in the set system G, the total number of possible bad witnesses of size x is at most $\binom{pl}{x}$. Thus, the probability that our algorithm fails is at most

$$\sum_{x \ge 1} \binom{pl}{x} e^{-\epsilon\beta x \log^2 p} \le \sum_{x \ge 1} (pl)^x e^{-\epsilon\beta x \log^2 p}.$$

In our setting, since l = O(log p) and β = Θ(log log p/ log log log p) and hence greater than 1/ε, the quantity above can be bounded by

$$\sum_{x \ge 1} e^{-(\log^2 p - 2\log p)\,x},$$

which is less than inversely polynomial in p. Thus with high probability, if f is (1, β)-good for Gs, then it is (1/(1 − ε), 2β/ε)-good for G, which completes the proof.
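Step 1 of the algorithm (sampling the ground set and restricting the system) can be sketched as below. This is a rough illustration only: the name `restrict_to_sample` is hypothetical, natural logarithms are used, and capping the sampling probability at 1 is an assumption added here so that the code is well defined for small k.

```python
import math
import random

def restrict_to_sample(collections, k, eta, rng=random):
    """Sample each ground element with probability q and restrict all sets.

    q = min(1, eta^2 * l^2 * log(p)^2 / k), where l is the number of sets
    per collection and p the number of collections.
    Returns (sample, restricted) with restricted[i][j] = S_{i,j} & sample.
    """
    p = len(collections)
    l = len(collections[0])
    q = min(1.0, (eta * l * math.log(p)) ** 2 / k)
    ground = set().union(*(s for sets in collections for s in sets))
    # Each element is kept independently with probability q.
    sample = {u for u in sorted(ground) if rng.random() < q}
    restricted = [[s & sample for s in sets] for sets in collections]
    return sample, restricted
```

The restricted system would then be passed to the algorithm of Theorem 3, and the resulting function checked against the original system with the max-flow test of Lemma 9.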

7. CONCLUDING REMARKS

The Santa Claus problem remains poorly understood in

the general case, and a huge gap remains between the known

upper bound of O(n) and the lower bound of 2 on the approximation ratio.

Another natural question is whether there is an O(1) approximation in the restricted assignment case. Based on the

results of our paper, it suffices to show the following relation

between fractional and integral congestion:

Let C1 , . . . , Cp be collections of sets, Ci = {Si1 , . . . , Sil }

for i = 1, . . . , p, where each set Sij is a k-element subset of

some ground set such that each element appears in at most

l sets Sij (i.e. fractional congestion is 1).

Then, can we choose some set Si,f (i) ∈ Ci for each i =

1, . . . , p, such that we can find Si′ ⊆ Si,f (i) with the property

that |Si′ | = Ω(k) and that each element occurs in at most

O(1) sets in {S1′ , . . . , Sp′ }?

8. ACKNOWLEDGMENTS

We would like to thank Tracy Kimbrel for suggesting the

problem name, and Nicole Immorlica and Vahab Mirrokni

for bringing the problem to our attention. We would also

like to thank Don Coppersmith for stimulating discussions

about the problem.

9. REFERENCES

[1] N. Alon and J. Spencer. The Probabilistic Method.

John Wiley and Sons, New York, 2000.

[2] Y. Azar and L. Epstein. On-line machine covering.

Journal of Scheduling, 1(2):67–77, 1998.

[3] I. Bezakova and V. Dani. Allocating indivisible goods.

SIGecom Exchanges, 5.3, 2005.

[4] J. Csirik, H. Kellerer, and G. Woeginger. The exact

lpt-bound for maximizing the minimum completion

time. Operations Research Letters, 11(5):281–287,

1992.

[5] B. Deuermeyer, D. Friesen, and M. Langston.

Scheduling to maximize the minimum processor finish

time in a multiprocessor system. SIAM J. Algebraic

Discrete Methods, 3(2):190–196, 1982.

[6] D. Golovin. Max-min fair allocation of indivisible

goods. Technical Report, Carnegie Mellon University,

CMU-CS-05-144, 2005.

[7] N. Karmarkar and R. M. Karp. An efficient

approximation scheme for the one-dimensional

bin-packing problem. In Proceedings of Foundations

of Computer Science (FOCS), pages 312–320, 1982.

[8] T. Leighton, C. Lu, S. Rao, and A. Srinivasan. New

algorithmic aspects of the local lemma with

applications to routing and partitioning. SIAM J.

Comput., 31(2):626–641, 2001.

[9] J. K. Lenstra, D. B. Shmoys, and E. Tardos.

Approximation algorithms for scheduling unrelated

parallel machines. Mathematical Programming, Series

A, 46(2):259–271, 1990.

[10] R. Lipton, E. Markakis, E. Mossel, and A. Saberi. On

approximately fair allocations of indivisible goods.

ACM Conference on Electronic Commerce, 2004.

[11] D. B. Shmoys and E. Tardos. An approximation

algorithm for the generalized assignment problem.

Mathematical Programming, Series A, 62:461–474,

1993.

[12] Z. Tan, Y. He, and L. Epstein. Optimal on-line

algorithms for the uniform machine scheduling

problem with ordinal data. Information and

Computation, 196(1):57–70, 2005.

[13] G. Woeginger. A polynomial-time approximation

scheme for maximizing the minimum machine

completion time. Oper. Res. Lett., 20(4):149–154,

1997.

Technical Facts

We use the following version of Chernoff bounds as given on

page 267, Corollary A.1.10 and Theorem A.1.13, in [1].

Lemma 13. Suppose $X_1, \ldots, X_n$ are independent 0-1 random variables such that $\Pr[X_i = 1] = p_i$. Let $\mu = \sum_{i=1}^{n} p_i$ and $X = \sum_{i=1}^{n} X_i$. Then for any a > 0,

$$\Pr[X - \mu \ge a] \le e^{a - (a+\mu)\ln(1 + a/\mu)}.$$

Moreover, for any a > 0,

$$\Pr[X - \mu \le -a] \le e^{-a^2/(2\mu)}.$$

We will also need the following corollary.

Lemma 14.

$$\Pr[X - \mu \ge a] \le e^{-a \min(1/5,\ a/(4\mu))}.$$

Proof. Let x = μ/a. Then the right-hand side of the first bound in Lemma 13 can be written as $e^{-a((x+1)\ln(1+1/x) - 1)}$. Now, (x + 1) ln(1 + 1/x) − 1 is decreasing in x. At x = 2, its value is 3 ln(3/2) − 1 ≥ 1/5. For x > 2, it is at least $(x+1)\left(1/x - 1/(2x^2)\right) - 1 = 1/(2x) - 1/(2x^2) \ge 1/(4x)$.
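As a numerical sanity check of this proof (not a substitute for it), the inequality (x + 1) ln(1 + 1/x) − 1 ≥ min(1/5, 1/(4x)) can be evaluated on a grid of values of x = μ/a. The helper names `exponent` and `lower_bound` and the choice of grid are illustrative assumptions.

```python
import math

def exponent(x):
    """The function (x + 1) * ln(1 + 1/x) - 1 from the proof, for x > 0."""
    return (x + 1) * math.log1p(1 / x) - 1

def lower_bound(x):
    """The claimed lower bound min(1/5, 1/(4x))."""
    return min(1 / 5, 1 / (4 * x))

# Logarithmically spaced grid of x values from 1e-3 to 1e4.
grid = [10 ** (e / 10) for e in range(-30, 41)]
violations = [x for x in grid if exponent(x) < lower_bound(x)]
```

An empty `violations` list is consistent with the lemma on the sampled points; multiplying both sides by a recovers the exponent a · min(1/5, a/(4μ)).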
