Data Analysis 3


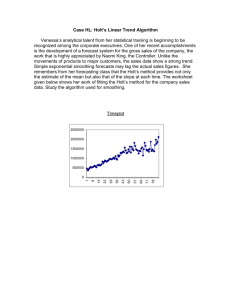

The summarising, tabulating and graphing of data provides a picture of what is happening within the measurements. However, it is often the case that looking beyond the collected data is as important. This is the difference between descriptive and inferential statistics mentioned in Chapter 1.

3.1 Trends and patterns

One common and valuable type of data is obtained when the measurement is repeated for an extended time or over a distance, so that the change in the value as a function of time or distance can be observed. This leads to the observation of trends or patterns in the data.

CLASS EXERCISE 3.1

What is the difference between a trend and a pattern? Give an example of each.

• Trend:
• Example:
• Pattern:
• Example:

These recognisable changes in the data can occur in a time context, where they might be rapid – for example, the change in pH in a river after a chemical spill – or over a long period of time – for example, global warming. They can also occur as a function of distance or any other variable that you care to think of.

CLASS EXERCISE 3.2

The graph on the following page shows data collected over a number of years, plotted by (i) joining the dots (solid line) and (ii) a line of best fit (dotted line). Consider the following questions:

• which is a more useful graph – join the dots or line of best fit?
• can you see any trend?
• what effect would it have on your certainty if you only had data from 2001, 2005, 2009 and 2013?
• what value do you think might occur in 2015? in 2030?

Time Series

The join-the-dots graph in Exercise 3.2 is known as a time series, a line graph where a measured variable is plotted against time. It is best if the time interval remains constant between successive data points, though it is not essential.
A time series can help identify patterns, which might be:

• a trend,
• a repeating cycle,
• random fluctuation
• a combination of all three

However, the natural variation in the measurements may cause the pattern to be blurred by “noise”, where there is so much up-and-down variation between successive points that it is not possible to see “the wood for the trees”. Figure 3.1 shows such an example.

FIGURE 3.1 A time series with substantial variation

Is there a trend in this data? Well, if you look carefully, you might believe that there is a small rise, but you would not feel very confident about drawing a line of best fit through the data. What needs to be done is to smooth the data – to clear some of the noise, so that the real pattern becomes clear. Figure 3.2 shows the same data as Figure 3.1, but after a smoothing procedure has been applied.

FIGURE 3.2 The time series from Figure 3.1 with smoothing

Smoothing data means the loss of the raw data, which is not a trivial loss. Any smoothed data should be clearly labelled as smoothed. You should not report smoothed data as raw data!

There are a variety of smoothing methods, some more complicated than others. We will look at one: the running means method.

THE RUNNING MEANS METHOD

This method requires that there are no gaps in the data – that each time interval between the data points is the same. The value that is plotted is the mean of a number of successive data points; how many is a matter of choice, but three and five are common. We will choose three here for simplicity.

EXAMPLE 3.1

Smooth the following data using 3-point running means.
| Data | Smoothing calculation | Smoothed value |
|------|-----------------------|----------------|
| 2.2 | (2.2 + 1.5) ÷ 2 * | 1.85 |
| 1.5 | (2.2 + 1.5 + 2.1) ÷ 3 | 1.93 |
| 2.1 | (1.5 + 2.1 + 3.5) ÷ 3 | 2.37 |
| 3.5 | (2.1 + 3.5 + 1.6) ÷ 3 | 2.40 |
| 1.6 | (3.5 + 1.6 + 2.8) ÷ 3 | 2.63 |
| 2.8 | (1.6 + 2.8 + 3.6) ÷ 3 | 2.67 |
| 3.6 | (2.8 + 3.6 + 3.2) ÷ 3 | 3.20 |
| 3.2 | (3.6 + 3.2 + 3.1) ÷ 3 | 3.30 |
| 3.1 | (3.2 + 3.1 + 2.9) ÷ 3 | 3.07 |
| 2.9 | (3.1 + 2.9) ÷ 2 * | 3.00 |

(*) The end values are calculated slightly differently because they don’t have another point on the “other” side.

The greater the number of points included in the running mean, the greater the amount of smoothing. Figure 3.3 shows the effect on the data in Figure 3.1 of using 5-point running means.

FIGURE 3.3 The time series from Figure 3.1 with 5-point running mean smoothing

SEASONAL PATTERNS

You have probably heard the term “seasonally adjusted” when unemployment figures are released. Many measurements have a variation which recurs at particular times of the year. January unemployment figures are always high because of the addition of school-leavers. Dissolved oxygen levels in a river will have a variation brought on by changes in the atmospheric temperature. The seasonal variation makes it difficult to see any trend in these figures. Various methods – most of them quite complex – are used to remove the seasonal component of the data. These are beyond the scope of this course.

Linear regression

This sounds complex and difficult. And it is if you don’t have a computer with a statistical or spreadsheet program handy. We will assume that you do, so most of the horrible calculations are avoided.

Linear regression involves the calculation of a line of best fit that links the x- and y-values for a number of data points. In its simplest form, it calculates the slope (m) and y-intercept (b) for a straight line – y = mx + b – which is of course what you use for working out sample concentrations from your calibration graphs.
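The two calculations described above, the 3-point running mean of Example 3.1 and the slope and y-intercept of a line of best fit, can be sketched in Python as follows. This is only an illustration: the function names `running_means_3` and `fit_line` are made up for this sketch, the line is fitted by ordinary least squares (the text does not say which method your spreadsheet uses), and the time index 1 to 10 is an assumption, since Example 3.1 gives no time values.

```python
def running_means_3(data):
    """3-point running means; each end value averages only the two points available."""
    smoothed = []
    last = len(data) - 1
    for i in range(len(data)):
        # Window is the point itself plus its neighbours, clipped at the ends
        window = data[max(0, i - 1): min(last, i + 1) + 1]
        smoothed.append(sum(window) / len(window))
    return smoothed


def fit_line(xs, ys):
    """Slope m and y-intercept b of y = mx + b by ordinary least squares (assumed method)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    m = sxy / sxx
    b = mean_y - m * mean_x
    return m, b


# Data from Example 3.1
data = [2.2, 1.5, 2.1, 3.5, 1.6, 2.8, 3.6, 3.2, 3.1, 2.9]
smoothed = running_means_3(data)
print([round(v, 2) for v in smoothed])

# Hypothetical, equally spaced time index (not given in the example)
times = list(range(1, len(data) + 1))
m, b = fit_line(times, data)
print(f"y = {m:.3f}x + {b:.3f}")
```

The smoothed values printed by the first call should match the table in Example 3.1. A 5-point version would follow the same idea with a wider window, at the cost of more end points needing special treatment.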
In Exercise 3.2, you drew the line of best fit by guesswork – not a very reliable method, I’m sure you will agree.

USING THE LINE-OF-BEST-FIT

One of the reasons for producing a line-of-best-fit is to determine x- or y-values where no data exists (for example, the fallout levels for 2030 in the earlier exercise). This is known as interpolation or extrapolation.

CLASS EXERCISE 3.3

What is the difference between interpolation and extrapolation?

The values obtained from interpolation and extrapolation are not perfect. The error in the y-value depends on how far you are extrapolating the data: the closer the data is to the extrapolation point, the lower the error, as shown in Figure 3.4.

FIGURE 3.4 Error in extrapolation from line of best fit (figure labels: line of best fit; error limits in line of best fit; error in y-estimate)

Statistical methods exist for estimating the error involved in an extrapolation.

3.2 Statistical tests

These are mathematical processes for making objective judgements and comparisons about data sets. They are not 100% accurate, and can only make an educated guess with an inbuilt error. They allow you to “answer” questions such as:

• is the average December temperature in Sydney over the last ten years significantly hotter than 50 years ago?
• has the level of pollution dropped significantly since the installation of new equipment?
• does this batch of Corn Flakes meet the standard for protein content?

The uncertainty comes about because there is inbuilt error and variation in measurement due to sampling and other factors. For example, looking at the last question, let’s say that the sample gives an average protein value of 7.92 %w/w with a variation (standard deviation) of 0.1 %w/w. The standard value is 7.9 to 8.0 %w/w. While the average is within the standard range, the variation takes some of the sample out of it. A statistical test can identify whether it is likely that all the Corn Flakes in this batch meet the standard or not.
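To see why an average inside the standard range does not settle the question, the Corn Flakes figures above can be put through a rough calculation. The sketch below is not a statistical test in itself. It assumes, purely for illustration, that the protein values follow a normal distribution (the text does not say this) and estimates what fraction of values would then fall between 7.9 and 8.0 %w/w.

```python
from statistics import NormalDist

mean, sd = 7.92, 0.1      # sample mean and standard deviation, %w/w (from the text)
low, high = 7.9, 8.0      # standard range, %w/w (from the text)

# Assumption for illustration only: protein values are normally distributed
protein = NormalDist(mu=mean, sigma=sd)

# Fraction of values expected to lie inside the standard range
fraction_in_range = protein.cdf(high) - protein.cdf(low)
print(f"Estimated fraction within the standard: {fraction_in_range:.2f}")
```

Under that assumption, well under half of the values would sit inside the range, even though the mean does.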
A useful example of a statistical test: The outliers test

Outliers are data points in a set that seem to be so different from the rest that they don’t belong, and should be deleted. Leaving them in the data set would change the mean and standard deviation. However, unless the measurement process for the suspect point is known to have a problem, you should not simply remove it on a whim. A simple procedure based on statistics exists to decide objectively whether the point can be eliminated.

EXAMPLE 3.2

Consider the dissolved oxygen levels below, which were collected at points around a dam site for the purpose of providing a typical measure of the DO.

9.21, 9.10, 9.13, 8.99, 9.05, 9.05, 9.25, 4.28, 8.95, 9.22

The 4.28 value is so different to the others that the question is: should it be included in the value for the typical DO level? The table below shows the effect of the outlier on the mean and standard deviation.

| | Mean | SD |
|---|---|---|
| With outlier | 8.62 | 1.53 |
| Without outlier | 9.11 | 0.11 |

The effect is obvious, and it may be that the low value was a consequence of an instrumental or operator error, not a very polluted pocket of water. Regardless of the cause, a reading of 8.62 doesn’t really reflect the typical DO for that water, so it may be that the point should be omitted.

Removing data should not be done without serious consideration of the intention of the data collection. If the purpose is to look for problems, then you obviously don’t eliminate these points. Even if you are expecting all the values to be close together, it is not appropriate to simply cross out a value that does not fit (or, even worse, not record it at all).

The Q-test for testing outliers

This is actually a simple statistical test. The process is:

1. calculate a test-value (Q in this case – see equation below) based on the data
2. compare this value to a table of values
3. make a judgement on the basis of the comparison

The test value (Q) is the difference between the suspected outlier and the nearest other value divided by the range of the data, as shown in the equation below.

$$Q = \frac{|v_o - v_n|}{r}$$

where $v_o$ is the value of the outlier, $v_n$ the value of the nearest data point and $r$ the range. The | | symbols mean that the difference is always positive.

The test score for Q is then compared to a table of values which have been calculated on the basis of statistical probabilities. Table 3.1 below gives a selection of these values.

Rule for discarding outlier

If the value of Q is greater than the table value, the outlier can be deleted.

TABLE 3.1 Q-test table values

| Number of data points | Table value |
|---|---|
| 5 | 0.73 |
| 6 | 0.64 |
| 7 | 0.59 |
| 8 | 0.54 |
| 9 | 0.51 |
| 10 | 0.48 |

Statistics can measure the likelihood that you are making an error with the test. In this case, there is a 4% chance that you will discard a valid point or keep an invalid one.

EXAMPLE 3.3

Can the 4.28 point from the DO data in Example 3.2 be eliminated?

From the DO data above, the value of Q is calculated to be:

Q = (8.95 – 4.28) ÷ (9.25 – 4.28) = 4.67 ÷ 4.97 = 0.94

The number of data points is 10, so the table value is 0.48. Since the test value of Q is greater than this, we can safely delete the 4.28 data point from our calculations.

EXERCISE 3.4

Identify the outliers in the following data sets, and determine whether they can be deleted.

| | Data values |
|---|---|
| (a) | 15, 22, 18, 6, 25, 19 |
| (b) | 0.75, 0.83, 0.53, 0.82, 0.76, 0.81, 0.69, 1.03 |
| (c) | 41.5, 46.2, 41.6, 42.0, 41.1, 42.1 |
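The three steps of the Q-test can also be sketched in Python, here applied to the DO data of Example 3.2. This is a minimal illustration rather than a general-purpose routine: the function name `q_test` is made up for the sketch, it handles only one suspected outlier at a time, and it only knows the table values listed in Table 3.1 (5 to 10 data points).

```python
# Table 3.1 values, keyed by number of data points
Q_TABLE = {5: 0.73, 6: 0.64, 7: 0.59, 8: 0.54, 9: 0.51, 10: 0.48}


def q_test(values, suspect):
    """Return (Q, table value, can_discard) for one suspected outlier."""
    others = list(values)
    others.remove(suspect)                      # drop one copy of the suspect value
    nearest = min(others, key=lambda v: abs(v - suspect))
    data_range = max(values) - min(values)      # range includes the suspect value
    q = abs(suspect - nearest) / data_range     # step 1: calculate the test value
    table_value = Q_TABLE[len(values)]          # step 2: look up the table value
    return q, table_value, q > table_value      # step 3: make the judgement


# DO data from Example 3.2
do_levels = [9.21, 9.10, 9.13, 8.99, 9.05, 9.05, 9.25, 4.28, 8.95, 9.22]
q, table_value, can_discard = q_test(do_levels, 4.28)
print(f"Q = {q:.2f}, table value = {table_value}, discard: {can_discard}")
# prints: Q = 0.94, table value = 0.48, discard: True
```

The result agrees with the hand calculation in Example 3.3. The code only says whether the point can be deleted; whether it should be still depends on the purpose of the data collection, as discussed above.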
