Document 12756735

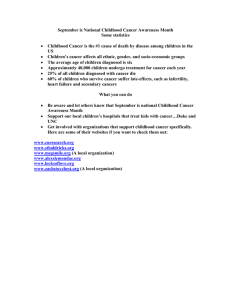

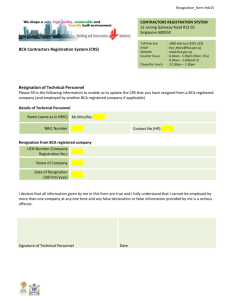
