UNIVERSAL BIASES IN PHONOLOGICAL LEARNING ACTL SUMMER SCHOOL, DAY 5 JAMIE WHITE (UCL)



OVERVIEW OF MAXENT MODELS

PLAN

• 1. What is a maximum entropy model (and specifically, as

applied in phonology)?

• 2. Demystify how the model works by working through

mini-cases by hand!

• 3. Show you how the MaxEnt Grammar Tool works, in case

you want to try it on your own data.

• 4. Look at implementing a substantive (P-map) bias using

the prior.

WHAT IS MAXENT?

• Maximum entropy models:

• General class of statistical classification model.

• Mathematically very similar to logistic regression.

• Wide, longstanding use in many fields.

• As applied to phonological grammars:

• Constraint-based.

• A variety of Harmonic Grammar.

• Comes with a learning algorithm with an objective function.

• Probabilistic ⟶ readily able to account for variation.

• Priors ⟶ allow us to implement learning biases.

• Early application to phonology: Goldwater & Johnson 2003.

• Some other refs: Wilson 2006, Hayes & Wilson 2008, Hayes et al.

2009, White 2013.

‘REGULAR’ HARMONIC GRAMMAR

(NON-PROBABILISTIC)

• Roots go back to pre-OT (e.g. Legendre et al. 1990), with a

resurgence in recent years (e.g. Pater 2009, Potts et al. 2009).

• Main difference from OT: Method of evaluation

• Rather than being strictly ranked, each constraint is associated with a

weight.

• To determine the winning candidate:

• Take the sum of the weighted constraint violations.

• Candidate with the lowest penalty (i.e. most Harmony) is the winner.

• Perhaps the most noteworthy property of any harmonic

grammar: the “ganging” property.

• Multiple violations of lower weighted constraints can ‘gang up’ to

overtake a stronger constraint.

EXAMPLE FROM JAPANESE

• Phonotactic restrictions in native Japanese words:

• No words with two voiced obstruents (“Lyman’s Law”): *[baɡa].

• No voiced obstruent geminates: [tt] ok, but *[dd].

• Violations possible in loanwords.

• Two voiced obstruents ok:

• [boɡiː] ‘bogey’, [doɡɯma] ‘dogma’

• Voiced geminates ok:

• [habbɯɾɯ] ‘Hubble’, [webbɯ] ‘web’

• But, both cannot be violated in the same word:

• [betto] ‘bed’, [dokkɯ] ‘dog’

Kawahara 2006, Language

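CLASSICAL OT FAILS AT THIS

| /boɡiː/ | IDENT(voice) | *D…D |
|---|---|---|
| ☞ boɡiː | | * |
| poɡiː | *! | |
| bokiː | *! | |

| /webbɯ/ | IDENT(voice) | *VOICEDGEM |
|---|---|---|
| ☞ webbɯ | | * |
| weppɯ | *! | |

| /doɡɡɯ/ | IDENT(voice) | *D…D | *VOICEDGEM |
|---|---|---|---|
| 💣 doɡɡɯ | | * | * |
| ☹ dokkɯ | *! | | |
| toɡɡɯ | *! | | * |

(💣 = candidate wrongly selected as the winner; ☹ = attested form that wrongly loses)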

HARMONIC GRAMMAR ANALYSIS

Weights: IDENT(voice) = 3, *D…D = 2, *VOICEDGEM = 2. Each cell shows the weighted violation; the total penalty is the sum across the row.

| /boɡiː/ | TOTAL PENALTY | IDENT(voice) 3 | *D…D 2 |
|---|---|---|---|
| ☞ boɡiː | 2 | | 2 |
| poɡiː | 3 | 3 | |
| bokiː | 3 | 3 | |

| /webbɯ/ | TOTAL PENALTY | IDENT(voice) 3 | *VOICEDGEM 2 |
|---|---|---|---|
| ☞ webbɯ | 2 | | 2 |
| weppɯ | 3 | 3 | |

| /doɡɡɯ/ | TOTAL PENALTY | IDENT(voice) 3 | *D…D 2 | *VOICEDGEM 2 |
|---|---|---|---|---|
| doɡɡɯ | 4 | | 2 | 2 |
| ☞ dokkɯ | 3 | 3 | | |
| toɡɡɯ | 5 | 3 | | 2 |
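The Harmonic Grammar evaluation can be sketched in a few lines of Python. This is only an illustration: the weights (3, 2, 2) and violation marks are the ones from the tableaux, while the function names `penalty` and `hg_winner` are made up for this sketch.

```python
# Weights from the Harmonic Grammar tableaux.
WEIGHTS = {"IDENT(voice)": 3, "*D…D": 2, "*VOICEDGEM": 2}

# Violation counts per candidate, as marked in the tableaux.
TABLEAUX = {
    "/boɡiː/": {
        "boɡiː": {"*D…D": 1},
        "poɡiː": {"IDENT(voice)": 1},
        "bokiː": {"IDENT(voice)": 1},
    },
    "/webbɯ/": {
        "webbɯ": {"*VOICEDGEM": 1},
        "weppɯ": {"IDENT(voice)": 1},
    },
    "/doɡɡɯ/": {
        "doɡɡɯ": {"*D…D": 1, "*VOICEDGEM": 1},
        "dokkɯ": {"IDENT(voice)": 1},
        "toɡɡɯ": {"IDENT(voice)": 1, "*VOICEDGEM": 1},
    },
}


def penalty(violations, weights):
    """Sum of weighted constraint violations for one candidate."""
    return sum(weights[c] * n for c, n in violations.items())


def hg_winner(candidates, weights):
    """Candidate with the lowest total penalty (i.e. most Harmony)."""
    return min(candidates, key=lambda cand: penalty(candidates[cand], weights))


for underlying, candidates in TABLEAUX.items():
    print(underlying, "->", hg_winner(candidates, WEIGHTS))
```

For /doɡɡɯ/, the two weaker markedness constraints gang up (2 + 2 = 4) to outweigh IDENT(voice) (3), which strict ranking cannot express.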

MAXENT GRAMMAR: A PROBABILISTIC

VERSION OF HARMONIC GRAMMAR

GENERATING PROBABILITIES FROM

THE GRAMMAR

• Each constraint is associated with a non-negative weight.

• 1. First, take the sum of the weighted constraint violations

for each candidate.

• This is the Penalty for each candidate.

• So far, this is the same as regular HG.

• 2. Take e^(–penalty) for each candidate.

• 3. For each candidate, divide its e^(–penalty) by the sum for all candidates (i.e. take each candidate’s proportion of the total).

• This is the output probability of each candidate.
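The three steps can be collapsed into a single formula. The notation is an assumption made for this sketch rather than something taken from the slides: $w_i$ is the weight of constraint $i$, $C_i(x, y)$ is the number of times candidate $y$ for input $x$ violates it, and $Y(x)$ is the candidate set for $x$.

```latex
P(y \mid x) =
  \frac{\exp\left(-\sum_i w_i \, C_i(x, y)\right)}
       {\sum_{y' \in Y(x)} \exp\left(-\sum_i w_i \, C_i(x, y')\right)}
```

The exponent in the numerator is the candidate's (negated) penalty from step 1, the exponential is step 2, and the denominator is the normalization in step 3.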

LET’S TRY WITH THE JAPANESE CASE

• Assume the following input probabilities for three cases:

• Words like /doggɯ/: 57.4% devoiced to [dokkɯ]

• Words like /webbɯ/: 3.7% devoiced to [weppɯ]

• Words like /bogii/: 0.1% devoiced to [bokii]

• Weights learned by the grammar:

• IDENT(voice): 11.36

• *D…D: 3.56

• *VOICEDGEM: 8.10

GENERATING PROBABILITIES FROM A GRAMMAR

Weights after optimization: IDENT(voice) 11.36, *D…D 3.56, *VOICEDGEM 8.10

Based on these weights, let’s figure out the predicted probabilities:

| /beddo/ | Predicted probability | e^(–penalty) | Total Penalty | IDENT(voice) 11.36 | *D…D 3.56 | *VOICEDGEM 8.10 |
|---|---|---|---|---|---|---|
| beddo | .424 | .0000086 | 11.66 | | 3.56 | 8.10 |
| betto | .576 | .0000117 | 11.36 | 11.36 | | |
| peddo | ~0 | ~0 | 19.46 | 11.36 | | 8.10 |
| petto | ~0 | ~0 | 22.72 | 22.72 | | |
| Sum | | .0000203 | | | | |

| /webbu/ | Predicted probability | e^(–penalty) | Total Penalty | IDENT(voice) 11.36 | *D…D 3.56 | *VOICEDGEM 8.10 |
|---|---|---|---|---|---|---|
| webbu | .963 | .0003035 | 8.10 | | | 8.10 |
| weppu | .037 | .0000117 | 11.36 | 11.36 | | |
| Sum | | .0003152 | | | | |

| /bogii/ | Predicted probability | e^(–penalty) | Total Penalty | IDENT(voice) 11.36 | *D…D 3.56 | *VOICEDGEM 8.10 |
|---|---|---|---|---|---|---|
| bogii | .999 | .0284389 | 3.56 | | 3.56 | |
| bokii | ~0 | .0000117 | 11.36 | 11.36 | | |
| pogii | ~0 | .0000117 | 11.36 | 11.36 | | |
| pokii | ~0 | ~0 | 22.72 | 22.72 | | |
| Sum | | .0284623 | | | | |
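The hand calculation for the Japanese case can be checked with a short Python sketch. The weights and violation profiles come from the slides; the function name `maxent_probs` is hypothetical. Because the slides round e^(–penalty) before dividing, the computed probabilities may differ from the hand-worked ones in the third decimal place.

```python
import math

# Learned weights reported on the slides.
WEIGHTS = {"IDENT(voice)": 11.36, "*D…D": 3.56, "*VOICEDGEM": 8.10}

# Violation counts for each candidate of each input.
TABLEAUX = {
    "/beddo/": {
        "beddo": {"*D…D": 1, "*VOICEDGEM": 1},
        "betto": {"IDENT(voice)": 1},
        "peddo": {"IDENT(voice)": 1, "*VOICEDGEM": 1},
        "petto": {"IDENT(voice)": 2},
    },
    "/webbu/": {
        "webbu": {"*VOICEDGEM": 1},
        "weppu": {"IDENT(voice)": 1},
    },
    "/bogii/": {
        "bogii": {"*D…D": 1},
        "bokii": {"IDENT(voice)": 1},
        "pogii": {"IDENT(voice)": 1},
        "pokii": {"IDENT(voice)": 2},
    },
}


def maxent_probs(candidates, weights):
    """Map each candidate to its MaxEnt probability."""
    # Step 1: total penalty = sum of weighted violations.
    penalties = {
        cand: sum(weights[c] * n for c, n in viols.items())
        for cand, viols in candidates.items()
    }
    # Step 2: e^(-penalty) for each candidate.
    scores = {cand: math.exp(-p) for cand, p in penalties.items()}
    # Step 3: each candidate's share of the total.
    total = sum(scores.values())
    return {cand: s / total for cand, s in scores.items()}


PROBS = {ur: maxent_probs(cands, WEIGHTS) for ur, cands in TABLEAUX.items()}

for underlying, probs in PROBS.items():
    for cand, p in probs.items():
        print(f"{underlying} -> {cand}: {p:.3f}")
```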

OK…WE KNOW HOW TO GET THE

PROBABILITIES FROM A LEARNED

GRAMMAR.

NOW, HOW IS THE GRAMMAR

LEARNED?

OBJECTIVE FUNCTION

• In MaxEnt, the goal of learning is to maximize an “objective” function. Once you reach this goal, you have found the “best” grammar.

• The objective function to be maximized:

(32) $$\left[\sum_{j=1}^{n} \ln P(y_j \mid x_j)\right] - \left[\sum_{i=1}^{m} \frac{(w_i - \mu_i)^2}{2\sigma_i^2}\right]$$

• First term: the log likelihood of the data, i.e. maximize the (log) probability of the data (all the observed input-output pairs $x_j$, $y_j$).

• Second term: minus a penalty, i.e. apply a penalty for constraints gone wild, according to how much they vary from a preferred weight (= the prior).

• So the model is searching for the set of weights that maximize the likelihood of the observed data (again, vis-à-vis the prior), which also minimizes the likelihood of the unobserved data.

• This set of weights can be found using any standard optimization strategy (Berger et al., 1996).

WHAT DOES IT MEAN TO MAXIMIZE THE (LOG) LIKELIHOOD?

• Simple example: Imagine that we are trying to model these data:

/badub/ ⟶ [badup]: 7, [badub]: 3

• And we want to compare these two possible grammars:

Grammar 1: *D]word 1.55, IDENT(voice) 1.30

Grammar 2: *D]word 2.70, IDENT(voice) 2.00

• First, we need to know the predicted probability of each candidate under each grammar:

| /badub/ (Grammar 1) | Pred. prob. | e^(–pen) | Total Penalty | *D]word 1.55 | ID(vce) 1.30 |
|---|---|---|---|---|---|
| badup | .56 | .2725 | 1.30 | | 1.30 |
| badub | .44 | .2122 | 1.55 | 1.55 | |
| Sum | | .4847 | | | |

| /badub/ (Grammar 2) | Pred. prob. | e^(–pen) | Total Penalty | *D]word 2.70 | ID(vce) 2.00 |
|---|---|---|---|---|---|
| badup | .67 | .1353 | 2.00 | | 2.00 |
| badub | .33 | .0672 | 2.70 | 2.70 | |
| Sum | | .2025 | | | |

• Then, we calculate the log likelihood of the data under each grammar:

| /badub/ (Grammar 1) | Observed frequency | Predicted probability | Plog ( ln(p) ) | Plog x obs. freq. |
|---|---|---|---|---|
| badup | 7 | .56 | –.5798 | –4.0586 |
| badub | 3 | .44 | –.8210 | –2.4630 |
| Sum | | | | –6.5216 |

| /badub/ (Grammar 2) | Observed frequency | Predicted probability | Plog ( ln(p) ) | Plog x obs. freq. |
|---|---|---|---|---|
| badup | 7 | .67 | –.4005 | –2.8035 |
| badub | 3 | .33 | –1.1087 | –3.3261 |
| Sum | | | | –6.1296 |

• The higher (less negative) the summed log likelihood, the better the grammar fits the data. Which one is better in this case?
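The comparison of the two grammars can be reproduced with a minimal Python sketch. The data (7 [badup], 3 [badub]) and the weights are from the slides; the helper names are hypothetical. The slides round each probability to two decimals before taking logs, so the sums computed here differ slightly from the hand-worked ones.

```python
import math

# Observed frequencies for input /badub/.
COUNTS = {"badup": 7, "badub": 3}

# [badup] devoices the final obstruent (violates IDENT(voice));
# [badub] keeps a word-final voiced obstruent (violates *D]word).
VIOLATIONS = {
    "badup": {"IDENT(voice)": 1},
    "badub": {"*D]word": 1},
}

GRAMMARS = {
    "Grammar 1": {"*D]word": 1.55, "IDENT(voice)": 1.30},
    "Grammar 2": {"*D]word": 2.70, "IDENT(voice)": 2.00},
}


def maxent_probs(violations, weights):
    """Predicted probability of each candidate under one grammar."""
    scores = {
        cand: math.exp(-sum(weights[c] * n for c, n in viols.items()))
        for cand, viols in violations.items()
    }
    total = sum(scores.values())
    return {cand: s / total for cand, s in scores.items()}


def log_likelihood(counts, probs):
    """Sum over candidates of observed frequency times ln(predicted probability)."""
    return sum(n * math.log(probs[cand]) for cand, n in counts.items())


LL = {
    name: log_likelihood(COUNTS, maxent_probs(VIOLATIONS, weights))
    for name, weights in GRAMMARS.items()
}

for name, value in LL.items():
    print(f"{name}: log likelihood = {value:.4f}")
```

On likelihood alone, Grammar 2 comes out ahead because its predicted 67/33 split is closer to the observed 70/30 split.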

SEARCH SPACE

• The real model considers all possible sets of values for the constraints, i.e. every possible grammar (= set of weights), not just two.

• And it is guaranteed to find the best weights. How??

• The search space is provably convex: there are no local maxima (= “false” high points), so the search is always guaranteed to find the “best” grammar.

• Simplifying to just two constraints, we can see what this looks like (weight of constraint 1 x weight of constraint 2):

Figure 1 (from Hayes & Wilson, 2008, p. 387): The surface defined by the probability of a representative training set for the grammar given in table 1.
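To make the "no local maxima" point concrete, here is a small Python sketch that fits the two weights for the /badub/ data by gradient ascent from two very different starting points. Several things are assumptions of the sketch, not claims about how the MaxEnt Grammar Tool works internally: the use of plain projected gradient ascent, the step size and iteration count, and the inclusion of a Gaussian prior term with µ = 0 and σ = 1 (with only two candidates, the likelihood alone fixes just the difference between the weights, so the prior is what makes the optimum a single point).

```python
import math

COUNTS = {"badup": 7, "badub": 3}
# Violation vectors: index 0 = *D]word, index 1 = IDENT(voice).
VIOLATIONS = {"badup": [0, 1], "badub": [1, 0]}
MU, SIGMA = 0.0, 1.0  # assumed prior settings for this sketch


def probs(w):
    """MaxEnt probabilities of the two candidates given weights w."""
    scores = {
        cand: math.exp(-sum(wi * vi for wi, vi in zip(w, viols)))
        for cand, viols in VIOLATIONS.items()
    }
    total = sum(scores.values())
    return {cand: s / total for cand, s in scores.items()}


def gradient(w):
    """Gradient of (log likelihood minus Gaussian prior penalty)."""
    p = probs(w)
    n = sum(COUNTS.values())
    grad = []
    for i in range(len(w)):
        expected = sum(n * p[c] * VIOLATIONS[c][i] for c in VIOLATIONS)
        observed = sum(COUNTS[c] * VIOLATIONS[c][i] for c in VIOLATIONS)
        grad.append(expected - observed - (w[i] - MU) / SIGMA ** 2)
    return grad


def fit(start, rate=0.05, steps=5000):
    """Gradient ascent, clipping weights at 0 to keep them non-negative."""
    w = list(start)
    for _ in range(steps):
        g = gradient(w)
        w = [max(0.0, wi + rate * gi) for wi, gi in zip(w, g)]
    return w


w_a = fit([0.0, 0.0])
w_b = fit([5.0, 5.0])
print("start (0, 0):", [round(x, 4) for x in w_a])
print("start (5, 5):", [round(x, 4) for x in w_b])
```

Both runs end at the same weights, which is what a search space without local maxima predicts.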


WHAT IS THE PRIOR?

• The prior (short for prior distribution) is a way of biasing the

model towards certain learning outcomes.

• Often used as a “smoothing” component to prevent

overfitting.

• In this case, it is a Gaussian (=normal) distribution over each

constraint, defined in terms of:

• µ = a priori preferred weight for the constraint.

• σ = how tightly the constraint is bound to its preferred weight during

learning.

• For smoothing purposes, it is common to set them as follows:

• µ = 0 (i.e. constraints want to be close to 0)

• σ = some appropriate value, e.g. 1.
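The roles of µ and σ can be illustrated with a short Python sketch. It assumes the usual Gaussian-prior penalty for a single constraint, (w − µ)² / (2σ²); the function name `prior_penalty` and the example values are hypothetical.

```python
def prior_penalty(weight, mu=0.0, sigma=1.0):
    """Penalty for one constraint whose weight departs from its preferred value mu."""
    return (weight - mu) ** 2 / (2 * sigma ** 2)


# The same weight is penalized more when sigma is small (tightly bound to mu)
# and less when sigma is large (free to vary).
for sigma in (0.5, 1.0, 2.0):
    print(f"sigma = {sigma}: penalty for weight 2.0 = {prior_penalty(2.0, sigma=sigma)}")

# A weight sitting exactly at its preferred value incurs no penalty.
print(prior_penalty(1.3, mu=1.3))
```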

LET’S SEE WHY THE PRIOR WORKS THIS WAY

• Generally, the prior is used as a “smoothing” component to prevent overfitting the model (discussed, e.g., by Goldwater & Johnson, 2003).

• For smoothing purposes, µ for every constraint is usually set to 0, and σ is set to some standard amount for each constraint, often 1. This means that it takes more evidence for the model to be convinced that a weight needs to rise above 0. Just a couple of examples won’t cut it, which makes the model more robust to statistical noise.

• Constraints may each receive a different µ, so the prior also serves as a means of implementing a substantive learning bias, following previous work by Wilson (2006).

• Let’s take a look again at the objective function (to be maximized) to see why it works that way:

(32) $$\left[\sum_{j=1}^{n} \ln P(y_j \mid x_j)\right] - \left[\sum_{i=1}^{m} \frac{(w_i - \mu_i)^2}{2\sigma_i^2}\right]$$

• Looking at the right side (the prior term), $w_i$ is the constraint’s actual weight, $\mu_i$ is the constraint’s preferred weight from the prior, and $\sigma_i$ is how tightly the constraint is held to its µ.

• Let’s see how a smoothing prior (µ = 0, σ = 1) affects our mini grammar competition from above.

Earlier example:

/badub/ ⟶ [badup]: 7, [badub]: 3

Grammar 1: *D]word 1.55, IDENT(voice) 1.30

Grammar 2: *D]word 2.70, IDENT(voice) 2.00

| | Summed log likelihood (from above) | Penalty for C1, *D]word | Penalty for C2, IDENT(voice) | Total |
|---|---|---|---|---|
| Grammar 1 | –6.5216 | 1.20125 (weight: 1.55) | .845 (weight: 1.30) | –6.5216 – 2.04625 = –8.56785 |
| Grammar 2 | –6.1296 | 3.645 (weight: 2.70) | 2 (weight: 2.00) | –6.1296 – 5.645 = –11.7746 |

• With the prior included, Grammar 1 now scores higher than Grammar 2, even though Grammar 2 has the higher log likelihood: its larger weights cost more than the improvement in fit is worth.
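The effect of the smoothing prior on the two-grammar comparison can be sketched in Python. The summed log likelihoods and the weights are taken from the earlier slides; the penalty uses the Gaussian prior term (w − µ)² / (2σ²) with µ = 0 and σ = 1, and the function names are hypothetical.

```python
# Summed log likelihoods as worked out by hand on the earlier slide.
LOG_LIKELIHOOD = {"Grammar 1": -6.5216, "Grammar 2": -6.1296}

GRAMMARS = {
    "Grammar 1": {"*D]word": 1.55, "IDENT(voice)": 1.30},
    "Grammar 2": {"*D]word": 2.70, "IDENT(voice)": 2.00},
}

MU, SIGMA = 0.0, 1.0  # smoothing prior


def total_prior_penalty(weights, mu=MU, sigma=SIGMA):
    """Sum of the Gaussian prior penalties over all constraints."""
    return sum((w - mu) ** 2 / (2 * sigma ** 2) for w in weights.values())


def objective(name):
    """Log likelihood minus the prior penalty for the named grammar."""
    return LOG_LIKELIHOOD[name] - total_prior_penalty(GRAMMARS[name])


for name in GRAMMARS:
    print(
        f"{name}: penalty = {total_prior_penalty(GRAMMARS[name]):.5f}, "
        f"objective = {objective(name):.5f}"
    )

best = max(GRAMMARS, key=objective)
print("Preferred once the prior is included:", best)
```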

MAXENT GRAMMAR TOOL

• Software developed by Colin Wilson, Ben George, and

Bruce Hayes.

• Available at Bruce Hayes’s webpage:

• http://www.linguistics.ucla.edu/people/hayes/MaxentGrammarTool/

• Takes an input file, a prior file (optional), and an output file.

• Includes a Gaussian prior.

• Does the learning and outputs the weights and predicted

probabilities for each candidate.

• Very user-friendly.

• Works on any platform.

MAXENT GRAMMAR TOOL

• A little demonstration: MaxEnt does categorical cases.

• Sample case: Nasal fusion in Indonesian (examples from

Pater 1999)

• /məŋ + pilih/ ⟶ [məmilih] ‘choose’

• /məŋ + tulis/ ⟶ [mənulis] ‘write’

• /məŋ + kasih/ ⟶ [məŋasih] ‘give’

• /məŋ + bəli/ ⟶ [məmbəli] ‘buy’

• /məŋ + dapat/ ⟶ [məndapat] ‘get’

• /məŋ + ganti/ ⟶ [məŋganti] ‘change’

OT ACCOUNT

| /məŋ1p2ilih/ | *NC̥ | IDENT(voice) | MAX-NASAL | UNIFORMITY |
|---|---|---|---|---|
| ☞ məm1,2ilih | | | | * |
| məm1b2ilih | | *! | | |
| məm1p2ilih | *! | | | |
| məp2ilih | | | *! | |
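A MaxEnt grammar can mimic this categorical outcome when the weights are far enough apart. The Python sketch below shows the idea for /məŋ + pilih/. The weights are hypothetical (the slides do not report learned weights for this case); they are chosen only so that the three constraints violated by the losers far outweigh UNIFORMITY.

```python
import math

NC = "*NC̥"  # constraint against nasal + voiceless obstruent sequences

# Hypothetical weights chosen to mirror the OT ranking; not learned values.
WEIGHTS = {NC: 10.0, "IDENT(voice)": 10.0, "MAX-NASAL": 10.0, "UNIFORMITY": 0.0}

# Violation profiles from the OT tableau.
CANDIDATES = {
    "məmilih": {"UNIFORMITY": 1},     # fusion
    "məmbilih": {"IDENT(voice)": 1},  # post-nasal voicing
    "məmpilih": {NC: 1},              # faithful nasal + voiceless stop
    "məpilih": {"MAX-NASAL": 1},      # nasal deletion
}


def maxent_probs(candidates, weights):
    """Predicted probability of each candidate."""
    scores = {
        cand: math.exp(-sum(weights[c] * n for c, n in viols.items()))
        for cand, viols in candidates.items()
    }
    total = sum(scores.values())
    return {cand: s / total for cand, s in scores.items()}


probs = maxent_probs(CANDIDATES, WEIGHTS)
for cand, p in probs.items():
    print(f"{cand}: {p:.5f}")
```

With these weights the fused candidate takes essentially all of the probability, so the probabilistic grammar behaves categorically.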

IMPLEMENTING A SUBSTANTIVE BIAS

VIA THE PRIOR

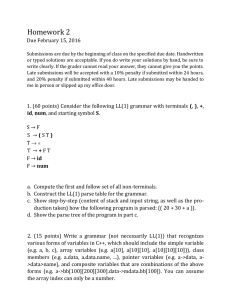

RESULTS (GENERALIZATION PHASE)

• Potentially Saltatory condition

Input: p ⟶ v

Results: p ⟶ v .96, b ⟶ v .70, f ⟶ v .45

• Control condition

Input: b ⟶ v

Results: b ⟶ v .89, p ⟶ v .21, f ⟶ v .16

White (2014), Cognition

RECALL THE P-MAP (STERIADE 2001)

• 1. Mental representation of perceptual similarity between

pairs of sounds.

• 2. Minimal modification bias (large perceptual changes

dispreferred by the learner).

• How can we implement this in a learning model?

SOLUTION

• Expand traditional faithfulness constraints to *MAP

constraints (proposed by Zuraw 2007, 2013).

• *MAP(x, y):

• violated if sound x is in correspondence with sound y.

• E.g.: *MAP(p, v) is violated by p ~ v.

• These constraints are constrained by a P-map bias.

• *Map constraints penalizing two sounds that are less similar will

have a larger preferred weight (µ).

White, 2013, under review

CONFUSION DATA AND THE RESULTING PRIORS

A violation was marked whenever the two sounds listed in the constraint were confused for one another. For instance, a violation was marked for *MAP(p, v) when [p] was confused for [v], or vice versa.

Table 2. Confusion values for the combined CV and VC contexts from Wang & Bilger (1973, Tables 2 and 3), which were used to generate the prior. Only the sound pairs relevant for the current study are shown here. Rows are stimuli; columns are responses.

| Stimulus | p | b | f | v |
|---|---|---|---|---|
| p | 1844 | 54 | 159 | 26 |
| b | 206 | 1331 | 241 | 408 |
| f | 601 | 161 | 1202 | 93 |
| v | 51 | 386 | 127 | 1428 |

| Stimulus | t | d | θ | ð |
|---|---|---|---|---|
| t | 1765 | 107 | 92 | 26 |
| d | 91 | 1640 | 75 | 193 |
| θ | 267 | 118 | 712 | 135 |
| ð | 44 | 371 | 125 | 680 |

(confusion data from Wang & Bilger 1973)

The *MAP constraints then received weights based on how often the two sounds named in the constraint were confused for each other in the confusion experiment. The resulting weights are provided in Table 3. Sounds that are very confusable, and thus assumed to be highly similar, resulted in low weights, whereas sounds that are dissimilar resulted in more substantial weights. For instance, [b] and [v] are very similar to each other, so *MAP(b, v) received a small weight of 1.30. On the other hand, [p] and [v] are quite dissimilar, so *MAP(p, v) received a greater weight of 3.65. These weights were entered directly into the primary learning model’s prior as the preferred weights (µ) for each *MAP constraint. It is preferable to derive the prior weights directly from the confusion data in a systematic way, as I have done here, rather than ‘cherry …

Table 3. Prior weights (µ) for *MAP constraints in the substantively biased model, based on confusion data from Wang & Bilger (1973).

| Labial sounds: Constraint | Prior weight (µ) | Coronal sounds: Constraint | Prior weight (µ) |
|---|---|---|---|
| *MAP(p, v) | 3.65 | *MAP(t, ð) | 3.56 |
| *MAP(f, v) | 2.56 | *MAP(θ, ð) | 1.91 |
| *MAP(p, b) | 2.44 | *MAP(t, d) | 2.73 |
| *MAP(f, b) | 1.96 | *MAP(θ, d) | 2.49 |
| *MAP(p, f) | 1.34 | *MAP(t, θ) | 1.94 |
| *MAP(b, v) | 1.30 | *MAP(d, ð) | 1.40 |

White, 2013, under review
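The direction of the relationship between confusability and prior weight can be checked with a small Python sketch over the labial data. Note the assumption: adding the two off-diagonal confusion counts for a pair is just a rough confusability tally made up for this sketch. It is not the procedure that was used to derive the µ values, which the slides do not spell out.

```python
# Labial confusion counts: stimulus -> response -> count (Wang & Bilger 1973).
CONFUSIONS = {
    "p": {"p": 1844, "b": 54, "f": 159, "v": 26},
    "b": {"p": 206, "b": 1331, "f": 241, "v": 408},
    "f": {"p": 601, "b": 161, "f": 1202, "v": 93},
    "v": {"p": 51, "b": 386, "f": 127, "v": 1428},
}

# Prior weights (mu) for the labial *MAP constraints.
PRIOR_MU = {
    ("p", "v"): 3.65,
    ("f", "v"): 2.56,
    ("p", "b"): 2.44,
    ("f", "b"): 1.96,
    ("p", "f"): 1.34,
    ("b", "v"): 1.30,
}


def mutual_confusions(x, y):
    """Rough tally: times x was heard as y plus times y was heard as x."""
    return CONFUSIONS[x][y] + CONFUSIONS[y][x]


# Pairs ordered from least to most confusable, and from highest to lowest mu.
by_confusion = sorted(PRIOR_MU, key=lambda pair: mutual_confusions(*pair))
by_mu = sorted(PRIOR_MU, key=PRIOR_MU.get, reverse=True)

for pair in by_confusion:
    print(pair, mutual_confusions(*pair), PRIOR_MU[pair])
```

For the labials, the two orderings coincide: the less often two sounds are confused, the larger the preferred weight of the *MAP constraint that penalizes mapping one to the other.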

UNBIASED MODEL. For comparison, the second version of the model, which I will call the …

GIVE THE MODEL THE SAME INPUT AS THE EXPERIMENTAL PARTICIPANTS

5. TESTING THE MODEL. To assess the MaxEnt model’s predictions, the model was provided the same training data received by the experimental participants (summarized in Table 4). The model predictions were then fitted to the aggregate experimental results.

Table 4. Overview of training data for the MaxEnt model, based on the experiments in Author 2014.

| Experiment 1: Potentially Saltatory condition | Experiment 1: Control condition | Experiment 2: Saltatory condition | Experiment 2: Control condition |
|---|---|---|---|
| 18 p ⟶ v | 18 b ⟶ v | 18 p ⟶ v | 18 b ⟶ v |
| 18 t ⟶ ð | 18 d ⟶ ð | 18 t ⟶ ð | 18 d ⟶ ð |
| | | 9 b ⟶ b | 9 p ⟶ p |
| | | 9 d ⟶ d | 9 t ⟶ t |

5.1. GENERATING THE MODEL PREDICTIONS. During learning, the model considered all

obstruents within the same place of articulation as possible outputs for a given input. For

instance, for input /p/, the model considered the set {[p], [b], [f], [v]} as possible outputs. In the

actual observed training data, however, each input had only one possible output because there

was no free variation in the experimental training data (e.g. in Experiment 1, /p/ or /b/

(depending on condition) changed to [v] 100% of the time). Thus, during learning the model was

LET’S TAKE A LOOK IN THE MAXENT

GRAMMAR TOOL

MODEL PREDICTIONS

Table 6. Model predictions (substantively biased, unbiased, and anti-alternation models) and experimental results from Experiment 1. Values represent percentage of trials in which the changing option was chosen (experiment) or in which the changing option was predicted (models). Rows marked “(trained)” are the trained alternations.

Experiment 1: Potentially Saltatory condition

| | Experimental result | Substantively biased model | Unbiased model | Anti-alternation model |
|---|---|---|---|---|
| p ⟶ v (trained) | 98 | 87 | 95 | 88 |
| t ⟶ ð (trained) | 95 | 86 | 95 | 88 |
| b ⟶ v | 73 | 64 | 82 | 34 |
| d ⟶ ð | 67 | 61 | 82 | 34 |
| f ⟶ v | 49 | 41 | 82 | 34 |
| θ ⟶ ð | 41 | 57 | 82 | 34 |

Experiment 1: Control condition

| | Experimental result | Substantively biased model | Unbiased model | Anti-alternation model |
|---|---|---|---|---|
| b ⟶ v (trained) | 88 | 84 | 85 | 79 |
| d ⟶ ð (trained) | 89 | 82 | 85 | 79 |
| p ⟶ v | 18 | 22 | 96 | 66 |
| t ⟶ ð | 23 | 23 | 96 | 66 |
| f ⟶ v | 14 | 12 | 80 | 18 |
| θ ⟶ ð | 18 | 21 | 80 | 18 |

White, 2013, under review

SUBSTANTIVELY BIASED MODEL. The substantively biased model correctly predicts greater … greater for the subset of data with intermediate values.

OVERALL RESULTS

Figure 2. Predictions of each model plotted against the experimental results, for each of the observations above. Fitted regression lines are also included.

[Figure: three scatterplot panels (Substantively biased model, Unbiased model, Anti-alternation model). x-axis: Model predictions (0–100); y-axis: Experimental results (0–100).]

Table 8. Proportion of variance explained (r²) and log likelihood, fitting each model’s predictions to the experimental results.

| Model | All data: r² | All data: Log likelihood | Middle two-thirds of data: r² | Middle two-thirds of data: Log likelihood |
|---|---|---|---|---|
| Substantively biased | .94 | –1722 | .91 | –1253 |
| Unbiased | .25 | –2827 | .15 | –2247 |
| Anti-alternation | .67 | –1926 | .36 | –1446 |

White, 2013, under review

REFERENCES

• Becker, Michael, Nihan Ketrez, & Andrew Nevins. (2011). The surfeit of the stimulus: Analytic biases filter lexical

statistics in Turkish laryngeal alternations. Language, 87, 84–125.

• Becker, Michael, Andrew Nevins, & Jonathan Levine. (2012). Asymmetries in generalizing alternation to and from initial

syllables. Language, 88, 231–268.

• Ernestus, Mirjam & R. Harald Baayen. (2003). Predicting the unpredictable: Interpreting neutralized segments in

Dutch. Language, 79, 5–38.

• Goldwater, Sharon, & Mark Johnson. (2003). Learning OT constraint rankings using a maximum entropy model. In

Proceedings of the Workshop on Variation within Optimality Theory.

• Hayes, Bruce, & Colin Wilson. (2008). A maximum entropy model of phonotactics and phonotactic learning. Linguistic

Inquiry, 39, 379–440.

• Hayes, Bruce, Kie Zuraw, Péter Siptár, & Zsuzsa Londe. (2009). Natural and unnatural constraints in Hungarian vowel harmony. Language, 85, 822–863.

• Kawahara, Shigeto. (2006). A faithfulness ranking projected from a perceptibility scale: The case of [+voice] in Japanese. Language, 82, 536–574.

• Legendre, G., Miyata, Y., & Smolensky, P. (1990). Harmonic Grammar — A formal multi level connectionist theory of

linguistic well formedness: Theoretical foundations. Proceedings of the Twelfth Annual Conference of the Cognitive

Science Society (385–395). Cambridge, MA.

• Pater, Joe. (2009). Weighted constraints in generative linguistics. Cognitive Science, 33, 999–1035.

• Potts, C., Pater, J., Jesney, K., Bhatt, R., & Becker M. (2009). Harmonic grammar with linear programming: from

linguistic systems to linguistic typology. Ms., University of Massachusetts, Amherst.

• White, James. (2013). Bias in phonological learning: Evidence from saltation. Ph.D. dissertation, UCLA.

• White, James. (2014). Evidence for a learning bias against saltatory phonological alternations. Cognition, 130, 96–115.

• Wilson, Colin. (2006). Learning phonology with substantive bias: An experimental and computational study of velar

palatalization. Cognitive Science, 30, 945–982.