Danielle Duffourc, Ph.D., Director for Institutional Effectiveness and Assessment, Xavier University


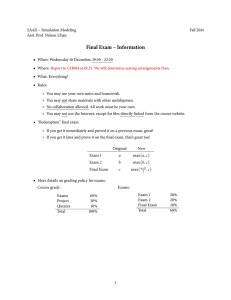

A documented “process of assessment” at the University.
A focus on “the design and improvement of educational experiences to enhance student learning.”
Unit outcomes that are related to the mission and role of the institution.

“The expectation is that the institution will engage in on-going planning and assessment to ensure that for each academic program, the institution develops and assesses student learning outcomes. Program and learning outcomes specify the knowledge, skills, values and attitudes students are expected to attain in courses or in a program.”

“Methods for assessing the extent to which students achieve these outcomes are appropriate to the nature of the discipline and consistent over time to enable the institution to evaluate cohorts of students who complete courses or a program.”

“The institution should develop and/or use methods and instruments that are uniquely suited to the goal statements and that are supported by faculty.”

2-4 outcomes.
At least 2 means of assessment per outcome.
Annual reporting at the conclusion of the Spring semester, with an optional semiannual reporting cycle for reporting Fall data.
Documentation of assessment processes and improvements.
“Closing the loop.”

“Closing the loop” is an expression used in assessment to describe the following process:
◦ Study the assessment results to determine what they mean for the program and what course of action needs to be taken for improvement.
◦ Document that the action will be taken.
◦ Follow up on the results of that action in the next reporting cycle.
The loop has not been closed if you are not meeting your assessment goals and do not develop and implement an action plan.

Are the measures valid?
◦ Do they measure what they are intended to measure?
Are the measures reliable?
◦ Are test scores consistent?
Is the process transparent?
◦ Does everyone have a clear idea of how they will be assessed?
Are the components clearly defined?
Does it provide relevant feedback to students?

Student anonymity: only a few students are being measured.
Program representation: if one student fails, the pass rate drops dramatically.
Failure to capture the “service course” element, where faculty spend 90% of their time tending to the needs of non-majors.

Increased demand for information about small populations is often at odds with the need to preserve privacy and data confidentiality.
Possible solution:
◦ Aggregation: report several years of data at once. The data will overlap from year to year, but may still be usable to show improvement over time.

Small numbers also raise statistical issues concerning the usefulness of the data.
Possible solution:
◦ Tracking students: instead of measuring 100 students once, measure one student 10 times and document progress (portfolios, pre/post-tests).

Faculty may feel that they are not able to capture their contributions to non-majors.
Possible solution:
◦ How are your majors doing relative to non-majors? How would you expect them to do?
◦ “On average, majors scored a 3.5 using the established rubric while non-majors scored a 3.3.”

Capstone projects or exams
Culminating experiences (e.g., internships, senior thesis)
Juried review of student projects or performances
Student work samples (e.g., case study responses, research papers, essay responses)
Collection of student work samples (portfolios)
Exit exams (standardized/proprietary exams or locally developed exams)
Pre- and post-tests
Performance on licensure or certification exams. To be considered a direct measure, the program must have access to subset or item analysis. Overall pass rates, while an important and informative indicator of program effectiveness, are insufficient for learning outcomes assessment.

Weaknesses of qualitative assessment:
◦ Assessors may not do a good job of ensuring that assessment is valid, reliable, and transparent.
◦ Analysis of individual components in isolation does not provide knowledge of the whole subject. (It is not easy to merge different observations.)
Proposed solution:
◦ Constrained qualitative assessment using rubrics or concept maps.

We do assessment to demonstrate that, before they graduate, students are acquiring the knowledge and skills that faculty have determined to be important. Assessment is not “scientific research.” The size of the data set is less important than the information we can extract from it: general trends in student learning.

Another way to get faculty involved in assessment is to ask them to write one paragraph explaining how their course supports a specific program learning outcome. This is not acceptable as a sole means of assessment, but it can serve as supplemental documentation that students were given opportunities to learn, even if the results are undesirable. It requires someone to read through the statements and group them by common themes.

Who is going with us?
What time are we leaving and how long will we be gone?
How much will the trip cost?
What kind of activities will we do on the trip?
Is there any car maintenance to be done before leaving?

Remember that measurement is not judgment. When the gas gauge tells you the tank is empty, it is not judging you; it is just stating a fact. It is your job to make the best decisions possible with the information from that measure. You need gas to make the trip, but you would never have known that if you had not looked at the gauge. Find your measures, and do not look at them as failure indicators. Look at them as opportunities to improve the program.
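As a rough illustration of the aggregation idea suggested earlier for small programs, the sketch below pools several years of results into overlapping multi-year windows, so that one student's result moves the reported rate less than it would in a single-year report. The function name `rolling_pass_rates`, the three-year window, and all of the counts are hypothetical, chosen only for illustration; they are not taken from any actual program data.

```python
from typing import Dict, List, Tuple


def rolling_pass_rates(
    yearly: Dict[int, Tuple[int, int]], window: int = 3
) -> List[Tuple[str, int, int, float]]:
    """Pool (met_target, assessed) counts over overlapping multi-year windows.

    Assumes the years supplied are consecutive; a gap year would simply be
    skipped over rather than flagged.
    """
    years = sorted(yearly)
    results = []
    for i in range(len(years) - window + 1):
        span = years[i : i + window]
        met = sum(yearly[y][0] for y in span)
        assessed = sum(yearly[y][1] for y in span)
        rate = met / assessed if assessed else float("nan")
        results.append((f"{span[0]}-{span[-1]}", met, assessed, rate))
    return results


# Hypothetical counts: year -> (students meeting the target, students assessed)
example = {2019: (3, 4), 2020: (2, 3), 2021: (4, 5), 2022: (4, 4)}

for label, met, assessed, rate in rolling_pass_rates(example):
    print(f"{label}: {met}/{assessed} met the target ({rate:.0%})")
```

With these made-up numbers the two windows report 9/12 (75%) and 10/12 (83%). Because consecutive windows share two of their three years, the change between them reflects only the year dropped and the year added, which is the overlap the aggregation approach accepts in exchange for larger, less identifiable groups.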