EVALUATING HUMAN GOAL-DIRECTED ACTIVITIES

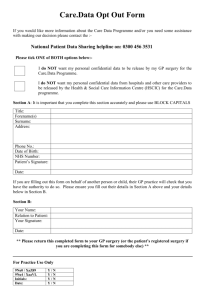

EVALUATING HUMAN GOAL-DIRECTED ACTIVITIES IN VIRTUAL AND AUGMENTED ENVIRONMENTS

C.L. MacKenzie, K.S. Booth*, J.C. Dill, K. Inkpen and S. Payandeh
Simon Fraser University and University of British Columbia*
Burnaby and Vancouver*, British Columbia, CANADA
Email: Christine-mackenzie@sfu.ca

Introduction and Objectives

Virtual and augmented environments have been applied primarily to visualization and entertainment in the past. They are now being explored for goal-directed human activities like surgery, training and collaborative work. For these applications, we are pursuing augmented environments where there are both physical ("real world") objects and virtual (computer-generated) objects. These environments may be rendered through graphics, haptics, audio or even olfactory displays.

The objectives of our 5-year team project are:

1) to design and implement an Enhanced Virtual Hand Laboratory, incorporating integrated displays and controls that exploit natural human perception and movement. This will provide a high degree of presence, and rich data acquisition/analysis of goal-directed human behavior;
2) to ask basic research questions about the processes underlying successful human manipulation in goal-directed activities in natural, augmented and remote environments;
3) to examine human-computer interaction (HCI) in the performance of selected tasks in the domains of surgery, design, remote manipulation and training.

We use a triangle framework of user-task-tool, studying actions of humans using a particular tool in the task-specific context of a computer-augmented environment.

Virtual Hand Laboratory

Our current Virtual Hand Laboratory (VHL) provides stereoscopic, head-coupled graphical displays as users interact with physical, virtual or augmented objects. The hardware consists of an opto-electric 3-D motion analysis system (OPTOTRAK, Northern Digital Inc.) with infrared emitting markers, a graphics workstation (Onyx2, Silicon Graphics Inc.), and stereoscopic glasses (CrystalEYES, StereoGraphics Corp.). An SGI monitor is placed upside-down on a cart, above and parallel to a table surface. The graphics image is reflected in a half-silvered mirror parallel to the tabletop so that virtual objects appear to the subject to be in the workspace below. We have calibrated this small desktop workspace. Position co-ordinates are used to drive the graphics in real time (60 Hz with one frame lag), as the subject engages in goal-directed movement.

Position data from the OPTOTRAK are also used for extensive analyses of human movement behavior. To date we have conducted experiments on pointing, object manipulation and remote manipulation. We analyze these kinematic data to make inferences about planning, organization and human performance in natural, virtual and augmented environments.

Enhanced Virtual Hand Laboratory

In the Enhanced Virtual Hand Lab, we are adding other modalities to the visual modality. Computer-generated haptic and audio rendering of objects will complement the computer graphics in the current system for multimodal augmented environments. We highlight technical, theoretical and empirical challenges, our system status, and future directions.

Decomposition of goal-directed activity

Our vision is to extend computer-augmented environments from simple manipulation tasks to complex goal-directed activities. Task analyses and hierarchical decompositions of goal-directed activities are being carried out in the domains of surgery, training, design, and collaborative work. For example, in surgery, based on fieldwork and extensive video annotation, we have hierarchically decomposed selected laparoscopic procedures. These have been abstracted to surgical steps, sub-steps and tasks. In turn, these surgical tasks have been further broken down to the subtasks and motions detectable by 3-D motion analysis systems. In parallel, we are analyzing what information is used by the surgeon, and exploring ways of presenting this information to the surgeon through computer graphics in augmented reality systems. In this way, we can make recommendations for the design of usable, efficient, human-centered tools that enable humans to perform their tasks in augmented environments with a high degree of ease and presence.

Acknowledgements

The authors thank Evan Graham, Valerie Summers and Colin Swindells for VHL software development. Supported by the Natural Sciences and Engineering Research Council of Canada (NSERC Strategic Grants Program).
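
Illustrative sketch: kinematic measures from marker positions

The kinematic analyses of OPTOTRAK position data mentioned above are not specified in this abstract. As a rough illustration only, the Python sketch below shows one common way such measures might be derived from a sequence of 3-D marker positions: speed by finite differences, then peak speed, time to peak speed, movement time and path length. The function names, the 60 Hz sampling rate and the sample data are assumptions made for this example, not the authors' method or software.

```python
import math

def speeds(positions, rate_hz):
    """Speed between consecutive 3-D samples, in position units per second.

    Assumes evenly spaced samples at rate_hz (a hypothetical value here).
    """
    return [math.dist(p, q) * rate_hz for p, q in zip(positions, positions[1:])]

def summarize(positions, rate_hz):
    """Basic kinematic summary of a single movement (illustrative only)."""
    v = speeds(positions, rate_hz)
    peak = max(v)
    i_peak = v.index(peak)
    return {
        # Duration from the first to the last sample.
        "movement_time_s": (len(positions) - 1) / rate_hz,
        "peak_speed": peak,
        # Each speed estimate is attributed to the midpoint of its interval.
        "time_to_peak_s": (i_peak + 0.5) / rate_hz,
        # Sum of the distances between consecutive samples.
        "path_length": sum(s / rate_hz for s in v),
    }

if __name__ == "__main__":
    # Made-up reach along the x axis, positions in millimetres.
    samples = [(0, 0, 0), (1, 0, 0), (3, 0, 0), (6, 0, 0), (8, 0, 0), (9, 0, 0)]
    print(summarize(samples, rate_hz=60.0))
```

In practice, raw position data would typically be filtered before differentiation, and movement onset and offset would be detected rather than taken as the first and last samples; both steps are omitted here for brevity.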