
COMMUNICATION SYSTEM ELEMENTS


References


1. B. P. Lathi, “Modern Digital and Analog Communication Systems”, 3rd Ed., 1998.

2. R. Ziemer, W. Tranter, “Principles of Communications: Systems, Modulation, and Noise”, 5th Ed., 2002.

3. Hwei P. Hsu, “Theory and Problems of Analog and Digital Communications”, McGraw-Hill, Schaum’s Outline Series, 1993.

4. F. G. Stremler, “Introduction to Communication Systems”, Addison-Wesley Publishing Co., 1999.

5. B. P. Lathi, “Signal Processing and Linear Systems”, Berkeley Cambridge Press, 1998.

6. J. G. Proakis, “Digital Communications”, 4th Ed., 2001.

1. COMMUNICATION SYSTEM ELEMENTS


Figure 1.1 shows a commonly used model for a single-link communication system. Although

it suggests a system for communication between two remotely located points, this block

diagram is also applicable to remote sensing systems, such as radar or sonar, in which the

system input and output may be located at the same site. Regardless of the particular

application and configuration, all information transmission systems invariably involve three

major subsystems: a transmitter, the channel, and a receiver.

Input Transducer: The wide variety of possible sources of information results in many

different forms for messages. Regardless of their exact form, however, messages may be

categorized as analog or digital. The former may be modeled as functions of a continuous-time variable (e.g., pressure, temperature, speech, music), whereas the latter consists of

discrete symbols (e.g., written text). Almost invariably, the message produced by a source

must be converted by a transducer to a form suitable for the particular type of communication

system employed. For example, in electrical communications, speech waves are converted by

a microphone to voltage variations. Such a converted message is referred to as the message

signal.


Transmitter: The purpose of the transmitter is to couple the message to the channel.

Although it is not uncommon to find the input transducer directly coupled to the transmission

medium, as for example in some intercom systems, it is often necessary to modulate a carrier

wave with the signal from the input transducer. Modulation is the systematic variation of

some attribute of the carrier, such as amplitude, phase, or frequency, in accordance with a

function of the message signal. There are several reasons for using a carrier and modulating it.

Important ones are:

(1) For ease of radiation.

(2) To reduce noise and interference.

(3) For channel assignment.

(4) For multiplexing or transmission of several messages over a single channel.

(5) To overcome equipment limitations.

Channel: The channel can have many different forms; the most familiar, perhaps, is the

channel that exists between the transmitting antenna of a commercial radio station and the

receiving antenna of a radio. In this channel, the transmitted signal propagates through the

atmosphere, or free space, to the receiving antenna. However, it is not uncommon to find the

transmitter hard-wired to the receiver, as in most local telephone systems. This channel is

vastly different from the radio example. However, all channels have one thing in common: the

signal undergoes degradation from transmitter to receiver. Although this degradation may

occur at any point of the communication system block diagram, it is customarily associated

with the channel alone. This degradation often results from noise and other undesired signals or interference, but it may also include other distortion effects, such as fading signal levels, multiple transmission paths, and filtering.

Dr. Ahmed A. Alrekaby

Receiver: The receiver's function is to extract the desired message from the received signal at

the channel output and to convert it to a form suitable for the output transducer. Although

amplification may be one of the first operations performed by the receiver, especially in radio

communications, where the received signal may be extremely weak, the main function of the

receiver is to demodulate the received signal. Often it is desired that the receiver output be a

scaled, possibly delayed, version of the message signal at the modulator input, although in

some cases a more general function of the input message is desired. However, as a result of

the presence of noise and distortion, this operation is less than ideal.


Output Transducer: The output transducer completes the communication system. This

device converts the electric signal at its input into the form desired by the system user.

Perhaps the most common output transducer is a loudspeaker. However, there are many other

examples, such as tape recorders, personal computers, meters, and cathode-ray tubes.

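[Fig. 1.1: Model of a single-link communication system, from the input message through the transmitter, channel, and receiver to the output transducer]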

2. CLASSIFICATION OF SIGNALS


A signal is a function representing a physical quantity. Mathematically, a signal is represented

as a function of an independent variable t. Usually t represents time. Thus a signal is denoted

by x(t).

2.1 Continuous-Time and Discrete-Time Signals


A signal x(t) is a continuous-time signal if t is a continuous variable. If t is a discrete variable,

that is, x(t) is defined at discrete times, then x(t) is a discrete-time signal. Since a discrete-time

signal is defined at discrete times, it is often identified as a sequence of numbers, denoted by

{x(n)} or x[n], where n = integer.

2.2 Analog and Digital Signals


If a continuous-time signal x(t) can take on any values in the continuous interval (a, b), where

a may be −∞ and b may be +∞, then the continuous-time signal x(t) is called an analog signal.

If a discrete-time signal x[n] can take only a finite number of distinct values, then we call this

signal a digital signal. The discrete-time signal x[n] is often formed by sampling a

continuous-time signal x(t) such that x[n]=x(nTs), where Ts is the sampling interval.
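
The sampling relation x[n] = x(nTs) can be illustrated with a short Python sketch. The signal (a 1 Hz cosine) and the sampling interval Ts = 0.25 s are assumptions chosen only for illustration; they do not come from the notes.

```python
import math

def sample(x, Ts, n_values):
    """Return the sequence x[n] = x(n * Ts) for the given integer indices n."""
    return [x(n * Ts) for n in n_values]

# Hypothetical continuous-time signal: a 1 Hz cosine.
def x(t):
    return math.cos(2 * math.pi * t)

Ts = 0.25                      # assumed sampling interval in seconds
xn = sample(x, Ts, range(5))   # x[0], x[1], ..., x[4]
print([round(v, 6) for v in xn])
```

With four samples per period, the sequence visits the peak, zero crossing, and trough of the cosine in turn.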


2.3 Real and Complex Signals


A signal x(t) is a real signal if its value is a real number and is a complex signal if its value is

a complex number.

2.4 Deterministic and random signals


Deterministic signals are those signals whose values are completely specified for any given

time. Random signals are those signals that take random values at any given time, and these

must be characterized statistically.

2.5 Energy and Power Signals


The normalized energy content E of a signal x(t) is defined as

$$E = \int_{-\infty}^{\infty} |x(t)|^2 \, dt \tag{2.1}$$

The normalized average power P of a signal x(t) is defined as

$$P = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} |x(t)|^2 \, dt \tag{2.2}$$

• If 0 < E < ∞, that is, if E is finite (so P = 0), then x(t) is referred to as an energy signal.

• If E = ∞, but 0 < P < ∞, that is, P is finite, then x(t) is referred to as a power signal.

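Example 2.1: Determine whether the signal x(t) = e^{−a|t|}, a > 0, is a power signal, an energy signal, or neither.

Sol.

$$x(t) = e^{-a|t|} = \begin{cases} e^{-at}, & t > 0 \\ e^{at}, & t < 0 \end{cases}$$

$$E = \int_{-\infty}^{\infty} [x(t)]^2 \, dt = \int_{-\infty}^{\infty} e^{-2a|t|} \, dt = 2 \int_{0}^{\infty} e^{-2at} \, dt = \frac{1}{a} < \infty$$

Thus, x(t) is an energy signal.

[Figure: sketch of x(t) versus t, equal to e^{at} for t < 0 and e^{−at} for t > 0]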
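
As a rough numerical check of Example 2.1, the sketch below approximates the energy integral with a midpoint sum and compares it with 1/a. The value a = 2, the truncated integration range, and the helper name `energy` are assumptions made for illustration.

```python
import math

def energy(x, t_min, t_max, steps=200_000):
    """Midpoint-rule approximation of the integral of |x(t)|^2 over [t_min, t_max]."""
    dt = (t_max - t_min) / steps
    return sum(abs(x(t_min + (k + 0.5) * dt)) ** 2 for k in range(steps)) * dt

a = 2.0  # assumed decay constant, a > 0
# The tails beyond |t| = 20 are negligible for this a, so the range is truncated.
E = energy(lambda t: math.exp(-a * abs(t)), -20.0, 20.0)
print(E, 1 / a)
```

The two printed values should agree closely, consistent with E = 1/a being finite.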

H.W: Repeat Example 2.1 with x(t) = A[u(t+a) − u(t−a)], a > 0, and with x(t) = t u(t).

2.6 Periodic and Non-periodic Signals


A signal x(t) is periodic if there is a positive number To such that

x(t+nTo) = x(t)

(2.3)

The smallest positive number To satisfying Eq. (2.3) is called the period, and the reciprocal of the period is called the fundamental frequency fo:

$$f_o = \frac{1}{T_o} \quad \text{hertz (Hz)} \tag{2.4}$$


Any signal for which there is no value of To satisfying Eq.(2.3) is said to be nonperiodic or

aperiodic. A periodic signal is a power signal if its energy content per period is finite, and

then the average power of the signal need only be calculated over a period.

Example 2.2: Let x1(t) and x2(t) be periodic signals with periods T1 and T2, respectively. Under what conditions is the sum

x(t) = x1(t) + x2(t)

periodic, and what is the period of x(t) if it is periodic?

Sol. From Eq. (2.3),

x1(t) = x1(t + mT1), m an integer

x2(t) = x2(t + nT2), n an integer

If, therefore, T1 and T2 are such that

mT1 = nT2 = T

then,

x(t + T) =x1(t + T) +x2(t + T) =x1(t) +x2(t) =x(t)

that is, x(t) is periodic. Thus, the condition for x(t) to be periodic is

$$\frac{T_1}{T_2} = \frac{n}{m} = \text{rational number}$$

The smallest common period is the least common multiple of T1 and T2. If the ratio T1/T2 is

an irrational number, then the signals x1(t) and x2(t) do not have a common period and x(t)

cannot be periodic.

To check x(t) = cos(t/3) + sin(t/4) for periodicity, note that cos(t/3) is periodic with period T1 = 6π, and sin(t/4) is periodic with period T2 = 8π. Since

$$\frac{T_1}{T_2} = \frac{6\pi}{8\pi} = \frac{3}{4}$$

is a rational number, x(t) is periodic with period T = 4T1 = 3T2 = 24π.
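
The condition mT1 = nT2 = T can be sketched in Python using exact rational arithmetic. The helper `common_period` is a hypothetical name, and the periods are expressed as multiples of π so that their ratio stays rational; both are assumptions for illustration.

```python
import math
from fractions import Fraction

def common_period(T1, T2):
    """Smallest T with T = m*T1 = n*T2 for positive integers m and n.

    T1 and T2 are Fractions in a common unit (here, multiples of pi),
    so T1/T2 = n/m is rational by construction.
    """
    ratio = T1 / T2          # reduced automatically to lowest terms n/m
    m = ratio.denominator
    return m * T1

# cos(t/3) has period 6*pi and sin(t/4) has period 8*pi.
T = common_period(Fraction(6), Fraction(8))
print(T)  # period in units of pi

def x(t):
    return math.cos(t / 3) + math.sin(t / 4)

# Spot check: shifting by T*pi should leave x(t) unchanged.
t0 = 1.3
print(abs(x(t0 + float(T) * math.pi) - x(t0)))
```

An irrational ratio such as 1/√2 cannot be represented as a `Fraction`, which mirrors the statement that no common period exists in that case.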

H.W: Is the following signal periodic? If so, find its period.

x(t) = cos(t) + 2 sin(√2 t)

2.7 Singularity Functions

An important subclass of nonperiodic signals in communication theory is the singularity functions (or, as they are sometimes called, the generalized functions).

2.7.1 Unit Step Function

The unit step function u(t) is defined as

$$u(t) = \begin{cases} 1, & t > 0 \\ 0, & t < 0 \end{cases} \tag{2.5}$$

[Fig. 2.1: the unit step function u(t) versus t]

Note that it is discontinuous at t = 0 and that the value at t = 0 is undefined.


2.7.2 Unit Impulse Function


The unit impulse function δ(t), also known as the Dirac delta function, is not an ordinary function and is defined in terms of the following process:

$$\int_{-\infty}^{\infty} \phi(t)\,\delta(t)\,dt = \phi(0) \tag{2.6}$$

where φ(t) is any test function continuous at t = 0.

Some additional properties of δ(t) are

$$\int_{-\infty}^{\infty} \phi(t)\,\delta(t - t_o)\,dt = \phi(t_o) \tag{2.7}$$

$$\delta(at) = \frac{1}{|a|}\,\delta(t) \tag{2.8}$$

$$\delta(-t) = \delta(t) \tag{2.9}$$

$$x(t)\,\delta(t) = x(0)\,\delta(t) \tag{2.10}$$

$$x(t)\,\delta(t - t_o) = x(t_o)\,\delta(t - t_o) \tag{2.11}$$

An alternate definition of δ(t) is provided by the following two conditions:

$$\int_{t_1}^{t_2} \delta(t - t_o)\,dt = 1, \qquad t_1 < t_o < t_2 \tag{2.12}$$

$$\delta(t - t_o) = 0, \qquad t \neq t_o \tag{2.13}$$

Conditions in Eq.(2.12) and (2.13) correspond to the intuitive notion of a unit impulse as the

limit of a suitably chosen conventional function having unity area in an infinitesimally small

width. For convenience, δ(t) is shown schematically in Fig. 2.2.

[Fig. 2.2: schematic representation of δ(t) as an arrow at t = 0]

If g(t) is a generalized function, its derivative g'(t) is defined by the following relation:

$$\int_{-\infty}^{\infty} g'(t)\,\phi(t)\,dt = -\int_{-\infty}^{\infty} g(t)\,\phi'(t)\,dt \tag{2.14}$$

By using Eq. (2.14), the derivative of u(t) can be shown to be δ(t); that is,

$$\delta(t) = u'(t) = \frac{du(t)}{dt} \tag{2.15}$$

Example 2.3: Evaluate the integral

$$\int_{-\infty}^{\infty} (t^2 + \cos \pi t)\,\delta(t - 1)\,dt$$

Sol.

$$\int_{-\infty}^{\infty} (t^2 + \cos \pi t)\,\delta(t - 1)\,dt = \left.(t^2 + \cos \pi t)\right|_{t=1} = 1 + \cos \pi = 1 - 1 = 0$$
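
The sifting property used in Example 2.3 can be checked numerically by replacing δ(t) with a narrow unit-area pulse. The sketch below uses a Gaussian of width eps as that pulse; the Gaussian choice, the value of eps, and the helper names are assumptions, not part of the notes.

```python
import math

def delta_approx(t, eps):
    """Unit-area Gaussian pulse that approaches the unit impulse as eps -> 0."""
    return math.exp(-t * t / (2 * eps * eps)) / (eps * math.sqrt(2 * math.pi))

def sift(phi, t0, eps=1e-3, steps=20_000):
    """Approximate the integral of phi(t) * delta(t - t0) dt with a midpoint sum."""
    lo, hi = t0 - 10 * eps, t0 + 10 * eps   # the pulse is negligible outside this window
    dt = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        t = lo + (k + 0.5) * dt
        total += phi(t) * delta_approx(t - t0, eps)
    return total * dt

# Integrand of Example 2.3, sifted at t0 = 1.
value = sift(lambda t: t * t + math.cos(math.pi * t), 1.0)
print(value)  # close to 1 + cos(pi) = 0, with a small error that shrinks with eps
```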

H.W: Evaluate the following integrals:

$$\text{(a)} \int_{-\infty}^{\infty} e^{-t}\,\delta(2t - 2)\,dt$$

$$\text{(b)} \int_{-\infty}^{\infty} e^{-2t}\,\delta'(t)\,dt$$

3. COMPLEX EXPONENTIAL FOURIER SERIES


Let x(t) be a periodic signal with period To. Then we define the complex exponential Fourier series of x(t) as

$$x(t) = \sum_{n=-\infty}^{\infty} c_n e^{jn\omega_o t} \tag{3.1}$$

where ωo = 2π/To = 2πfo is called the fundamental angular frequency. The coefficients cn are called the Fourier coefficients, and they are given by

$$c_n = \frac{1}{T_o} \int_{t_o}^{t_o + T_o} x(t)\,e^{-jn\omega_o t}\,dt \tag{3.2}$$

3.1 Frequency Spectra


If the periodic signal x(t) is real, then

$$c_n = |c_n|\,e^{j\theta_n}, \qquad c_{-n} = c_n^{*} = |c_n|\,e^{-j\theta_n} \tag{3.3}$$

The asterisk (*) indicates the complex conjugate. Note that

$$|c_{-n}| = |c_n|, \qquad \theta_{-n} = -\theta_n \tag{3.4}$$

A plot of |𝑐𝑐𝑛𝑛 | versus the angular frequency ω = 2 π f is called the amplitude spectrum of the

periodic signal x(t). A plot of 𝜃𝜃𝑛𝑛 versus ω is called the phase spectrum of x(t). These are

referred to as frequency spectra of x(t). Since the index n assumes only integers, the frequency

spectra of a periodic signal exist only at the discrete frequencies nωo. These are therefore

referred to as discrete frequency spectra or line spectra. From Eq. (3.4) we see that the

amplitude spectrum is an even function of ω and the phase spectrum is an odd function of ω.

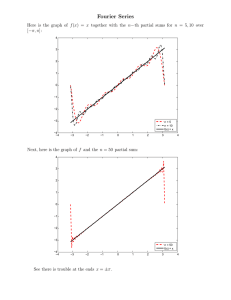

Example 3.1: Find and sketch the magnitude spectra for the periodic square pulse train signal x(t) shown in the adjacent figure for (a) d = T/4 and (b) d = T/8.


The magnitude spectrum for this case is shown in the figure below.

(b) d = T/8, nωo d/2 = nπd/T = nπ/8

The magnitude spectrum for this case is shown in the figure below.
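
The magnitude spectra of Example 3.1 can be approximated numerically from Eq. (3.2). Because the figure is not reproduced here, the sketch below assumes a pulse train of amplitude A = 1 and period T = 1 whose pulse of width d is centered at t = 0; under those assumptions the coefficients reduce to cn = (Ad/T) sin(nωo d/2)/(nωo d/2).

```python
import cmath
import math

def fourier_coeff(x, T, n, steps=20_000):
    """Midpoint-rule approximation of c_n = (1/T) * integral over one period
    of x(t) * exp(-j n w0 t) dt, taken from -T/2 to T/2."""
    w0 = 2 * math.pi / T
    dt = T / steps
    total = 0j
    for k in range(steps):
        t = -T / 2 + (k + 0.5) * dt
        total += x(t) * cmath.exp(-1j * n * w0 * t)
    return total * dt / T

T, A = 1.0, 1.0   # assumed period and amplitude

def pulse(d):
    """One period of the assumed pulse train: amplitude A for |t| < d/2."""
    return lambda t: A if abs(t) < d / 2 else 0.0

for d in (T / 4, T / 8):
    mags = [abs(fourier_coeff(pulse(d), T, n)) for n in range(9)]
    print(d, [round(m, 4) for m in mags])
```

For d = T/4 the magnitudes vanish at n = 4 and n = 8, while for d = T/8 the first zero moves out to n = 8, so the narrower pulse has the wider spectral envelope.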

H.W: If x1(t) and x2(t) are periodic signals with period T and their complex Fourier series expressions are

$$x_1(t) = \sum_{n=-\infty}^{\infty} d_n e^{jn\omega_o t}, \qquad x_2(t) = \sum_{n=-\infty}^{\infty} g_n e^{jn\omega_o t}, \qquad \omega_o = \frac{2\pi}{T}$$

show that the signal x(t) = x1(t) x2(t) is periodic with the same period T and can be expressed as

$$x(t) = \sum_{n=-\infty}^{\infty} c_n e^{jn\omega_o t}$$

where cn is given by

$$c_n = \sum_{k=-\infty}^{\infty} d_k\,g_{n-k}$$

3.2 Power Content of a Periodic Signal


The power content of a periodic signal x(t) with period To is defined as the mean-square value

over a period:

$$P = \frac{1}{T_o} \int_{-T_o/2}^{T_o/2} |x(t)|^2 \, dt \tag{3.5}$$

3.3 Parseval’s Theorem for the Fourier Series


Parseval's theorem for the Fourier series states that if x(t) is a periodic signal with period To, then

$$\frac{1}{T_o} \int_{-T_o/2}^{T_o/2} |x(t)|^2 \, dt = \sum_{n=-\infty}^{\infty} |c_n|^2 \tag{3.6}$$

H.W: Verify Eq.(3.6).


4. FOURIER TRANSFORM


To generalize the Fourier series representation Eq.(3.1) to a representation valid for nonperiodic signals in the frequency domain, we introduce the Fourier transform.

Let x(t) be a nonperiodic signal. Then the Fourier transform of x(t), symbolized by ℱ, is defined by

$$X(\omega) = \mathcal{F}[x(t)] = \int_{-\infty}^{\infty} x(t)\,e^{-j\omega t}\,dt \tag{4.1}$$

The inverse Fourier transform of X(ω), symbolized by ℱ⁻¹, is defined by

$$x(t) = \mathcal{F}^{-1}[X(\omega)] = \frac{1}{2\pi} \int_{-\infty}^{\infty} X(\omega)\,e^{j\omega t}\,d\omega \tag{4.2}$$

Equations (4.1) and (4.2) are often called the Fourier transform pair. Writing X(ω) in terms of amplitude and phase as

$$X(\omega) = |X(\omega)|\,e^{j\theta(\omega)} \tag{4.3}$$

we can show, for real x(t), that

$$X(-\omega) = X^{*}(\omega) = |X(\omega)|\,e^{-j\theta(\omega)} \tag{4.4}$$

or

$$|X(-\omega)| = |X(\omega)|, \qquad \theta(-\omega) = -\theta(\omega) \tag{4.5}$$

Thus, just as for the complex Fourier series, the amplitude spectrum of x(t), denoted by

|𝑋𝑋(𝜔𝜔)|, is an even function of ω, and the phase spectrum θ(ω) is an odd function of ω. These

are referred to as Fourier spectra of x(t). Equation (4.4) is the necessary and sufficient

condition for x(t) to be real.

4.1 Properties of the Fourier Transform


We use the notation x(t) ↔X(ω) to denote the Fourier transform pair.

4.1.1 Linearity (Superposition)


a1x1(t)+a2x2(t) ↔ a1X1(ω)+a2X2(ω)

(4.6)

where a1 and a2 are any constants.

4.1.2 Time Shifting

$$x(t - t_o) \leftrightarrow X(\omega)\,e^{-j\omega t_o} \tag{4.7}$$

4.1.3 Frequency Shifting

$$x(t)\,e^{j\omega_o t} \leftrightarrow X(\omega - \omega_o) \tag{4.8}$$

4.1.4 Scaling

$$x(at) \leftrightarrow \frac{1}{|a|}\,X\!\left(\frac{\omega}{a}\right) \tag{4.9}$$

4.1.5 Time Reversal

$$x(-t) \leftrightarrow X(-\omega) \tag{4.10}$$

4.1.6 Duality

$$X(t) \leftrightarrow 2\pi\,x(-\omega) \tag{4.11}$$

4.1.7 Differentiation

• Time differentiation

$$x'(t) = \frac{d}{dt}\,x(t) \leftrightarrow j\omega\,X(\omega) \tag{4.12}$$

• Frequency differentiation

$$(-jt)\,x(t) \leftrightarrow X'(\omega) = \frac{d}{d\omega}\,X(\omega) \tag{4.13}$$

Example 4.1: Find the Fourier transform of the rectangular pulse signal x(t) defined by

$$x(t) = p_a(t) = \begin{cases} 1, & |t| < a \\ 0, & |t| > a \end{cases}$$

Sol.

$$X(\omega) = \int_{-\infty}^{\infty} x(t)\,e^{-j\omega t}\,dt = \int_{-a}^{a} e^{-j\omega t}\,dt = \frac{2\sin(a\omega)}{\omega} = 2a\,\frac{\sin(a\omega)}{a\omega}$$

H.W: Verify Eqs. (4.6) to (4.13).
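
The result of Example 4.1 can be spot-checked by evaluating Eq. (4.1) numerically for the rectangular pulse and comparing with 2 sin(aω)/ω. The half-width a = 1.5 and the test frequencies below are arbitrary assumptions.

```python
import cmath
import math

def fourier_transform(x, w, t_min, t_max, steps=20_000):
    """Midpoint-rule approximation of X(w) = integral of x(t) exp(-j w t) dt
    over [t_min, t_max]; x is assumed to be zero outside that range."""
    dt = (t_max - t_min) / steps
    total = 0j
    for k in range(steps):
        t = t_min + (k + 0.5) * dt
        total += x(t) * cmath.exp(-1j * w * t)
    return total * dt

a = 1.5  # assumed half-width of the rectangular pulse p_a(t)

def p_a(t):
    return 1.0 if abs(t) < a else 0.0

results = []
for w in (0.5, 2.0, 5.0):
    numeric = fourier_transform(p_a, w, -a, a)
    closed_form = 2 * math.sin(a * w) / w
    results.append((w, numeric, closed_form))
    print(w, numeric.real, closed_form)
```

The imaginary part of the numerical result is essentially zero, as expected for a real and even pulse.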


4.2 Fourier Transforms of Some Useful Signals


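Example 4.2: Find the Fourier transform of

$$x(t) = \frac{\sin(at)}{\pi t}$$

Sol. From Example 4.1 we have

$$\mathcal{F}[p_a(t)] = \frac{2}{\omega}\sin(a\omega)$$

and from the duality property,

$$\mathcal{F}\!\left[\frac{2}{t}\sin(at)\right] = 2\pi\,p_a(-\omega)$$

Thus,

$$\mathcal{F}\!\left[\frac{\sin(at)}{\pi t}\right] = \frac{1}{2\pi}\,\mathcal{F}\!\left[\frac{2}{t}\sin(at)\right] = p_a(-\omega) = p_a(\omega)$$

where

$$p_a(\omega) = \begin{cases} 1, & |\omega| < a \\ 0, & |\omega| > a \end{cases}$$

[Figure: X(ω) versus ω, a rectangle of height 1 extending from ω = −a to ω = a]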

5. CONVOLUTION


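The convolution of two signals x1(t) and x2(t), denoted by x1(t) ∗ x2(t), is a new signal x(t), defined by

$$x(t) = x_1(t) * x_2(t) = \int_{-\infty}^{\infty} x_1(\tau)\,x_2(t - \tau)\,d\tau \tag{5.1}$$

5.1 Properties of Convolution

$$x_1(t) * x_2(t) = x_2(t) * x_1(t) \qquad \text{(Commutative property)}$$

$$[x_1(t) * x_2(t)] * x_3(t) = x_1(t) * [x_2(t) * x_3(t)] \qquad \text{(Associative property)} \tag{5.2}$$

$$x_1(t) * [x_2(t) + x_3(t)] = x_1(t) * x_2(t) + x_1(t) * x_3(t) \qquad \text{(Distributive property)}$$

Convolution with δ functions:

$$x(t) * \delta(t) = x(t), \qquad x(t) * \delta(t - t_o) = x(t - t_o) \tag{5.3}$$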

5.2 Convolution Theorem


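• Time convolution:

Let x1(t) ↔ X1(ω) and x2(t) ↔ X2(ω). Then

$$x_1(t) * x_2(t) \leftrightarrow X_1(\omega)\,X_2(\omega) \tag{5.4}$$

• Frequency convolution:

$$x_1(t)\,x_2(t) \leftrightarrow \frac{1}{2\pi}\,X_1(\omega) * X_2(\omega) \tag{5.5}$$

Example 5.1: Verify Eq. (5.4) and (5.5).

Sol. Let X(ω) = X1(ω) X2(ω). Then, using Eq. (4.2),

$$x(t) = \mathcal{F}^{-1}[X(\omega)] = \frac{1}{2\pi} \int_{-\infty}^{\infty} X_1(\omega)\,X_2(\omega)\,e^{j\omega t}\,d\omega$$

Writing X1(ω) as the Fourier integral of x1(τ), per Eq. (4.1), gives

$$x(t) = \frac{1}{2\pi} \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} x_1(\tau)\,e^{-j\omega\tau}\,X_2(\omega)\,e^{j\omega t}\,d\tau\,d\omega$$

$$= \int_{-\infty}^{\infty} x_1(\tau) \left[ \frac{1}{2\pi} \int_{-\infty}^{\infty} X_2(\omega)\,e^{j\omega(t - \tau)}\,d\omega \right] d\tau$$

$$= \int_{-\infty}^{\infty} x_1(\tau)\,x_2(t - \tau)\,d\tau = x_1(t) * x_2(t)$$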

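Example 5.2: Find the convolution of the two signals

$$x_1(t) = e^{-\alpha t}\,u(t) \quad \text{and} \quad x_2(t) = e^{-\beta t}\,u(t), \qquad \alpha > \beta > 0$$

Sol. The steps involved in the convolution are illustrated in Fig. (5.1) for α = 4 and β = 2. Mathematically, we can form the integrand by direct substitution:

$$x(t) = x_1(t) * x_2(t) = \int_{-\infty}^{\infty} e^{-\alpha\lambda}\,u(\lambda)\,e^{-\beta(t - \lambda)}\,u(t - \lambda)\,d\lambda$$

But

$$u(\lambda)\,u(t - \lambda) = \begin{cases} 0, & \lambda < 0 \\ 1, & 0 < \lambda < t \\ 0, & \lambda > t \end{cases}$$

thus

$$x(t) = \begin{cases} 0, & t < 0 \\ \displaystyle\int_{0}^{t} e^{-\beta t}\,e^{-(\alpha - \beta)\lambda}\,d\lambda = \frac{1}{\alpha - \beta}\left(e^{-\beta t} - e^{-\alpha t}\right), & t \geq 0 \end{cases}$$

This result for x(t) is also shown in Fig. (5.1).

[Fig. (5.1): steps of the convolution for α = 4 and β = 2, and the resulting x(t)]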
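
The closed-form result for two decaying exponentials can be compared with a direct numerical evaluation of the convolution integral (5.1). The sketch uses α = 4 and β = 2 as in Fig. (5.1); the truncated integration range and the helper `convolve_at` are assumptions for illustration.

```python
import math

def u(t):
    """Unit step (the value at t = 0 does not affect the integral)."""
    return 1.0 if t >= 0 else 0.0

def convolve_at(x1, x2, t, lo=-1.0, hi=5.0, steps=60_000):
    """Midpoint-rule approximation of the convolution integral
    of x1(lam) * x2(t - lam) over lam in [lo, hi]."""
    d = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        lam = lo + (k + 0.5) * d
        total += x1(lam) * x2(t - lam)
    return total * d

alpha, beta = 4.0, 2.0
x1 = lambda t: math.exp(-alpha * t) * u(t)
x2 = lambda t: math.exp(-beta * t) * u(t)

def closed_form(t):
    if t < 0:
        return 0.0
    return (math.exp(-beta * t) - math.exp(-alpha * t)) / (alpha - beta)

for t in (-0.5, 0.5, 1.0, 2.0):
    print(t, convolve_at(x1, x2, t), closed_form(t))
```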

Example 5.3: Find the convolution of the two signals shown in Fig.(5.2a) and Fig.(5.2b).

Sol. Here, f(t) has a simpler mathematical description than that of g(t), so it is preferable to invert f(t). Hence we shall determine g(t) ∗ f(t) rather than f(t) ∗ g(t). Thus

$$c(t) = g(t) * f(t) = \int_{-\infty}^{\infty} g(\tau)\,f(t - \tau)\,d\tau$$

Fig.(5.2c) shows g(τ) and f(−τ), whereas Fig.(5.2d) shows g(τ) and f(t−τ), which is f(−τ) shifted by t. Because the edges of f(−τ) are at τ = −1 and 1, the edges of f(t−τ) are at −1+t and 1+t. The two functions overlap over the interval (0, 1+t), so that

$$c(t) = \int_{-\infty}^{\infty} g(\tau)\,f(t - \tau)\,d\tau = \int_{0}^{1+t} \frac{1}{3}\,\tau\,d\tau = \frac{1}{6}(t + 1)^2, \qquad -1 \leq t \leq 1 \tag{5.6}$$

This situation, depicted in Fig.(5.2d), is valid only for −1 ≤ t ≤ 1. For 1 < t < 2, the situation is as illustrated in Fig.(5.2e). The two functions overlap only over the range −1+t to 1+t. Therefore

$$c(t) = \int_{-1+t}^{1+t} \frac{1}{3}\,\tau\,d\tau = \frac{2}{3}\,t, \qquad 1 \leq t \leq 2 \tag{5.7}$$

At the transition point t = 1, both Eq.(5.6) and Eq.(5.7) yield a value of 2/3, i.e., c(1) = 2/3. For 2 ≤ t < 4 the situation is as shown in Fig.(5.2f). g(τ) and f(t−τ) overlap over the interval from −1+t to 3, so that

$$c(t) = \int_{-1+t}^{3} \frac{1}{3}\,\tau\,d\tau = -\frac{1}{6}\left(t^2 - 2t - 8\right), \qquad 2 \leq t < 4 \tag{5.8}$$

Again, both Eqs. (5.7) and (5.8) apply at the transition point t = 2, where each gives c(2) = 4/3. For t ≥ 4, f(t−τ) does not overlap g(τ), as depicted in Fig.(5.2g). Consequently

$$c(t) = 0, \qquad t \geq 4 \tag{5.9}$$

For t < −1 there is no overlap between the two functions (see Fig.(5.2h)), so that

$$c(t) = 0, \qquad t < -1 \tag{5.10}$$

Fig.(5.2i) shows c(t) plotted according to Eqs.(5.6) through (5.10).

[Fig.(5.2): the signals f(t) and g(t), the shifted and inverted f(t−τ) for each range of t, and the resulting c(t)]
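
The piecewise result in Eqs. (5.6) through (5.10) can be checked numerically. Since Fig.(5.2) is not reproduced here, the signal definitions below are inferred from the integrands and limits in the solution and should be read as assumptions: f(t) = 1 for |t| < 1 and g(t) = t/3 for 0 < t < 3, both zero elsewhere.

```python
def f(t):
    """Assumed rectangular pulse inferred from the edges at -1 and 1."""
    return 1.0 if -1.0 < t < 1.0 else 0.0

def g(t):
    """Assumed ramp inferred from the integrand tau/3 on (0, 3)."""
    return t / 3.0 if 0.0 < t < 3.0 else 0.0

def conv_gf(t, lo=-1.0, hi=4.0, steps=50_000):
    """Midpoint-rule approximation of c(t) = integral of g(tau) f(t - tau) d tau."""
    d = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        tau = lo + (k + 0.5) * d
        total += g(tau) * f(t - tau)
    return total * d

def c_closed(t):
    """Piecewise closed form from Eqs. (5.6) to (5.10)."""
    if t < -1 or t >= 4:
        return 0.0
    if t <= 1:
        return (t + 1) ** 2 / 6
    if t <= 2:
        return 2 * t / 3
    return -(t * t - 2 * t - 8) / 6

for t in (-2.0, 0.0, 1.0, 1.5, 2.0, 3.0, 5.0):
    print(t, conv_gf(t), c_closed(t))
```

The same sampling-and-summing idea could serve as a starting point for the MATLAB homework, although the signals in Fig. (5.3) would have to be substituted.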

H.W: Find the convolution of the two signals f(t) and g(t) depicted in Fig. (5.3). Write a MATLAB program to accomplish this task.

[Fig. (5.3): the signals f(t) and g(t) for the homework problem]

6. CORRELATION


6.1 Correlation of Energy Signals


Let x1(t) and x2(t) be real-valued energy signals. Then the cross-correlation function R12(τ) of x1(t) and x2(t) is defined by

$$R_{12}(\tau) = \int_{-\infty}^{\infty} x_1(t)\,x_2(t - \tau)\,dt \tag{6.1}$$

The autocorrelation function of x1(t) is defined as

$$R_{11}(\tau) = \int_{-\infty}^{\infty} x_1(t)\,x_1(t - \tau)\,dt \tag{6.2}$$

Properties of correlation functions:

$$R_{12}(\tau) = R_{21}(-\tau) \tag{6.3}$$

$$R_{11}(\tau) = R_{11}(-\tau) \tag{6.4}$$

$$R_{11}(0) = \int_{-\infty}^{\infty} [x_1(t)]^2\,dt = E \tag{6.2}$$

where E is the normalized energy content of x1(t).

Example 6.1: Find and sketch the autocorrelation function for x1(t) = e^{−at} u(t), a > 0.

Sol.

$$R_{11}(\tau) = \int_{-\infty}^{\infty} x_1(t)\,x_1(t - \tau)\,dt = \int_{-\infty}^{\infty} e^{-at}\,u(t)\,e^{-a(t - \tau)}\,u(t - \tau)\,dt = e^{a\tau} \int_{-\infty}^{\infty} e^{-2at}\,u(t)\,u(t - \tau)\,dt$$

For τ > 0,

$$u(t)\,u(t - \tau) = \begin{cases} 1, & t > \tau \\ 0, & t < \tau \end{cases}$$

Thus,

$$R_{11}(\tau) = e^{a\tau} \int_{\tau}^{\infty} e^{-2at}\,dt = \frac{1}{2a}\,e^{-a\tau}$$

Since R11(τ) is an even function of τ, we conclude that

$$R_{11}(\tau) = \frac{1}{2a}\,e^{-a|\tau|}, \qquad a > 0$$

[Figure: sketch of R11(τ) versus τ, a two-sided decaying exponential peaking at τ = 0]
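
The closed form of Example 6.1 can be compared with a direct numerical evaluation of Eq. (6.2). The value a = 1, the truncated integration range, and the helper name `autocorr` are assumptions for illustration.

```python
import math

a = 1.0  # assumed decay constant, a > 0

def x1(t):
    """x1(t) = exp(-a t) u(t)."""
    return math.exp(-a * t) if t >= 0 else 0.0

def autocorr(x, tau, lo=-5.0, hi=35.0, steps=80_000):
    """Midpoint-rule approximation of R(tau) = integral of x(t) x(t - tau) dt."""
    dt = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        t = lo + (k + 0.5) * dt
        total += x(t) * x(t - tau)
    return total * dt

for tau in (-1.0, 0.0, 0.5, 2.0):
    print(tau, autocorr(x1, tau), math.exp(-a * abs(tau)) / (2 * a))
```

The value at τ = 0 approximates the signal energy 1/(2a), and the values at ±τ agree, illustrating the even symmetry of R11(τ).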

6.2 Energy Spectral Density


Let R11(τ) be the autocorrelation function of x1(t). Then

$$S_{11}(\omega) = \mathcal{F}[R_{11}(\tau)] = \int_{-\infty}^{\infty} R_{11}(\tau)\,e^{-j\omega\tau}\,d\tau \tag{6.3}$$

is called the energy spectral density of x1(t). Now taking the inverse Fourier transform of Eq. (6.3), we have

$$R_{11}(\tau) = \mathcal{F}^{-1}[S_{11}(\omega)] = \frac{1}{2\pi} \int_{-\infty}^{\infty} S_{11}(\omega)\,e^{j\omega\tau}\,d\omega \tag{6.4}$$

If x1(t) is real, then we have

$$S_{11}(\omega) = \mathcal{F}[R_{11}(\tau)] = |X_1(\omega)|^2 \tag{6.5}$$

and

$$R_{11}(\tau) = \frac{1}{2\pi} \int_{-\infty}^{\infty} |X_1(\omega)|^2\,e^{j\omega\tau}\,d\omega \tag{6.6}$$

Setting τ = 0, we have

$$R_{11}(0) = \frac{1}{2\pi} \int_{-\infty}^{\infty} |X_1(\omega)|^2\,d\omega \tag{6.7}$$

Thus, from Eq.(6.2),

$$E = \int_{-\infty}^{\infty} |x_1(t)|^2\,dt = \frac{1}{2\pi} \int_{-\infty}^{\infty} |X_1(\omega)|^2\,d\omega \tag{6.8}$$

This is the reason why S11(ω) = |X1(ω)|² is called the energy spectral density of x1(t). Equation (6.8) is also known as Parseval's theorem for the Fourier transform.

Example 6.2: Find the energy of the signal f(t) = e^{−at} u(t). Determine the frequency W (rad/s) so that the energy contributed by the spectral components of all frequencies below W is 95% of the signal energy Ef.

Sol. We have

$$E_f = \int_{-\infty}^{\infty} f^2(t)\,dt = \int_{0}^{\infty} e^{-2at}\,dt = \frac{1}{2a}$$

We can verify this result by Parseval’s theorem. For this signal

$$F(\omega) = \frac{1}{j\omega + a}$$

and

$$E_f = \frac{1}{\pi} \int_{0}^{\infty} |F(\omega)|^2\,d\omega = \frac{1}{\pi} \int_{0}^{\infty} \frac{1}{\omega^2 + a^2}\,d\omega = \left.\frac{1}{\pi a}\tan^{-1}\!\left(\frac{\omega}{a}\right)\right|_{0}^{\infty} = \frac{1}{2a}$$

The band ω = 0 to ω = W contains 95% of the signal energy, that is, 0.95/2a. Therefore,

$$\frac{0.95}{2a} = \frac{1}{\pi} \int_{0}^{W} \frac{d\omega}{\omega^2 + a^2} = \left.\frac{1}{\pi a}\tan^{-1}\!\left(\frac{\omega}{a}\right)\right|_{0}^{W} = \frac{1}{\pi a}\tan^{-1}\!\left(\frac{W}{a}\right)$$

or

$$\frac{0.95\pi}{2} = \tan^{-1}\!\left(\frac{W}{a}\right) \implies W = 12.706a \ \text{rad/s}$$

This result indicates that the spectral components of f(t) in the band from 0 (DC) to 12.706a rad/s (2.02a Hz) contribute 95% of the total signal energy; the remaining spectral components (from 12.706a rad/s to ∞) contribute only 5% of the signal energy.
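
The bandwidth calculation in this example reduces to solving (2/π) tan⁻¹(W/a) = 0.95 for W, which the following sketch evaluates. The function name and the choice a = 1 are assumptions for illustration; the result scales linearly with a.

```python
import math

def energy_fraction_bandwidth(a, fraction):
    """Bandwidth W (rad/s) such that the band 0..W holds the given fraction
    of the energy of exp(-a t) u(t), from (2/pi) * atan(W/a) = fraction."""
    return a * math.tan(fraction * math.pi / 2)

a = 1.0
W = energy_fraction_bandwidth(a, 0.95)
print(W, W / (2 * math.pi))   # bandwidth in rad/s and in Hz

# Cross-check: energy in the band 0..W divided by the total energy 1/(2a).
band_energy = math.atan(W / a) / (math.pi * a)
print(band_energy / (1 / (2 * a)))
```

Changing the fraction shows how quickly the required bandwidth grows as the target approaches 100% of the energy.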

6.3 Correlation of Power Signals:


The time-average autocorrelation function R̄11(τ) of a real-valued power signal x1(t) is defined as

$$\bar{R}_{11}(\tau) = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} x_1(t)\,x_1(t - \tau)\,dt \tag{6.9}$$

Note that

$$\bar{R}_{11}(0) = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} [x_1(t)]^2\,dt = P_1 \tag{6.10}$$

If x1(t) is periodic with period To, then

$$\bar{R}_{11}(\tau) = \frac{1}{T_o} \int_{-T_o/2}^{T_o/2} x_1(t)\,x_1(t - \tau)\,dt \tag{6.11}$$

6.4 Power Spectral Density

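The power spectral density of x1(t), denoted S̄11(ω), is defined as

$$\bar{S}_{11}(\omega) = \mathcal{F}[\bar{R}_{11}(\tau)] = \int_{-\infty}^{\infty} \bar{R}_{11}(\tau)\,e^{-j\omega\tau}\,d\tau \tag{6.12}$$

Then

$$\bar{R}_{11}(\tau) = \mathcal{F}^{-1}[\bar{S}_{11}(\omega)] = \frac{1}{2\pi} \int_{-\infty}^{\infty} \bar{S}_{11}(\omega)\,e^{j\omega\tau}\,d\omega \tag{6.13}$$

Setting τ = 0, we get

$$\bar{R}_{11}(0) = \frac{1}{2\pi} \int_{-\infty}^{\infty} \bar{S}_{11}(\omega)\,d\omega \tag{6.14}$$

Thus, from Eq. (6.10),

$$P_1 = \lim_{T \to \infty} \frac{1}{T} \int_{-T/2}^{T/2} [x_1(t)]^2\,dt = \frac{1}{2\pi} \int_{-\infty}^{\infty} \bar{S}_{11}(\omega)\,d\omega \tag{6.15}$$

This is the reason why S̄11(ω) is called the power spectral density of x1(t).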

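Example 6.3: Verify Eq.(6.15) for the sine wave signal x1(t) = A sin(ω1t + φ), where ω1 = 2π/T1.

Sol. From Eq.(6.11),

$$\bar{R}_{11}(\tau) = \frac{1}{T_1} \int_{-T_1/2}^{T_1/2} x_1(t)\,x_1(t - \tau)\,dt$$

$$= \frac{A^2}{T_1} \int_{-T_1/2}^{T_1/2} \sin(\omega_1 t + \phi)\,\sin[\omega_1(t - \tau) + \phi]\,dt$$

$$= \frac{A^2}{2T_1} \int_{-T_1/2}^{T_1/2} \left[\cos(\omega_1\tau) - \cos(2\omega_1 t + 2\phi - \omega_1\tau)\right] dt$$

$$= \frac{A^2}{2T_1}\cos(\omega_1\tau) \int_{-T_1/2}^{T_1/2} dt = \frac{A^2}{2}\cos(\omega_1\tau)$$

since the second cosine integrates to zero over a whole number of its periods, and

$$\bar{R}_{11}(0) = \frac{A^2}{2}$$

From Eq.(6.12),

$$\bar{S}_{11}(\omega) = \mathcal{F}[\bar{R}_{11}(\tau)] = \mathcal{F}\!\left[\frac{A^2}{2}\cos(\omega_1\tau)\right] = \frac{\pi A^2}{2}\,\delta(\omega - \omega_1) + \frac{\pi A^2}{2}\,\delta(\omega + \omega_1)$$

Then

$$\frac{1}{2\pi} \int_{-\infty}^{\infty} \bar{S}_{11}(\omega)\,d\omega = \frac{1}{2\pi} \int_{-\infty}^{\infty} \left[\frac{\pi A^2}{2}\,\delta(\omega - \omega_1) + \frac{\pi A^2}{2}\,\delta(\omega + \omega_1)\right] d\omega$$

$$= \frac{A^2}{4} \int_{-\infty}^{\infty} \left[\delta(\omega - \omega_1) + \delta(\omega + \omega_1)\right] d\omega = \frac{A^2}{4}(1 + 1) = \frac{A^2}{2} = P_1$$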
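
The time-average autocorrelation of the sine wave can also be evaluated numerically from Eq. (6.11) and compared with (A²/2) cos(ω1τ). The amplitude, period, and phase below are arbitrary assumptions chosen for illustration.

```python
import math

def time_avg_autocorr(x, tau, T, steps=10_000):
    """Midpoint-rule approximation of (1/T) * integral over one period
    of x(t) x(t - tau) dt, for a signal x with period T."""
    dt = T / steps
    total = 0.0
    for k in range(steps):
        t = -T / 2 + (k + 0.5) * dt
        total += x(t) * x(t - tau)
    return total * dt / T

A, T1, phi = 2.0, 0.5, 0.3      # assumed amplitude, period, and phase
w1 = 2 * math.pi / T1

def x1(t):
    return A * math.sin(w1 * t + phi)

for tau in (0.0, 0.1, 0.25):
    print(tau, time_avg_autocorr(x1, tau, T1), (A * A / 2) * math.cos(w1 * tau))
```

At τ = 0 the result is the average power A²/2, independent of the phase φ.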

7. SYSTEM REPRESENTATION AND CLASSIFICATION


7.1 System Representation:


A system is a mathematical model of a physical process that relates the input signal (source or excitation signal) to the output signal (response signal).

Let x(t) and y(t) be the input and output signals, respectively, of a system. Then the system is

viewed as a mapping of x(t) into y(t). Symbolically, this is expressed as

𝑦𝑦(𝑡𝑡) = 𝒯𝒯[𝑥𝑥(𝑡𝑡)]

(7.1)

where 𝒯𝒯 is the operator that produces output y(t) from input x(t), as illustrated in Fig.7.1.

[Fig. 7.1: block diagram of a system 𝒯 with input x(t) and output y(t)]

7.2 System Classification


7.2.1 Continuous-Time and Discrete-Time Systems


If the input and output signals x(t) and y(t) are continuous-time signals, then the system is

called a continuous-time system. If the input and output signals are discrete-time signals or

sequences, then the system is called a discrete-time system.

7.2.2 Linear Systems


If the operator 𝒯𝒯 in Eq. (7.1) satisfies the following two conditions, then 𝒯𝒯 is called a linear

operator and the system represented by 𝒯 is called a linear system:

• Additivity

$$\mathcal{T}[x_1(t) + x_2(t)] = \mathcal{T}[x_1(t)] + \mathcal{T}[x_2(t)] = y_1(t) + y_2(t) \tag{7.2}$$

for all input signals x1(t) and x2(t).

• Homogeneity

$$\mathcal{T}[a\,x(t)] = a\,\mathcal{T}[x(t)] = a\,y(t) \tag{7.3}$$

for all input signals x(t) and scalars a.

Any system that does not satisfy Eq. (7.2) and/or Eq. (7.3) is classified as a nonlinear system.
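
The two linearity conditions can be exercised numerically on sampled signals. The sketch below uses two hypothetical systems that are not taken from the notes, a constant gain and a squarer. A failed check proves nonlinearity, whereas a passed check on one pair of inputs is only supporting evidence.

```python
def check_linearity(system, x1, x2, a=2.5, tol=1e-9):
    """Test additivity (7.2) and homogeneity (7.3) on one pair of sampled inputs.

    A False result proves the system is nonlinear; a True result is only
    evidence, since just one pair of inputs and one scalar are tried.
    """
    y1, y2 = system(x1), system(x2)
    y_sum = system([p + q for p, q in zip(x1, x2)])
    y_scaled = system([a * p for p in x1])
    additive = all(abs(s - (p + q)) <= tol for s, p, q in zip(y_sum, y1, y2))
    homogeneous = all(abs(s - a * p) <= tol for s, p in zip(y_scaled, y1))
    return additive and homogeneous

# Hypothetical systems acting sample by sample.
def gain(x):
    return [3.0 * v for v in x]      # y(t) = 3 x(t)

def squarer(x):
    return [v * v for v in x]        # y(t) = x(t)^2

x1 = [0.0, 1.0, -2.0, 0.5]
x2 = [1.0, 1.0, 4.0, -3.0]
print(check_linearity(gain, x1, x2))      # passes both conditions
print(check_linearity(squarer, x1, x2))   # violates additivity and homogeneity
```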


Example 7.1: For each of the following systems, determine whether the system is linear.


Thus, the system represented by (b) is not linear. The system also does not satisfy the

homogeneity condition.

7.2.3 Time Invariant Systems


If the system satisfies the following condition, then the system is called a time-invariant or

fixed system:

$$\mathcal{T}[x(t - t_o)] = y(t - t_o) \tag{7.4}$$

where to is any real constant. Equation (7.4) indicates that the delayed input gives delayed

output. A system which does not satisfy Eq. (7.4) is called a time-varying system.

7.2.4 Linear Time-Invariant (LTI) Systems:


If the system is linear and time-invariant, then the system is called a linear time-invariant

(LTI) system.

8. IMPULSE RESPONSE AND FREQUENCY RESPONSE


8.1 Impulse Response


The impulse response h(t) of an LTI system is defined to be the response of the system when

the input is δ(t), that is,

$$h(t) = \mathcal{T}[\delta(t)] \tag{8.1}$$

The function h(t) is arbitrary, and it need not be zero for t < 0. If

$$h(t) = 0 \quad \text{for } t < 0 \tag{8.2}$$

then the system is called causal.

8.2 Response to an Arbitrary Input:


The response y(t) of an LTI system to an arbitrary input x(t) can be expressed as the convolution of x(t) and the impulse response h(t) of the system, that is,

$$y(t) = x(t) * h(t) = \int_{-\infty}^{\infty} x(\tau)\,h(t - \tau)\,d\tau \tag{8.3}$$

Since the convolution is commutative, we also can express the output as

$$y(t) = h(t) * x(t) = \int_{-\infty}^{\infty} h(\tau)\,x(t - \tau)\,d\tau \tag{8.4}$$

8.3 Response of Causal Systems


From Eqs. (8.2) and (8.3) or (8.4), the response y(t) of a causal LTI system is given by

$$y(t) = \int_{-\infty}^{t} x(\tau)\,h(t - \tau)\,d\tau = \int_{0}^{\infty} x(t - \tau)\,h(\tau)\,d\tau \tag{8.5}$$

A signal x(t) is called causal if it has zero values for t < 0. Thus if the input x(t) is also causal, then

$$y(t) = \int_{0}^{t} x(\tau)\,h(t - \tau)\,d\tau = \int_{0}^{t} x(t - \tau)\,h(\tau)\,d\tau \tag{8.6}$$

8.4 Frequency Response


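Applying the time convolution theorem of the Fourier transform, we obtain

$$Y(\omega) = X(\omega)\,H(\omega) \tag{8.7}$$

H(ω) is referred to as the frequency response (or transfer function) of the system. Thus

$$H(\omega) = \mathcal{F}[h(t)] = \frac{Y(\omega)}{X(\omega)} \tag{8.8}$$

[Figure: an LTI system with impulse response h(t) and frequency response H(ω). The input δ(t), with spectrum 1, produces the output h(t), with spectrum H(ω); the input x(t) ↔ X(ω) produces the output y(t) = x(t) ∗ h(t) ↔ Y(ω) = X(ω)H(ω)]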

8.5 Distortionless Transmission

For distortionless transmission through a system, we require that the exact input signal shape be reproduced at the output. Therefore, if x(t) is the input signal, the required output is

$$y(t) = K\,x(t - t_d) \tag{8.9}$$

where td is the time delay and K is a gain constant. Taking the Fourier transform of both sides of Eq. (8.9), we get

$$Y(\omega) = K\,e^{-j\omega t_d}\,X(\omega) \tag{8.10}$$

For distortionless transmission the system must therefore have

$$H(\omega) = \frac{Y(\omega)}{X(\omega)} = K\,e^{-j\omega t_d} \tag{8.11}$$

That is, the amplitude of H(ω) must be constant over the entire frequency range, and the phase of H(ω) must be linear with frequency.


9. RELATIONSHIP BETWEEN INPUT AND OUTPUT SPECTRAL DENSITIES


Consider an LTI system with frequency response H(ω), input x(t), and output y(t). If x(t) and y(t) are energy signals, then by Eq.(6.5) their energy spectral densities are Sxx(ω) = |X(ω)|² and Syy(ω) = |Y(ω)|², respectively. Since Y(ω) = H(ω)X(ω), it follows that

$$S_{yy}(\omega) = |H(\omega)|^2\,S_{xx}(\omega) \tag{9.1}$$

A similar relationship holds for power signals and power spectral densities; that is,

$$\bar{S}_{yy}(\omega) = |H(\omega)|^2\,\bar{S}_{xx}(\omega) \tag{9.2}$$

Example 9.1: Consider a system with H(ω) = 1/(1 + jω) and input x(t) = e^{−2t} u(t). Find the energy spectral density of the output.

Sol.

$$x(t) = e^{-2t}\,u(t) \leftrightarrow X(\omega) = \frac{1}{j\omega + 2}$$

$$S_{xx}(\omega) = |X(\omega)|^2 = \frac{1}{\omega^2 + 4}$$

The energy spectral density of the output is

$$S_{yy}(\omega) = |H(\omega)|^2\,|X(\omega)|^2 = \frac{1}{(\omega^2 + 1)(\omega^2 + 4)}$$