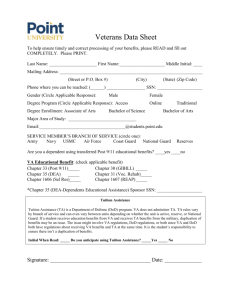

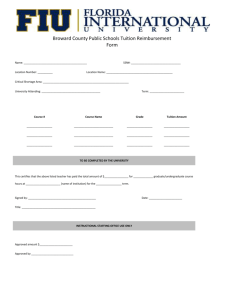

Federal Educational Assistance Programs Available to Service Members

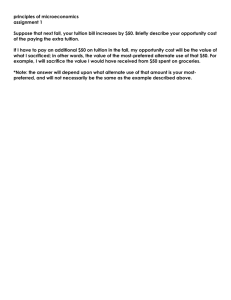
