Efficient emulation of high-dimensional outputs using manifold learning: Theory and applications


Akeel Shah, Wei Xing, Vasilis Triantafyllidis

(WCPM)

Prasanth Nair

(University of Toronto)

• Problems and definition of emulator

• Types and basic construction

• ‘Data’ from PDE models

• Gaussian process emulation (scalar case)

• Gaussian process emulation for PDE outputs

• Gaussian process emulation for PDE outputs using

dimensionality reduction

• Limitations of linear dimensionality reduction

• Nonlinear dimensionality reduction

• Application to PDE output data

• Emulation of multiple fields

WCPM 19/2/2015

• High fidelity simulation of fields, e.g., velocity or temperature

field, in many computational physics and engineering problems

→ Design optimization (4 inputs, 10 values each = $10^4$ cases!)

→ Model calibration, validation, sensitivity analysis, uncertainty analysis (parameter/structural, numerical error)

• Computational cost of Monte Carlo based methods for sensitivity/uncertainty analysis can be prohibitive

• For field problems, the dimensionality of the output space is typically high, e.g., numerical grid $100 \times 100 \times 100 = 10^6$ points

• Use emulators (meta-model, surrogate) to replace calls to full

simulation model (simulator)

• Rapidly predict and visualize behavior of complex systems as

functions of design parameters


• How to construct emulators?

• Two basic approaches:

• Data driven (statistical)

• Physics based

• Data-driven emulators constructed by applying function

approximation/machine learning (e.g., neural network,

Gaussian process modeling) to input-output data

• Physics-based emulation works directly with the PDE

model to achieve model order reduction, e.g. reduced basis

expansion. No natural way of dealing with nonlinearities

• Both require careful design of experiment


• Nonlinear PDE model

To illustrate the types of problems under consideration, consider a parameterized, nonlinear, time-dependent system of order-$n$ PDEs for dependent variables $u_i(\mathbf{q}, t; \mathbf{x})$:

$$\partial_t u_i + F_i(\mathbf{q}, t, \mathbf{u}, D\mathbf{u}, D^2\mathbf{u}, \ldots, D^n\mathbf{u}; \mathbf{x}) = 0 \quad \text{in } \Omega \times (0, T], \quad i = 1, \ldots, J \qquad (1)$$

in which $\mathbf{u} = (u_1, \ldots, u_J)^T$, $\mathbf{x} \in \mathcal{X} \subset \mathbb{R}^l$ is a vector of $l$ parameters, $t$ denotes time, and $\Omega \subset \mathbb{R}^2$ is the physical domain over which the problem is defined. The spatial variable is denoted $\mathbf{q} = (\xi, \eta)$. $F_i$, $i = 1, \ldots, J$, are nonlinear, parameterized functions of their arguments.

For a given multi-index $\alpha = (\alpha_\xi, \alpha_\eta)$, $D^\alpha u_i = \partial_\xi^{\alpha_\xi} \partial_\eta^{\alpha_\eta} u_i$. For a non-negative integer $k$, let $D^k u_i = \{D^\alpha u_i : |\alpha| = k\}$, where $|\alpha| := \alpha_\xi + \alpha_\eta$ is the order of the multi-index, denote the set of all partial derivatives of $u_i$ of order $k$, and let $D^k \mathbf{u}$ collect these sets over all of the $u_i$.

It is assumed that a solution to (1), together with consistent initial/boundary conditions, can be computed for any value of $\mathbf{x}$. The model is referred to as a simulator. The quantities of interest could include any or all of the $u_i$, or functions derived from them.

• Consider 1 quantity of interest (steady state): $u(\mathbf{q}; \mathbf{x})$, in the case of a 2D steady-state problem, simulated or derived from simulations at specified points on a spatial grid $(\xi_i, \eta_j)$, $i = 1, \ldots, N_\xi$, $j = 1, \ldots, N_\eta$. The grid points need not be uniformly spaced, provided that the same grid is used for each simulation.

• Choose design inputs $\mathbf{x}^{(k)} \in \mathcal{X} \subset \mathbb{R}^l$, $k = 1, \ldots, m$

• Simulator gives outputs $u^{(k)}(\xi_i, \eta_j)$, $i = 1, \ldots, N_\xi$, $j = 1, \ldots, N_\eta$. We will use the compact notation $u^{(k)}_{i,j} := u^{(k)}(\xi_i, \eta_j)$. Each of the outputs can be vectorized as follows:

$$\mathbf{y}^{(k)} = \left(u^{(k)}_{1,1}, \ldots, u^{(k)}_{1,N_\eta}, u^{(k)}_{2,1}, \ldots, u^{(k)}_{2,N_\eta}, \ldots, u^{(k)}_{N_\xi,1}, \ldots, u^{(k)}_{N_\xi,N_\eta}\right)^T \in \mathbb{R}^d \qquad (2)$$

where $d = N_\xi \times N_\eta$.

[Figure: grid stencil around point $(i, j)$ with neighbours $(i - 1, j)$, $(i + 1, j)$, $(i, j - 1)$, $(i, j + 1)$]

• For the time-dependent case, treat time as an additional input parameter. In the case of time-dependent simulations with $N_t$ time steps in the temporal discretization, $t$ is treated as an additional input, $\mathbf{x} \mapsto (\mathbf{x}, t)$, and $\mathbf{y}^{(k)} \in \mathbb{R}^d$, where $d = N_\xi \times N_\eta$. The number of training points is then $m N_t$. This is a multivariate equivalent to the time input (TI) case defined by Conti and O'Hagan [13].

• For spatially uniform, time-dependent problems, $\mathbf{y}^{(k)} \in \mathbb{R}^d$, where $d = N_t$
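The vectorization in (2) corresponds to a row-major flattening of the $N_\xi \times N_\eta$ array of grid values. The following is a minimal NumPy sketch of that ordering; the grid sizes and the field values are made-up placeholders, not simulator output.

```python
import numpy as np

# Hypothetical grid sizes (placeholders, not values from the talk).
n_xi, n_eta = 3, 4

# Stand-in for one simulator output u^{(k)}(xi_i, eta_j) on the grid:
# entry [i, j] holds the value at grid point (xi_i, eta_j).
u_k = np.arange(n_xi * n_eta, dtype=float).reshape(n_xi, n_eta)

# Row-major (C-order) flattening reproduces the ordering in (2):
# u_{1,1}, ..., u_{1,N_eta}, u_{2,1}, ..., u_{N_xi,N_eta}.
y_k = u_k.reshape(-1, order="C")

# Stack m such vectors row-wise to form the m x d data matrix.
m = 5
Y = np.stack([(k + 1) * y_k for k in range(m)])

# The field is recovered by undoing the reshape.
u_back = Y[0].reshape(n_xi, n_eta)
```

Any fixed ordering would do, as long as the same one is used for every simulation and when mapping predictions back onto the grid.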

The same procedure can be extended to other quantities of interest from the time-dependent simulations. Quantities of interest could include one or more scalar/vector fields (e.g., temperature), which are functions of multiple inputs. Simultaneous emulation of multiple quantities is discussed in section 4 and Appendix C. For now it is assumed that a single scalar quantity is the target for emulation.

• Simulator can be considered as a mapping from the design space to the output space, $\boldsymbol{\eta}: \mathcal{X} \to \mathbb{R}^d$, taking inputs $\mathbf{x} \in \mathcal{X}$ to outputs $\mathbf{y} \in \mathbb{R}^d$. The outputs are the vectorized field (representing the PDE solution $u(\mathbf{q}; \mathbf{x})$ at the defined grid points)

• The goal of emulation is to approximate this mapping: learn $\boldsymbol{\eta}$ given training data $\mathbf{y}^{(i)} = \boldsymbol{\eta}(\mathbf{x}^{(i)})$ generated at the design points $\mathbf{x}^{(i)}$, $i = 1, \ldots, m$

• The high dimension $d$ of the output space poses challenges in terms of computational efficiency: even for a $100 \times 100 \times 100$ grid in $\mathbb{R}^3$, $d = 10^6$

• Machine learning strategies for such general (input-output) problems, e.g., neural networks, linear regression, Gaussian process emulation

• GP emulation is attractive because it is Bayesian and non-parametric (no basis functions to choose)

• A stochastic process is a family of random variables, $W(\mathbf{x})$, indexed by elements $\mathbf{x} \in \mathcal{X}$, which can be discrete ($\mathcal{X} \subseteq \mathbb{N}$) or continuous ($\mathcal{X} \subseteq \mathbb{R}$)

• A GP is a stochastic process $S_{\mathbf{x}}$, $\mathbf{x} \in \mathcal{X}$: the joint probability density function restricted to a finite subset of the index set is multivariate Gaussian. A GP is specified by its mean and covariance functions

• Consider a scalar-valued simulator $\eta: \mathbb{R}^l \to \mathbb{R}$ (the standard scalar formulation follows Oakley and O'Hagan)

• Run as before at design points $\mathbf{x}^{(i)} \in \mathcal{X} \subset \mathbb{R}^l$

• Outputs are $y^{(i)} = \eta(\mathbf{x}^{(i)})$, $i = 1, \ldots, m$

• GP prior assumption: $\eta(\cdot)$ is regarded as a GP indexed by the inputs $\mathbf{x}$

$$\eta(\mathbf{x}) = \underbrace{\boldsymbol{\beta}^T \mathbf{h}(\mathbf{x})}_{\text{regression functions}} + \underbrace{G(\mathbf{x})}_{\text{zero-mean GP}} + \underbrace{\epsilon(\mathbf{x})}_{\text{measurement error}}$$

where $\mathbf{h}: \mathbb{R}^l \to \mathbb{R}^p$ is a vector of regression functions and $\boldsymbol{\beta}$ is a vector of regression coefficients

• An emulator provides a probability distribution for $\eta(\cdot)$ given data; the emulator is trained using $m$ simulator outputs

• In Bayesian linear regression (without noise)

$$\eta(\mathbf{x}) = \boldsymbol{\beta}^T \mathbf{h}(\mathbf{x})$$

• Place a prior distribution on the weight vector, e.g., Gaussian with i.i.d. coordinates, $\boldsymbol{\beta} \sim \mathcal{N}(\mathbf{0}, b^2 I)$

• Underlying function is distributed according to a Gaussian process indexed by the inputs $\mathbf{x}$, namely

$$\eta(\mathbf{x}) \sim \mathcal{GP}\left(0, \; b^2 \mathbf{h}(\mathbf{x})^T \mathbf{h}(\mathbf{x}')\right)$$

• GPE generalizes this concept by directly placing a covariance structure on the GP – allows for a much broader class of functions
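The link between the weight prior and the induced covariance $b^2 \mathbf{h}(\mathbf{x})^T \mathbf{h}(\mathbf{x}')$ can be checked numerically. The sketch below assumes a hypothetical quadratic basis $\mathbf{h}(x) = (1, x, x^2)$ and an arbitrary value of $b$; neither is taken from the talk.

```python
import numpy as np

rng = np.random.default_rng(0)

def h(x):
    """Hypothetical regression functions h(x) = (1, x, x^2) for scalar x."""
    x = np.asarray(x, dtype=float)
    return np.stack([np.ones_like(x), x, x**2], axis=-1)

b = 0.5                      # prior standard deviation of each weight (assumed)
x = np.array([-1.0, 0.3, 2.0])
H = h(x)                     # shape (3 points, 3 basis functions)

# Analytic covariance implied by the prior beta ~ N(0, b^2 I).
K_analytic = b**2 * H @ H.T

# Monte Carlo check: draw weight vectors and form eta(x) = beta^T h(x).
beta = rng.normal(0.0, b, size=(200_000, 3))
eta = beta @ H.T             # each row is one sampled function at the points x
K_empirical = eta.T @ eta / eta.shape[0]
```

The empirical covariance of the sampled functions should agree with the analytic one up to Monte Carlo error.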

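• Covariance function

$$k(\mathbf{x}, \mathbf{x}') = \sigma^2 c(\mathbf{x}, \mathbf{x}'; \boldsymbol{\theta})$$

• Stationary correlation function

$$c(\mathbf{x}, \mathbf{x}'; \boldsymbol{\theta}) = \exp\left(-(\mathbf{x} - \mathbf{x}')^T \mathrm{diag}(\theta_1, \ldots, \theta_l)(\mathbf{x} - \mathbf{x}')\right)$$

• Expresses smoothness of the GP (existence of the mean square derivatives to all orders)

• Conditional distribution of data $\mathbf{t} = (y^{(1)}, \ldots, y^{(m)})^T$

$$\mathbf{t} \mid \boldsymbol{\beta}, \sigma^2, \boldsymbol{\theta} \sim \mathcal{N}(H\boldsymbol{\beta}, \sigma^2 C)$$

$$H = \left[\mathbf{h}(\mathbf{x}^{(1)}) \; \ldots \; \mathbf{h}(\mathbf{x}^{(m)})\right]^T, \qquad [C]_{ij} = c(\mathbf{x}^{(i)}, \mathbf{x}^{(j)}; \boldsymbol{\theta})$$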
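As an illustration of how the correlation matrix $C$ is assembled from the design points, here is a small NumPy sketch. The design, the scale parameters `theta`, and the variance `sigma2` are arbitrary placeholder values.

```python
import numpy as np

def sq_exp_corr(X1, X2, theta):
    """c(x, x'; theta) = exp(-(x - x')^T diag(theta) (x - x')) for all pairs."""
    X1 = np.atleast_2d(X1)
    X2 = np.atleast_2d(X2)
    diff = X1[:, None, :] - X2[None, :, :]          # shape (m1, m2, l)
    return np.exp(-np.einsum("ijk,k,ijk->ij", diff, theta, diff))

# Hypothetical design: m = 4 points in l = 2 input dimensions.
X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
theta = np.array([0.5, 2.0])      # assumed scale parameters
sigma2 = 1.5                      # assumed process variance

C = sq_exp_corr(X, X, theta)      # [C]_ij = c(x^(i), x^(j); theta)
K = sigma2 * C                    # covariance k = sigma^2 * c
```

A larger $\theta_k$ makes the correlation decay faster along input direction $k$, which is why the second coordinate decorrelates more quickly in this example.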

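• Predictive distribution given data and hyperparameters for a test point $\mathbf{x}$

$$\eta(\cdot) \mid \boldsymbol{\beta}, \sigma^2, \boldsymbol{\theta}, \mathbf{t} \sim \mathcal{GP}\left(\mu'(\cdot), \; \sigma^2 \nu'(\cdot, \cdot)\right)$$

$$\mu'(\mathbf{x}) = \boldsymbol{\beta}^T \mathbf{h}(\mathbf{x}) + \mathbf{a}(\mathbf{x})^T C^{-1}(\mathbf{t} - H\boldsymbol{\beta})$$

$$\nu'(\mathbf{x}, \mathbf{x}') = c(\mathbf{x}, \mathbf{x}'; \boldsymbol{\theta}) - \mathbf{a}(\mathbf{x})^T C^{-1} \mathbf{a}(\mathbf{x}')$$

$$\mathbf{a}(\mathbf{x}) = \left(c(\mathbf{x}^{(1)}, \mathbf{x}; \boldsymbol{\theta}), \ldots, c(\mathbf{x}^{(m)}, \mathbf{x}; \boldsymbol{\theta})\right)^T$$

• Once hyperparameters are known, predictions can be made easily and quickly with simple formulae

• Formulae can be extended to predict several points simultaneously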
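The predictive formulae can be sketched in a few lines of NumPy. This is an illustration under simplifying assumptions, not the implementation used for the results: a constant regression function $h(\mathbf{x}) = 1$ with a fixed coefficient `beta`, fixed `theta`, a small jitter term for numerical stability, and a toy function standing in for the simulator.

```python
import numpy as np

def corr(X1, X2, theta):
    diff = X1[:, None, :] - X2[None, :, :]
    return np.exp(-np.sum(theta * diff**2, axis=-1))

def gp_predict(X, t, Xs, theta, beta=0.0):
    """Predictive mean and (unscaled) variance with constant h(x) = 1."""
    C = corr(X, X, theta) + 1e-10 * np.eye(len(X))   # small jitter for stability
    A = corr(X, Xs, theta)                           # columns are a(x*)
    L = np.linalg.cholesky(C)
    w = np.linalg.solve(L.T, np.linalg.solve(L, t - beta))   # C^{-1}(t - H beta)
    mean = beta + A.T @ w
    V = np.linalg.solve(L, A)
    var = 1.0 - np.sum(V**2, axis=0)                 # c(x, x) - a^T C^{-1} a
    return mean, var

# Toy stand-in for a scalar simulator (an assumption for illustration only).
eta = lambda x: np.sin(3.0 * x[:, 0])
X = np.linspace(0.0, 2.0, 9)[:, None]
t = eta(X)
Xs = np.array([[0.5], [1.1]])     # first test point coincides with a design point
mean, var = gp_predict(X, t, Xs, theta=np.array([4.0]))
```

At a design point the mean interpolates the data and the variance collapses to (numerically) zero; away from the design points the variance grows.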

• Fully Bayesian approach places a prior $f(\boldsymbol{\theta})$ on these hyperparameters and uses MCMC to present predictions as a sample

• Time consuming process so often ‘plug in’ (point) estimates are used

• Maximum a-posteriori (MAP) using a conjugate prior

• Maximum likelihood estimate (MLE)

$$\boldsymbol{\theta}_{MLE} = \arg\max_{\boldsymbol{\theta}} \left( -\frac{1}{2} \ln|C| - \frac{1}{2} \mathbf{t}^T C^{-1} \mathbf{t} - \frac{m}{2} \ln(2\pi) \right)$$

• Depends on number of samples $m$ (each likelihood evaluation involves the $m \times m$ matrix $C$)
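The log-likelihood above can be evaluated stably through a Cholesky factorization. The sketch below assumes toy data and replaces a proper optimizer with a crude grid search over a single scale parameter; the jitter value is also an assumption.

```python
import numpy as np

def corr(X, theta):
    diff = X[:, None, :] - X[None, :, :]
    return np.exp(-np.sum(theta * diff**2, axis=-1))

def log_likelihood(theta, X, t, jitter=1e-8):
    """-0.5 ln|C| - 0.5 t^T C^{-1} t - (m/2) ln(2 pi)."""
    m = len(t)
    C = corr(X, theta) + jitter * np.eye(m)
    L = np.linalg.cholesky(C)                # C = L L^T, O(m^3) cost
    alpha = np.linalg.solve(L, t)            # so t^T C^{-1} t = alpha^T alpha
    log_det = 2.0 * np.sum(np.log(np.diag(L)))
    return -0.5 * log_det - 0.5 * alpha @ alpha - 0.5 * m * np.log(2.0 * np.pi)

# Toy data (an assumption for illustration, not from the talk).
X = np.linspace(0.0, 2.0, 12)[:, None]
t = np.sin(3.0 * X[:, 0])

# Crude grid search over one scale parameter in place of a real optimizer.
grid = np.logspace(-2, 2, 41)
values = np.array([log_likelihood(np.array([th]), X, t) for th in grid])
theta_mle = grid[np.argmax(values)]
```

The cubic cost of the factorization is the reason the estimate becomes expensive as the number of samples $m$ grows.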

• How do we extend the scalar case to outputs in a high

dimensional space?

• A naive approach (Kennedy and O’Hagan (2001)) is to

treat the output index as an additional parameter and

perform scalar GPE – for emulating a whole field this

requires d computations

• How do we overcome this problem?

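• Consider (Paulo et al. (2012)) the linear model of coregionalization (Wackernagel (1995))

$$\boldsymbol{\eta}(\mathbf{x}) = A\mathbf{w}(\mathbf{x}), \qquad \mathbf{w}(\mathbf{x}) = (w_1(\mathbf{x}), \ldots, w_J(\mathbf{x}))^T$$

• The $w_i$ are independent zero-mean GPs; the matrix $A$ is independent of $\mathbf{x}$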

• Correlation functions for the GPs are $c_i(\cdot, \cdot; \boldsymbol{\theta}_i)$

• The covariance function for the multivariate GP is

$$\mathrm{Cov}(\boldsymbol{\eta}(\mathbf{x}), \boldsymbol{\eta}(\mathbf{x}')) = \sum_{i=1}^{J} \mathbf{a}_i \mathbf{a}_i^T c_i(\mathbf{x}, \mathbf{x}'; \boldsymbol{\theta}_i)$$

where $\mathbf{a}_i$ is the $i$th column of $A$

• Fairly general assumption – allows different scales to be incorporated by using a linear combination of correlation functions with different scale parameters $\boldsymbol{\theta}_i$

• We can assume a separable structure for the covariance by taking all $w_i$ to be i.i.d., i.e. a single correlation function

• Leads to a tractable problem (Conti & O’Hagan (2009); Rougier (2008)) – equivalent to a multivariate GP prior
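To make the structure of the coregionalization covariance concrete, the sketch below builds the full covariance matrix over a small design using Kronecker products. The mixing matrix `A`, the scale parameters, and the design are random or arbitrary placeholders.

```python
import numpy as np

def corr(X1, X2, theta):
    diff = X1[:, None, :] - X2[None, :, :]
    return np.exp(-np.sum(theta * diff**2, axis=-1))

rng = np.random.default_rng(1)
d, J, m = 3, 2, 4                      # output dim, number of latent GPs, design size
A = rng.normal(size=(d, J))            # hypothetical mixing matrix, columns a_i
thetas = [np.array([0.5]), np.array([5.0])]   # one scale parameter per latent GP
X = np.linspace(0.0, 1.0, m)[:, None]

# Full (m*d) x (m*d) covariance: sum_i kron(c_i(X, X), a_i a_i^T),
# so block (p, q) equals Cov(eta(x_p), eta(x_q)).
K_lmc = sum(np.kron(corr(X, X, th), np.outer(A[:, i], A[:, i]))
            for i, th in enumerate(thetas))

# Separable special case: one shared correlation function for all w_i.
K_sep = np.kron(corr(X, X, thetas[0]), A @ A.T)
```

In the separable case the covariance factorizes into an input part and an output part, which is what makes that special case tractable.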

• An alternative method is to use the data to find a ‘suitable’

A and at the same time restrict the number of univariate

GPs to those that contribute the ‘most’

• Leads to a reduction in dimensionality of the output space

(restrict to a linear subspace) – Higdon et al. (2008)

• The method relies only on principal component analysis (PCA)

• Singular value decomposition of data matrix (or eigendecomposition of sample covariance matrix) reorders

data according to variance in the d dimensions with

uncorrelated coefficients

• Natural basis (columns of A) for output space and

expansion in terms of coefficients (assumed to be GPs)


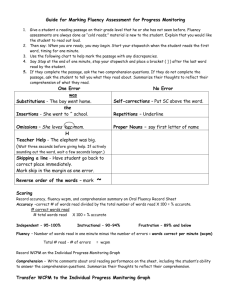

• Orthonormal basis $\mathbf{p}_j$, $j = 1, \ldots, d$, for $\mathbb{R}^d$. Select linear subspace: $\mathrm{span}\{\mathbf{p}_1, \ldots, \mathbf{p}_r\}$, $r \ll d$

• Coefficients in this basis $c_j^{(i)}$, $j = 1, \ldots, d$, $i = 1, \ldots, m$

• Expansion (restrict to subspace)

$$\mathbf{y} = \boldsymbol{\eta}(\mathbf{x}) \approx \sum_{j=1}^{r} c_j \mathbf{p}_j$$

Select a test point $\mathbf{x}$ for prediction
for j = 1 : r
    scalar GPE on training set $\{c_j^{(i)}, \mathbf{x}^{(i)}\}$, $i = 1, \ldots, m$ ⇒ prediction $c_j$
end
Approximate $\mathbf{y} = \boldsymbol{\eta}(\mathbf{x}) \approx \sum_{j=1}^{r} c_j \mathbf{p}_j$
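The PCA-based loop can be sketched end to end in NumPy. Everything specific here is an assumption made for illustration: the toy "simulator", the design, the fixed scale parameter, the zero-mean scalar GP, and the centring of the data before the SVD.

```python
import numpy as np

def corr(X1, X2, theta):
    diff = X1[:, None, :] - X2[None, :, :]
    return np.exp(-np.sum(theta * diff**2, axis=-1))

def gp_mean(X, z, Xs, theta, jitter=1e-8):
    """Zero-mean scalar GP predictive mean a(x)^T C^{-1} z (fixed theta)."""
    C = corr(X, X, theta) + jitter * np.eye(len(X))
    return corr(Xs, X, theta) @ np.linalg.solve(C, z)

# Toy "simulator" (an assumption): a field on d = 50 grid points, one input x.
s = np.linspace(0.0, 1.0, 50)
def simulator(x):
    return x * np.sin(2 * np.pi * s) + x**2 * np.cos(2 * np.pi * s)

X = np.linspace(0.0, 1.0, 15)[:, None]            # m = 15 design points
Y = np.array([simulator(x[0]) for x in X])        # m x d data matrix

# PCA via SVD of the centred data matrix; rows of Pt are the basis vectors p_j.
y_bar = Y.mean(axis=0)
U, S, Pt = np.linalg.svd(Y - y_bar, full_matrices=False)
r = 2
P = Pt[:r].T                                      # d x r basis
coeffs = (Y - y_bar) @ P                          # c_j^{(i)}, shape m x r

# One scalar GP per retained coefficient, then reconstruct the field.
x_test = np.array([[0.37]])
c_pred = np.array([gp_mean(X, coeffs[:, j], x_test, np.array([2.0]))[0]
                   for j in range(r)])
y_pred = y_bar + P @ c_pred
rel_err = np.linalg.norm(y_pred - simulator(0.37)) / np.linalg.norm(simulator(0.37))
```

The toy field lies exactly in a two-dimensional linear subspace, so $r = 2$ suffices here; that is precisely the situation in which linear PCA works well.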

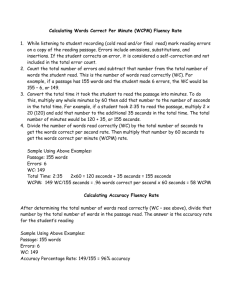

[Figure 1: panels (a), (b), (c)]

• Linear PCA fails in many cases to provide an accurate representation of a response surface

• Informally, works with a relatively ‘flat’ surface

• For highly nonlinear response surfaces with abrupt changes it could fail completely

A nonlinear dimensionality reduction method is, therefore, a natural extension, which forms the motivation for the method developed below.

• There are other methods for dimensionality reduction

• Linear methods
– PCA
– Multi-dimensional scaling
– Independent component analysis

• Nonlinear methods
– Kernel PCA
– Isomap/kernel Isomap
– Diffusion maps
– Laplacian eigenmaps
– Locally linear embedding

• Manifold assumption: the input data resides on or close

to a ‘low-dimensional’ manifold embedded in the

ambient space – informally the dimension is the number

of parameters needed to specify a point, e.g., a surface of

a sphere has dimension 2

• Learning/characterizing such manifolds from given data

is called manifold learning

• Approaches are characterized in a number of ways, e.g.,

spectral, kernel-based, embeddings

• Each has its own advantages and disadvantages – no universal technique

• Performance on toy data sets can be misleading

• Data mapped to a higher dimensional (possibly infinite-dimensional) feature space, and linear PCA (LPCA) applied to the mapped data (Schölkopf et al. (1998))

• Transform data in such a way that it lies in (or near) a linear subspace of the feature space

• Feature space can be very high dimensional (possibly infinite)

• Feature map $\phi: \mathbb{R}^d \to \mathcal{F}$ is implicitly specified via a kernel function

$$\tilde{\phi}(\mathbf{y}^{(i)})^T \tilde{\phi}(\mathbf{y}^{(j)}) = \tilde{k}(\mathbf{y}^{(i)}, \mathbf{y}^{(j)}) = \tilde{K}_{ij}$$

Linear PCA in feature space amounts to finding the eigenvectors and eigenvalues of the sample covariance matrix

$$S = \frac{1}{m} \sum_{i=1}^{m} \tilde{\phi}(\mathbf{y}^{(i)}) \, \tilde{\phi}(\mathbf{y}^{(i)})^T$$

in which $\tilde{\phi}(\mathbf{y}^{(i)}) = \phi(\mathbf{y}^{(i)}) - (1/m) \sum_{j=1}^{m} \phi(\mathbf{y}^{(j)})$ is the $i$th point centred in feature space. This problem is recast by introducing a kernel function $k(\cdot, \cdot)$ (examples of kernels are given in Table 1). The centred kernel function $\tilde{k}(\cdot, \cdot)$ defined above is a dot product between two centred points, $\tilde{\phi}(\mathbf{y}^{(i)})$ and $\tilde{\phi}(\mathbf{y}^{(j)})$. The matrix $[\tilde{K}]_{ij}$, $i, j = 1, \ldots, m$, is called the centred kernel matrix.

• In general the map is not known explicitly

• Recast eigenproblem for sample covariance matrix (in feature space) as an eigenproblem for the centred kernel matrix $\tilde{K}$, with eigenvectors $\boldsymbol{\alpha}_i$ and eigenvalues $\lambda_i$

• Eigenvectors $\tilde{\mathbf{v}}_i$ of sample covariance matrix are not known but the coefficients in an expansion are known

If the eigenvectors $\boldsymbol{\alpha}_i$ are replaced by $\tilde{\boldsymbol{\alpha}}_i = \boldsymbol{\alpha}_i / \sqrt{\lambda_i}$, normalized eigenvectors $\tilde{\mathbf{v}}_i$, $i = 1, \ldots, \dim(\mathcal{F})$, can be defined as $\tilde{\mathbf{v}}_i = \sum_{k=1}^{m} \tilde{\alpha}_{ki} \tilde{\phi}_k$, in which $\tilde{\phi}_k = \tilde{\phi}(\mathbf{y}^{(k)})$, $\tilde{\alpha}_{ki} = \alpha_{ki} / \sqrt{\lambda_i}$ and $\alpha_{ki}$ denotes the $k$th component of $\boldsymbol{\alpha}_i$. The projection of $\tilde{\phi}_j$ onto $\tilde{\mathbf{v}}_i$ is given by:

$$z_i^{(j)} = \tilde{\mathbf{v}}_i^T \tilde{\phi}_j = \sum_{k=1}^{m} \tilde{\alpha}_{ki} \tilde{\phi}_k^T \tilde{\phi}_j = \sum_{k=1}^{m} \tilde{\alpha}_{ki} \tilde{K}_{kj}, \qquad i = 1, \ldots, m$$

The centred kernel values $\tilde{k}(i, j)$ are related to the kernel values $k(i, j) := k(\mathbf{y}^{(i)}, \mathbf{y}^{(j)})$ by:

$$\tilde{k}(i, j) = k(i, j) - \mathbf{1}^T \mathbf{k}_i - \mathbf{1}^T \mathbf{k}_j + \mathbf{1}^T K \mathbf{1}$$

where $\mathbf{k}_i$ is the $i$th column of the kernel matrix $K$ and $\mathbf{1} = (1/m, \ldots, 1/m)^T$.

• Do GPE on first $r$ coefficients (test input $\mathbf{x}$) to approximate projection of $\tilde{\phi}(\mathbf{y}(\mathbf{x}))$ onto $\mathrm{span}\{\tilde{\mathbf{v}}_1, \ldots, \tilde{\mathbf{v}}_r\}$

$$\hat{\phi}(\mathbf{y}(\mathbf{x})) = \sum_{i=1}^{r} z_i(\mathbf{x}) \tilde{\mathbf{v}}_i + \bar{\phi}$$

where $\bar{\phi} = (1/m) \sum_{j=1}^{m} \phi(\mathbf{y}^{(j)})$ is the mean of the mapped training points.
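The kernel PCA steps (kernel matrix, centring, eigen-decomposition, normalization, projection) can be sketched as follows. The data are random placeholders and the Gaussian kernel shape parameter `s` is an arbitrary choice; the eigenvalues used are those of the centred kernel matrix itself.

```python
import numpy as np

def gaussian_kernel(Y1, Y2, s):
    """k(y, y') = exp(-||y - y'||^2 / (2 s^2))."""
    sq = np.sum((Y1[:, None, :] - Y2[None, :, :])**2, axis=-1)
    return np.exp(-sq / (2.0 * s**2))

rng = np.random.default_rng(2)
m, d, r = 20, 6, 3
Y = rng.normal(size=(m, d))            # placeholder data, rows are y^{(i)}
s = 3.0                                # assumed shape parameter

K = gaussian_kernel(Y, Y, s)
Hc = np.eye(m) - np.ones((m, m)) / m   # centring matrix
K_c = Hc @ K @ Hc                      # centred kernel matrix

# Eigen-decomposition of the centred kernel matrix, largest eigenvalues first.
lam, alpha = np.linalg.eigh(K_c)
lam, alpha = lam[::-1][:r], alpha[:, ::-1][:, :r]

# Normalise so the feature-space eigenvectors have unit length.
alpha_t = alpha / np.sqrt(lam)

# Coefficients z_i^{(j)} = sum_k alpha_t[k, i] * K_c[k, j]; row j holds point j.
Z = K_c @ alpha_t
```

The rows of `Z` are the reduced coordinates that would then be emulated, one scalar GP per column.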

• Examples of kernel functions

Gaussian kernel: $\exp\left(-\frac{1}{2s^2} \|\mathbf{y}^{(i)} - \mathbf{y}^{(j)}\|^2\right)$
Polynomial (order $n$): $\left((\mathbf{y}^{(i)})^T \mathbf{y}^{(j)} + s\right)^n$
Multiquadric: $\sqrt{1 + s\|\mathbf{y}^{(i)} - \mathbf{y}^{(j)}\|^2}$
Sigmoid: $\tanh\left(s (\mathbf{y}^{(i)})^T \mathbf{y}^{(j)} + s'\right)$

Table 1: Examples of kernels. $s$ and $s'$ are user-defined shape parameters. $\|\cdot\|$ denotes the Euclidean norm.

• Since the map is not known (nor the basis vectors in feature space), a pre-image problem has to be solved – approximate the inverse map

• It turns out this is possible to do in 3 main ways for most standard kernels

• Least squares approximation is possible by relating distances between points in physical space to distances between points in feature space via the kernel function. Method can suffer from numerical instabilities if $m < d$

• A fixed-point iterative algorithm (Mika et al. (1999)) can be used but is again prone to instability

• Local linear interpolation (Ma & Zabaras (2011)) is the third method (again based on distance information) – use a weighted sum of known data point values. This gives stable results
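A simplified sketch of the distance-based idea behind the third approach is given below, for the Gaussian kernel only. For that kernel the squared feature-space distance satisfies $d_F^2 = 2 - 2\exp(-d^2 / (2s^2))$, which can be inverted for the physical-space distance $d$. The inverse-distance weights, the number of neighbours, and the random data are assumptions of this sketch and are not taken from the cited papers; in practice the feature-space distances would come from the emulated coefficients rather than from a known target point.

```python
import numpy as np

def input_dist_from_feature_dist(dF2, s):
    """Invert d_F^2 = 2 - 2 exp(-d^2 / (2 s^2)) for the Gaussian kernel."""
    return np.sqrt(-2.0 * s**2 * np.log(1.0 - 0.5 * dF2))

def preimage_local_interp(dF2, Y, s, n_neighbours=5):
    """Weighted sum of the nearest training outputs (inverse-distance weights)."""
    dist = input_dist_from_feature_dist(dF2, s)
    idx = np.argsort(dist)[:n_neighbours]
    w = 1.0 / np.maximum(dist[idx], 1e-12)
    w /= w.sum()
    return w @ Y[idx]

# Placeholder training outputs and a "true" point whose pre-image we seek.
rng = np.random.default_rng(3)
Y = rng.uniform(size=(200, 2))
y_true = np.array([0.4, 0.6])
s = 1.0

# Feature-space squared distances, here computed directly from the kernel.
d2 = np.sum((Y - y_true)**2, axis=1)
dF2 = 2.0 - 2.0 * np.exp(-d2 / (2.0 * s**2))

y_hat = preimage_local_interp(dF2, Y, s)
```

Because the estimate is a convex combination of nearby training outputs, it cannot leave the region covered by the data, which is consistent with the stability noted above.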

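1. Select design points $\mathbf{x}^{(j)} \in \mathcal{X} \subset \mathbb{R}^l$, $j = 1, \ldots, m$, using DOE
2. Collect outputs $\mathbf{y}^{(j)} = \boldsymbol{\eta}(\mathbf{x}^{(j)}) \in \mathbb{R}^d$ from the simulator
3. Perform kPCA on $\mathbf{y}^{(j)}$ ⇒ $z_i^{(j)}$, $j = 1, \ldots, m$, $i = 1, \ldots, d$
4. Select a test point $\mathbf{x}$ for prediction
for i = 1 : r
    perform scalar GPE on $D_i = \{\mathbf{x}^{(j)}, z_i^{(j)}\}_{j=1}^{m}$ ⇒ $z_i$
end
Reconstruct ⇒ $\hat{\mathbf{y}} = \phi^{-1}(\hat{\phi}) \approx \boldsymbol{\eta}(\mathbf{x})$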

• Subsurface flow in porous media driven by density variations

• Use Brinkman’s equation with Boussinesq buoyancy term

• Temperature varies from high $T_h$ to $T_c$ along outer edges

• Initially water stagnant but temperature gradients alter fluid density and buoyant flow generated

The free-convection problem is modelled by introducing a Boussinesq buoyancy term (accounting for thermal expansion) to Brinkman’s equation:

$$\frac{\mu}{\kappa} \mathbf{u} + \nabla p - \nabla \cdot \left[ \frac{\mu}{\epsilon} \left( \nabla \mathbf{u} + \nabla \mathbf{u}^T \right) \right] = \rho g \beta (T - T_c), \qquad \nabla \cdot \mathbf{u} = 0 \qquad (55)$$

in which $T$ represents temperature, $g$ denotes gravitational acceleration, $\rho$ is the density at the reference temperature $T_c$, $\epsilon$ is the porosity of the medium, $\mu$ and $\kappa$ are the fluid viscosity and the permeability of the medium, and $\beta$ is the coefficient of volumetric thermal expansion of the fluid. The heat balance takes the form:

$$\rho C_p \mathbf{u} \cdot \nabla T - \nabla \cdot (k_m \nabla T) = 0 \qquad (56)$$

where $k_m$ denotes the effective (volume averaged) thermal conductivity of the fluid-solid mixture, and $C_p$ is the specific heat capacity of the fluid at constant pressure. The Brinkman equations are subject to no-slip conditions on the boundaries, with a reference pressure specified arbitrarily. The boundary conditions for temperature are shown in Figure 2.

[Figure 2: Temperature boundary conditions for the ‘Free convection in porous media’ example: $T = T_h$ and $T = T_c$ on boundary segments, varying between $T_h$ and $T_c$ along the outer edges]

The model was solved in COMSOL Multiphysics 4.3b (‘Free convection in porous media’) for $\beta \in [10^{-11}, 10^{-8}]$ (K$^{-1}$) and $T_h \in [40, 60]$ (°C). Inputs were selected using a Sobol sequence, which generally provides a more uniform coverage of the input space than a Latin hypercube.

• Two input parameters varied: coefficient of volumetric

thermal expansion β and the high temperature Th

• A total of 500 numerical experiments were performed, with inputs selected using a Sobol sequence

• For each simulation, magnitude of the velocity |u| was

recorded on regular 100 × 100 square spatial grid

• The 10000 points in the 2D spatial domain re-ordered into vector form in $\mathbb{R}^{10000}$

• 400 samples reserved for testing

kGPE, on the other hand, captures the test case extremely accurately.

[Figures 2 and 3: panels (a) to (f); field plots over the spatial domain (axes ξ / cm and η / cm) and plots of relative square error]

3.2. Continuously stirred tank reactor

• CSTR used to produce propylene glycol (PrOH) from the reaction of propylene oxide (PrO) with water in the presence of an acid catalyst (H$_2$SO$_4$)

$$\mathrm{PrO} + \mathrm{H_2O} \xrightarrow{\mathrm{H_2SO_4}} \mathrm{PrOH} \qquad (57)$$

The liquid phase reaction takes place in a CSTR, equipped with a heat exchanger. Methanol (MeOH) is added to the mixture but does not react.

• Mass balances for the species ($i$ = PrO, PrOH, MeOH, H$_2$O):

$$V_r \frac{dc_i}{dt} = v_f (c_{f,i} - c_i) + \nu_i V_r r$$

in which: $c_i$ is the concentration, $\nu_i$ is the stoichiometric coefficient and $c_{f,i}$ is the feed stream concentration of species $i$, respectively; $v_f$ is the volumetric flow rate into (and out of) the tank; and $r = A c_{\mathrm{PrO}} \exp(-E/(RT))$ is the rate of reaction (57). The reaction rate is assumed to be first order (mass action) with respect to PrO and to have an Arrhenius temperature dependence, with activation energy $E$ and pre-exponential factor $A$ ($R$ is the universal gas constant and $T$ is the temperature of the reactor).

• Heat balance

$$\sum_i c_i C_{p,i} \frac{dT}{dt} = \underbrace{-H r}_{\text{heat of reaction}} + \underbrace{\frac{F_x C_{p,x} (T_x - T)}{V_r} \left( 1 - e^{-UA/(F_x C_{p,x})} \right)}_{\text{heat loss to HX}} + \underbrace{\sum_i \frac{v_f c_{f,i} (h_{f,i} - h_i)}{V_r}}_{\text{convective heat flow}} \qquad (59)$$

in which: the specific heat capacity is assumed to be a molar averaged value of the specific heat capacities of the individual species in the liquid, $C_{p,i}$; $H$ is the enthalpy of reaction (57), with the first term on the right hand side representing the heat of reaction. The second term on the right hand side represents the heat loss to the heat exchanger, where $F_x$ is the flow rate, $C_{p,x}$ is the specific heat capacity and $T_x$ is the inlet temperature of the heat exchanger medium. $U$ and $A$ are the heat transfer coefficient and heat exchange area of the heat exchanger, respectively. The third term on the right hand side is the enthalpy change due to the flow of species through the reactor.

• Molar enthalpy of species $i$ is given by $h_i = C_{p,i}(T - T_{ref}) + h_{i,ref}$, in which $h_{i,ref}$ is the standard heat of formation of $i$ at the reference temperature $T_{ref}$. The feed stream molar enthalpies $h_{f,i}$ are calculated similarly. The model is completed by initial values.

1 Available from http://www.di.ens.fr/~mschmidt/Software/minFunc.html

• The model was solved in COMSOL Multiphysics 4.3b

(‘Free convection in porous media’)

• Three input parameters varied: initial temperature, initial concentration of PrO, heat exchange parameter $UA$

• A total of 500 numerical experiments were performed, with inputs selected using a Sobol sequence

• Temperature recorded every 14 s up to 7000 s

• The 501 points re-ordered into vector form

• 400 samples reserved for testing

… training points, kGPE showed minor improvements … all test cases.

[Figures 5 and 6: panels (a) to (d); temperature (°C) against time (min), and plots of relative square error]

• Classical multidimensional scaling provides a low-dimensional Euclidean space representation of data that lies on a manifold in a high dimensional ambient space

• It relates ‘dissimilarities’ $d_{ij}$ between points $i$ and $j$ to Euclidean distances $\delta_{ij}$ in the low-dimensional space

• Classical scaling is an isometric embedding

$$\delta_{ij} = \|\mathbf{z}^{(i)} - \mathbf{z}^{(j)}\| = d_{ij}$$

Dissimilarity matrix $D = [d_{ij}]$

[Figure: points $\mathbf{y}^{(i)}$, $\mathbf{y}^{(j)}$ with dissimilarity $d_{ij}$ mapped to points $\mathbf{z}^{(i)}$, $\mathbf{z}^{(j)}$ with distance $\delta_{ij}$]

• When dissimilarities are defined by Euclidean distance, MDS is equivalent to PCA (easily seen from the least-squares optimality of PCA)

• The method is also spectral: it uses the eigenvectors of a centred kernel matrix $K = -\frac{1}{2} H (D \circ D) H$, where $D \circ D$ is the element-wise square of D and H is the centring matrix

• Idea generalised by Tenenbaum et al. using geodesic distances for dij (e.g. shortest path distance): Isomap

• Can be considered equivalent to kPCA: the dissimilarity matrix defines distances between points in feature space and leads to a centred kernel matrix

• Coordinates are obtained from a spectral decomposition, the same as before, provided the kernel matrix is p.s.d.
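The spectral construction above can be sketched in a few lines of NumPy. This is an illustrative sketch of classical MDS, assuming the standard double-centring form K = −(1/2) H (D∘D) H with H = I − (1/n) 11ᵀ; the function name `classical_mds` and the test data are hypothetical.

```python
import numpy as np

def classical_mds(D, r):
    """Embed n points in r dimensions from an (n, n) dissimilarity matrix D."""
    n = D.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n      # centring matrix
    K = -0.5 * H @ (D * D) @ H               # centred kernel from squared dissimilarities
    w, V = np.linalg.eigh(K)                 # eigenvalues returned in ascending order
    idx = np.argsort(w)[::-1][:r]            # indices of the r largest eigenvalues
    w_r = np.clip(w[idx], 0.0, None)         # guard against tiny negative round-off
    return V[:, idx] * np.sqrt(w_r)          # row i holds the coordinates z^(i)

# Usage: 20 points in a 3-D ambient space that lie on a 2-D plane.
rng = np.random.default_rng(0)
Y = rng.normal(size=(20, 2)) @ rng.normal(size=(2, 3))
D = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)

Z = classical_mds(D, 2)
D_low = np.linalg.norm(Z[:, None, :] - Z[None, :, :], axis=-1)
print(np.allclose(D, D_low))
```

Because the dissimilarities here are Euclidean and the data are exactly two-dimensional, the embedding is isometric: the pairwise distances between the rows of `Z` reproduce `D`.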


• Kernel ISOMAP guarantees p.s.d. kernel matrices and

therefore the existence of a feature space

• Can use same procedure as before on coefficients learned

by ISOMAP

• Pre-image problem can be solved similarly by relating

distances between points in the low-dimensional space to

dissimilarities (= Euclidean distances for ‘neighbours’)
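The geodesic dissimilarities used by Isomap can be approximated by shortest paths on a neighbourhood graph. The sketch below assumes a k-nearest-neighbour graph and the Floyd-Warshall algorithm; both are illustrative choices, the function name `geodesic_distances` is hypothetical, and the step that kernel ISOMAP uses to guarantee a p.s.d. kernel matrix is not included.

```python
import numpy as np

def geodesic_distances(Y, n_neighbors):
    """Approximate geodesic distances by shortest paths on a k-NN graph."""
    n = Y.shape[0]
    E = np.linalg.norm(Y[:, None, :] - Y[None, :, :], axis=-1)  # Euclidean distances
    G = np.full((n, n), np.inf)                                 # inf = no edge
    np.fill_diagonal(G, 0.0)
    nn = np.argsort(E, axis=1)[:, 1:n_neighbors + 1]            # column 0 is the point itself
    for i in range(n):
        G[i, nn[i]] = E[i, nn[i]]      # edge lengths = Euclidean distances for neighbours
        G[nn[i], i] = E[i, nn[i]]      # keep the graph symmetric
    for k in range(n):                 # Floyd-Warshall relaxation through node k
        G = np.minimum(G, G[:, k:k + 1] + G[k:k + 1, :])
    return G

# Usage: 50 points on a unit semicircle. The straight-line distance between
# the end points is 2, while the distance along the curve is pi.
theta = np.linspace(0.0, np.pi, 50)
Y = np.column_stack([np.cos(theta), np.sin(theta)])
G = geodesic_distances(Y, n_neighbors=2)
print(G[0, -1])
```

The resulting matrix `G` plays the role of the dissimilarity matrix D in the classical scaling step.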


Metal melting front: a square cavity containing solid and liquid submitted to a temperature difference between the left and right boundaries. The liquid and solid phases are treated as separate domains sharing a moving melting front (see Figure 8). The position of the melting front is calculated according to the Stefan condition. A full description of the model can be found in Wolff and Viskanta [29].

[Figure 8: Temperature boundary for the ‘Metal melting front’ example. Labels: Liquid, Solid; boundary condition $n \cdot (-k \nabla T) = 0$.]

The liquid part on the left of Figure 8 is governed by the Navier-Stokes equations using a Boussinesq approximation:

\[
\begin{aligned}
\rho_0 \frac{\partial u}{\partial t} + \rho_0 (u \cdot \nabla) u &= -\nabla p + \nabla \cdot \left[ \mu \left( \nabla u + (\nabla u)^T \right) \right] + \rho g \\
\nabla \cdot u &= 0 \\
\rho C_p \frac{\partial T_l}{\partial t} + \rho C_p \, u \cdot \nabla T_l - \nabla \cdot (k \nabla T_l) &= 0 \\
\rho &= \rho_0 - \beta (T_l - T_f)
\end{aligned}
\tag{60}
\]

in which u is the liquid velocity, $T_l$ represents the liquid temperature, k denotes the thermal conductivity of the liquid, $C_p$ is the specific heat capacity of the liquid at constant pressure, g denotes gravitational acceleration, $\rho_0$ is the reference density of the liquid, $\rho$ is the linearized density, $\beta$ is the metal coefficient of thermal expansion, and $T_f$ denotes the fusion temperature of the solid. In the solid phase, the heat balance takes the form:

\[
\rho C_p \frac{\partial T_s}{\partial t} - \nabla \cdot (k \nabla T_s) = 0 \tag{61}
\]

in which $T_s$ represents the solid temperature and k denotes the thermal conductivity of the solid (assumed to be the same as that of the liquid). The liquid-solid front is defined by $\xi = s(\eta, t)$, along which $T = T_f$. An energy balance at the melting front is given by [29]:

\[
\rho_0 \, \Delta h_f \frac{\partial s}{\partial t} = \left[ 1 + \left( \frac{\partial s}{\partial \eta} \right)^2 \right] \left( k \frac{\partial T_s}{\partial \xi} - k \frac{\partial T_l}{\partial \xi} \right) \tag{62}
\]

where $\Delta h_f$ is the latent heat of fusion. A no slip condition is applied at the other boundaries for the liquid. The model was solved in COMSOL Multiphysics 4.3b (‘Tin Melting Front’ under the Heat Transfer [...]).

• The model was solved in COMSOL Multiphysics 4.3b

• Two input parameters varied: latent heat of fusion ∆hf

and thermal conductivity k

• 50 numerical experiments were performed, with

inputs selected using a Sobol sequence.

• For each set of parameters, 10 snapshots of the

velocity field were recorded for t = 50, 100, . . . , 500 s.

• For each simulation, the magnitude of the velocity |u| was recorded on a regular 100 × 100 square spatial grid

• 400 samples reserved for testing
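The bullets above imply 50 × 10 = 500 snapshots, each a vector of 100 × 100 = 10⁴ values. The sketch below shows one way to assemble that data matrix and extract linear PCA coefficients via an SVD. It assumes that each (run, time) snapshot is treated as one sample, uses random numbers as a hypothetical stand-in for the velocity magnitudes, and takes 9 components to match the Figure 10 caption.

```python
import numpy as np

n_runs, n_snapshots, nx, ny = 50, 10, 100, 100
times = np.arange(50, 501, 50)                 # t = 50, 100, ..., 500 s

# Hypothetical stand-in for |u| on the grid, one field per (run, snapshot).
rng = np.random.default_rng(0)
fields = rng.random((n_runs, n_snapshots, nx, ny))

# Each snapshot becomes one row vector of length 10^4.
Y = fields.reshape(n_runs * n_snapshots, nx * ny)

# Linear PCA via the SVD of the centred data matrix.
mean = Y.mean(axis=0)
U, s, Vt = np.linalg.svd(Y - mean, full_matrices=False)
r = 9                                          # number of principal components kept
Z = (Y - mean) @ Vt[:r].T                      # (500, 9) coefficients to be emulated
Y_rec = mean + Z @ Vt[:r]                      # rank-9 reconstruction of the fields

print(Y.shape, Z.shape)
```

An emulator then only has to learn the map from the inputs to the 9 coefficients in each row of `Z`, rather than to all 10⁴ grid values.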

[Figure 10: Predictions of the velocity magnitude using 40 training points and 9 principal components in the ‘Metal melting front’ example: the test case for (a) (∆hf, k, t) = (1031.25, 76.25, 150) and (b) (∆hf, k, t) = (246.88, 282.03, 100). The corresponding predictions using kPCA-GPR are shown in (c) and (d), respectively, while the corresponding predictions using LPCA-GPR are shown in (e) and (f), respectively. Axes: ξ / cm, η / cm.]

• We can emulate multiple fields or vector fields by combining data sets, or by emulating each field separately assuming independence

• The former could lead to problems with scaling (e.g., temperature variations vs. velocity variations), while the latter ignores correlations between outputs (e.g., electric potential and current)

• Multiple output types (fields) $y_j^{(i)}$, $j = 1, \ldots, J$

• Perform NDR for each output type and extract coefficients $z_{k,j}^{(i)}$, where k indexes the coefficient number, j indexes the output type and i indexes inputs

• For a fixed k, define $Z_k^{(i)} = (z_{k,1}^{(i)}, \ldots, z_{k,J}^{(i)})^T$


• Use the LMC (linear model of coregionalization) to infer coefficients simultaneously for test inputs: a J-variate GP

$Z_k(\cdot) = F_k W_k(\cdot)$

• $W_k = (W_{1,k}, \ldots, W_{J,k})^T$, where the coordinates are independent GPs with zero mean and correlation functions $c_{i,k}(\cdot, \cdot, \theta_{i,k})$

$Z_k(\cdot) \mid \text{parameters, data} \sim GP(M_k(\cdot), K_k(\cdot, \cdot))$

• Explicit formulae for the mean function $M_k(x)$ and the variance-covariance matrix $K_k(x, x')$ are given

• Parameters determined via likelihood or MCMC

• Repeat for each k and solve the pre-image problem
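To make the structure Z = F W concrete, the sketch below builds the prior covariance that this model implies for a single k: since the coordinates of W are independent, Cov[Z(x), Z(x')] is the sum over j of c_j(x, x') f_j f_jᵀ, where f_j is the j-th column of F. The squared-exponential correlation, the unit prior variances, the length scales and the function names are all assumptions made for illustration; the posterior mean and covariance formulae referred to above are not reproduced here.

```python
import numpy as np

def sq_exp(X1, X2, length):
    """Squared-exponential correlation (an assumed choice for c_j)."""
    d2 = ((X1[:, None, :] - X2[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-0.5 * d2 / length**2)

def lmc_covariance(X1, X2, F, lengths):
    """Prior Cov[Z(x), Z(x')] for Z = F W with independent, unit-variance GPs W_j.

    The result has shape (n1 * J, n2 * J); block (a, b) is the J x J
    cross-covariance between Z(X1[a]) and Z(X2[b]).
    """
    J = F.shape[0]
    K = np.zeros((X1.shape[0] * J, X2.shape[0] * J))
    for j, length in enumerate(lengths):
        B_j = np.outer(F[:, j], F[:, j])             # coregionalization matrix f_j f_j^T
        K += np.kron(sq_exp(X1, X2, length), B_j)
    return K

# Usage: J = 3 output types at 5 input points in a 2-D input space.
rng = np.random.default_rng(0)
X = rng.random((5, 2))
F = rng.normal(size=(3, 3))
K = lmc_covariance(X, X, F, lengths=[0.3, 0.5, 1.0])
print(K.shape)
```

At a single input point every correlation equals one, so the J × J diagonal blocks of `K` reduce to F Fᵀ, which is how the matrix F encodes the correlations between output types.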


• Diffusion maps, with a new method for solving the pre-image problem based on an extended diffusion matrix and local interpolation

• Physics-based approaches using NDR (direct approach?)

• Large scale problems (more complex data sets)

including issues with DOE, number of samples and

dimensionality of input space


1) M. Kennedy, A. O’Hagan, J. R. Stat. Soc. Ser. B Stat. Methodol. 63 (2001) 425–464
2) R. Paulo, G. Garcia-Donato, J. Palomo, Comput. Stat. Data Analysis 56 (2012) 3959–3974
3) H. Wackernagel, Multivariate Geostatistics, Springer, Berlin, 1995
4) S. Conti, A. O’Hagan, J. Statistical Planning and Inference 140 (2010) 640–651
5) J. Rougier, J. Computational and Graphical Statistics 17 (2008) 827–843
6) D. Higdon et al., J. Am. Stat. Association 103 (482) (2008) 570–583
7) B. Schölkopf, A. Smola, K.-R. Müller, Neural Computation 10 (1998) 1299–1319
8) S. Mika et al., Advances in neural information processing systems II 11 (1999) 536–542
9) B. Ganapathysubramanian, N. Zabaras, J. Comput. Phys. 227 (2008) 6612–6637
10) X. Ma, N. Zabaras, J. Comput. Phys. 230 (2011) 7311–7331
11) J.B. Tenenbaum, V. De Silva, J.C. Langford, Science 290 (2000) 2319–2323
