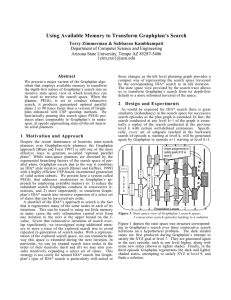

Planning Graph as a (Dynamic) CSP: Abstract Subbarao Kambhampati
