Lecture 2: Inverse Crimes, Model Discrepacy and Statistical Error Modeling Erkki Somersalo

advertisement

Lecture 2: Inverse Crimes, Model Discrepacy

and Statistical Error Modeling

Erkki Somersalo

Case Western Reserve University

Cleveland, OH

Dynamic model

Forward model

dx

= f (t, x, θ),

dt

x(0) = x0 ,

I

x = x(t) ∈ Rn is the state vector,

I

θ ∈ Rk is the unknown, or poorly known parameter vector,

I

f : R × Rn × Rk → Rn is the model function

I

x0 possibly unknown, or poorly unknown initial value.

(1)

Observation model

Data: discrete noisy observations, may depend on the parameter

vector:

bj = g (x(tj ), θ) + nj ,

t1 < t2 < . . . ,

I

g : Rn × Rk → R is the observation function

I

nj is the observation noise

The inverse problem: Estimate the state vector and the

parameter vector, (x(t), θ), based on the observations.

(2)

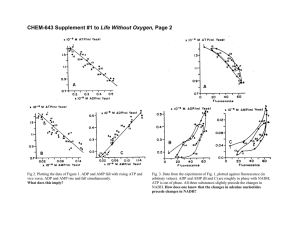

Two motivational problem

The dynamical system of acetate metabolism in brain by PET scan

data:

dm1

(t) = K1 c(t) − (k2 + k3 )m1 (t)

dt

dm2

(t) = k3 m1 (t) − k5 m2 (t)

dt

Observation: Noisy measurements of

c(tj ),

m(tj ) = V0 c(tj ) + m1 (tj ) + m2 (tj ).

Blood

Precursor

Product

k3

K1

[1-11C]acetate

[1-11C]acetate

[1-11C]O2

k2

k5

[1-11C]O2

[1-11C]O2

Astrocyte

+Neuron

Astrocyte

m1

Astrocyte

m2

HIV-1 strain competition assay

HIV-1 strain competition assay

Ċ

˙

CAe

˙

CAi

˙

CBe

˙

CBi

˙

CABe

˙

CABi

V˙A

V˙B

C˙0

=

=

=

=

=

=

=

=

=

=

λC0 T − (kA VA + kB VB + ηkA kB VA VB )C

kA VA C − kB VB CAe − rCAe

rCAe − δA CAi

kB VB C − kA VA CBe − rCBe

rCBe − δB CBi

ηkA kB VA VB C + kA VA CBe + kB VB CAe − rCABe

rCABe − δAB CABi

pA CAi + pAD CABi − cVA

pB CBi + pBD CABi − cVB

−λC0 T ,

Metabolic pathway of skeletal muscle

PCr Glu ATP ADP ADP G6P ATP Gly ATP ATP Cr ADP ADP GA3P NAD TGL NADH ADP BPG GLC ATP ATP FFA ADP Pyr Ala NADH ADP ATP NAD NAD FAC NADH Lac ACoA NAD NADH ATP OAA ADP Cit NAD NADH NADH NAD Mal O2 AKG NAD NADH NADH ADP NAD NAD Suc SCoA NADH CO2 ATP ADP AMP ATP H2O ATP ADP ATP The discrete time Markov models framework

Evolution model:

Xj+1 = F (Xj , θ) + Vj+1 ,

I

F is a known propagation model

I

Vj+1 is an innovation process

I

θ is a parameter: assumed known now, later to be estimated.

The observation model

Yj = G (Xj ) + Wj ,

the observation noise Wj independent of Xj .

Update scheme for posterior densities given accumulated data:

π(xj | Dj ) −→ π(xj+1 | Dj ) −→ π(xj+1 | Dj+1 )

Bayesian filtering

1. Propagation step: Chapman-Kolmogorov formula

Z

π(xj+1 | Dj ) =

π(xj+1 | xj , Dj )π(xj | Dj )dxj

Z

=

π(xj+1 | xj )π(xj | Dj )dxj ,

2. Analysis step: Bayes’ formula conditional on Dj

π(xj+1 | Dj+1 ) = π(xj+1 | yj+1 , Dj )

∝ π(yj+1 | xj+1 , Dj )π(xj+1 | Dj )

= π(yj+1 | xj+1 )π(xj+1 | Dj ),

Combining:

Z

π(xj+1 | Dj+1 ) ∝ π(yj+1 | xj+1 )

π(xj+1 | xj )π(xj | Dj )dxj .

Bayesian filtering

Assume that the current distribution is represented in terms of a

sample

Sj = (xj1 , wj1 ), (xj2 , wj2 ), . . . , (xjN , wjN ) .

The particle version (Monte Carlo integration):

Z

π(xj+1 | Dj+1 ) ∝ π(yj+1 | xj+1 ) π(xj+1 | xj )π(xj | Dj )dxj

is approximated by

π(xj+1 | Dj+1 ) ∝ π(yj+1 | xj+1 )

N

X

n=1

wjn π(xj+1 | xjn ).

Example: Gaussian innovation and noise

Assuming the propagation model

Xj+1 = F (Xj ) + Vj+1 ,

Vj+1 ∼ N (0, Γj+1 ),

we have

π(xj+1

1

T −1

| xj ) ∝ exp − (xj+1 − F (xj )) Γj+1 (xj+1 − F (xj )) ,

2

Similarly, the observation model

Yj = G (Xj ) + Wj ,

Wj ∼ N (0, Σj )

gives the likelihood

1

π(yj | xj ) ∝ exp − (yj − G (xj ))T Σ−1

(y

−

G

(x

))

.

j

j

j

2

Sampling Importance Resampling (SIR)

Layered sampling: For n = 1, 2, . . . , N,

n

1. Draw a candidate particle xej+1

from π(xj+1 | xjn );

n = π(y

n );

ej+1

2. Compute the relative likelihood gj+1

j+1 | x

3. Resample with replacement from

1

1

2

2

N

N

ej+1

ej+1

ej+1

(e

xj+1 , w

), (e

xj+1

,w

), . . . , (e

xj+1

,w

) ,

where the probability weights are defined as

n

gj+1

n

ej+1

w

=P n .

gj+1

Sampling Importance Resampling (SIR)

Data thinning:

I

n

Most particles xej+1

may have vanishingly small likelihood.

I

Few candidate particles are sampled over and over: The new

sample consists mostly copies of few candidate particles.

I

The density is poorly sampled.

Improvement: Auxiliary particles

Before resampling, calculate an auxiliary predictor:

x nj+1 = F (xjn ).

We write

π(xj+1 | Dj+1 ) ∝

N

X

π(yj+1 | xj+1 )

wjn π(yj+1 | x nj+1 )

π(xj+1 | xjn ),

π(yj+1 | x nj+1 )

|

{z

}

n=1

n

=gj+1

n

is a predictor of how well the auxiliary particle

The quantity gj+1

would explain the data.

Survival of the Fittest (SOF)

Given the initial probability density π0 (x0 ),

1. Initialization: Draw the particle ensemble from π0 (x0 ):

S0 = (x01 , w01 ), (x02 , w02 ), . . . , (x0N , w0N ) ,

w01 = w02 = . . . = w0N =

1

.

N

Set j = 0.

2. Propagation: Compute the predictor:

x nj+1 = F (xjn ),

1 ≤ n ≤ N.

Survival of the Fittest (SOF)

3. Survival of the fittest: For each n:

(a) Compute the fitness weights

n

gj+1

= wjn π(yj+1 | x nj+1 ),

n

gj+1

n

gj+1

←P n ;

n gj+1

(b) Draw indices with replacement `n ∈ 1, 2, . . . , N using

probabilities

k

P{`n = k} = gj+1

;

(c) Reshuffle

xjn ← xj`n ,

n

x nj+1 ← x `j+1

,

1 ≤ n ≤ N.

Survival of the Fittest (SOF)

4. Innovation: For each n: Proliferate

n

n

xj+1

= x nj+1 + vj+1

.

5. Weight updating: For each n, compute

n

wj+1

=

n )

π(yj+1 | xj+1

π(yj+1 | x nj+1 )

,

n

wj+1

6. If j < T , increase j ← j + 1 and repeat.

n

wj+1

←P n .

n wj+1

Estimating parameters: Sequential Monte Carlo

For the discrete time model, the propagation (and possibly the

likelihood) may depend on the unknown θ,

xj+1 = F (xj , θ).

Monte Carlo integral for posterior update:

π(xj+1 , θ | Dj+1 ) ∝ π(yj+1 | xj+1 , θ)

Z

× π(xj+1 | xj , θ)π(xj | θ, Dj )π(θ | Dj )dxj ,

Sample update:

Sj → Sj+1 ,

N

Sj = (xjn , θjn , wjn ) n=1 .

where Sj is drawn from π(xj , θ | Dj ).

Auxiliary parameter particles

Given the current parameter sample,

(θj1 , wj1 ), (θj2 , wj2 ), . . . , (θjN , wjN ),

estimate the mean and covariance,

θj =

N

X

n=1

wjn θjn ,

Cj =

N

X

n=1

wjn θjn − θj

θjn − θj

T

.

Auxiliary parameter particles

Approximate the marginal probability density π(θ | Dj ) of θ by a

Gaussian mixture model,

π(θ | Dj ) ≈

N

X

n

wjn N θ | θj , s 2 Cj ,

n=1

for which we define the auxiliary particle by

n

θj = aθjn + (1 − a)θj ,

where a is a shrinkage factor, 0 < a < 1 and a2 + s 2 = 1 to avoid

artificial diffusion.

Auxiliary parameter particles

Left as an exercise:

)

( N

X

n

= θj ,

E

wjn N θ | θj , s 2 Cj

n=1

and

cov

( N

X

n=1

wjn N θ |

n

θ j , s 2 Cj

)

= Cj ,

Auxiliary parameter particles

Approximate

π(xj+1 , θ | Dj+1 )

∝

N

X

n

wjn π(yj+1 | xj+1 , θ)π(xj+1 | xjn , θ)N (θ | θj , s 2 Cj ),

n=1

which we write as

π(xj+1 , θ | Dj+1 ) ∝

N

X

n

wjn π(yj+1 | x nj+1 , θj )

|

{z

}

n=1

n

=gj+1

×

π(yj+1 | xj+1 , θ)

π(yj+1 |

n π(xj+1

x nj+1 , θj )

n

| xjn , θ)N (θ | θj , s 2 Cj ),

n

where the coefficient gj+1

is the fitness of the predictor

n

n

n

n

x nj+1 , θj = F (xj+1

, θj ), θj .

Survivial of the Fittest – Sequential Monte Carlo (SOF –

SMC)

1. Initialization: Draw the particle ensemble from π0 (x0 , θ):

S0 = (x01 , θ01 , w01 ), (x02 , θ02 , w02 ), . . . , (x0N , θ0N , w0N ) ,

1

w01 = w02 = . . . = w0N = .

N

Compute the parameter mean and covariance:

θ0 =

N

X

n=1

Set j = 0.

w0n θ0n ,

C0 =

N

X

n=1

T

w0n θ0n − θ0 θ0n − θ0 .

Survivial of the Fittest – Sequential Monte Carlo (SOF –

SMC)

2. Propagation: Shrink the parameters

n

θj = aθjn + (1 − a)θj ,

1 ≤ n ≤ N,

by a factor 0 < a < 1. Compute the state predictor:

n

x nj+1 = F (xjn , θj ),

1 ≤ n ≤ N.

Survivial of the Fittest – Sequential Monte Carlo (SOF –

SMC)

3. Survival of the fittest: For each n:

(a) Compute the fitness weights

n

n

gj+1

= wjn π(yj+1 | x nj+1 , θj ),

n

gj+1

n

gj+1

←P n ;

n gj+1

(b) Draw indices with replacement `n ∈ 1, 2, . . . , N using

k

probabilities P{`n = k} = gj+1

;

(c) Reshuffle

n

`n n

x nj+1 , θj ← x `j+1

, θj ,

n

x nj+1 ← x `j+1

,

1 ≤ n ≤ N.

Survivial of the Fittest – Sequential Monte Carlo (SOF –

SMC)

4. Proliferation: For each n:

(a) Proliferate the parameter by drawing

n

n

θj+1

∼ N θj , s 2 Cj , s 2 = 1 − a2 ;

(b) Repropagate and add innovation:

n

n

n

xj+1

= F (xjn , θj+1

) + vj+1

.

Survivial of the Fittest – Sequential Monte Carlo (SOF –

SMC)

5. Weight updating: For each n, compute

n

wj+1

=

n , θn )

π(yj+1 | xj+1

j+1

n

π(yj+1 | x nj+1 , θj )

,

n

wj+1

n

wj+1

←P n .

n wj+1

6. If j < T , update

θj+1 =

N

X

n

n

,

wj+1

θj+1

Cj+1 =

n=1

increase j ← j + 1 and repeat.

N

X

n=1

n

T

n

n

θj+1

−θj+1 θj+1

wj+1

−θj+1 ,

Propagation and innovation

The problem we are addressing assumes

dx

= f (t, x, θ),

dt

x(0) = x0 ,

while the discrete propagation is written as

xj+1 = F (xj , θ) + vj+1 .

Questions:

1. How do we propagate?

2. What is the innovation?

Propagation and innovation

Naive propagation scheme

xj+1 ≈ xj + ∆tF (xj , θ),

which is used for SDE schemes (Euler-Maruyama) has several

problems:

I

The systems are typically stiff, leading to prohibitively small

step size to guarantee stability

I

The innovation needs to be related to approximation error.

Propagation and innovation

A more sophisticated solution: Use a standard stiff solver such as

ode15s.

Error proportional to approximation accuracy.

Problem:

I

Variable time step: some particles require longer integaration

I

The slowest particle determines the propagation speed.

Histogram of propagation times: An example

2000

12000

10000

1500

8000

1000

6000

4000

500

2000

0

0

5

10

15

20

0

5

10

Distribution of propagation times, ∼ 18 000 particles.

15

Stiffness and syncronization

For systems which are inherently stiff, we use a good stiff solver:

xj+1 = F exact (xj , θ) = F (xj , θ) + approximation error,

where the approximation error is due to numerical integration.

If the stiffness of the systems varies a lot with the parameter values

prescribing a fixed accuracy may be a problems because

I

The time for the particles propagation may vary widely;

I

The slowest particle determines the propagation speed

I

We cannot take full advantage of parallel and vectorized

computing environment.

Linear Multistep Methods

Given r past values,

un , un+1 , . . . , un+r −1 ,

write

r

X

j=0

αj un+j = h

r

X

βj f (un+j , tn+j ),

j=0

and determine the coefficients αj , βj from a condition that the

formula is accurate for a polynomial.

I

Adams methods: αr = 1, αr −1 = −1, αj = 0 for j < r − 1.

I

Backwards Differentiation Formulas:

β0 = β1 = . . . = βr −1 = 0.

For stiff problems: AM1–AM4, BDF1 – BDF4.

Stability regions:Adams-Bashford (explicit)

AB1 (forward Euler)

AB3

7

7

0

0

−7

−6

0

−7

11 −6

0

11

Stability regions:Adams-Moulton (implicit)

AM1 (trapezoidal)

AM3

7

7

0

0

−7

−6

0

−7

11 −6

0

11

Stability regions: BDF (implicit)

BDF1 (backward Euler)

BDF2

7

7

0

0

−7

−6

0

−7

11 −6

0

11

Higher Order Method Error Control (HOMEC)

I

un+r = candidate solution at time tn+r computed by the

LMM of order p,

I

ubn+r = solution at tn+r given by the higher order method.

I

u = exact solution with initial value u(tn ) = un

un+r

= u(tn+r ) + `hp+1 + O(hp+2 )

ubn+r

= u(tn+r ) + O(hp+2 )

as h → 0, where ` is some vector depending on the solution u(t) of

the ODE system but not on h.

Subtracting and neglecting higher order terms:

`hp+1 ≈ un+r − ubn+r

Prescribe time, not accuracy

Thus, to improve the performance of the algorithm we

I

Propagate each particle with fixed propagation time.

I

Estimate the numerical accuracy for each particle

I

Set the jth particle innovation variance proportional to the

integration error.

This yields the innovation covariance matrix

Vj+1 ∼ N (0, Γj+1 ),

where for 1 ≤ i ≤ d,

Γj+1 = diag(γ) + εI,

with τ > 1.

γi = τ 2 (uj+1 − ubj+1 )2i ,

SOF with error estimate innovation

Given the initial probability density π0 (x0 ),

1. Initialization: Draw the particle ensemble from π0 (x0 ):

S0 = (x01 , w01 ), (x02 , w02 ), . . . , (x0N , w0N ) ,

w01 = w02 = . . . = w0N =

1

.

N

Set j = 0.

2. Propagation: Compute the predictor using LMM:

x nj+1 = Ψ(xjn , h),

1 ≤ n ≤ N.

3. Survival of the fittest: For each n:

(a) Compute the fitness weights

n

gj+1

= wjn π(yj+1 | x nj+1 ),

n

gj+1

n

gj+1

←P n ;

n gj+1

(b) Draw indices with replacement `n ∈ 1, 2, . . . , N using

probabilities

k

P{`n = k} = gj+1

;

(c) Reshuffle

xjn ← xj`n ,

n

x nj+1 ← x `j+1

,

1 ≤ n ≤ N.

4. Innovation: For each n:

(a) Using error estimate, estimate Γnj+1 = Γj+1 (xjn );

n

∼ N (0, Γnj+1 );

(b) Draw vj+1

(c) Proliferate

n

n

xj+1

= x nj+1 + vj+1

.

5. Weight updating: For each n, compute

n

wj+1

=

n )

π(yj+1 | xj+1

π(yj+1 | x nj+1 )

,

n

wj+1

n

wj+1

←P n .

n wj+1

6. If j < T , increase j ← j + 1 and repeat from (ii).

The parameter estimation SMC can be also carried out

concurrently.

Extensions to EnKF

Assuming that the current density π xj , θ | Dj is represented in

terms of an ensemble

n

o

1

2

N

Sj|j =

xj|j

, θj1 , xj|j

, θj2 , . . . , xj|j

, θjN

,

the state prediction ensemble is obtained by

n

n

n

, n = 1, 2, . . . , N

xj+1|j

= F xj|j

, θjn + vj+1

following the state evolution equation and setting the innovation

variance as for the particle filter.

Assume a linear observation model.

Combining state and parameter vectors

Compute the prediction ensemble statistics by defining the state

and parameter vectors

"

#

n

x

n

j+1|j

zj+1|j

=

,

n = 1, 2, . . . , N.

θjn

The prediction ensemble mean is

z j+1|j

N

1 X n

zj+1|j

=

N

n=1

and the prior covariance matrix is

N

Γj+1|j

T

1 X n

n

=

zj+1|j − z nj+1|j zj+1|j

− z nj+1|j

N −1

n=1

When an observation yj+1 arrives, an observation ensemble is

generated by parametric bootstrapping

n

n

yj+1

= yj+1 + wj+1

n ∼ N (0, D) is a realization of the noise. In the case of

where wj+1

a linear observation the combined posterior ensemble is obtained as

n

n

n

n

zj+1|j+1

= zj+1|j

+ Kj+1 yj+1

− Gj+1 zj+1|j

,

n = 1, 2, . . . , N

where the Kalman gain Kj+1 is

−1

T

+

D

Kj+1 = Γj+1|j GT

G

Γ

G

.

j+1

j+1|j j+1

j+1

The posterior means and covariances for the states and parameters

are computed using the posterior ensemble statistics.

A simple example motivated by acetate metabolism

x1

x1 + k1

dx1

dt

= Φ(t) − V1

dx2

dt

= V1

x1

x2

− V2

x1 + k1

x2 + k2

dx3

dt

= V2

x2

− λ(x3 − c0 )

x2 + k2

with known parameters λ and c0 and input function

Φ(t) = A0 + A(t − t0 ) exp(−(t − t0 )/τ )

(3)

1.5

x1

x2

x3

1

0.5

0

0

5

10

15

20

25

30

Time series for V1

Time series for k1

1.6

0.14

1.4

0.12

1.2

0.1

1

0.08

0.8

0.06

5

10

15

20

25

30

0.04

5

10

Time series for V2

15

20

25

30

25

30

Time series for k2

0.8

0.7

0.7

0.6

0.6

0.5

0.5

0.4

5

0.4

10

15

20

25

30

5

10

15

20

Time series for V1

Time series for k1

2

0.25

1.5

0.2

1

0.15

0.5

0.1

0

5

10

15

20

25

30

0.05

5

10

Time series for V2

1

1

0.8

0.8

0.6

0.6

0.4

0.4

0.2

5

10

15

20

15

20

25

30

25

30

Time series for k2

25

30

0.2

5

10

15

20

Time t = 5

Time t = 10

1.5

Time t = 15

0.15

1

0.6

0.14

0.58

x2

x2

x2

0.5

0.13

0

0.56

0.12

−0.5

−1

0.2

0.4

0.6

k2

0.8

0.11

0.2

1

0.4

0.8

0.54

0.4

1

0.165

0.135

0.135

0.155

x2

0.14

0.16

0.13

0.125

0.5

0.6

k2

0.7

0.8

0.12

0.45

0.6

k2

0.7

0.8

0.6

0.65

Time t = 30

0.14

0.15

0.4

0.5

Time t = 25

0.17

x2

x2

Time t = 20

0.6

k2

0.13

0.125

0.5

0.55

k2

0.6

0.65

0.12

0.45

0.5

0.55

k2

The humble and lowly skeletal muscle

and its metabolism

PCr Glu ATP ADP ADP G6P ATP Gly ATP ATP Cr ADP ADP GA3P NAD TGL NADH ADP BPG GLC ATP ATP FFA ADP Pyr Ala NADH ADP ATP NAD NAD FAC NADH Lac ACoA NAD NADH ATP OAA ADP Cit NAD NADH NADH NAD Mal O2 AKG NAD NADH NADH ADP NAD NAD Suc SCoA NADH ATP CO2 ATP ADP AMP ATP H2O ADP ATP Stiffness and many unknown parameters

Problem: follow the time courses of metabolites and intermediates

in skeletal model cellular metabolism model over 100 minutes.

I

State vector: 38 concentrations, 30 in tissue, 8 in blood;

I

Evolution model: nonlinear system of ODEs;

I

Number of unknown parameters: 44+52.

I

The stiffness of the underlying ODE system reflects the

difference in time constants of the different reactions and

transports.

Numerical test

I

Data: Noisy measurements of 8 concentrations in blood at 11

time instances.

I

Propagation: use implicit time integrators BDF2 (and BDF3)

with fixed time step h = 0.01 or 0.6 seconds.

I

Ensemble size: N=250.

I

Fix 44 parameters corresponding to facilitators and estimate

remaining 52 (max flux and affinity)

I

Initial ensemble: cloud of parameters values not centered

around true values and corresponding initial values which

support steady state.

I

At each time step we inflate the covariance matrix by 20%.

Spatio-temporal prior

I

We want to favor solutions with moderate rates of change.

I

Exponential a priori bound:

M −∆tj+1 C (tj ) ≤ C (tj+1 ) ≤ M ∆tj+1 C (tj )

for M > 1 and ∆tj+1 = tj+1 − tj , which implies

k log

I

C (tj+1 ) k = |x(tj+1 ) − x j|j | ≤ (log M)∆tj+1 .

C (tj )

We set

x(tj+1 ) ∼ N x j|j , γ 2 (∆tj+1 )2 Id )

with M = 20 and γ = log M/2.

Estimation of blind components

Lactate

Malate

1.1

0.105

concentration, [mmol/l]

concentration, [mmol/l]

0.1

1

0.9

0.8

0.095

0.09

0.085

0.08

0.075

0.7

0

20

40

60

time, [min]

80

100

0

20

ATP

100

80

100

0.08

6.2

concentration, [mmol/l]

concentration, [mmol/l]

80

NADH

6.22

6.18

6.16

6.14

6.12

0

40

60

time, [min]

0.075

0.07

0.065

0.06

0.055

20

40

60

time, [min]

80

100

0.05

0

20

40

60

time, [min]

Estimation of parameters

Vmax, Malate production

maximum reaction rate, [mmol/l/min]

maximum reaction rate, [mmol/l/min]

Vmax, ATP hydrolysis

8

7.9

7.8

7.7

7.6

7.5

7.4

0

20

40

60

time, [min]

80

100

2.2

2.15

2.1

2.05

2

1.95

0

0.057

0.056

0.055

0.054

0.053

0.052

0

20

40

60

time, [min]

80

20

40

60

time, [min]

80

100

Vmax, Pyruvate reduction

maximum reaction rate, [mmol/l/min]

maximum reaction rate, [mmol/l/min]

Vmax, Alanine production

100

0.54

0.53

0.52

0.51

0.5

0.49

0.48

0

20

40

60

time, [min]

80

100

Predictive skill of the filter

Question: How well does a dynamical system identified by the

estimated parameters describe predict other protocols?

fading

ischemia

increasing

Q, [mL/min]

1.5

1

0.5

0

0

50

t, [min]

100

ATP

ATP

6.26

6.24

concentration, [mmol/l]

concentration, [mmol/l]

6.24

6.22

6.2

6.18

6.16

6.14

6.12

0

20

40

60

time, [min]

80

6.22

6.2

6.18

6.16

6.14

0

100

20

NADH

0.08

100

80

100

0.07

concentration, [mmol/l]

concentration, [mmol/l]

80

NADH

0.075

0.07

0.065

0.06

0.055

0.05

0

40

60

time, [min]

20

40

60

time, [min]

80

100

0.068

0.066

0.064

0.062

0.06

0

20

40

60

time, [min]

Lactate

1.6

1.4

1.4

concentration, [mmol/l]

concentration, [mmol/l]

Lactate

1.6

1.2

1

0.8

0

20

40

60

time, [min]

80

100

1.2

1

0.8

0

20

Pyruvate

0.1

100

80

100

0.09

concentration, [mmol/l]

concentration, [mmol/l]

80

Pyruvate

0.09

0.08

0.07

0.06

0.05

0.04

0.03

0

40

60

time, [min]

20

40

60

time, [min]

80

100

0.08

0.07

0.06

0.05

0.04

0

20

40

60

time, [min]

References

I

Arnold A, Calvetti D, and Somersalo E (2013) Linear

multistep methods, particle filtering and sequential Monte

Carlo. Inverse Problems, 29(8), 085007.

I

Arnold A, Calvetti D, Gjedde A, Iversen P, and Somersalo E

(2014) Astrocyte tracer dynamics estimated from

[1 −11 C ]-acetate PET measuraments. Math. Med. Biol.

doi: 10.1093/imammb/dqu021.

I

Arnold A, Calvetti D, and Somersalo E (2014) Parameter

estimation for stiff deterministic dynamical systems via

Ensemble Kalman filter. Inverse Problems 30 : 105008.

References

I

Cotter SL, Roberts GO, Stuart AM and White D (2013).

MCMC methods for functions: modifying old algorithms to

make them faster. Statistical Science, 28, 424-446.

I

Liu J and West M 2001 Combined parameter and state

estimation in simulation-based filtering Sequential Monte

Carlo Methods in Practice eds A Doucet, J F G de Freitas and

N J Gordon (New York: Springer-Verlag) pp 197-223

I

Pitt M and Shephard N 1999 Filtering via simulation:

auxiliary particle filters J. Amer. Statist. Assoc. 94 590-599