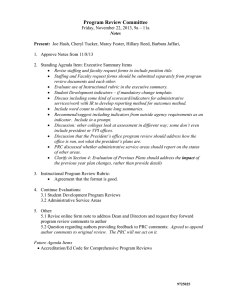

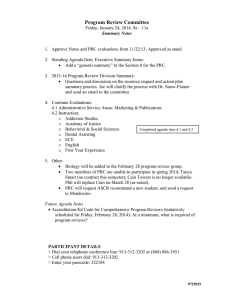

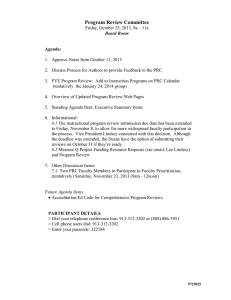

PROGRAM REVIEW Self-Study Resource Guide 2007-08