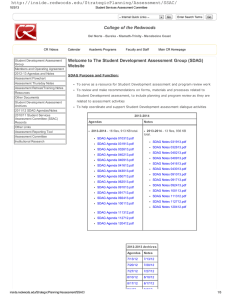

Student Development Assessment Summit Friday, May 17, 2013

Student Development Assessment Summit
Friday, May 17th, 2013, 9:00 – 12:00, SS109

I Welcome and Introduction to SDAG
  - Who we are and what we have been doing
II Year in Review
  - Results of our Assessment Work Dialogue
III Year to Come
  - Assessment Reporting and Program Review updates
    o Calendar
    o Forms
    o Instructions
  - Assessment Planning
    o Shared Outcomes
    o Connecting to strategic planning
      Annual plan alignment
  Small Group Work if needed
IV Evaluation

SDAG Purpose and Function:
• To serve as a resource for Student Development assessment and program review work
• To review and make recommendations on forms, materials and processes related to Student Development assessment, to include planning and program review as they are related to assessment activities
• To help coordinate and support Student Development assessment dialogue activities

ASSESSMENT REPORTING TOOL, revised draft for SD: Web Form Fields and Labels

Academic Year
Service Area
Method of Student Contact or Service
List all Faculty / Staff involved
Where are artifacts housed and who is the contact person?
Additional Information to help you track this assessment

What assessment methods or tools were used to evaluate how well the outcome was met?
1. DATA - Collect new data, tracking ongoing processes, counting data elements, making observations; or use existing internal or external data sources
2. NOTES - Reports, meeting notes, documentation, narrative or descriptive information
3. SURVEYS - Surveys, interviews, focus groups, training or workshop attendee evaluations
4. ANALYSIS - Cost/benefit analysis, SWOT, ROI analysis, or qualitative analysis
5. COURSE OUTCOME – Applying assessments used in courses to student services outcomes; shared outcomes and assessments
6. OTHER

What criteria were used to determine the usefulness of the assessment? [Examples: benchmark, norm, best practices, standard, expectations, hypotheses, assumptions]

To what extent did performance meet the criteria?
[benchmark - assumptions]? Provide the number or percent:
1. Did not meet [benchmark - assumptions];
2. Met [benchmark - assumptions];
3. Exceeded [benchmark - assumptions];
4. Not measured

What do these results indicate?

Check if actions or changes are recommended by the results. (Checking here will activate the section below)

What category best describes how the recommendations will be addressed:
1. Reevaluate / modify outcomes
2. Reevaluate / modify processes
3. Modify evaluation tool / methods
4. Change funding priorities / shift staff or resources
5. Implement or modify staff training
6. Implement or modify student orientations
7. Purchase equipment, supplies, technology
8. Other

SUBMIT FORM

ASSESSMENT REPORTING TOOL, existing text from current web form

Acad Year
Area (Choose an area)
Method of Instruction
Choose an Outcome
List all Faculty/Staff involved
Where are artifacts housed and who is the contact person?
Additional information to help you track this assessment (eg. MATH-120-E1234 2012F)

What assessment tool(s)/assignments were used? Use the text box to describe the student prompt, such as the exam questions or lab assignments.
Embedded Exam Questions:
Writing Assignment:
Surveys/ Interviews:
Culminating Project:
Lab assignments:
Other:

Describe the Rubric or criteria that is used for evaluating the tool/assignment.

To what extent did performance meet expectation? Enter the number of students who fell into each of the following categories.
Did not meet expectation/did not display ability:
Met expectation/displayed ability:
Exceeded expectation/displayed ability:
(NA) Ability was not measured due to absence/non-participation in activity:

A. What are your current findings? What do the current results show?
B. Check if actions/changes are indicated. (checking here will open a 'closing the loop' form which you will need to complete when you reassess this outcome)

If you checked above, what category(ies) best describes how they will be addressed?
Redesign faculty development activities on teaching.
Purchase articles/books.
Consult teaching and learning experts about methods.
Create bibliography/books.
Encourage faculty to share activities that foster learning.
Other:
Reevaluate/modify learning outcomes.
Write collaborative grants.

Use the text box to describe the proposed changes and how they will be implemented in more detail.

1) Overview of the cycles and calendars for assessment reporting, program reviews, strategic planning, and other planning cycles: how they all fit together, the big picture, and ways that some departments have aligned their outcomes and assessments
2) Assessment Dialogue highlights: Institutional Learning Outcomes, FYE Assessment and more
3) Next steps: what you and we need to do within the next 4-5 months, Q & A, and an offer of appointments to assist with outcomes and assessments and how they tie into the program review
  a. Sept 15th – deadline for program review and assessment; last day for 12/13, first day for 13/14
  b. Assessment for Instruction will probably be Feb 15, each semester for instruction
  c. Template changes for next time:

• Add more detailed instructions on the template, or add a separate document explaining what to put for each question (like with taxes). For example, more clearly indicate that # of degrees refers to the number of degrees that the program offers, not the number of students who were awarded a degree. Provide more guidance for the next program review process.
• Remove the shaded boxes including budget info, unless the committee can come up with a strategy as to how the authors can provide this information.
• Reposition the Labor Market Data question earlier in the data section so that it is more clearly relevant to all CTE areas.
• Change the two narrative questions at the end of the data section. They were confusing to authors. Maybe we can add one question such as: Overall, what does this data tell you about your program?
• The rational linkage section of the resource requests was answered inconsistently, and at different levels of specificity. The template should give detailed instructions.
• In the program plan section, create a hot link to institutional plans as a reminder to link planning.
• The PRC would like detail relating to recurring costs; specifically, the use. There seems to be confusion on the requests for operational budget: the intent is that expenditures fall into two categories: planning or an increase for operational needs.
• Clarify in the resource request instructions that ongoing resource requests need be included only if an increase due to increased costs is being requested.
• For degrees and programs that are district-wide, the future template should include a box for site-specific variations, constraints, issues and the inherent challenges.
• A place for the author to provide feedback about the template and the data should be included directly in the next template.
• The budget section is difficult to import into a spreadsheet; some revision in the future will be needed in this section for import and collapse.

Changes to Data

• The structure of a few of the program groupings (e.g., Fine Arts, Behavioral Sciences) needs to be reevaluated to make sure that collaborative assessment efforts are reflected in the program review structure, and to better fit faculty needs.
• All courses associated with a program need to be reevaluated with the Dean and area coordinators. Several improvements were made this year, but this should be looked at systematically.
• Indicators based on declared program (persistence, completions) were problematic because many students don’t accurately declare a program. These should be replaced with data based on cohorts who have completed at least a given number of units in a particular TOPS code.
• The number of students who have declared the program, and the number of students who complete the program annually, should be reported.
• We need to find a way of treating courses that we don’t consider consistently active (e.g., 40s, 88s, etc.) and that shouldn’t count against the currency of the curriculum and assessment activity.
• Persistence for low-credit certificates needs to be adjusted because the student may complete within a year.
• A dataset specific to online courses in programs should be reviewed by the PRC.
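
As an illustration only, the cohort-based indicator suggested under Changes to Data (students who have completed at least a given number of units in a particular TOPS code) could be sketched as below. The record layout, the function name cohort_by_tops_units, the sample codes and the 9-unit threshold are all assumptions made for this sketch; they are not taken from the district's data system or from any committee decision.

```python
from collections import defaultdict


def cohort_by_tops_units(enrollments, tops_code, min_units):
    """Return the set of student IDs with at least min_units completed in tops_code.

    enrollments: iterable of (student_id, tops_code, units_completed) tuples.
    This record layout is hypothetical and used for illustration only.
    """
    units = defaultdict(float)
    for student_id, code, completed in enrollments:
        # Only count units earned in the TOPS code of interest.
        if code == tops_code:
            units[student_id] += completed
    return {sid for sid, total in units.items() if total >= min_units}


# Made-up sample records, not real student data.
sample = [
    ("s1", "1002.00", 6.0),
    ("s1", "1002.00", 3.0),
    ("s2", "1002.00", 3.0),
    ("s3", "2001.00", 12.0),
]

# Hypothetical threshold: 9 completed units in the chosen code.
cohort = cohort_by_tops_units(sample, "1002.00", 9.0)
```

Persistence and completion indicators could then be computed over the returned cohort rather than over students who declared the program; the appropriate unit threshold would still need to be set by the PRC.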