Assessment Thursday notes – 11/8/12


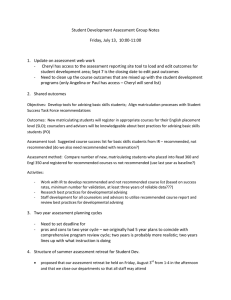

Exploring Best Practices in Student Development Assessment

Attended: Trish Blair, Mary Grace McGovern, Robert Ekholdt, Sheila Hall, Kathy Goodlive, Cheryl Tucker, Anna Duffy, Kintay Johnson

The focus of this activity was to explore assessment methods other than surveys.

Discussion highlights:

- “It’s like trying to assess a class that never meets!” (Kathy Goodlive, Admissions and Records Manager)
- We would be able to better assess student learning outside the classroom if we had a lab for students to complete their student development/services work. We could measure how they are learning on a regular and consistent basis when they complete forms, registration, educational plans, etc.
- Using archival data for assessment, such as transcript analyses:
  - Pros: useful for exposing problematic trends (where students get stuck!)
  - Cons: time consuming, getting access to the data, and potentially boring (!)
  - Ways we could use (or are using) this assessment method:
    - Financial Aid loan process
    - DSPS already using for basic skills assessment
    - ASC is getting students referred from progress reports
    - CDC/EOPS/CalWORKs/Advising could compare SEP and courses actually taken and completed, or identify barriers to completion
- Could focus groups for front-line staff, faculty, associate faculty and student workers be used to find out how to better inform students about key concepts we need them to know?
- Would faculty be interested in a flex day activity this January where we could present the data we have from recent assessment and have further dialogue on how to improve?

Notes:

Transcript analyses (using archival data for assessment): useful for identifying trends and exposing problematic ones (e.g. how long does it take to complete a process; where in the process of moving through an academic program or completing financial aid (FA) paperwork are students getting stuck). Cons are that this assessment method can be time consuming and boring, and there can be data access barriers/issues.

We need to better measure behavior trends, determine why a negative behavior occurs, develop a plan or intervention to change the behavior, then re-assess. For example: identify which questions were most frequently not answered, develop a workshop to teach students how to correctly provide the information, then measure again.

Ways we could use (or are using) this assessment method:

- Financial Aid loan process
- DSPS already using for basic skills assessment
- ASC is getting students referred from progress reports
- CDC/SP/Advising could compare SEP and courses actually taken/completed, or identify barriers to completion

Another way we could get at this information would be to have individual students describe how they interpret the questions on a form, or what the questions mean to them. We have many different learning styles and need to provide information in different ways.

Tracking web hits has been done in the past, but in a more general way. What if we tracked more specific “hits” and correlated them with time of year? How many times do students access certain forms? Ask certain questions? If we gathered this over many years, the trends would become more apparent (useful for comprehensive reviews).

There seems to be a trend at other institutions to have less information available by phone and more available electronically. Students in focus groups said they need to talk to a real person, though, if it is about financial aid or educational planning/advising.

Portfolios: for services this might be considered a student file, e.g. a Financial Aid file; it shows work that they have completed.
How does this show us what they have learned? Can we better measure this through these applications? When did they start and stop certain activities/processes (when they applied and when they finished)?

We would be able to better assess student learning outside the classroom if we had a lab for students to complete their student development/services work. We could measure how they are learning on a regular and consistent basis when they complete forms, registration, educational plans, etc. “It’s like trying to assess a class that never meets!”

Other ways to use portfolio assessment:

- Sometimes students think they have completed a process and are surprised to find out there are more steps.
- For those that do complete and understand a process, what was different?
- We need a graph that links financial aid file completion to awards: what percentage of students received their awards if they had their files completed by date x, y, z? For example, 100% of the students who had completed files by July 1 received awards on the first day of school, etc. We could highlight this on the web page.

Interviews/focus groups: reviewed the methodology used last week. DSPS does not use them because only a select group of students will participate.

Other uses of interviews/focus groups:

- Identify groups that are responsible for getting key information out to students, e.g. faculty (be sure to include associate faculty), front-line staff, student workers. Determine if they know the specific concepts we need them to know and share with students.
- Would it help to have professional development at specific times of year?
- Could we have some focus groups or training developed for this upcoming flex day in January? Could we present the data we have from recent assessment and have further dialogue on how to improve? (Kathy, Sheila, Anna and Cheryl volunteered to support.)

Other: Could we get data on how often students access their email accounts? Direct data might be more accurate than a survey.
(Anna will check with the tech meeting.)

Need a training program for our student workers and front-line staff based on these assessment results.

Need to remember to ask ourselves the “so what” question: make sure that what we are measuring/asking is relevant and meaningful.