advertisement

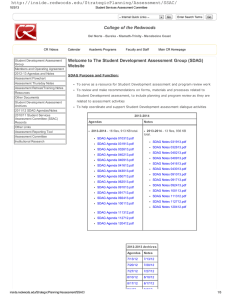

SDAG Notes
Friday, 9/4/12

1. Assessment Reports – finalize by Sept 7

Revisions are under way for the Assessment Reporting Tool on the assessment homepage. The current Reporting Tool will need to be taken down before the revised tool for this academic year can come up. The Assessment Reporting Tool is scheduled to come down two weeks after the start of the Fall 2012 semester. All assessment reports must be completed by 9/7.

2. Two year plans

Everyone’s plans are up.
Ruth shared the two year plan for the library reference section.

3. Data Needs for Program Review and Assessment

Cheryl met with IR last Friday and Zach is working on – IR recommends combining surveys into; SDAG is keeping the second half hour open to meet with program review authors and review assessment data.

4. Website

Discussion on changes to new site – we all like the front page and links.

New reporting form feedback from Ruth to IR:

Choose an Outcome
I see that there is a specific and limited list of outcomes; it looks like they were pulled from the program review and/or assessment report. I did not see my Reference Services outcomes, so I wonder how they would be integrated so that I could report my assessments according to my plan. Can outcomes not be typed into the form?

List all Faculty/Staff involved
Where are artifacts housed and who is the contact person?
Additional information to help you track this assessment (e.g. MATH-120-E1234 2012F)
No comments on this section, looks OK.

What assessment tool(s)/assignments were used? Use the text box to describe the student prompt, such as the exam questions or lab assignments.
Embedded Exam Questions:
Writing Assignment:
Surveys/Interviews:
Culminating Project:
Lab assignments:
Other:
For this section, I don’t know that many of us are actually able to use any of these assessment methods except the survey/interview, so I am glad to see the option to include “other.” I think that I may need a lot of text, unfortunately.
For example, my assessment of the book collection will result in a report that I plan to present to the Senate and maybe the Board; obviously a 10-15 page report will not fit in the form box. So how do I summarize that? If my outcome is “book collection will support assignments, courses, and programs,” with the outcome proven by a) more books in the subject areas; b) books in those subject areas circulate more frequently, could I just put that in the box followed by “SEE artifacts page for ‘Library Book Collection Assessment’ at (link)”?

Describe the Rubric or criteria that is used for evaluating the tool/assignment.
Is this asking for the criteria for evaluating not the assessment itself, but rather the tool used to make the assessment? In other words, would it be something like, “I used the following data collection and analysis method to assess the book collection, and it worked well because of X, Y, and Z; and/or it did not work well because of A, B, and C”? Or is it the place to describe the assessment, the target level desired, and whether it was met or not? For example, something like, “circulation rates by subject were expected to be at least 15% for subject areas relevant to courses and programs, based on professional association standards and previous published research reports of similar studies at similar institutions.”

To what extent did performance meet expectation? Enter the number of students who fell into each of the following categories.
Did not meet expectation/did not display ability:
Met expectation/displayed ability:
Exceeded expectation/displayed ability:
(NA) Ability was not measured due to absence/non-participation in activity:
I don’t have any assessments that will provide me a breakdown of the number of students who met these levels.
I can get things like usage rates, satisfaction and confidence levels, and I will be working on something this fall to link student grades, success and retention, and other factors to library research workshops. Glendale has done this with good results.

A. What are your current findings? What do the current results show?
No comments, looks OK. I guess I could use this section to enter the “met expectations” (or not) levels, what the target and measure were, and the method.

B. Check if actions/changes are indicated. (checking here will open a 'closing the loop' form which you will need to complete when you reassess this outcome)
I have not seen the CTL form and would like to see it before commenting.

If you checked above, what category(ies) best describes how they will be addressed?

Redesign faculty development activities on teaching.
I do not understand this language; it implies that we currently do have faculty development activities [on] teaching. I am also not sure what “redesign” means here. How about “develop professional development activities to support improved teaching” or something like that? However, it does not really affect me, so if others who are affected understand this language then I’m OK with it, too.

Purchase articles/books. Create bibliography/books.
These suggest some work with the librarian. I am surprised, pleasantly, but again, it’s not anything I heard of until now. A phone call or email when discussing library role or services would be nice. I assume the bibliography is for something like a recommended reading list or maybe like the research guides I’ve been working on with the associate faculty librarians? (SEE http://www.redwoods.edu/Eureka/Library/SRG.asp) Again, if I am already doing it …. Also, if these will be created by the faculty, I would still like to be in that loop and wonder if this could somehow be routed to me if checked. As for purchases, I hope it is clear to everyone that they should go through the library; that is what we are set up to do.
That is preferable to individual or departmental purchases, which would then have to be stored in individual or department offices. Maybe to clarify, add the text, “Provide the library with your list of books, videos, online databases, or other resources recommended for purchase,” although that is probably too long.

Consult teaching and learning experts about methods. Encourage faculty to share activities that foster learning.
These two look OK and I might actually be able to use these as a response for some things. Is there some other place where it will be recorded that the consultation and encouragement happened?

Other: Reevaluate/modify learning outcomes. Write collaborative grants.

Use the text box to describe the proposed changes and how they will be implemented in more detail.
I think that for most of my outcomes the check boxes will be left blank and everything will go into the text box. I don’t mind, but it might make it difficult to get broader, cross-departmental summary data, especially if it’s true for other services departments. But we may have to get a few years of this before we see what patterns emerge.