Saurashtra U

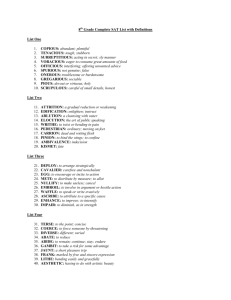
