CDA 5155 Computer Architecture Week 1.5


Start with the materials:

Conductors and Insulators

• Conductor: a material that permits electrical current to flow easily (low resistance to current flow).

Lattice of atoms with free electrons

• Insulator: a material that is a poor conductor of electrical current (high resistance to current flow).

Lattice of atoms with strongly held electrons

• Semi-conductor: a material that can act like a conductor or an insulator depending on conditions (variable resistance to current flow).

Making a semiconductor using

silicon

[Figure: silicon lattice with its bonding electrons (e)]

What is a pure silicon lattice?

A. Conductor

B. Insulator

C. Semi-conductor

N-type Doping

We can increase the conductivity by adding atoms of phosphorus or arsenic to the silicon lattice.

They have one more outer electron than silicon, and that extra electron is free to wander…

This is called n-type doping since we add some free (negatively charged) electrons.

Making a semiconductor using silicon

[Figure: silicon lattice with one phosphorus (P) atom. Label on its extra electron: "This electron is easily moved from here"]

What is an n-doped silicon lattice?

A. Conductor

B. Insulator

C. Semi-conductor

P-type Doping

Interestingly, we can also improve the conductivity by adding atoms of gallium or boron to the silicon lattice.

They have one fewer electron, which creates a hole.

Holes also conduct current by stealing electrons from their neighbors (thus moving the hole).

This is called p-type doping since we have fewer (negatively charged) electrons in the bonds holding the atoms together.

Making a semiconductor using silicon

[Figure: silicon lattice with one gallium (Ga) atom and an unfilled electron position (?) next to it]

This atom will accept an electron even though it is one too many, since it fills the eighth electron position in this shell. Again, this lets current flow, since the electron must come from somewhere to fill this position.

Using doped silicon to make a junction diode

A junction diode allows current to flow in one direction and blocks it in the other.

[Figure: junction diode connected between GND and Vcc. Labels: "Electrons like to move to Vcc"; "Electrons move from GND to fill holes."]

[Figure: junction diode connected between Vcc and GND, with electrons (e) moving through it. Label: "Current flows"]

Making a transistor

Our first level of abstraction is the transistor (basically 2 diodes sitting back-to-back).

[Figure: transistor structure. Labels: Gate, P-type]

Transistors are electronic switches connecting the source to the drain if the gate is “on”.

[Figure: transistor diagrams with Vcc labels]

http://www.intel.com/education/transworks/INDEX.HTM

Review of basic pipelining

• 5 stage “RISC” load-store architecture

– About as simple as things get

• Instruction fetch:

– get instruction from memory/cache

• Instruction decode:

– translate opcode into control signals and read regs

• Execute:

– perform ALU operation

• Memory:

– Access memory if load/store

• Writeback/retire:

– update register file

Pipelined implementation

• Break the execution of the instruction into

cycles (5 in this case).

• Design a separate datapath stage for the

execution performed during each cycle.

• Build pipeline registers to communicate

between the stages.

Stage 1: Fetch

Design a datapath that can fetch an instruction from

memory every cycle.

Use PC to index memory to read instruction

Increment the PC (assume no branches for now)

Write everything needed to complete execution to

the pipeline register (IF/ID)

The next stage will read this pipeline register.

Note that pipeline register must be edge triggered

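[Figure: Stage 1 (Fetch) datapath. A MUX feeds the PC (with enable, en); the PC indexes the instruction memory; an adder computes PC + 1; the instruction bits and PC + 1 are written to the IF / ID pipeline register (with enable, en), which feeds the rest of the pipelined datapath.]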

Stage 2: Decode

Design a datapath that reads the IF/ID pipeline

register, decodes instruction and reads register file

(specified by regA and regB of instruction bits).

Decode is easy, just pass on the opcode and let later stages

figure out their own control signals for the instruction.

Write everything needed to complete execution to the

pipeline register (ID/EX)

Pass on the offset field and both destination register specifiers

(or simply pass on the whole instruction!).

Including PC+1 even though decode didn’t use it.

[Figure: Stage 2 (Decode) datapath. Instruction bits from the IF / ID pipeline register select regA and regB in the register file (which also has Destreg, Data, and en inputs); the contents of regA, the contents of regB, the instruction bits, and PC + 1 are written to the ID / EX pipeline register.]

Stage 3: Execute

Design a datapath that performs the proper ALU

operation for the instruction specified and the values

present in the ID/EX pipeline register.

The inputs are the contents of regA and either the contents of

regB or the offset field on the instruction.

Also, calculate PC+1+offset in case this is a branch.

Write everything needed to complete execution to the

pipeline register (EX/Mem)

ALU result, contents of regB and PC+1+offset

Instruction bits for opcode and destReg specifiers

Result from comparison of regA and regB contents

[Figure: Stage 3 (Execute) datapath. From the ID / EX pipeline register, an adder computes PC+1+offset; a MUX selects the contents of regB or the offset as the second ALU input; the ALU result, contents of regB, PC+1+offset, and instruction bits are written to the EX/Mem pipeline register.]

Stage 4: Memory Operation

Design a datapath that performs the proper memory

operation for the instruction specified and the values

present in the EX/Mem pipeline register.

ALU result contains address for ld and st instructions.

Opcode bits control memory R/W and enable signals.

Write everything needed to complete execution to the

pipeline register (Mem/WB)

ALU result and MemData

Instruction bits for opcode and destReg specifiers

[Figure: Stage 4 (Memory) datapath. The ALU result addresses the data memory (en and R/W come from the instruction bits); the contents of regB supply the store data; PC+1+offset and the MUX control for the PC input go back to the MUX before the PC in stage 1; the ALU result, memory read data, and instruction bits are written to the Mem/WB pipeline register.]

Stage 5: Write back

Design a datapath that completes the execution of

this instruction, writing to the register file if

required.

Write MemData to destReg for ld instruction

Write ALU result to destReg for add or nand

instructions.

Opcode bits also control register write enable signal.
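The five stage descriptions each end with a list of what must be written to the next pipeline register. As a rough summary, those lists could be collected into one record per register, as in the Python sketch below. The class and field names are illustrative choices, not names from the lecture, and the instruction is stored as a tuple purely for convenience.

```python
from dataclasses import dataclass

NOOP = ("noop",)  # hypothetical encoding of an empty pipeline slot


@dataclass
class IFID:
    instr: tuple = NOOP      # instruction bits
    pc_plus_1: int = 0


@dataclass
class IDEX:
    instr: tuple = NOOP      # passed along whole, as the slide suggests
    pc_plus_1: int = 0
    val_a: int = 0           # contents of regA
    val_b: int = 0           # contents of regB
    offset: int = 0


@dataclass
class EXMEM:
    instr: tuple = NOOP      # opcode and destReg specifiers
    branch_target: int = 0   # PC+1+offset
    eq: bool = False         # result of comparing regA and regB contents
    alu_result: int = 0
    val_b: int = 0           # store data for sw


@dataclass
class MEMWB:
    instr: tuple = NOOP      # opcode and destReg specifiers
    alu_result: int = 0
    mem_data: int = 0        # memory read data for lw
```

Each record carries only what later stages still need, which is why the branch target stops at EX/Mem in this sketch.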

[Figure: Stage 5 (Write back) datapath. A MUX selects the ALU result or the memory read data from the Mem/WB pipeline register; this goes back to the data input of the register file. A second MUX selects instruction bits 0-2 or bits 16-18; this goes back to the destination register specifier. The instruction bits also drive the register write enable.]

[Figure: complete five-stage datapath: PC, Inst mem, Register file, Sign extend, ALU, and Data memory, separated by the IF/ID, ID/EX, EX/Mem, and Mem/WB pipeline registers.]

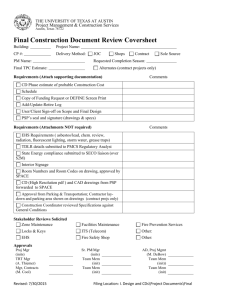

Sample Test Question (Easy)

• Which item does not need to be included in the Mem/WB pipeline register for the LC3101 pipelined implementation discussed in class?

A. ALU result

B. Memory read data

C. PC+1+offset

D. Destination register specifier

E. Instruction opcode

Sample Test Question (Hard?)

• What items need to be added to one of the pipeline registers (discussed in class) to support the <insert nasty instruction description here> ?

A. IF/ID: PC

B. ID/EX: PC+offset

C. EX/Mem: Contents of regA

D. EX/Mem: ALU2 result

E. Mem/WB: Contents of regA

Things to think about…

1. How would you modify the pipeline datapath if you wanted to double the clock frequency?

2. Would it actually double?

3. How do you determine the frequency?

Sample Code (Simple)

Run the following code on pipelined LC3101:

add  1 2 3     ; reg 3 = reg 1 + reg 2
nand 4 5 6     ; reg 6 = ~(reg 4 & reg 5)
lw   2 4 20    ; reg 4 = Mem[reg 2 + 20]
add  2 5 5     ; reg 5 = reg 2 + reg 5
sw   3 7 10    ; Mem[reg 3 + 10] = reg 7
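To check the register values that the pipeline should end up with, the sample code can first be run one instruction at a time, ignoring pipelining. The Python sketch below does that. The function name and tuple encoding are made up for illustration, the initial register values are the ones shown in the datapath snapshots, and Mem[29] = 99 is inferred from the value the lw loads in those snapshots.

```python
def run(program, regs, mem):
    """Execute LC3101-style instructions sequentially (no pipelining)."""
    regs = list(regs)
    mem = dict(mem)
    for op, a, b, c in program:
        if op == "add":
            regs[c] = regs[a] + regs[b]        # destReg = regA + regB
        elif op == "nand":
            regs[c] = ~(regs[a] & regs[b])     # destReg = ~(regA & regB)
        elif op == "lw":
            regs[b] = mem.get(regs[a] + c, 0)  # regB = Mem[regA + offset]
        elif op == "sw":
            mem[regs[a] + c] = regs[b]         # Mem[regA + offset] = regB
        else:
            raise ValueError(f"unsupported opcode: {op}")
    return regs, mem


program = [
    ("add", 1, 2, 3),
    ("nand", 4, 5, 6),
    ("lw", 2, 4, 20),
    ("add", 2, 5, 5),
    ("sw", 3, 7, 10),
]
initial_regs = [0, 36, 9, 12, 18, 7, 41, 22]  # R0..R7 from the snapshots
initial_mem = {29: 99}                        # assumed: the word that lw reads

final_regs, final_mem = run(program, initial_regs, initial_mem)
print(final_regs)     # [0, 36, 9, 45, 99, 16, -3, 22]
print(final_mem[55])  # 22
```

A correct pipelined implementation must produce the same final state, which makes this a handy reference when tracing the cycle-by-cycle snapshots.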

[Figure: pipelined LC3101 datapath with labeled signals. PC, Inst mem, Register file (R0-R7, regA, regB), ALU, and Data memory are separated by the pipeline registers. IF/ID holds the instruction and PC+1; ID/EX holds PC+1, valA, valB, offset, dest, and op; EX/Mem holds target, eq?, ALU result, valB, dest, and op; Mem/WB holds ALU result, mdata, dest, and op. Instruction fields shown: bits 0-2, bits 16-18, bits 22-24.]

[Figures: datapath snapshots for the sample code, Time 0 through Time 9. The recoverable contents are summarized below.]

Initial register file: R0 = 0, R1 = 36, R2 = 9, R3 = 12, R4 = 18, R5 = 7, R6 = 41, R7 = 22

Time 0 (initial state): every pipeline register holds a noop.

Time 1: fetch add 1 2 3.

Time 2: fetch nand 4 5 6. ID/EX holds add (valA = 36, valB = 9, dest = 3).

Time 3: fetch lw 2 4 20. ID/EX holds nand (valA = 18, valB = 7, dest = 6). EX/Mem holds add (ALU result = 45).

Time 4: fetch add 2 5 5. ID/EX holds lw (valA = 9, valB = 18, offset = 20, dest = 4). EX/Mem holds nand (ALU result = -3). Mem/WB holds add (45).

Time 5: fetch sw 3 7 10. ID/EX holds add (valA = 9, valB = 7, dest = 5). EX/Mem holds lw (ALU result = 29). Mem/WB holds nand (-3). R3 = 45.

Time 6: no more instructions. ID/EX holds sw (valA = 45, valB = 22, offset = 10). EX/Mem holds add (ALU result = 16). Mem/WB holds lw (mdata = 99). R6 = -3.

Time 7: EX/Mem holds sw (ALU result = 55, valB = 22). Mem/WB holds add (16). R4 = 99.

Time 8: Mem/WB holds sw. R5 = 16.

Time 9: the pipeline is empty. Final register file: R0 = 0, R1 = 36, R2 = 9, R3 = 45, R4 = 99, R5 = 16, R6 = -3, R7 = 22

Time graphs

Time: 1         2         3         4         5         6         7         8         9
add   fetch     decode    execute   memory    writeback
nand            fetch     decode    execute   memory    writeback
lw                        fetch     decode    execute   memory    writeback
add                                 fetch     decode    execute   memory    writeback
sw                                            fetch     decode    execute   memory    writeback
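With no hazards, the time graph follows a simple rule: instruction i enters fetch in cycle i + 1 and advances one stage per cycle. A small Python sketch of that rule is shown here; the function names are hypothetical and the model assumes no stalls at all.

```python
STAGES = ["fetch", "decode", "execute", "memory", "writeback"]


def stage_at(instr_index, cycle):
    """Stage occupied by instruction `instr_index` (0-based) in `cycle` (1-based).

    Returns None when the instruction is not in the pipeline during that cycle.
    """
    k = cycle - 1 - instr_index
    return STAGES[k] if 0 <= k < len(STAGES) else None


def total_cycles(num_instructions):
    """Cycles needed to drain the pipeline when nothing stalls."""
    return num_instructions + len(STAGES) - 1


print(stage_at(2, 5))   # lw in cycle 5
print(total_cycles(5))  # 9
```

For the five-instruction sample this gives 9 cycles, against 25 if each instruction had to finish all five stages before the next one started.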

What can go wrong?

• Data hazards: since register reads occur in stage 2 and register writes occur in stage 5, it is possible to read the wrong value if it is about to be written.

• Control hazards: A branch instruction may change the PC, but not until stage 4. What do we fetch before that?

• Exceptions: How do you handle exceptions in a pipelined processor with 5 instructions in flight?

Data Hazards

Data hazards

What are they?

How do you detect them?

How do you deal with them?

Pipeline function for ADD

Fetch: read instruction from memory

Decode: read source operands from reg

Execute: calculate sum

Memory: Pass results to next stage

Writeback: write sum into register file

Data Hazards

add 1 2 3
nand 3 4 5

time
add   fetch     decode    execute   memory    writeback
nand            fetch     decode    execute   memory    writeback

If not careful, nand will read the wrong value of R3

[Figures: the pipelined datapath, repeated on three slides with additional MUXes; on the last slide the pipeline registers carry fwd fields in place of the dest fields.]

Three approaches to handling

data hazards

Avoid

Make sure there are no hazards in the code

Detect and Stall

If hazards exist, stall the processor until they go

away.

Detect and Forward

If hazards exist, fix up the pipeline to get the correct

value (if possible)

Handling data hazards I:

Avoid all hazards

Assume the programmer (or the compiler)

knows about the processor implementation.

Make sure no hazards exist.

• Put noops between any dependent instructions.

add 1 2 3      ; write R3 in cycle 5
noop
noop
nand 3 4 5     ; read R3 in cycle 5
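A compiler or assembler pass that takes the "avoid" approach could look roughly like the Python sketch below. It assumes the LC3101 field layout used in the slides (add/nand write the third field, lw writes regB) and a required gap of two instructions, which relies on the register file returning the new value when a register is read and written in the same cycle. All names are hypothetical.

```python
NOOP = ("noop",)


def dest_reg(instr):
    """Register written by an instruction, or None if it writes none."""
    op = instr[0]
    if op in ("add", "nand"):
        return instr[3]          # add/nand regA regB destReg
    if op == "lw":
        return instr[2]          # lw regA regB offset writes regB
    return None                  # sw, beq, noop


def src_regs(instr):
    """Set of registers read by an instruction."""
    op = instr[0]
    if op in ("add", "nand", "sw", "beq"):
        return {instr[1], instr[2]}
    if op == "lw":
        return {instr[1]}
    return set()


def insert_noops(program, gap=2):
    """Pad with noops so a reader sits at least gap + 1 slots after its producer."""
    out = []
    for instr in program:
        recent = out[-gap:] if gap > 0 else []
        needed = 0
        # dist = 1 is the instruction immediately before this one
        for dist, prev in enumerate(reversed(recent), start=1):
            if dest_reg(prev) in src_regs(instr):
                needed = max(needed, gap - dist + 1)
        out.extend([NOOP] * needed)
        out.append(instr)
    return out


for instr in insert_noops([("add", 1, 2, 3), ("nand", 3, 4, 5)]):
    print(instr)
```

The `gap` parameter is the part that ties the program to one implementation: a longer pipeline needs a larger gap, which is the legacy-code problem in concrete form.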

Problems with this solution

Old programs (legacy code) may not run correctly

on new implementations

Longer pipelines need more noops

Programs get larger as noops are included

Especially a problem for machines that try to execute more

than one instruction every cycle

Intel EPIC: Often 25% - 40% of instructions are noops

Program execution is slower

–CPI is 1, but some instructions are noops

Handling data hazards II:

Detect and stall until ready

Detect:

Compare regA with previous DestRegs

• 3 bit operand fields

Compare regB with previous DestRegs

• 3 bit operand fields

Stall:

Keep current instructions in fetch and decode

Pass a noop to execute
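The detection step amounts to four 3-bit comparators. A minimal Python sketch follows, assuming the comparison is made against the destination registers of the instructions currently in ID/EX and EX/Mem, and that `None` marks "no register" (for example a noop or sw ahead in the pipeline). The function and key names are illustrative.

```python
def detect_hazard(reg_a, reg_b, dest_id_ex, dest_ex_mem):
    """Compare the decode-stage source registers with earlier destinations.

    reg_a, reg_b: source specifiers of the instruction in IF/ID
                  (None if that operand is unused).
    dest_id_ex, dest_ex_mem: destReg of the instructions one and two
                  stages ahead (None if they write no register).
    Returns (stall, comparisons).
    """
    def match(src, dest):
        return src is not None and dest is not None and src == dest

    comparisons = {
        "regA vs ID/EX": match(reg_a, dest_id_ex),
        "regA vs EX/Mem": match(reg_a, dest_ex_mem),
        "regB vs ID/EX": match(reg_b, dest_id_ex),
        "regB vs EX/Mem": match(reg_b, dest_ex_mem),
    }
    # For detect-and-stall the four outputs can simply be OR-ed together.
    return any(comparisons.values()), comparisons


# nand 3 4 5 in decode while add 1 2 3 (dest 3) is in ID/EX
stall, detail = detect_hazard(3, 4, dest_id_ex=3, dest_ex_mem=None)
print(stall, detail)
```

When `stall` is true, the PC and IF/ID register keep their contents and a noop is written into ID/EX instead of the decoded instruction.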

[Figure: first half of cycle 3. nand 3 4 5 is in IF/ID and add is in ID/EX. The hazard detection logic compares regA and regB of the instruction in IF/ID with the destination registers of the instructions ahead of it; regA = 3 (011) matches the add's destination register 3 (011), so a hazard is detected. Register file: R1 = 14, R2 = 7, R3 = 10.]


[Figure: first half of cycle 3, with the stall logic. The Hazard signal is shown alongside the enable (en) inputs of the PC and of the IF/ID register, so nand 3 4 5 stays in decode while add is in ID/EX. Register file: R1 = 14, R2 = 7, R3 = 10, R4 = 11.]


[Figures: the stall, cycle by cycle. The recoverable contents are summarized below.]

End of cycle 3: nand 3 4 5 is still in IF/ID, a noop is in ID/EX, and add is in EX/Mem (ALU result = 21).

First half of cycle 4: hazard again; nand 3 4 5 stays in decode.

End of cycle 4: noops are in ID/EX and EX/Mem; add is in Mem/WB (21).

First half of cycle 5: no hazard; nand 3 4 5 can complete decode while add writes back.

End of cycle 5: R3 = 21. nand is in ID/EX (valA = 21, valB = 11), noops are in EX/Mem and Mem/WB, and add 3 7 7 has been fetched.

No more stalling

add 1 2 3
nand 3 4 5

time
add   fetch     decode    execute   memory    writeback
nand            fetch     decode    decode    decode    execute
                          hazard    hazard

Assume Register File gives the right value of R3 when

read/written during same cycle.
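The cost of detect-and-stall can be estimated with a small timing model. The Python sketch below is a simplification under stated assumptions: each instruction is described only by a name, the set of registers it reads, and the register it writes (or None), and a reader may finish decode in the same cycle its producer writes back. The function name is hypothetical.

```python
def decode_done_cycles(program):
    """Cycle in which each instruction finishes decode under detect-and-stall.

    program: list of (name, source_regs, dest_reg_or_None).
    """
    writeback_cycle = {}   # register -> cycle in which its newest value is written
    done = []
    prev_done = 1          # the first instruction is fetched in cycle 1
    for name, sources, dest in program:
        cycle = prev_done + 1                      # in-order: follow the previous one
        for reg in sources:
            cycle = max(cycle, writeback_cycle.get(reg, 0))
        done.append(cycle)
        if dest is not None:
            writeback_cycle[dest] = cycle + 3      # execute, memory, writeback
        prev_done = cycle
    return done


program = [("add", {1, 2}, 3), ("nand", {3, 4}, 5)]
print(decode_done_cycles(program))  # [2, 5]
```

Here nand leaves decode in cycle 5 rather than cycle 3, so it executes in cycle 6: two stall cycles, matching the two "hazard" marks in the time graph.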

Problems with detect and stall

CPI increases every time a hazard is detected!

Is that necessary? Not always!

Re-route the result of the add to the nand

• nand no longer needs to read R3 from reg file

• It can get the data later (when it is ready)

• This lets us complete the decode this cycle

– But we need more control to remember that the data that we

aren’t getting from the reg file at this time will be found

elsewhere in the pipeline at a later cycle.

Handling data hazards III:

Detect and forward

Detect: same as detect and stall

Except that all 4 hazards are treated differently

• i.e., you can’t logical-OR the 4 hazard signals

Forward:

New bypass datapaths route computed data to where it is

needed

New MUX and control to pick the right data

•Beware: Stalling may still be required even in the

presence of forwarding
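The new MUX at each ALU input has to prefer the youngest in-flight value over the (possibly stale) register file value. The Python sketch below is a simplified model with two forwarding sources, EX/Mem and Mem/WB; the lecture's datapath may use more. It also shows the load-use case where a stall is still needed. Function names and the tuple encoding are assumptions made for illustration.

```python
def forward_operand(src_reg, regfile_value, ex_mem, mem_wb):
    """Pick the value the ALU should use for `src_reg`.

    ex_mem, mem_wb: (dest_reg, value) for the instruction one or two
    stages ahead, or None if that instruction writes no register.
    """
    if ex_mem is not None and ex_mem[0] == src_reg:
        return ex_mem[1]          # newest producer wins
    if mem_wb is not None and mem_wb[0] == src_reg:
        return mem_wb[1]
    return regfile_value          # no hazard: value read during decode


def needs_load_use_stall(sources, id_ex_op, id_ex_dest):
    """A lw's data does not exist until its memory stage, so an
    instruction right behind it that reads the loaded register must wait."""
    return id_ex_op == "lw" and id_ex_dest in sources


# nand 3 4 5 in execute, add 1 2 3 (result 21) one stage ahead
print(forward_operand(3, 10, ex_mem=(3, 21), mem_wb=None))  # 21
# sw 6 2 12 in decode right behind lw 3 6 10
print(needs_load_use_stall({6, 2}, "lw", 6))                # True
```

Because each source needs its own MUX select, the individual comparator outputs must be kept separate rather than OR-ed into a single stall signal.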

Sample Code

Which hazards do you see?

add 1 2 3

nand 3 4 5

add 4 3 7

add 6 3 7

lw 3 6 10

sw 6 2 12

[Figures: detect-and-forward snapshots, first half of cycle 3 through end of cycle 8. The recoverable contents are summarized below. The figures show the instruction after nand as add 4 3 7 on one slide and add 6 3 7 on the next.]

Initial register file: R1 = 14, R2 = 7, R3 = 10, R4 = 11, R5 = 77, R6 = 1, R7 = 8

First half of cycle 3: hazard. nand 3 4 5 in decode reads regA = 3 while add is in ID/EX.

End of cycle 3: nand is in ID/EX with the stale R3 value (10) and valB = 11, and the hazard is recorded as H1. add is in EX/Mem with ALU result 21.

First half of cycle 4: new hazard. The add in decode reads register 3 as regB. The value 21 is routed to nand's ALU input.

End of cycle 4: the add is in ID/EX (H2), nand is in EX/Mem with ALU result -2 (H1), and the first add is in Mem/WB. lw 3 6 10 is fetched.

First half of cycle 5: no hazard for lw 3 6 10.

End of cycle 5: R3 = 21. lw is in ID/EX (offset = 10); the ALU result is 22. sw 6 2 12 is fetched.

First half of cycle 6: hazard. sw 6 2 12 reads regA = 6, the destination of lw; the enable (en) inputs of the PC and IF/ID are shown.

End of cycle 6: a noop is in ID/EX; lw is in EX/Mem with ALU result 31; R5 = -2.

First half of cycle 7: hazard on regA = 6 again.

End of cycle 7: sw is in ID/EX (offset = 12, H3), a noop is in EX/Mem, and lw is in Mem/WB with loaded data 99.

First half of cycle 8: the loaded value 99 is routed to sw's ALU input.

End of cycle 8: sw is in EX/Mem with ALU result 111; R6 = 99.

Control hazards

How can the pipeline handle branch and

jump instructions?

Pipeline function for BEQ

Fetch: read instruction from memory

Decode: read source operands from reg

Execute: calculate target address and

test for equality

Memory: Send target to PC if test is equal

Writeback: Nothing left to do

Control Hazards

beq 1 1 10
sub 3 4 5

time
beq   fetch     decode    execute   memory    writeback
sub             fetch     decode    execute

Approaches to handling control

hazards

Avoid

Make sure there are no hazards in the code

Detect and Stall

Delay fetch until branch resolved.

Speculate and Squash-if-Wrong

Go ahead and fetch more instructions in case the guess is correct, but stop them if they shouldn’t have been executed

Handling control hazards I:

Avoid all hazards

Don’t have branch instructions!

Maybe a little impractical

Delay taking branch:

dbeq r1 r2 offset

Instructions at PC+1, PC+2, etc will execute

before deciding whether to fetch from

PC+1+offset. (If no useful instructions can be

placed after dbeq, noops must be inserted.)

Problems with this solution

Old programs (legacy code) may not run correctly

on new implementations

Longer pipelines need more instructions/noops after delayed beq

Programs get larger as noops are included

Especially a problem for machines that try to execute more

than one instruction every cycle

Intel EPIC: Often 25% - 40% of instructions are noops

Program execution is slower

–CPI equals 1, but some instructions are noops

Handling control hazards II:

Detect and stall

Detection:

Must wait until decode

Compare opcode to beq or jalr

Alternately, this is just another control signal

Stall:

Keep current instructions in fetch

Pass noop to decode stage (not execute!)

[Figure: pipelined datapath (PC, Inst mem, REG file, sign ext, ALU, Data memory) with a Control block; a noop is passed into the decode stage.]

Control Hazards

beq 1 1 10
sub 3 4 5

time
beq      fetch     decode    execute   memory    writeback
sub                fetch     fetch     fetch     fetch
                                                 or
Target:                                          fetch

Problems with detect and stall

CPI increases every time a branch is detected!

Is that necessary? Not always!

Only about ½ of the time is the branch taken

• Let’s assume that it is NOT taken…

– In this case, we can ignore the beq (treat it like a noop)

– Keep fetching PC + 1

• What if we are wrong?

– OK, as long as we do not COMPLETE any instructions we

mistakenly executed (i.e. don’t perform writeback)

Handling control hazards III:

Speculate and squash

Speculate: assume not equal

Keep fetching from PC+1 until we know that the

branch is really taken

Squash: stop bad instructions if taken

Send a noop to:

• Decode, Execute and Memory

Send target address to PC
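The difference between stalling on every branch and squashing only on taken branches can be put into numbers with a simple CPI model. In the Python sketch below, the three-cycle penalty follows from the branch resolving in the memory stage; the 20% branch frequency is purely an assumed figure for illustration, and the ½ taken rate is the one quoted in the slides.

```python
def cpi_detect_and_stall(branch_fraction, penalty=3):
    """Every branch delays the next fetch by `penalty` cycles."""
    return 1.0 + branch_fraction * penalty


def cpi_speculate_and_squash(branch_fraction, taken_fraction, penalty=3):
    """Only taken branches squash the `penalty` instructions fetched after them."""
    return 1.0 + branch_fraction * taken_fraction * penalty


branch_fraction = 0.20   # assumption: one instruction in five is a branch
taken_fraction = 0.5     # "about 1/2 of the time" from the slides

print(round(cpi_detect_and_stall(branch_fraction), 2))
print(round(cpi_speculate_and_squash(branch_fraction, taken_fraction), 2))
```

Under these assumptions, predicting not-taken recovers half of the cycles that stalling loses; data hazards are ignored in this model.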

[Figure: speculate-and-squash datapath. With the beq found equal, the target address goes to the PC and noops replace the instructions fetched after the beq (sub, add, nand) in the decode, execute, and memory stages.]

Problems with fetching PC+1

CPI increases every time a branch is taken!

About ½ of the time

Is that necessary?

No! But how can you fetch from the target before you even know the previous instruction is a branch, much less whether it is taken?

[Figure: pipelined datapath with a table of (bpc, target) entries next to the PC, supplying a target to the MUX before the PC.]

Branch prediction

Predict not taken:           ~50% accurate
Predict backward taken:      ~65% accurate
Predict same as last time:   ~80% accurate
Pentium:                     ~85% accurate
Pentium Pro:                 ~92% accurate
Best paper designs:          ~97% accurate
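"Predict same as last time" needs only one bit of state per branch. The Python sketch below measures its accuracy on a made-up outcome sequence (a loop branch taken nine times and then falling through); the sequence and function names are illustrative and are not the workload behind the percentages above.

```python
def last_outcome_accuracy(outcomes, initial_prediction=False):
    """Accuracy of predicting that a branch repeats its previous outcome."""
    prediction = initial_prediction
    correct = 0
    for taken in outcomes:
        if prediction == taken:
            correct += 1
        prediction = taken        # remember the most recent outcome
    return correct / len(outcomes)


def not_taken_accuracy(outcomes):
    """Accuracy of always predicting not taken."""
    return outcomes.count(False) / len(outcomes)


loop_branch = [True] * 9 + [False]   # hypothetical: 9 iterations, then exit
print(last_outcome_accuracy(loop_branch))  # 0.8
print(not_taken_accuracy(loop_branch))     # 0.1
```

The predictor misses twice per loop, once on entry and once on exit, which is the weakness that two-bit counters and later designs address.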


Role of the Compiler

• The primary user of the instruction set

– Exceptions: getting less common

• Some device drivers; specialized library routines

• Some small embedded systems (synthesized arch)

• Compilers must:

– generate a correct translation into machine code

• Compilers should:

– compile quickly; generate fast code

• While we are at it:

– generate reasonable code size; good debug support

Structure of Compilers

• Front-end: translate high level semantics to

some generic intermediate form

– Intermediate form does not have any resource

constraints, but uses simple instructions.

• Back-end: translates intermediate form into

assembly/machine code for target

architecture

– Resource allocation; code optimization under

resource constraints

Architects mostly concerned with optimization

Typical optimizations: CSE

• Common sub-expression elimination

c = array1[d+e] / array2[d+e];

becomes

i = d+e;
c = array1[i] / array2[i];

• Purpose:

–reduce instructions / faster code

• Architectural issues:

–more register pressure

Typical optimization: LICM

• Loop invariant code motion

for (i=0; i<100; i++) {

t = 5;

array1[i] = t;

}

• Purpose:

– remove statements or expressions from loops that

need only be executed once (idempotent)

• Architectural issues:

– more register pressure
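The slide shows only the loop before the transformation. A sketch of the before and after versions, written in Python rather than the slide's C so that the two can be run and compared, might look like this; the function names are hypothetical.

```python
def fill_original(n=100):
    """Loop as written on the slide: t is recomputed on every iteration."""
    array1 = [0] * n
    for i in range(n):
        t = 5                # loop-invariant: same value each time
        array1[i] = t
    return array1


def fill_hoisted(n=100):
    """After loop-invariant code motion: t is computed once, before the loop."""
    array1 = [0] * n
    t = 5                    # hoisted out of the loop
    for i in range(n):
        array1[i] = t
    return array1


print(fill_original() == fill_hoisted())  # True
```

The hoisted value must stay live across the whole loop, which is where the extra register pressure comes from.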

Other transformations

• Procedure inlining: better inst schedule

– greater code size, more register pressure

• Loop unrolling: better loop schedule

– greater code size, more register pressure

• Software pipelining: better loop schedule

– greater code size; more register pressure

• In general – “global” optimization: faster code

– greater code size; more register pressure

Compiled code characteristics

• Optimized code has different characteristics than

unoptimized code.

– Fewer memory references, but it is generally the “easy

ones” that are eliminated

• Example: Better register allocation retains active data in

register file – these would be cache hits in unoptimized code.

– Removing redundant memory and ALU operations

leaves a higher ratio of branches in the code

• Branch prediction becomes more important

Many optimizations provide better instruction scheduling

at the cost of an increase in hardware resource pressure

What do compiler writers want

in an instruction set architecture?

• More resources: better optimization tradeoffs

• Regularity: same behaviour in all contexts

– no special cases (flags set differently for immediates)

• Orthogonality:

– data type independent of addressing mode

– addressing mode independent of operation performed

• Primitives, not solutions:

– keep instructions simple

– it is easier to compose than to fit. (ex. MMX operations)

What do architects want in an

instruction set architecture?

• Simple instruction decode:

– tends to increase orthogonality

• Small structures:

– more resource constraints

• Small data bus fanout:

– tends to reduce orthogonality; regularity

• Small instructions:

– Make things implicit

– non-regular; non-orthogonal; non-primitive

To make faster processors

• Make the compiler team unhappy

– More aggressive optimization over the entire program

– More resource constraints; caches; HW schedulers

– Higher expectations: increase IPC

• Make hardware design team unhappy

– Tighter design constraints (clock)

– Execute optimized code with more complex execution

characteristics

– Make all stages bottlenecks (Amdahl’s law)