Generalised Density Forecast Combinations

advertisement

Generalised Density Forecast Combinations∗

N. Fawcett

G. Kapetanios

Bank of England

Queen Mary, University of London

J. Mitchell

S. Price

Warwick Business School

Bank of England

April 11, 2013

Abstract

Density forecast combinations are becoming increasingly popular as a means

of improving forecast ‘accuracy’, as measured by a scoring rule. In this paper we

generalise this literature by letting the combination weights follow more general

schemes. Sieve estimation is used to optimise the score of the generalised density

combination where the combination weights depend on the variable one is trying to

forecast. Specific attention is paid to the use of piecewise linear weight functions that

let the weights vary by region of the density. We analyse these schemes theoretically,

in Monte Carlo experiments and in an empirical study. Our results show that the

generalised combinations outperform their linear counterparts.

JEL Codes: C53

Keywords: Density Forecasting; Model Combination; Scoring Rules

1

Introduction

Density forecast combinations or weighted linear combinations of prediction models are

becoming increasingly popular in econometric applications as a means of improving forecast ‘accuracy’, as measured by a scoring rule (see Gneiting & Raftery (2007)), especially

∗

Email addresses: Nicholas Fawcett (Nicholas.Fawcett@bankofengland.co.uk); George Kapetanios (g.kapetanios@qmul.ac.uk);

James Mitchell (James.Mitchell@wbs.ac.uk);

Simon Price

(Simon.Price@bankofengland.co.uk)

1

in the face of uncertain instabilities and uncertainty about the preferred model; e.g.,

see Jore et al. (2010), Geweke & Amisano (2012) and Rossi (2012). Geweke & Amisano

(2011) contrast Bayesian model averaging with linear combinations of predictive densities,

so-called “opinion pools”, where the weights on the component density forecasts are optimised to maximise the score, typically the logarithmic score, of the density combination

as suggested in Hall & Mitchell (2007).

In this paper we generalise this literature by letting the combination weights follow

more general schemes. Specifically, we let the combination weights depend on the variable

one is trying to forecast. We let the weights in the density combination depend on, for

example, where in the forecast density you are, which is often of interest to economists.

This allows for the possibility that while one model may be particularly useful (and receive

a high weight in the combination) when the economy is in recession, for example, another

model may be more informative when output growth is positive. The idea of letting the

weights on the component densities vary according to the (forecasted) value of the variable

of interest contrasts with two recent suggestions, to let the weights in the combination

follow a Markov-switching structure (Waggoner & Zha (2012)), or to evolve over time

according to a Bayesian learning mechanism (Billio et al. (2013)). Accommodating timevariation in the combination weights mimics our approach to the extent that over time

one moves into different regions of the forecast density.

The plan of this paper is as follows. Section 2 develops the theory behind the generalised density combinations. It proposes the use of sieve estimation (cf. Chen & Shen

(1998)) as a means of optimising the score of the generalised density combination over a

tractable, approximating space of weight functions on the component densities. We consider, in particular, the use of piecewise linear weight functions that have the advantage

of explicitly letting the combination weights depend on the region, or specific quantiles, of

the density. This means prediction models can be weighted according to their respective

abilities to forecast across different regions of the distribution. Section 3 draws out the

flexibility afforded by generalised density combinations by undertaking two Monte-Carlo

simulations. These show that the generalised combinations are more flexible than their

linear counterparts and can better mimic the true but unknown density, irrespective of its

form. The benefits of using the generalised combinations are found to increase with the

sample size. But the advantage of more flexible weight functions can be outweighed by

estimation error, in small samples. Consequently, the simulations show that in practice

it may be prudent to use parsimonious weight functions, despite their more restrictive

form. Section 4 then shows how the generalised combinations can work better in practice,

2

finding that they deliver more accurate density forecasts of the S&P500 daily return than

optimal linear combinations of the sort used in Hall & Mitchell (2007) and Geweke &

Amisano (2011). Section 5 concludes.

2

Generalised density combinations: theory

We provide a general scheme for combining density forecasts. Consider a covariance

stationary stochastic process of interest yt , t = 1, ..., T and a vector of predictor variables xt , t = 1, ..., T . Our aim is to forecast the density of yt+1 conditional on Ft =

σ xt+1 , (yt , x0t )0 , ..., (y1 , x01 )0 .

We assume the existence of a set of density forecasts. Let there be N such forecasts

denoted by qi (y|Ft ) ≡ qit (y), i = 1, ..., N , t = 1, ...T . We suggest a generalised combined

density forecast, given by the generalised “opinion pool”

pt (y) =

N

X

wi (y) qit (y)

(1)

i=1

such that

Z

pt (y) dy = 1,

(2)

where wi (y) are the weights on the individual density forecasts which themselves depend

on y. This generalises existing work on optimal linear density forecast combinations,

where wi (y) = wi and wi are scalars; see Hall & Mitchell (2007) and Geweke & Amisano

(2011).

We need to provide a mechanism for deriving wi (y). Accordingly, we define a predictive loss function given by

T

X

LT =

l (pt (yt ) ; yt ) .

(3)

t=1

We assume that there exist wi0 (y) in the space of qi -integrable functions Ψqi where

Z

Ψqi = w (.) : w (y) qi (y) dy < ∞ , i = 1, .., N,

(4)

0

; yt ≤ E (l (pt (yt ; w1 , .., wN ) ; yt ))

E (l (pt (yt ) ; yt )) ≡ E l pt yt ; w10 , .., wN

(5)

such that

3

for all (w1 , .., wN ) ∈

Q

Ψqi . We suggest determining wi by minimising LT , i.e.,

i

{ŵ1T , ..., ŵN T } = arg

min

wi ,i=1,...,N

LT .

(6)

It is clear that the minimisation problem in (6) is difficult to solve unless one restricts

the space over which one searches from Ψqi to a more tractable space. A general way

forward à la Chen & Shen (1998) is to search over

(

Φq i =

wη (.) : wη (y) = v0 +

∞

X

)

ν s η s (y, θ s ) , ν s ≥ 0, η s (y, θ s ) ≥ 0

, i = 1, ..., N,

s=1

(7)

where

is a known basis, up to a finite dimensional parameter vector θ s , such

that Φqi is dense in Ψqi , and {ν s }∞

s=1 is a sequence of constants. Such a basis can be made

of any of a variety of functions including trigonometric functions, indicator functions,

neural networks and splines.

Φqi , in turn, can be approximated through sieve methods by

{η s (y, θ s )}∞

s=1

(

ΦTqi =

wT η (.) : wT η (.) = v0 +

pT

X

)

ν s η s (y, θ s ) ν s ≥ 0, η s (y, θ s ) ≥ 0

, i = 1, ..., N,

s=1

(8)

where pT → ∞ is either a deterministic or data-dependent sequence.

Note that a sufficient condition for (2) is

Z X

N

vi0 +

Y i=1

pT

X

!

ν is η s (y, θ s ) qit (y) dy =

s=1

N

X

vi0 +

pT

X

i=1

s=1

N

X

pT

!

Z

ν is

η s (y, θ s ) qit (y) dy

Y

(9)

=

i=1

where κis =

R

Y

η s (y, θ s ) qit (y) dy.

v0 +

X

!

ν is κis

=1

s=1

0

0

Defining ϑT = ν 1 , ..., ν m , θ 01 , ..., θ 0pT , ν i = vi0 , ..., ν 0ipT , w = w (ϑT ) = {w1 , ..., wm }

and

ϑ̂T = arg min LT = arg min LT (wT η (ϑT ))

(10)

ϑT

ϑT

4

we can use Corollary 1 of Chen & Shen (1998) to show the following result

1 LT wT η ϑ̂T

− LT w0 = op (1) .

T

(11)

In fact, Theorem 1 of Chen & Shen (1998) also provides rates for the convergence ϑ̂T

to w0 . However, the proofs depend crucially on the choice of the basis {η s (y, θ s )}∞

s=1 .

Chen & Shen (1998) discuss many possible bases. We will derive a rate for one such basis

below. Unfortunately, this rate is not fast enough to satisfy the condition associated with

(4.2) in Theorem 2 of Chen & Shen (1998) and, therefore, we cannot prove asymptotic

normality for v̂0 when pT → ∞.

Of course, we can limit our analysis to less general spaces. In particular, we can search

over

(

)

p

X

Φpqi = wT η (.) : wpη (.) = v0 +

ν s η s (y, θ s ) , i = 1, .., m.

(12)

s=1

0

for some finite p. Let ϑp = ν 1 , ..., ν p , θ 01 , ..., θ 0p . We make the following assumptions:

Assumption 1 There exists a unique ϑ0p ∈ int Θp that minimises E (LT (wT η (ϑT ))), for

all p.

Assumption 2 l (pt (., ϑp ) ; .) has bounded derivatives with respect to ϑp uniformly over

Θp , t and p.

Assumption 3 yt is an Lr -bounded (r > 2), L2 − N ED process of size −a, on an αmixing process, Vt , of size −r/(r − 2) such that a ≥ (r − 1)/(r − 2).

Assumption 4 Let l(i) (pt (yt , ϑp ) ; yt ) denote the i-th derivative of l with respect to yt ,

where l(0) (pt (yt , ϑp ) ; yt ) = l (pt (yt , ϑp ) ; yt ). Let

(i)

l pt y (1) , ϑ0p ; y (1) − l(i) pt y (2) , ϑ0p ; y (2) ≤ Bt(i) y (1) , y (2) y (1) − y (2) , i = 0, 1, 2

(13)

(i)

where Bt (., .) are nonnegative measurable functions. Then, for all m, and some r > 2,

(i)

B

(y

,

E

(y

|V

,

...,

V

))

t

t

t t−m

t+m < ∞, i = 0, 1, 2,

(14)

(i)

l pt yt , ϑ0p ; y (1) < ∞, i = 0, 1, 2,

r

(15)

r

where k.kr denotes Lr norm.

5

Assumption 5 qit (y) are bounded functions for i = 1, ..., N .

Then, it is straightforward to show that:

Theorem 1 Under Assumptions 1-5,

√ 0

T ϑ̂T − ϑp →p N (0, V )

(16)

where

V = V1−1 V2 V1−1 ,

T

1 X ∂ 2 l (pt (yt ) ; yt ) V1 =

,

T t=1

∂ϑ∂ϑ0

ϑ̂T

!

!0

T

1 X ∂l (pt (yt ) ; yt ) ∂l (pt (yt ) ; yt ) V2 =

.

T t=1

∂ϑ

∂ϑ

ϑ̂T

ϑ̂T

Using this result one can test the null hypothesis

H0 : ν 0i = (vi0 , 0, ..., 0)0 , ∀i.

(17)

This allows us to test whether it is useful to allow the weights to depend on y. Note

the need here for a basis that is fully known and does not depend on unknown parameters

as these would then be unidentified under the null and would require careful treatment.

It is clear from Theorem 1 that the generalised combination scheme can reduce the value

of the objective function compared to the linear combination scheme as long as ν 0i does

not minimise the population objective function. If ν 0i does minimise the loss function

then both schemes will achieve this minimum. And if one of the component densities

is, in fact, the true density, then the loss function is minimised when the true density is

given a unit weight, and all other densities are given a zero weight. However, there is

no guarantee, theoretically, that this will be the case in practice, as other sets of weights

potentially can also achieve the minimum objective function.

Furthermore, in general, the loss function may not have a single minimiser, if wi ∈ Ψqi .

We may then wish to modify the loss function in a variety of ways. We examine a couple

of possibilities here. First, we can require that wi that are far away from a constant

function are penalised. This would lead to a loss function of the form

LT =

T

X

t=1

l (pt (yt ) ; yt ) + T

γ

N Z

X

i=1

|wi (y) − fC (y)|δ dy, δ > 0, 0 < γ < 1,

C

6

(18)

R

where we impose the restriction |wi (y) − fC (y)|δ dy < ∞ and fC (y) is the uniform

density over C. An alternative way to guarantee uniqueness for the solution of (6) is to

impose restrictions that apply to the functions belonging in Φqi . Such a loss function can

take the form

LT =

T

X

l (pt (yt ) ; yt ) + T

t=1

γ

N X

∞

X

|ν s |δ , δ > 0, 0 < γ < 1,

(19)

i=1 s=1

P

δ

where we assume that ∞

s=1 |ν s | < ∞. This sort of modification has parallels to penalisations involved in methods such as penalised likelihood. Given this, it is worth noting

that the above general method for combining densities can easily cross the boundary between density forecast combination and outright density estimation. For example, simply

setting N = 1 and q1t (y) to the uniform density and using some penalised loss function

such as (18) or (19), reduces the density combination method to density estimation akin

to penalised maximum likelihood.

2.1

Piecewise linear weight functions

In what follows we suggest, in particular, the use of indicator functions for η s (y), i.e.

η s = I (rs−1 ≤ y < rs ) where the boundaries r0 < r1 < ... < rs−1 < rs are either known a

priori or estimated from the data. The boundaries might be assumed known on economic

grounds. For example, in a macroeconomic application, some models might be deemed to

forecast better in recessionary than expansionary times (suggesting a boundary of zero),

or when inflation is inside its target range (suggesting boundaries determined by the

inflation target). Otherwise, they must be estimated, as Section 3 considers further.

With piecewise linear weight functions, the generalised combined density forecast is

pt (y) =

pT

N X

X

ν is qit (y) I (rs−1 ≤ y < rs ) ,

(20)

i=1 s=1

where ν is are constants (to be estimated) and

Z

Z

rs

I (rs−1 ≤ y < rs ) qit (y) dy =

κis =

qit (y) dy.

(21)

rs−1

Y

These piecewise linear weights allow for considerable flexibility, and have the advantage

that they let the combination weight depend on y; e.g. it may be helpful to weight some

7

models more highly when y is negative, say the economy is in recession, than when y is

high and output growth is strongly positive. This flexibility increases as s, the number of

regions, tends to infinity.

2.2

Scoring rules

Gneiting & Raftery (2007) discuss a general class of proper scoring rules to evaluate

density forecast accuracy, whereby a numerical score is assigned based on the predictive

density at time t and the value of y that subsequently materialises, here assumed without loss of generality to be at time t + 1. A common choice for the loss function LT ,

within the ‘proper’ class (cf. Gneiting & Raftery (2007)), is the logarithmic scoring rule.

More specific loss functions that might be appropriate in some economic applications can

readily be used instead (e.g. see Gneiting & Raftery (2007)) and again minimised via

our combination scheme. But an attraction of the logarithmic scoring rule is that, absent

knowledge of the loss function of the user of the forecast, by maximising the logarithmic

score one is simultaneously minimising the Kulback-Leibler Information Criterion relative

to the true but unknown density; and when this distance measure is zero, we know from

Diebold et al. (1998) that all loss functions are, in fact, being minimised.

Using the logarithmic scoring rule, with piecewise linear weights, the loss function LT

is given by

LT =

T

X

− log pt (yt+1 ) =

T

X

t=1

t=1

log

pT

N X

X

!

ν is qit (yt+1 ) I (rs−1 ≤ yt+1 < rs ) .

(22)

i=1 s=1

Minimising this over the full-sample (t = 1, ..., T ) with respect to ν is , subject to

PN PpT

s=1 ν is κis = 1, gives the Lagrangian

i=1

L̃T =

T

X

t=1

log

pT

N X

X

!

ν is qit (yt+1 ) I (rs−1 ≤ yt+1 < rs )

i=1 s=1

− λs

pT

N X

X

!

ν is κis − 1 . (23)

i=1 s=1

Then,

"

#

T

X

∂ L̃T

qit (yt+1 ) I (rs−1 ≤ yt+1 < rs )

=

− λs κis = 0

PN PpT

∂ν is

ν

q

(y

)

I

(r

≤

y

<

r

)

is

it

t+1

s−1

t+1

s

i=1

s=1

t=1

8

(24)

N

pT

XX

∂ L̃T

=

ν is κis − 1 = 0.

∂λs

i=1 s=1

(25)

In practice, in a time-series application, without knowledge of the full sample (t =

1, ..., T ) this minimisation would be undertaken recursively at each period t based on

information through (t − 1). In a related context, albeit for evaluation, Diks et al. (2011)

discuss the weighted logarithmic scoring rule, wt (yt+1 ) log qit (yt+1 ), where the weight

function wt (yt+1 ) emphasises regions of the density of interest; one possibility, as in (22),

is that wt (yt+1 ) = I (rs−1 ≤ yt+1 < rs ). But, as Diks et al. (2011) show, the weighted

logarithmic score rule is ‘improper’ and can systematically favour misspecified densities

even when the candidate densities include the true density.

We can then prove the following result using Theorem 1 of Chen & Shen (1998).

Theorem 2 Let Assumptions 1, 3 and 4 hold. Let

T

X

− log pt (yt+1 )

(26)

η s = I (rs−1 ≤ y < rs )

(27)

LT =

t=1

and

T

where {rs }ps=1

are known constants. Then,

0

v − ν̂ T = op T −ϕ

(28)

for all ϕ < 1/2 as long as T 1/2 /pT → 0.

We also have the following Corollary of Theorem 1 for the leading case of using the

logarithmic score as a loss function, piecewise linear sieves and Gaussian like component

densities.

Corollary 3 Let Assumptions 1 and 3 hold. Let qi (y) be bounded functions such that

qi (y) ∼ exp (−y 2 ) as y → ±∞ for all i. Let

T

X

− log pt (yt+1 )

(29)

η s = I (rs−1 ≤ y < rs )

(30)

LT =

t=1

and

9

T

where {rs }ps=1

are known constants. Then, the results of Theorem 1 hold.

3

3.1

Monte Carlo study

Monte Carlo setup

In this Section we illustrate the performance of the generalised density forecast combination scheme using Monte Carlo experiments. We consider two different Monte Carlo

settings. These are distinguished by the nature of the true (but in practice, if not reality, unknown) density. In the first simulation a non-Gaussian true density is considered,

and we compare the ability of linear and generalised combinations of misspecified Gaussian densities to capture the non-Gaussianity. In the second simulation we assume the

true density is Gaussian, but again consider combinations of two misspecified Gaussian

densities. In this case, we know that linear combinations will, in general, incorrectly

yield non-Gaussian densities, given that they generate mixture distributions. It is therefore important to establish if and how the generalised combinations improve upon this.

We implement the generalised density forecast combinations below using piecewise linear weight functions, (20); and we use the simulation to draw out consequences both

of parameter estimation error about ri and uncertainty about the number of thresholds

pT = p.

Setting 1: The true density is a two part normal (TPN) density. We note that a

random variable Y has a two part normal density if the probability density function is

given by

(

A exp(−y − µ)2 /2σ 21 if y < µ

A exp(−y − µ)2 /2σ 22 if y ≥ µ

−1

√

2π(σ 1 + σ 2 )/2

A=

f (Y ) =

(31)

We assume that the investigator combines the normal components given by the densities of two normals with the same mean µ and variances given by σ 21 and σ 22 , respectively.

These two densities are then combined via (a) the generalised combination scheme and (b)

optimised (with respect to the logarithmic score) but fixed weights, as in Hall & Mitchell

(2007) and Geweke & Amisano (2011). When using the generalised combination scheme

we consider indicator functions where ri is either known or unknown but estimated using

a grid search, to examine how much estimation error of boundaries matters. In particular,

10

we set pt = p = 2 and r1 = 0, if ri is assumed known, while we consider a grid with 11

equally spaced points between -1 and 1 if ri is assumed unknown. We fix σ 21 = 1 and

consider various values for σ 22 . In particular, we set σ 22 = 1.5, 2, 4 and 8.

Setting 2: For this setting, the true density is the standard normal. There are two

component densities each of which is a misspecified normal density because the mean

is incorrect. We fix the variances of the component densities to unity but allow for

different values for the means, µ1 and µ2 . Then, we either take a combination of these two

misspecified normal densities using the generalised combination scheme and a piecewise

linear weight function; or use fixed weights, as before. We allow for various values of p from

p = 2 to p = 21 where ri are assumed known. We consider (µ1 , µ2 ) = (−0.5, 0.5) , (−1, 1)

and (−2, 2) .

We consider samples sizes of 50, 100, 200, 400, and 1000 observations and carry out

1000 Monte Carlo replications for each experiment. We compare the performance of the

generalised combination scheme with the performance of the linear combination scheme

and that of the component densities. Our performance criterion to measure the distance

between the true density and the forecast density is their integrated mean squared error.

3.2

Monte Carlo results

Results for the two settings are presented in Tables 1 and 2. We start by considering

the results for Setting 1. When the boundary is assumed known and set to 0 then the

generalised combination scheme dominates the linear scheme for all sample sizes and

values of σ 22 as expected. The difference in performance is massive for larger sample sizes

and values of σ 22 . When the boundary is assumed unknown then the performance of the

generalised combination scheme deteriorates as expected. For small sample sizes and low

values of σ 22 the linear scheme works better; but as more observations become available

and for larger variances the generalised scheme is again the best performing method by

far. Using either of the two component densities alone is, as expected, a bad idea.

Moving on to Setting 2, where the boundary is estimated in all cases, we see a nonlinear trade-off between the complexity of the weight function and the performance of the

generalised scheme. It seems that a weight function of medium complexity (say p = 5)

works best on average. The linear scheme is outperformed unless both the misspecification of the means of the component densities and the sample size is small. Overall, it

is clear that while there is benefit to be had from a more flexible weight function this is

reduced by the difficulty of estimating it as it becomes too complex.

11

Table 1: Monte Carlo results for Setting 1 reporting integrated mean square errors. E1

denotes the generalised combination scheme with known boundary; E2 denotes the generalised combination scheme with unknown boundary; E3 denotes the optimal linear

combination; N (0, 1) and N (0, σ 22 ) are the two marginal (component) densities

.

σ 22

T

50

100

1.5 200

400

1000

50

100

2

200

400

1000

50

100

4

200

400

1000

50

100

8

200

400

1000

Combined Density Component Density

E1

E2

E3

N (0, 1) N (0, σ 22 )

0.670 6.013 0.845 1.654

1.103

0.320 2.619 0.771 1.654

1.103

0.163 1.241 0.714 1.654

1.103

0.079 0.518 0.690 1.654

1.103

0.030 0.177 0.673 1.654

1.103

0.551 4.477 1.696 4.421

2.211

0.277 1.826 1.587 4.421

2.211

0.128 0.888 1.528 4.421

2.211

0.068 0.393 1.504 4.421

2.211

0.028 0.143 1.486 4.421

2.211

0.382 2.209 2.746 12.728

3.182

0.175 1.021 2.643 12.728

3.182

0.094 0.456 2.597 12.728

3.182

0.044 0.216 2.572 12.728

3.182

0.019 0.079 2.556 12.728

3.182

0.224 1.041 2.283 19.413

2.427

0.106 0.484 2.218 19.413

2.427

0.052 0.223 2.191 19.413

2.427

0.026 0.112 2.175 19.413

2.427

0.010 0.043 2.163 19.413

2.427

12

Table 2: Monte Carlo results for Setting 2 reporting integrated mean square errors. E2

denotes the generalised combination scheme with unknown boundary; E3 denotes the

optimal linear combination; N (µ1 , 1) and N (µ2 , 1) are the two marginal (component)

densities.

(µ1 , µ2 )

(−0.5, 0.5)

(−0.5, 0.5)

(−0.5, 0.5)

(−0.5, 0.5)

(−0.5, 0.5)

(−1, 1)

(−1, 1)

(−1, 1)

(−1, 1)

(−1, 1)

(−2, 2)

(−2, 2)

(−2, 2)

(−2, 2)

(−2, 2)

T

Combined Density

E2

p = 2 p = 3 p = 5 p = 7 p = 19 p = 21

50

1.306 1.456 2.033 3.569 7.208

9.986

100 0.725 0.823 1.158 1.894 3.700

5.494

200 0.384 0.442 0.647 0.995 1.868

3.335

400 0.215 0.240 0.379 0.543 0.982

2.270

1000 0.119 0.116 0.179 0.276 0.449

1.618

50

2.184 2.053 2.608 3.893 7.233

9.877

100 1.529 1.288 1.553 2.137 3.761

5.591

200 1.104 0.801 0.890 1.225 1.995

3.415

400 0.927 0.565 0.478 0.723 1.072

2.315

1000 0.813 0.430 0.221 0.358 0.519

1.665

50

9.409 5.926 4.160 4.916 7.745 10.098

100 8.403 4.727 2.451 2.883 4.088

5.786

200 8.107 4.240 1.474 1.738 2.317

3.641

400 7.897 3.981 0.982 1.012 1.360

2.521

1000 7.687 3.774 0.690 0.525 0.742

1.841

13

E3

0.673

0.476

0.390

0.344

0.316

3.997

3.792

3.676

3.617

3.586

22.252

22.039

21.920

21.873

21.838

Component Density

N (µ1 , 1) N (µ2 , 1)

3.418

3.418

3.418

3.418

3.418

12.480

12.480

12.480

12.480

12.480

35.663

35.663

35.663

35.663

35.663

3.418

3.418

3.418

3.418

3.418

12.480

12.480

12.480

12.480

12.480

35.663

35.663

35.663

35.663

35.663

4

Empirical Application

We consider S&P 500 daily percent log returns data from 3 January 1972 to 16 December

2005, the exact same dataset used by Geweke & Amisano (2010, 2011) in their analysis

of optimal linear pools.1 Following Geweke & Amisano (2010, 2011) we then estimate a

Gaussian GARCH(1,1) model and a Student t-GARCH(1,1) model via maximum likelihood (ML) using rolling samples of 1250 trading days (about five years). One day ahead

density forecasts are then produced recursively from the two models for the return on 15

December 1976 through to the return on 19 December 2005, delivering a full out-of-sample

period of 7325 daily observations. The predictive densities are formed by substituting the

ML estimates for the unknown parameters.

The two component densities are then combined using either a linear or generalised

combination scheme. We evaluate the generalised combination scheme in two ways.

Firstly, we fit the generalised and linear combinations over the whole dataset, insample (t = 1, ..., T ). This involves replicating part of the empirical analysis in Geweke &

Amisano (2011) who compared the optimised linear combination with these two marginal

(component) densities and like us (see Table 3 below) find gains to linear combination.

But of course we then consider our generalised combination scheme, setting p = 2, 5, 7,

with threshold intervals fixed as shown in Table 4. We compare the predictive log score

(LogS) of these three generalised combinations with the optimal linear combination as well

as the two marginal component densities themselves. We test equal predictive accuracy

between the linear combination and the other densities based on their Kullback Leibler

Information Criterion (KLIC) differences. A general test is provided by Giacomini &

White (2006); a Wald-type test statistic is given as

T

T

−1

T

X

!0

∆Lt

Σ

−1

T

−1

T

X

!

∆Lt

(32)

t=1

t=1

where ∆Lt is the expected difference in the logarithmic scores of the two prediction models

at time t and equals their KLIC or distance measure and Σ is an appropriate heteroscedasticity and autocorrelation consistent (HAC) estimate of the asymptotic covariance matrix.

Under the null hypothesis of equal accuracy E(∆Lt ) = 0, Giacomini & White (2006) show

that the test statistic tends to χ21 as T → ∞.

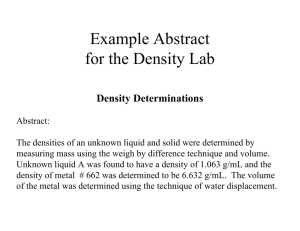

Table 3 shows that the generalised combinations deliver, as expected, higher in-sample

1

We thank Amisano and Geweke for providing both their data and computational details.

14

scores than either of the component densities or the optimal linear combination. Table 4

helps to explain why by reporting the (in-sample) combination weights by region of the

density. These weights are obviously not constrained to sum to unity, and can take any

positive value. They vary considerably both across and within regions, with the effect

that the generalised densities are much more flexible than either component density or the

linear combination: the component densities are building blocks which are put together

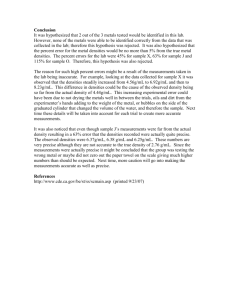

statistically to maximise the logarithmic score of the ensuing combined density. Figure 1

illustrates this flexibility by plotting the density forecasts for the return on 19 December

2005 from the linear and the three generalised combinations (p = 2, 5, 7). Alongside

these forecasts we plot a nonparametric estimate of the (unconditional) returns density,

estimated over the full-sample. We see from Figure 1 that the generalised densities better

capture the fat-tails in the data, albeit with spikes in the generalised densities observed

at the thresholds.

Secondly, we estimate the combination weights using the first 6000 observations and

evaluate the resulting densities over the remaining sample of 1325 observations, 12 September 2000 to 19 December 2005. This means we are performing a real-time exercise as only

past data are used for optimisation. This is an important test given the extra parameters involved when estimating the generalised rather than linear combination. As before,

we consider three generalised schemes setting p = 2, 5, 7 and compare the predictive log

score (LogS) of these generalised combinations with the optimal linear combination as

well as the two marginal component densities themselves. We again test equal predictive

accuracy between the linear combination and the other densities based on their Kullback

Leibler Information Criterion (KLIC) differences.

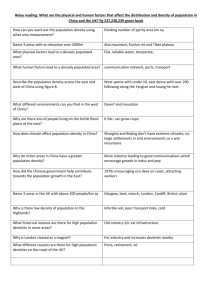

Table 5 shows that real-time generalised combinations do deliver higher scores than

either of the component densities themselves. Combination improves accuracy. But realtime optimal linear combinations do not improve upon the normal-GARCH density. Thus

while, as Geweke & Amisano (2011) show on this same dataset, optimal linear combinations will at least match the performance of the best component density when estimated

over the full sample (t = 1, ..., T ), there is no guarantee that the optimal combination

will help out-of-sample when computed recursively. Importantly, inspection of the KLIC

test statistics shows that the gains associated with use of the generalised combinations

are statistically significant at the 99% significance level. While there are improvements

in the average logarithmic score associated with more flexible generalised combinations,

so when p = 7 rather than when p = 2, the KLIC tests indicate that relative to the linear

combination there are benefits to using a parsimonious weighting function. While a high

15

p pays off in terms of delivering a higher logarithmic score on average, it is associated

with higher variability, which is penalised in the KLIC test.

Table 3: Average predictive logarithmic score (logS) and equal predictive accuracy test

(KLIC), versus the optimal linear combination: 3 January 1972 - 19 December 2005 (7325

observations). In-sample

Marginal

Linear combination

Generalised combination

LogS

KLIC

Normal-GARCH −9544.4154 -2.824

t-GARCH

−9363.5734 -1.205

−9308.0246 p=2

−8502.2566 18.174

p=5

−7681.9769 19.566

p=7

−7354.2126 18.958

Table 4: Model weights for the three generalised combination schemes over

sample.

Intervals (in %)

Model

(−∞, −2.5) (−2.5, −2) (−2, −1) (−1, 0) (0, 1) (1, 2) (2, 2.5)

Scheme 1: p = 2

Normal-GARCH

2.239

2.239

2.239

0.006 0.006 0.000 0.000

t-GARCH

0.692

0.692

0.692

0.831 0.831 2.736 2.736

Scheme 2: p = 5

Normal-GARCH

80.805

80.805

2.344

0.205 0.005 0.000 56.421

t-GARCH

6.407

6.407

0.000

0.619 0.846 2.242 1.178

Scheme 3: p = 7

Normal-GARCH

1035.967

50.326

2.346

0.116 0.005 0.022 32.019

t-GARCH

34.840

0.165

0.000

0.706 0.846 2.218 0.000

16

the whole

(2.5, ∞)

0.000

2.736

56.421

1.178

993.512

2.915

Table 5: Average predictive logarithmic score (logS) and equal predictive accuracy test

(KLIC), versus the optimal linear combination: 12 September 2000 - 19 December 2005

(1325 observations). Out-of-sample

Marginal

Linear combination

Generalised combination

5

Normal-GARCH

t-GARCH

p=2

p=5

p=7

LogS

−1902.6516

−1912.1234

−1907.7278

−1874.0055

−1777.4188

−1725.7779

KLIC

0.953

-4.564

9.673

9.296

8.956

Conclusion

With the growing recognition that point forecasts are best seen as the central points of

ranges of uncertainty more attention is now paid to density forecasts. Coupled with uncertainty about the best means of producing these density forecasts, and practical experience

that combination can render forecasts more accurate, density forecast combinations are

being used increasingly in macroeconomics and finance.

This paper extends this existing literature by letting the combination weights follow

more general schemes. It introduces generalised density forecast combinations, where the

combination weights depend on the variable one is trying to forecast. Specific attention

is paid to the use of piecewise linear weight functions that let the weights vary by region

of the density. These weighting schemes are examined theoretically, with sieve estimation

used to optimise the score of the generalised density combination. The paper then shows

both in simulations and in an application to S&P500 returns that the generalised combinations can deliver more accurate forecasts than linear combinations with optimised

but fixed weights as in Hall & Mitchell (2007) and Geweke & Amisano (2011). Their

use therefore seems to offer the promise of more effective forecasts in the presence of a

changing economic climate.

17

Figure 1: Linear and generalised combination density forecasts (for p = 2, 5, 7) for the

S&P500 daily return on 19 December 2005 compared with a nonparametric estimate of

the (unconditional) returns density (dashed-line)

18

References

Amemiya, T. (1985), Advanced Econometrics, Harvard University Press.

Billio, M., Casarin, R., Ravazzolo, F. & van Dijk, H. K. (2013), ‘Time-varying combinations of predictive densities using nonlinear filtering’, Journal of Econometrics .

Forthcoming.

Chen, X. & Shen, X. (1998), ‘Sieve extremum estimates for weakly dependent data’,

Econometrica 66(2), 289–314.

Davidson, J. (1994), Stochastic Limit Theory, Oxford University Press.

Diebold, F. X., Gunther, A. & Tay, K. (1998), ‘Evaluating density forecasts with application to financial risk management’, International Economic Review 39, 863–883.

Diks, C., Panchenko, V. & van Dijk, D. (2011), ‘Likelihood-based scoring rules for comparing density forecasts in tails’, Journal of Econometrics 163(2), 215–230.

Geweke, J. & Amisano, G. (2010), ‘Comparing and evaluating Bayesian predictive distributions of asset returns’, International Journal of Forecasting 26(2), 216–230.

Geweke, J. & Amisano, G. (2011), ‘Optimal prediction pools’, Journal of Econometrics

164(1), 130–141.

Geweke, J. & Amisano, G. (2012), ‘Prediction with misspecified models’, American Economic Review: Papers and Proceedings 102(3), 482–486.

Giacomini, R. & White, H. (2006), ‘Tests of conditional predictive ability’, Econometrica

74, 1545–1578.

Gneiting, T. & Raftery, A. E. (2007), ‘Strictly proper scoring rules, prediction, and estimation’, Journal of the American Statistical Association 102, 359–378.

Hall, S. G. & Mitchell, J. (2007), ‘Combining density forecasts’, International Journal of

Forecasting 23, 1–13.

Jong, R. D. (1997), ‘Central limit theorems for dependent heterogeneous random variables’, Econometric Theory 13, 353–367.

19

Jore, A. S., Mitchell, J. & Vahey, S. P. (2010), ‘Combining forecast densities from VARs

with uncertain instabilities’, Journal of Applied Econometrics 25, 621–634.

Rossi, B. (2012), Advances in Forecasting under Instabilities, in G. Elliott & A. Timmermann, eds, ‘Handbook of Economic Forecasting, Volume 2’, Elsevier-North Holland

Publications. Forthcoming.

Waggoner, D. F. & Zha, T. (2012), ‘Confronting model misspecification in macroeconomics’, Journal of Econometrics 171(2), 167 – 184.

20

Appendix

Proof of Theorem 1

C or Ci where i takes integer values, denote generic finite positive constants.

We wish to prove that minimisation of

LT (ϑp ) =

T

X

l (pt (yt , ϑp ) ; yt ) ,

t=1

where

pt (y, ϑp ) =

N

X

wpη,i (y, ϑp ) qit (y) ,

i=1

wpη,i (y, ϑp ) = vi0 +

p

X

ν is η s (y, θ is ) ,

s=1

0

produces an estimate, denoted by ϑ̂T , of the value of ϑp = ν 1 , ..., ν p , θ 01 , ..., θ 0p that

minimises limT →∞ E(LT (ϑp )) = L (ϑp ), denoted by ϑ0p , that is asymptotically normal

with an asymptotic variance given in the statement of the Theorem. To prove this we use

Theorem 4.1.3 of Amemiya (1985). The conditions of this Theorem are satisfied if the

following hold:

LT (ϑp ) →p L (ϑp ) , uniformly over ϑp ,

(33)

1 ∂LT (ϑp ) →d N (0, V2 )

T

∂ϑp ϑ0p

(34)

1 ∂ 2 LT (ϑp ) →p V1

T ∂ϑp ∂ϑ0p ϑ̂

(35)

T

To prove (33), we note that by Theorems 21.9, 21.10 and (21.55)-(21.57) of Davidson

(1994), (33) holds if

LT (ϑp ) →p L (ϑp ) ,

(36)

and

∂LT (ϑp ) sup ∂ϑp < ∞

ϑp ∈Θp

(37)

(37) holds by the fact that l (pt (., ϑp ) ; .) has uniformly bounded derivatives with respect

21

to ϑp over Θp and t, by Assumption 2. (35)-(36) and (34) follow by Theorems 19.11 of

Davidson (1994) and Jong (1997), respectively given Assumption 4 on the boundedness

of the relevant moments and if the processes involved are N ED processes on some αmixing processes satisfying the required size restrictions. To show the latter we need to

show that (A) l (pt (yt , ϑp ) ; yt ) and l(2) (pt (yt , ϑp ) ; yt ) are L1 − N ED process on an αmixing process, where l(i) (pt (yt , ϑp ) ; yt ) denotes the i-th derivative of l with respect to

yt , and (B) l(1) (pt (yt , ϑp ) ; yt ) is an Lr -bounded, L2 − N ED process, of size −1/2, on

an α-mixing process of size −r/(r − 2). These conditions are satisfied by Assumption 4,

given Theorem 17.16 of Davidson (1994).

Proof of Theorem 2

We need to show that the conditions A of Chen & Shen (1998) hold. Condition A.1 is

satisfied by Assumption 3. Conditions A.2-A.4 have to be confirmed for the particular

instance of the loss function and the approximating function basis given in the Theorem.

We show A.2 for

T

X

− log pt (yj+1 ) .

LT =

t=1

where

pt (y) =

pT

N X

X

ν is qit (y) I (rs−1 ≤ y < rs ) .

i=1 s=1

0

Let ν 0 = (ν 01 , ..., ν 0N ), ν 0i = (ν 0i1 , ...) denote the set of coefficients that maximise E (LT )

T

and ν T a generic point of the space of coefficients {ν T,is }N,p

i,s=1 . We need to show that

V ar log pt y, ν 0 − log pt (y, ν T ) ≤ Cε2 .

sup

(38)

{kν 0 −ν T k≤ε}

We have

0

log pt y, ν −log pt (y, ν T ) = log

0

pt (y, ν )

pt (y, ν T )

PN PpT

log 1 +

PN PpT

= log

0

s=1 ν is qit (y) I (rs−1 ≤ y < rs )

PNi=1PpT

i=1

s=1

ν T,is qit (y) I (rs−1 ≤ y < rs )

0

i=1

s=1 (ν is − ν T,is ) qit (y) I (rs−1 ≤ y < rs )

PN PpT

i=1

s=1 ν T,is qit (y) I (rs−1 ≤ y < rs )

22

!

!

=

But,

!

PN PpT

0

(ν

−

ν

)

q

(y)

I

(r

≤

y

<

r

)

T,is

it

s−1

s

is

i=1

s=1

≤

log 1 +

PN PpT

i=1

s=1 ν T,is qit (y) I (rs−1 ≤ y < rs )

P P

pT

N

i=1 s=1 (ν 0is − ν T,is ) qit (y) I (rs−1 ≤ y < rs ) C1 ε + PN PpT

i=1

s=1 ν T,is qit (y) I (rs−1 ≤ y < rs )

Then, (38) follows from Assumption 5. Condition A.4, requiring that

sup

{kν 0 −ν T k≤ε}

log pt y, ν 0 − log pt (y, ν T ) ≤ εs UT (y) ,

(39)

where supT E (UT (yt ))γ < ∞, for some γ > 2, follows similarly.

Next we focus on Condition A.3. First, we need to determine the rate at which a function in ΦTqi , where η s = I (rs−1 ≤ y < rs ) can approximate a continuous function, f , with

finite L2 -norm defined on a compact interval of the real line denoted by M = [M1 , M2 ].

To simplify notation and without loss of generality we denote the piecewise linear apPT

proximating function by ps=0

ν s I (rs−1 ≤ y < rs ) and would like to determine the rate at

R P

1/2

2

pT

which M ( s=1 ν s I (rs−1 ≤ y < rs ) − f (y)) dy

converges to zero as pT → ∞. We

∞

∞

pT

T

assume that the triangular array {{rs }s=0 }T =1 = {{rT s }ps=0

}T =1 defines an equidistant

grid in the sense that M1 ≤ rT 0 < rT pT ≤ M2 and

sup rs − rs−1 = O

s

and

inf rs − rs−1 = O

s

1

pT

1

pT

.

For simplicity, we set M1 = rT 0 and rT pT = M2 . We have

Z

M

pT

X

!2

ν s I (rs−1 ≤ y < rs ) − f (y)

s=1

dy =

pT Z

X

s=0

rs

rs−1

By continuity of f we have that there exist ν s such that

sup

sup

s

y∈[rs−1 ,rs ]

|ν s − f (y)| = O

23

1

pT

(ν s − f (y))2 dy

This implies that

Z

rs

C1

sup

(ν s − f (y)) dy ≤ 2 sup

pT s

s

rs−1

2

Z

rs

dy ≤

rs−1

C1

p3T

which implies that

pT Z

X

rs

Z

2

s

rs−1

s=0

rs

(ν s − f (y))2 dy ≤

(ν s − f (y)) dy ≤ pT sup

rs−1

C1

p2T

giving

Z

!2

pT

X

M

ν s I (rs−1 ≤ y < rs ) − f (y)

1/2

dy

= O p−1

.

T

s=1

The next step involves determining HΦTq () which denotes the bracketing L2 metric eni

tropy of ΦTqi . HΦTq () is defined as the logarithm of the cardinality of the -bracketing set

i

of ΦTqi that has the smallest cardinality among all -bracketing sets. An -bracketing set

for ΦTqi , with cardinality Q, is defined as a set of L2 bounded functions hl1 , hu1 , ..., hlQ , huQ

such that maxj huj − hlj ≤ and for any function h in ΦTqi , defined on M = [M1 , M2 ],

there exists j such that hlj ≤ h ≤ huj almost everywhere. We determine the bracketing L2

metric entropy of ΦTqi where η s = I (rs−1 ≤ y < rs ), from first principles. By the definition

of ΦTqi , any function in ΦTqi is bounded. We set

sup sup sup |h (y)| = B < ∞.

T

h∈ΦT

qi

y

Q

Then, it is easy to see that an -bracketing set for ΦTqi is given by hli , hui i=1 where

hliq

=

pT

X

ν lis I (rs−1 ≤ y < rs ) ,

s=0

huiq

=

pT

X

ν uis I (rs−1 ≤ y < rs ) ,

s=0

ν lis = ν lT is takes values in {−B, −B + /pT , −B + 2/pT , ..., B − /pT } and ν uis = ν uT is

2

takes values in {−B + /pT , −B

T , ..., B}. Clearly for > C > 0, Q = QT = O (pT )

+2 2/p

2BpT

and so, then, HΦTq () = ln

= O (ln (pT )). For some δ, such that 0 < δ < 1,

i

24

Condition A.3 of Chen & Shen (1998) involves

δ

−2

Zδ

1/2

HΦT

qi

() d = δ

−2

δ2

Zδ

ln

2Bp2T

1/2

d ≤ δ

−2

δ2

δ

−2

δ ln

2Bp2T

δ

Zδ

ln

2Bp2T

d =

δ2

2

− δ ln

2Bp2T

δ2

<δ

−1

ln

2Bp2T

δ

which must be less than T 1/2 . We have that

2Bp2T

−1

δ ln

≤ T 1/2

δ

or

δ

−1

ln

p2T

δ

= o T 1/2

Setting δ = δ T and parametrising δ T = T −ϕ and pT = T φ gives ϕ < 1/2. So using the

result of Theorem 1 of Chen & Shen (1998) gives

0

v − ν̂ T = Op max T −ϕ , T −φ = Op T − min(ϕ,φ)

Proof of Corollary 3

We need to show that the conditions of Theorem 2 hold for the choices made in the

statement of the Corollary. We have that

l (pt (yt , ϑp ) ; yt ) = log

p

N X

X

!

ν is qit (yt ) I (rs−1 ≤ yt < rs )

i=1 s=1

Without loss of generality we can focus on a special case given by

l (pt (yt , ϑp ) ; yt ) = log (ν 1 q1 (yt ) + ν 2 q2 (yt )) .

We have

l(1) (pt (yt , ϑp ) ; yt ) =

and

l(1) (pt (yt , ϑp ) ; yt ) = −

q1 (yt )

,

ν 1 q1 (yt ) + ν 2 q2 (yt )

q1 (yt )2

.

(ν 1 q1 (yt ) + ν 2 q2 (yt ))2

25

It is clear that both l(1) (pt (yt , ϑp ) ; yt ) and l(2) (pt (yt , ϑp ) ; yt ) are bounded functions of yt

so, by Theorem 17.13 of Davidson (1994), the N ED properties of yt given in Assumption 3

are inherited by l(1) (pt (yt , ϑp ) ; yt ) and l(2) (pt (yt , ϑp ) ; yt ). So we focus on l (pt (yt , ϑp ) ; yt )

and note that, since q(y) ∼ exp (−y 2 ) as y → ±∞,

log exp −y 2

= −y 2

Then, using Example 17.17 of Davidson (1994) which gives that if yt is L2 − N ED of size

−a and Lr -bounded (r > 2), then y 2 is L2 -NED of size −a(r − 2)/2(r − 1). Since we need

that the N ED size of l (pt (yt , ϑp ) ; yt ) to be greater than 1/2 the minimum acceptable

value for r is a ≥ (r − 1)/(r − 2).

26