Efficient MPI Bcast across Different Process Arrival Patterns

advertisement

Efficient MPI Bcast across Different Process Arrival Patterns

Pitch Patarasuk

Xin Yuan

Department of Computer Science, Florida State University

Tallahassee, FL 32306

{patarasu, xyuan}@cs.fsu.edu

Abstract

A Message Passing Interface (MPI) collective operation

such as broadcast involves multiple processes. The process arrival pattern denotes the timing when each process

arrives at a collective operation. It can have a profound

impact on the performance since it decides the time when

each process can start participating in the operation. In

this paper, we investigate the broadcast operation with different process arrival patterns. We analyze commonly used

broadcast algorithms and show that they cannot guarantee high performance for different process arrival patterns.

We develop two process arrival pattern aware algorithms

for broadcasting large messages. The performance of proposed algorithms is theoretically within a constant factor of

the optimal for any given process arrival pattern. Our experimental evaluation confirms the analytical results: existing broadcast algorithms cannot achieve high performance

for many process arrival patterns while the proposed algorithms are robust and efficient across different process arrival patterns.

1 Introduction

A Message Passing Interface (MPI) collective operation

such as broadcast involves multiple processes. The process

arrival pattern denotes the timing when each process arrives at a collective operation [?]. It can have a profound

impact on the performance because it decides the time when

each process can start participating in the operation. A process arrival pattern is said to be balanced when all processes

arrive at the call site at the same time (and thus, start the

operation simultaneously). Otherwise, it is said to be imbalanced.

It has been shown in [?] that (1) process arrival patterns

for collective operations in MPI applications are usually

sufficiently imbalanced to affect the communication performance significantly; (2) it is virtually impossible for MPI

application developers to control the process arrival pat-

terns in their applications; and (3) the performance of collective communication algorithms is sensitive to process arrival patterns. Hence, for an MPI collective operation to

be efficient in practice, it must be able to achieve high performance for both balanced and imbalanced process arrival

patterns. Process arrival pattern is one of the most important factors that affect the performance of collective communication operations. Unfortunately, this important factor

has been largely overlooked by the research and development community. Almost all existing algorithms for MPI

collective operations were designed, analyzed, and evaluated under an unrealistic assumption that all processes start

the operation at the same time (a balanced process arrival

pattern).

Broadcast operation is one of the most common collective operations. In this operation, a message from a process, called root, is sent to all other processes. The MPI

routine that realizes this operation is MPI Bcast [?]. Existing broadcast algorithms that are commonly considered

as efficient include the binomial tree algorithm [?, ?], the

pipelined algorithms [?, ?, ?, ?], and the scatter followed

by all-gather algorithm [?]. These algorithms have been

adopted in widely used MPI libraries such as MPICH [?]

and OPEN MPI [?]. While all of these algorithms perform reasonably well with a balanced process arrival pattern (they were designed under such an assumption), their

performance with more practical imbalanced process arrival

patterns has never been thoroughly studied.

This paper investigates the broadcast operation with different process arrival patterns. We analyze commonly used

broadcast algorithms including the flat-tree algorithm, the

binomial-tree algorithm, the pipelined algorithms, and the

scatter-allgather algorithm, and show that all of these algorithms cannot guarantee high performance for different

process arrival patterns. Our analysis follows a competitive

analysis framework where the performance of an algorithm

relative to the best possible algorithm is given. The performance of an algorithm under different process arrival patterns is characterized by the competitive ratio that bounds

the ratio between the performance of the algorithm and the

performance of the optimal algorithm for any given process

arrival pattern. We show that the competitive ratios of the

commonly used broadcast algorithms are either unbounded

or Ω(n), where n is the number of processes in the operation. This indicates that all of these algorithms may perform

significantly worse than the optimal algorithms with some

process arrival patterns. We developed two process arrival

pattern aware algorithms for broadcasting large messages.

Both algorithms have constant competitive ratios: they perform within a constant factor of the optimal for any process arrival pattern. We empirically evaluate the commonly

used and the proposed broadcast algorithms with different

process arrival patterns. The experimental evaluation confirms our analytical results: existing broadcast algorithms

cannot achieve high performance for many process arrival

patterns while the proposed algorithms are robust and efficient across different process arrival patterns.

The rest of the paper is organized as follows. Section

2 formally describes the process arrival pattern and introduces the performance metrics for measuring the performance of broadcast algorithms with different process arrival

patterns. Section 3 presents the competitive analysis of existing broadcast algorithms. Section 4 details the proposed

process arrival pattern aware algorithms. Section 5 reports

the results of our experiments. Section 6 discusses the related work. Finally, Section 7 concludes the paper.

p

average

arrival time

p

0

a

δ e

f

a

ω

e δ

e a

f

f

p

1

p

2

3

2

δ2

0

0

δ1

0

a1

e1

0

f1

arrival time

exit time

3

2

3

3

3

2

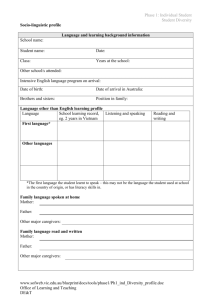

Figure 1. Process arrival pattern

messages that can be sent during the period when some processes arrive while others do not. To capture this notion, the

worst case and average case imbalance times are normalized

by the time to communicate one message. The normalized

results are called the average/worst case imbalance factor.

Let T be the time to communicate the broadcast message

from one process to another process. The average case imbalance factor equals to Tδ̄ and the worst case imbalance

factor equals to Tω . A worst case imbalance factor of 20

means that by the time the last process arrives at the operation, the first process may have sent 20 messages. It has

been shown in [?] that the worst case imbalance factors of

the process arrival patterns in MPI applications are in many

cases much larger than the number of processes involved in

the operations.

2 Background

2.2 Performance metrics

2.1 Process arrival pattern

Let n processes, p0 , p1 , ..., pn−1 , participate in a broadcast operation. Without loss generality, we will assume

that p0 is the root. Let ai be the time when process pi

arrives at the operation. The process arrival pattern can

be represented by the tuple P AP = (a0 , a1 , ..., an−1 ).

n−1

The average process arrival time is ā = a0 +a1 +...+a

.

n

Let fi be the time when process pi finishes the operation.

The process exit pattern can be represented by the tuple

P EP = (f0 , f1 , ..., fn−1 ). Let δi be the time difference

between pi ’s arrival time ai and the average arrival time ā,

δi = |ai − ā|. The imbalance in the process arrival pattern can be characterized by the average case imbalance

n−1

, and the worst case imbalance

time, δ̄ = δ0 +δ1 +...+δ

n

time, ω = maxi {ai } − mini {ai }. Figure ?? depicts the

described parameters.

The different process arrival times at a broadcast operation can significantly affect the performance. For instance,

if a broadcast algorithm requires a process to forward messages to other processes (this occurs in all tree based broadcast algorithms), the forwarding can happen only after the

process arrives. The impacts of an imbalanced process arrival pattern can be better characterized by the number of

Let the process arrival pattern P AP = (a0 , ..., an−1 )

and the process exit pattern P EP = (f0 , ..., fn−1 ). The

elapsed time of each process pi , 0 ≤ i ≤ n − 1, is ei =

fi − ai . Thus, the total time for the operation is e0 + e1 +

... + en−1 and the average per node time for this operation

n−1

is ē = e0 +e1 +...+e

. The worst case per node time is

n

g = maxi {ei }.

In an application, the total time or the average per node

time accurately reflects the time that the program spends on

an operation. Hence, we will use the average per node time

(ē) as the performance metric to characterize the performance of a broadcast algorithm with a given process arrival

pattern. The worst case per node time (g) is also interesting.

However, we consider it secondary since it does not reflect

the actual time that an application spends on the operation.

Note that the impact of load balancing in a broadcast operation, which is better reflected by the worst case per node

time, is not clear with imbalanced process arrival patterns.

In analyzing the performance of broadcast algorithms,

we assume an ideal platform such that the process exit pattern (and thus the average per node time) is a function of the

broadcast algorithm and the process arrival pattern. We ignore other deterministic and non-deterministic factors that

can affect the performance. Our assumptions will be detailed in the next section. We denotes the performance (average per node time) of a broadcast algorithm r with a process arrival pattern P AP = (a0 , ..., an−1 ) as ē(r, P AP ).

An optimal broadcast algorithm for a given process arrival pattern P AP is an algorithm that minimizes the average per node time. Formally, the optimal average per node

time for a process arrival pattern P AP is given by

OP T (P AP ) =

min

{ē(r, P AP )}

r is a broadcast algorithm

The performance ratio of a given broadcast algorithm r

on a given process arrival pattern P AP measures how far

is r from being optimal for the process arrival pattern. It is

defined as the average per node time of r on P AP divided

by the minimum possible average per node time on P AP .

P ERF (r, P AP ) =

ē(r, P AP )

OP T (P AP )

The value for P ERF (r, P AP ) is at least 1. It is exactly

1 if and only if the broadcast algorithm is optimal for the

P AP . When a broadcast algorithm is optimized for a specific process arrival pattern, it does not provide any guarantees for other process arrival patterns. The definition of the

performance ratio follows the competitive analysis framework where performance guarantees of a certain solution

are provided relative to the best possible solution. The definition of performance ratio of a broadcast algorithm can

be extended to be with respect to a set of process arrival

patterns. Let Γ be a set of process arrival patterns, the performance ratio of a broadcast algorithm r on Γ is defined

as

P ERF (r, Γ) = max {P ERF (r, P AP )}

P AP ∈Γ

When Γ includes all possible process arrival patterns,

the performance ratio is referred to as the competitive ratio. The competitive ratio of an algorithm r is denoted by

P ERF (r). The competitive ratio is the worst performance

ratio that an algorithm obtains with respect to all process arrival patterns. In our analysis, the performance of a broadcast algorithm with different process arrival patterns is characterized by its competitive ratio.

3 Competitive ratios of existing broadcast algorithms

3.1 System models

The performance of broadcast algorithms is affected

heavily by both the system architecture and the process arrival pattern. To focus on analyzing the impact of process

arrival patterns, we make the following assumptions.

• When both the sender and the receiver are ready, the time

to communicate a message of size msize between any pair

of processes is T (msize). Communications between multiple pairs of processes can happen simultaneously and do

not interfere with one another. This assumption holds when

each process runs on a different compute node and all compute nodes are connected by a cross-bar switch.

• The communications follow the 1-port model. That is,

when a process is ready, it can send and receive simultaneously. Most contemporary networking technology such as

Ethernet, InfiniBand, and Myrinet supports this model when

each compute node is equipped with one network interface

card.

• When a process receives a message, it can start forwarding the message as soon as the first bit of the message is received. Furthermore, the time to send a message is proportional to the message size: T (a × msize) = a × T (msize).

These assumptions simplify the situation when a message

is forwarded in a pipelined fashion: the message can be

pipelined for each single bit. These assumptions underestimate the communication time by not counting the startup overheads.

We consider two communication models: the blocking

model and the non-blocking model. These two models differentiate in how the system behaves when the sender tries

to send a message and the receiver is not ready for the message (e.g. not arriving at the operation yet). In the blocking

model, the sender is blocked until the receiver is ready. The

actual communication takes place after the receiver is ready.

In the non-blocking model, the sender is not blocked even

when the receiver is not ready. The actual communication

takes place when the sender is ready to send the message.

The message is thus buffered at the receiving end. When the

receiver arrives, it can read the message from local memory.

We assume that reading a message from the local memory

does not take any time. Note that in both models, a process

must arrive at the operation before it can forward a message.

Both the blocking and non-blocking models have practical applications. In MPI implementations, small messages

are usually buffered at the receiving side and follow the

non-blocking model. Large messages are typically communicated using the rendezvous protocol, which follows the

blocking model.

Let a process arrival pattern P AP = (a0 , a1 , ..., an−1 ).

Since nothing can happen in the broadcast operation before

the root arrives at the operation, we will assume that a0 ≤

ai , 1 ≤ i ≤ n−1. Note that p0 is the root. In practice, when

ai < a0 , we can treat it as if ai = a0 , and add ai − a0 in

calculating the total communication time. We denote ∆ =

maxi {ai } − a0 .

3.2 Optimal performance for a given process arrival pattern

To perform the competitive analysis of the algorithms,

we will need to establish the optimal broadcast performance

for a process arrival pattern. The following lemmas bound

the optimal performance.

Lemma 1: Consider broadcasting a message of size msize

with a process arrival pattern P AP = (a0 , ..., an−1 ). Under the non-blocking model, OP T (P AP ) ≤ T (msize).

Under the blocking model, OP T (P AP ) ≤ ∆

n +T (msize),

where ∆ = maxi {ai } − a0 .

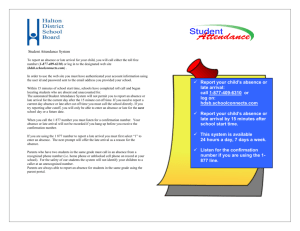

Proof: We prove by constructing an algorithm, called

nearopt, such that ē(nearopt, P AP ) = T (msize) under

the non-blocking model and ē(nearopt, P AP ) = ∆

n +

T (msize) under the blocking model.

Let us sort the arrival times in ascending order and let the

sorted arrival times be (a0 , a01 , a02 , ..., a0n−1 ) and the corresponding processes be p0 , p01 , ..., p0n−1 . Algorithm nearopt,

depicted in Figure ??, works as follows: (1) p0 sends the

broadcast message to p01 and then exits the operation; and

(2) p0i , 1 ≤ i ≤ n − 2, forwards the message it receives to

p0i+1 and then exits the operation.

p0

p’1

p’2

p’n−1

Figure 2. The near optimal broadcast algorithm (nearopt)

Under the non-blocking model, since a0i ≤ a0i+1 , each

process can start forwarding data as soon as it reaches the

operation. Hence, each process pi , 0 ≤ i ≤ n − 1, will exit

the operation at time fi = ai + T (msize). The total time

for this operation is n×T (msize) and ē(nearopt, P AP ) =

n×T (msize)

= T (msize). Under the non-blocking model,

n

OP T (P AP ) ≤ ē(nearopt, P AP ) = T (msize).

Under the blocking model, p0 will need to wait until p01 arrives before it can start sending the data. Thus,

f0 = a01 + T (msize). A process p0i , 1 ≤ i ≤ n − 2,

will need to wait until p0i+1 before it can send the data:

fi0 = a0i+1 + T (msize). The last process p0n−1 will exit at

a0n−1 + T (msize). The total time for this operation is thus,

0

f0 − a0 + f10 − a01 + ... + fn−1

− a0n−1 = ∆ + n × T (msize)

∆

and ē(nearopt, P AP ) = n + T (msize). Hence, under

the blocking model, OP T (P AP ) ≤ ē(nearopt, P AP ) =

∆

n + T (msize). 2

While the nearopt is not always optimal, it is very close

to optimal for any process arrival pattern under both models

as shown in the following lemma.

Lemma 2: Under the non-blocking communication model,

OP T (P AP ) ≥ n−1

n × T (msize). Under the blocking

(msize)

model, OP T (P AP ) ≥ ∆+(n−1)×T

.2

n

The proof is omitted due to the space limitation. Basically, for the non-blocking case, the broadcast operation

must communication at least (n − 1) × msize messages

(each of the receivers must receive a msize sized message) and the total time for this operation is at least T ((n −

=

1) × msize). Hence, OP T (P AP ) ≥ T ((n−1)×msize)

n

n−1

×

T

(msize).

The

blocking

case

follows

a

similar

arn

gument.

3.3 Competitive ratios of common broadcast algorithms

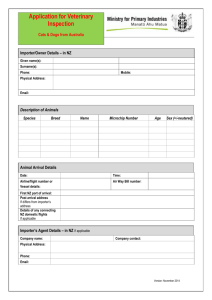

We analyze common broadcast algorithms including the

flat tree algorithm (used in OPEN MPI [?]), the binomial

tree broadcast algorithm (used in OPEN MPI and MPICH

[?]), the linear-tree pipelined broadcast algorithm (used in

SCI-MPICH [?]), and the scatter-allgather broadcast algorithm (used in MPICH). In the flat tree algorithm (Figure ?? (a)), the root sends the broadcast message to each

of the receivers one-by-one. In the binomial tree algorithm

(Figure ?? (b)), broadcast follows a hypercube communication pattern. There are lg(n) steps in this algorithm. In the

first step, p0 sends to p1 ; in the second step, p0 sends to p2

and p1 sends to p3 ; in the i-th step, processes p0 to p2i−1 −1

have the data, each of the process pi , 0 ≤ i ≤ 2i−1 − 1,

sends the message to process pi⊕2i−1 , where ⊕ is the exclusive or operator. In the linear tree pipelined algorithm,

the msize-byte message is partitioned into X segments of

size msize

X . The communication is done by arranging the

processes into a linear array: pi , 0 ≤ i ≤ n − 2, sends to

pi+1 , as shown in Figure ?? (c). Broadcasting the msizebyte message is realized by X pipelined broadcasts of segments of size msize

X . In the scatter followed by all-gather

algorithm, the msize-byte message is first distributed to

the n processes by a scatter operation (each machine gets

msize

n -byte data). After that, an all-gather operation is performed to combine messages to all processes. We denote

the flat tree algorithm as flat, the binomial algorithm as binomial, the linear tree pipelined algorithm as linear p, and

the scatter-allgather algorithm as sca-all.

0

0

1

2

3

4

1

5

6

4

5

7

0

2

3

1

6

7

(a) flat tree

(b) binomial tree

(c) linear tree

Figure 3. Broadcast algorithms

6

7

Lemma 3: Under the non-blocking model, the competitive

ratio of any broadcast algorithm that requires a receiver to

forward a message is unbounded (can potentially be infinite).

Proof: Let a broadcast algorithm r requires a process pi

to forward a message to pj in the operation. To show that

P ERF (r) is unbounded, we must show that there exists

ē(r,P AP )

a process arrival pattern P AP such that OP

T (P AP ) is unbounded. Let us denote Λ as a very large number. We construct the process arrival pattern where all processes except

pi arrive at the same time as p0 . Process pi arrives at a

much later time a0 + Λ. P AP = (a0 , a0 , .., ai , .., a0 =

a0 , a0 , .., a0 + Λ, .., a0 ). Under this arrival pattern, process pj can only finish the operation after it receives the

message forwarded by pi . Hence, fj ≥ a0 + Λ. Thus,

e

n−1

ē(r, P AP ) = e0 +e1 +...e

≥ nj ≥ Λ

n

n . From Lemma

1, OP T (P AP ) ≤ T (msize). Hence, P ERF (r) ≥

Λ

Λ

n

≥ T (msize)

= n×T (msize)

. Since Λ is indeΛ

pendent of n or T (msize), n×T (msize) can potentially be

infinite and P ERF (r) is unbounded. 2

We will use the notion P ERF (r) = ∞ to denote that

P ERF (r) is unbounded.

ē(r,P AP )

OP T (P AP )

Theorem 1: In both the blocking and non-blocking models,

PERF(flat) = Ω(n).

Proof: Consider first the non-blocking model with a balanced process arrival pattern P AP = (a0 , a0 , ..., a0 ). Since

the root sequentially sends the message to each of the receivers, f0 = a0 + (n − 1) × T (msize); f1 = a0 +

T (msize); f2 = a0 + 2 × T (msize); ...; fn−1 = a0 +

(n − 1) × T (msize). Thus, the total time for this operation is f0 − a0 + f1 − a0 + ... + fn−1 − a0 = (n − 1) ×

Pn−1

× T (msize).

T (msize) + i=1 i × T (msize) ≥ n(n−1)

2

Hence, ē(f lat, P AP ) ≥ n−1

×

T

(msize).

From

Lemma

2

1, OP T (P AP ) ≤ T (msize). We have P ERF (f lat) ≥

lat,P AP )

n−1

Hence,

P ERF (f lat, P AP ) = ē(f

OP T (P AP ) ≥

2 .

P ERF (f lat) = Ω(n).

In the blocking model, consider the same P AP as the

non-blocking case. Since all processes arrive at the same

time, ∆ = 0. With the flat-tree algorithm, the total time

for both the blocking and non-blocking models are the

same. From Lemma 1: OP T (P AP ) ≤ ∆

n + T (msize) =

T (msize). Hence, in the blocking model, P ERF (f lat) =

Ω(n). 2

Theorem 2: In the non-blocking model, PERF(binomial) =

∞. In the blocking model, PERF(binomial) = Ω(n).

Proof: In the non-blocking model, since the binomial tree

algorithm requires forwarding of messages, from Lemma 3,

P ERF (binomial) = ∞.

In the blocking model, consider the arrival pattern P AP

where the lg(n) direct children of the root arrive at a0 +

Λ and all other processes arrive at a0 . Let us assume

Λ

Λ

n ≥ T (msize). From Lemma 1, OP T (P AP ) ≤ n +

2Λ

T (msize) ≤ n . Clearly, in this operation, all processes will exit the operation after a0 + Λ. Hence, the total time for this operation is larger than (n − lg(n))Λ ≥

n

2 Λ. The average per node time ē(binomial, P AP ) ≥

Λ

2 . P ERF (binomial) ≥ P ERF (binomial, P AP ) =

ē(binomial,P AP )

≥ n4 . Hence, P ERF (binomial) = Ω(n).

OP T (P AP )

Theorem 3: In the non-blocking model, PERF(linear p) =

∞. In the blocking model, PERF(linear p) = Ω(n).

Proof: The linear tree pipelined algorithm requires the intermediate processes to forward messages. From Lemma 3,

in the non-blocking model, P ERF (linear p) = ∞.

In the blocking model, consider the process arrival pattern where process p1 arrives much later than all other

nodes: P AP = (a0 , a0 + Λ, a0 , ..., a0 ). Let Λ be much

larger than T (msize). Following the linear tree, all processes can receive the broadcast message after a0 + Λ.

Hence, the total time is at least (n − 1)Λ ≥ n2 Λ. Following the similar argument as that in the binomial tree,

P ERF (linear p) = Ω(n). 2

Theorem 4: In the non-blocking model, PERF(sca-all) =

∞. In the blocking model, PERF(sca-all) = Ω(n).

Proof: In the scatter-allgather algorithm, there is an implicit synchronization: in order for any process to finish

the all-gather operation, all process must arrive at the operation. This implicit synchronization applies to both the

blocking and non-blocking models. Consider arrival pattern when p1 arrives late: P AP = (a0 , a0 + Λ, a0 , ..., a0 ).

For both blocking and non-blocking models, due to the implicit synchronization, the total time for this algorithm is at

least (n − 1)Λ ≥ n2 × Λ and the per node time is at least Λ2 .

In the non-blocking model: since OP T (P AP ) ≤

Λ

, which is unT (msize), PERF(sca-all, PAP) ≥ 2T (msize)

bounded. Hence, PERF(sca-all) ≥ PERF(sca-all, PAP)

= ∞. In the blocking model, when Λ

n ≥ T (msize),

2Λ

OP T (P AP ) ≤ Λ

+T

(msize)

≤

.

PERF(sca-all,

PAP)

n

n

≥ n4 . Hence, PERF(sca-all) = Ω(n). 2

Theorems 1 to 4 show that the competitive ratios of all

of these commonly used algorithms are either unbounded or

Ω(n) for both the blocking or non-blocking models, which

indicates that these algorithms cannot guarantee high performance for different process arrival patterns: all of these

algorithms can potentially perform much worse than the optimal algorithm for some process arrival patterns.

4 Process arrival pattern aware algorithms

We present two new broadcast algorithms, one for each

communication model, with constant competitive ratios:

these algorithms guarantee high performance for any process arrival patterns. The new algorithms are designed for

broadcasting large messages. The idea is to add control

messages to make the processes aware of and adapt to the

process arrival pattern. Note that any efficient algorithm

will not wait until all processes arrive before taking any

action. Such an algorithm must make on-line decisions as

processes arrive: hence, the nearopt algorithm in Figure ??

cannot be used.

Since the algorithms are designed for broadcasting large

messages, we will assume that sending and receiving a

small control message do not take time. Moreover, under

the assumption in Section 3.1, broadcasting to a group of

processes when all receivers are ready will take T (msize)

time (e.g. using the nearopt algorithm). In practice, the

linear tree pipelined broadcast algorithm can have a communication time very close to T (msize) when the message

size is sufficiently large [?]. We will call the broadcast to

a sub-group of processes sub-group broadcast. In a subgroup broadcast, only the root knows the group members.

Hence, the root will need to initiate the operation. In practice, this can be done efficiently. Consider for example using the linear tree pipelined broadcast algorithm for a subgroup broadcast. The root can send a header that contains

the list of receivers in the sub-group before the broadcast

message. When a receiver receives the headers, it will know

how to form the linear tree by using the information in the

header.

The algorithm for the non-blocking model, called arrival nb, is shown in Figure ??. When a receiver arrives at

the operation, it checks to see whether the broadcast message has been received. If the message has been received,

it copies the message from the local memory to the output buffer and the operation is completed. If the message

has not been received, the receiver sends a control message

ARRIVED to the root and waits for the sub-group broadcast initiated by the root to receive the message. The root

repeatedly checks whether some process arrives. If some

processes arrive, it initiates a sub-group broadcast among

the processes. If no process arrives, the root picks a receiver

that have not been sent the broadcast message and sends the

message to the receiver.

Theorem 5: Under the assumptions in Section 3.1, ignoring

the control message overheads, PERF(arrival nb) ≤ 3.

Proof: In arrival nb, the algorithm works in rounds, in

each round, the root either sends a message to one node, or

performs a sub-group broadcast. In both case, T (msize)

will be incurred in each round. Since at most n − 1 rounds

are performed by the root, f0 ≤ a0 + (n − 1)T (msize).

Consider a receiving process, pi , 1 ≤ i ≤ n − 1.

In the worst case, pi must wait one full round before it

starts participating in the sub-group broadcast. It takes

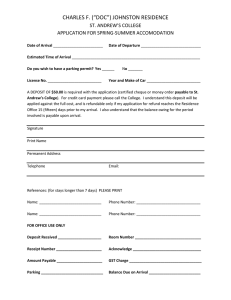

Receiving process:

(1) Check if the message has been buffered locally

(2) If yes, copy the message and exit;

(3) Else, send control message ARRIVED to the root.

(4) Participate in the sub-group broadcast initiated by

the root to receive the broadcast message.

Root process:

(1) While (some receivers have not been sent the message)

(2)

Check if any ARRIVED messages have arrived

(3)

Discard the ARRIVED messages from receivers who

have been sent the broadcast message

(4)

if (a group of receivers have arrived)

(5)

Initiate a sub-group broadcast

(6)

else

(7)

Select a process pi that has not been sent the

message and send the broadcast message to pi

Figure 4. Broadcast algorithm for the nonblocking model (arrival nb)

pi at most 2T (msize) to complete the operation: fi ≤

ai + 2T (msize). Hence, the total time among all processes

in the operation is no more than (n − 1)T (msize) + 2(n −

1)T (msize) and the average per node time is no more than

3(n−1)

×T (msize). This applies to any process arrival patn

tern, PAP. From Lemma 2, OP T (P AP ) ≥ n−1

n T (msize).

P ERF (arrival nb) ≤ 3. 2

The algorithm for the blocking model, called arrival b is

shown in Figure ??. When a receiver arrives at the operation, it sends a control message ARRIVED to the root and

waits for the sub-group broadcast initiated by the root to

receive the broadcast message. The root repeatedly checks

whether some process arrives. If some processes arrive, it

initiates a sub-group broadcast among the processes. Otherwise, it just waits until some processes arrive.

Receiving process:

(1) Send control message ARRIVED to the root.

(2) Participate in the sub-group broadcast initiated by

root to receive the broadcast message.

Root process:

(1) While (some receivers have not been sent the message)

(2)

Check if any ARRIVED messages have arrived

(3)

if (a group of receivers have arrived)

(4)

Initiate a sub-group broadcast

Figure 5. Broadcast algorithm for the blocking

model (arrival b)

Theorem 6: Under the assumptions in Section 3.1, ignoring

the control message overheads, PERF(arrival b) ≤ 3.

Proof: In arrival b, the algorithm works in rounds. In each

round, the root waits for some processes to arrive and performs the sub-group broadcast. Consider a receiving process, pi , 1 ≤ i ≤ n − 1. In the worst case, after pi arrives at the operation, it must wait T (msize) time before

it starts participating in the sub-group broadcast. Hence,

it takes pi at most 2T (msize) to complete the operation:

fi ≤ ai + 2T (msize).

The root exits the operation only after the last process receives the broadcast message. After the last process arrives, it will wait at most 2T (msize) to receive the

message. Hence, f0 ≤ a0 + ∆ + 2T (msize). Hence,

the total time among all processes in the operation is no

more than ∆ + 2T (msize) + 2(n − 1)T (msize) and the

average per node time is no more than ∆+2n×Tn (msize) .

This applies to any process arrival pattern PAP. From

(msize)

Lemma 2, OP T (P AP ) ≥ ∆+(n−1)×T

. Hence,

n

∆+2n×T (msize)

P ERF (arrival b) ≤ ∆+(n−1)×T (msize) ≤ 3. 2

5 Performance study

The study is performed on two clusters: Draco and Cetus. The Draco cluster has 16 compute nodes with a total

of 128 cores. Each node is a Dell PowerEdge 1950 with

two 2.33GHz Quad-core Xeon E5345’s (8 cores per node)

and 8GB memory. The compute nodes are connected by a

20Gbps InfiniBand DDR switch. The MPI library in this

cluster is mvapich-0.9.9. The Cetus cluster is a 16-node

Ethernet switched cluster. Each node is a Dell Dimension 2400 with a 2.8GHz P4 processor and 640MB memory. All nodes run Linux (Fedora) with 2.6.5-1.358 kernel.

The Ethernet card in each machine is Broadcom BCM 5705

1Gbps/100Mbps/10Mbps card with the driver from Broadcom. The Ethernet switch connecting all nodes is a Dell

Powerconnect 2724 (24-port, 1Gbps switch). The MPI library in this cluster is MPICH2-1.0.4.

To evaluate the performance of the existing and proposed broadcast algorithms, we implemented these algorithms over MPI point-to-point routines. Since we consider broadcasting large messages and almost all existing

implementations for broadcasting large messages follow the

blocking model, our evaluation also focuses on the blocking

model. We implemented the flat tree algorithm (flat), the

linear-tree pipelined algorithm (linear p), the binomial tree

algorithm (binomial), and the proposed process arrival pattern aware algorithm (arrival b). In addition, we also consider the native algorithm from the MPI library (denoted

as native). In the implementation of arrival b, there can

be multiple methods to perform the sub-group broadcast.

We choose the most efficient scheme for each platform:

the linear p with a segment size of 8KB on Cetus and

the scatter-allgather algorithm for Draco. Besides studying

the blocking model, we also implemented two algorithms

that follow the non-blocking model on Draco when each

node runs one process: the non-blocking flat tree algorithm

(denoted as flat nb) and the process arrival pattern aware

non-blocking algorithm (denoted as arrival nb). The nonblocking communication is achieved by chopping a large

point-to-point message into a set of small messages to avoid

the rendezvous protocol in MPI and other factors that cause

communications to block. Note that using the MPI Rsend

routine can avoid the rendezvous protocol. However, for

some reason, this routine is still blocking in the broadcast

operation.

(1) r = rand() % MAX IF;

(2) for (i=0; i<ITER; i++) {

(3) MPI Barrier (...);

(4) for (j=0; j<r; j++) {

(5)

/* computation time equals to one message time */

(6) }

(7) t0 = MPI Wtime();

(8) MPI Bcast(...);

(9) elapse += MPI Wtime() - t0 ;

(10)}

Figure 6. Code segment for studying controlled random process arrival patterns

We perform extensive experiments to study the broadcast

algorithms with many different process arrival patterns. We

will present two representative experiments. The first study

investigates the performance of the algorithms with random

process arrival patterns. The second study investigates the

performance when a small subset of processes arrive at the

operation later than other processes. The code segment in

the benchmark to study random process arrival patterns is

shown in Figure ??. In this benchmark, a random value that

is bounded by a constant MAX IF is generated and stored

in r. For different processes, r is different since different

processes have different seeds. The controlled random process arrival pattern is created by first calling an MPI Barrier

and then executing the computation in the loop in lines (4)

to (6) whose duration is controlled by the value of r. The

loop body in line (5) executes roughly the time to send one

broadcast message between two nodes. Hence, the maximum imbalance factor (defined in Section 2) is at most

MAX IF in this experiment. The code to investigate the effect with a few late arriving processes is similar except that

the loop in lines (4)-(6) is always executed MAX IF times

and that each process (except the root) has a probability to

run this loop.

In reporting the results, we fix either the broadcast mes-

1

2

4

8 16 32 64 128

MAX_IF

Average per node time (ms)

(a) Draco (2MB)

300

Native

Arrival_b

Flat

Linear_p

Binomial

250

200

150

Average per node time (ms)

Native

Arrival_b

Flat

Linear_pl

Binomial

100

160

Native

140

Arrival_b

120

Flat

Linear_pl

100

Binomial

80

60

40

20

0

128K 256K 512K

1M

Message Size (bytes)

2M

(a) Draco

50

0

1

2

4

8 16 32 64 128

MAX_IF

(b) Cetus (256KB)

Figure 7. Performance with controlled random process arrival patterns

Average per node time (ms)

Average per node time (ms)

160

140

120

100

80

60

40

20

0

sage size or the M AX IF value. Each data point in our

results is an average of twenty random samples (twenty

random process arrival patterns). In studying the blocking model, n = 128 on Draco (8 processes per node) and

n = 16 on Cetus. In studying the non-blocking model,

n = 16 on Draco.

Figure ?? shows the performance of different algorithms

with controlled random process arrival patterns. The broadcast message size is 2MB on Draco and 256KB on Cetus. On both clusters, when the imbalanced factor is

small (MAX IF is small), arrival b performs slightly worse

than the best performing algorithms. Even in such cases,

arrival b is competitive: the performance is only slightly

worse than the best performing algorithm mainly due to the

overheads introduced. When the process arrival pattern becomes more imbalanced (MAX IF is larger), the arrival b

significantly out-performs all other algorithms. The performance difference is large when MAX IF is large.

400

Native

350

Arrival_b

300

Flat

Linear_p

250

Binomial

200

150

100

50

0

128K 256K 512K

1M

Message Size (bytes)

2M

(b) Cetus

Figure 8. Broadcast with different message

size with M AX IF = n

Native

Arrival_b

Flat

Linear_p

Binomial

120

100

80

60

40

140

120

100

80

40

20

0

128K

0

1

2

4

300

250

200

150

100

50

0

1

2

4

8 16 32 64 128

MAX_IF

(b) Cetus (512KB)

Figure 9. Performance when 20% of processes arrive late

Figure ?? shows the performance when a small subset of

processes arrive late. In this experiment, the sender always

arrives early and each of the receiving process has a 20%

probability to arrive late. The time for late arrival is roughly

equal to the time to send MAX IF messages. The broadcast

message size is 1MB on Draco and 512KB on Cetus. As can

be seen in the figure, binomial, linear p, and native behave

similarly and perform worse than arrival b when MAX IF

is large. The flat and arrival b have similar characteristics

with arrival b performing much better. Figure ?? shows

256K 512K

1M

Message Size (bytes)

2M

(a) Draco

8 16 32 64 128

MAX_IF

Native

Arrival_b

Flat

Linear_p

Binomial

Native

Arrival_b

Flat

Linear_p

Binomial

60

20

(a) Draco (1MB)

Average per node time (ms)

Average per node time (ms)

140

the performance on different message sizes with the same

settings. MAX IF = n (the number of processes) and the

broadcast message size varies. Arrival b is efficient for a

wide range of message sizes.

Average per node time (ms)

Average per node time (ms)

Figure ?? shows the performance with different message

sizes. We fixed MAX IF = n. On Draco, n = 128 and

on Cetus, n = 16. As can be seen in the figure, on both

clusters, arrival b maintains its advantage for a wide range

of message sizes. When the message size is not sufficiently

large, arrival b may not achieve a good performance due

to the control message overheads it introduces. This can be

seen in the 128KB case on Draco.

300

250

200

Native

Arrival_b

Flat

Linear_p

Binomial

150

100

50

0

128K

256K 512K

1M

Message Size (bytes)

2M

(b) Cetus

Figure 10. Performance when 20% of processes arrive late (MAX IF = n)

Figure ?? shows the performance of the non-blocking

algorithms. This figure shows the cases when 20% of processes arrive late. There are two trends in the figure. First,

the non-blocking algorithm performs better than the corresponding blocking algorithm. This is because the reduction

of the waiting time in a non-blocking algorithm. Second,

with a non-blocking algorithm, the average per node time is

not very sensitive to the process arrival pattern: see the flat

line in Figure ?? (a), which indicates that the non-blocking

model is more efficient than the blocking model. Unfortunately, making point-to-point communications with a large

amount of data non-blocking is very difficult: many factors

such as the limited system buffers in various levels of soft-

Average per node time (ms)

ware can make the communication blocking.

20

Arrival_b

Arrival_nb

Flat

Flat_nb

15

10

5

0

1

2

4

8 16 32

MAX_IF

64 128

Average per node time (ms)

(a) 1MB

18

Arrival_b

16

Arrival_nb

14

Flat

12

Flat_nb

10

8

6

4

2

0

128K 256K 512K

1M

Message Size (bytes)

have been developed and adopted in common MPI libraries.

These algorithms achieve high performance with a balanced

process arrival pattern. However, their performance with

imbalanced process arrival patterns has not been thoroughly

studied.

The characteristics and impacts of process arrival patterns in MPI applications were studied in [?]. Our work is

motivated by the results in [?]. While process arrival pattern is an important factor, few collective communication

algorithms have been developed to deal with imbalanced

process arrival patterns. The only algorithms that can adapt

to different process arrival patterns (known to us) were published in [?], where algorithms for all-reduce and barrier

can automatically change their logical topologies based on

the process arrival pattern. We formally analyze the performance of existing broadcast algorithms with different process arrival patterns. This is the first time such analysis is

performed on any collective algorithms.

7 Conclusion

2M

We study efficient broadcast schemes with different process arrival patterns. We show that existing algorithms cannot guarantee high performance with different process arrival patterns, and develop new broadcast algorithms that

achieve constant competitive ratios for the blocking and

non-blocking models. The experiment results show that the

proposed algorithms are robust and efficient for different

process arrival patterns.

(b) MAX IF = 16

Acknowledgement

Figure 11. Non-blocking case (Draco with

each node running one process (n = 16))

Our experimental results confirm the analytical findings.

The commonly used algorithms perform poorly when the

process arrival pattern has a large imbalance factor. On the

other hand, the proposed process arrival pattern aware algorithms react much better to imbalanced process arrival patterns. Moreover, when the imbalance factor is large, using

our algorithms improves the performance very significantly.

6 Related Work

The broadcast operation in different environments has

been extensively studied. Many algorithms were developed

for topologies used in parallel computers such as meshes

and hypercubes [?, ?]. Generic broadcast algorithms including the binomial tree algorithms [?, ?], the pipelined broadcast algorithms [?, ?, ?], the scatter-allgather algorithm [?],

This work is supported in part by National Science Foundation (NSF) grants: CCF-0342540, CCF-0541096, and

CCF-0551555.

References

[1] A. Faraj, P. Patarasuk, and X. Yuan, “A Study of Process Arrival Patterns for MPI Collective Operations,” the 21th ACM

ICS, pages 168-179, June 2007.

[2] S. L. Johnsson and C. T. Ho, “Optimum Broadcasting and

Personalized Communication in Hypercube.” IEEE Trans.

on Computers, 38(9):1249-1268, 1989.

[3] R. Kesavan, K. Bondalapati, and D.K. Panda, “Multicast on

Irregular Switch-based Networks with Wormhole Routing.”

IEEE HPCA, Feb. 1997.

[4] H. Ko, S. Latifi, and P. Srimani, “Near-Optimal Broadcast in

All-port Wormhole-routed Hypercubes using Error Correcting Codes.” IEEE TPDS, 11(3):247-260, 2000.

[5] A. Mamidala, J. Liu, D. Panda. “Efficient Barrier and Allreduce on InfiniBand Clusters using Hardware Multicast and

Adaptive Algorithms.” IEEE Cluster, pages 135-144, Sept.

2004.

[6] P.K. McKinley, H. Xu, A. Esfahanian and L.M. Ni,

“Unicast-Based Multicast Communication in WormholeRouted Networks.” IEEE TPDS, 5(12):1252-1264, Dec.

1994.

[7] MPICH - A Portable Implementation

http://www.mcs.anl.gov/mpi/mpich.

of

MPI.

[8] The MPI Forum, “The MPI-2: Extensions to the Message Passing Interface,” Available at http://www.mpiforum.org/docs/mpi-20-html/mpi2-report.html.

[9] Open MPI: Open Source High Performance Computing,

http://www.open-mpi.org/.

[10] P. Patarasuk, A. Faraj, and X. Yuan, “Pipelined Broadcast on

Ethernet Switched Clusters.” The 20th IEEE IPDPS, April

25-29, 2006.

[11] SCI-MPICH: MPI for SCI-connected Clusters. Available at: www.lfbs.rwth-aachen.de/ users/joachim/SCIMPICH/pcast.html.

[12] R. Thakur, R. Rabenseifner, and W. Gropp, “Optimizing of Collective Communication Operations in MPICH,”

ANL/MCS-P1140-0304, Mathematics and Computer Science Division, Argonne National Laboratory, March 2004.

[13] S. S. Vadhiyar, G. E. Fagg, and J. Dongarra, “Automatically Tuned Collective Communications,” In Proceedings

of SC’00: High Performance Networking and Computing,

2000.

[14] J. Watts and R. Van De Gejin, “A Pipelined Broadcast

for Multidimensional Meshes.” Parallel Processing Letters,

5(2)281-292, 1995.