Transforming an Urban School System: New Haven Promise Education Reforms (2010–2013)

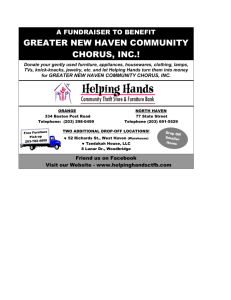
