Mapping the cosmological expansion

Review article

Eric V Linder

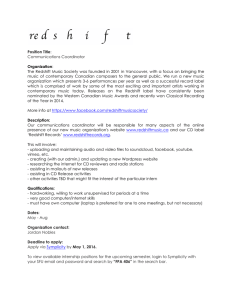
