Belief Propagation for Min-Cost Network Flow: Convergence & Correctness Please share

advertisement

Belief Propagation for Min-Cost Network Flow:

Convergence & Correctness

The MIT Faculty has made this article openly available. Please share

how this access benefits you. Your story matters.

Citation

David Gamarnik, Devavrat Shah, and Yehua Wei. 2010. Belief

propagation for min-cost network flow: convergence \&

correctness. In Proceedings of the Twenty-First Annual ACMSIAM Symposium on Discrete Algorithms (SODA '10). Society

for Industrial and Applied Mathematics, Philadelphia, PA, USA,

279-292. Copyright © 2010, Society for Industrial and Applied

Mathematics

As Published

http://dl.acm.org/citation.cfm?id=1873625

Publisher

Society for Industrial and Applied Mathematics

Version

Final published version

Accessed

Thu May 26 06:36:54 EDT 2016

Citable Link

http://hdl.handle.net/1721.1/73521

Terms of Use

Article is made available in accordance with the publisher's policy

and may be subject to US copyright law. Please refer to the

publisher's site for terms of use.

Detailed Terms

Belief Propagation for Min-cost Network Flow: Convergence & Correctness

David Gamarnik∗

Devavrat Shah†

Abstract

We formulate a Belief Propagation (BP) algorithm in the

context of the capacitated minimum-cost network flow problem (MCF). Unlike most of the instances of BP studied in

the past, the messages of BP in the context of this problem

are piecewise-linear functions. We prove that BP converges

to the optimal solution in pseudo-polynomial time, provided

that the optimal solution is unique and the problem input is

integral. Moreover, we present a simple modification of the

BP algorithm which gives a fully polynomial-time randomized approximation scheme (FPRAS) for MCF. This is the

first instance where BP is proved to have fully-polynomial

running time.

1 Introduction

The Markov random field or graphical model provides

a succinct representation for capturing the dependency

structure between a collection of random variables. In

the recent years, the need for large scale statistical inference has made graphical models the representation of

choice in many statistical applications. There are two

key inference problems for a graphical model of interest.

The first problem is the computation of marginal distribution of a random variable. This problem is (computationally) equivalent to the computation of partition

function in statistical physics or counting the number

of combinatorial objects (e.g., independent sets) in computer science. The second problem is that of finding the

mode of the distribution, i.e., the assignment with maximum likelihood (ML). For a constrained optimization

problem, when the constraints are modeled through a

graphical model and probability is proportional to the

cost of the assignment, an ML assignment is the same as

an optimal solution. Both of these questions, in general,

are computationally hard.

Belief Propagation (BP) is an “umbrella” heuristic

designed for these two problems. Its version for the

first problem is known as the “sum-product algorithm”

and for the second problem is known as the “max-

Yehua Wei

‡

product algorithm”. Both versions of the BP algorithm

are iterative, simple and message-passing in nature.

In this paper, our interest lies in the correctness and

convergence properties of the max-product version of

BP when applied to the minimum-cost network flow

problems (MCF), an important class of linear (or more

generally convex) optimization problems. In the rest

of the paper, we will use the term BP to refer to the

max-product version unless specified otherwise.

The BP algorithm is essentially an approximation

of the dynamic programming assuming that underlying graphical model is a tree [8], [22], [16]. Specifically,

assuming that the graphical model is a tree, one can

obtain a natural parallel iterative version of the dynamic programming in which variable nodes pass messages between each other along edges of the graphical

model. Somewhat surprisingly, this seemingly naive BP

heuristic has become quite popular in practice [2], [9],

[11], [17]. In our opinion, there are two primary reasons

for the popularity of BP. First, like dynamic programming, it is generically applicable, easy to understand

and implementation-friendly due to its iterative, simple

and message-passing nature. Second, in many practical

scenarios, the performance of BP is surprisingly good

[21] [22]. On one hand, for an optimist, this unexpected

success of BP provides a hope for its being a genuinely

much more powerful algorithm than what we know thus

far (e.g., better than primal-dual methods). On the

other hand, a skeptic would demand a systematic understanding of the limitations (and strengths) of BP, in

order to caution a practitioner in using it. Thus, irrespective of the perspective of an algorithmic theorist,

rigorous understanding of BP is very important.

Prior work. Despite these compelling reasons, only

recently have we witnessed an explosion of research

for theoretical understanding of the performance of the

BP algorithm in the context of various combinatorial

optimization problems, both tractable and intractable

(NP-hard) versions. To begin with, Bayati, Shah and

Sharma [6] considered the performance of BP for the

∗ Operations Research Center and Sloan School of Managethe problem of finding maximum weight matching in a

ment, MIT, Cambridge, MA, 02139, e-mail: gamarnik@mit.edu

bipartite graph. They established that BP converges in

† EECS,

MIT,

Cambridge,

MA,

02139,

e-mail: pseudo-polynomial time to the optimal solution when

devavrat@mit.edu

the optimal solution is unique [6]. Bayati et al. [4]

‡ Operations Research Center, MIT, Cambridge, MA, 02139,

as well as Sanghavi et al. [18] generalized this result

e-mail: y4wei@MIT.EDU

279

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

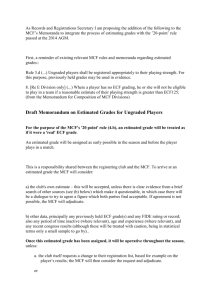

v1

v2

v3

min 4x1 + x2 − 3x4 + x5 + 2x6 − x7

s.t. x1 + 9x3 − x4 + 5x7 = 10

−2x1 + x2 + x3 − 3x4 + x5 − x6 + x7 = 3

e1

e2

e3

e4

e5

e6

x1 + x6 = 2

e7

x ≥ 0, x ∈ R7 .

Figure 1: An example of a factor graph

In FLP , for every 1 ≤ i ≤ m, let Ei = {ej | aij = 0}; and

let Vj = {vi | aij = 0}. For each factor node vi , define

the factor function ψi : R|Ei | → R̄ (R̄ = R ∪ ∞) as:

0

if

j∈Ei aij zj = bj

by establishing correctness and convergence of the BP

ψi (z) =

∞

otherwise

algorithm for b-matching problem when the linear programming (LP) relaxation corresponding to the node

Essentially, ψi represents the i-th equality constraints

constraints has a unique integral optimal solution. Note

in LP. If we let xEi be the subvector of x with indices

that the LP relaxation corresponding to the node conin Ei , then ψi (xEi ) < ∞ if and only if x does not violate

straints is not tight in general, as inclusion of the oddthe i-th equality constraint in LP. Also, for any variable

cycle elimination constraints [20] is essential. Furthernode ej , define variable function φj : R → R̄ as:

more, [4] and [18] established that the BP does not con

verge if this LP relaxation does have a non-integral soif z ≥ 0

cj z

φj (z) =

lution. Thus, for a b-matching problem BP finds an

∞

otherwise

optimal answer when the LP relaxation can find an

optimal solution. In the context of maximum weight Notice that problem LP is equivalent to

n

independent set problem, a one-sided relation between minx∈Rn m

i=1 ψi (xEi ) +

j=1 φj (xj ), which is deLP relaxation and BP is established [19]; if BP con- fined on FLP . As BP is defined on graphical models,

verges then it is correct and LP relaxation is tight. We we can now formulate the BP algorithm as an iterative

also take note of the work on BP for Steiner tree prob- message-passing algorithm on FLP . It is formally

lem [5], special instances of quadratic/convex optimiza- presented below as Algorithm 1. The idea of the

tion problems [13], [12], Gaussian graphical models [10], algorithm is that at the t-th iteration, every factor

and the relation between primal and message-passing for vertex vi sends a message function mtv →e (z) to

i

j

LP decoding [3].

each one of its neighbors ej , where mtvi →ej (z) is vi ’s

estimate of the cost if xj takes value z, based on the

2 BP for Linear Programing Problem

messages vi received at time t from its neighbors.

Suppose we are given an LP problem in the standard Additionally, every variable vertex ej sends a message

form:

function mtej →vi (z) to each one of its neighbors vi ,

where mtej →vi (z) is ej ’s estimate of the cost if xj takes

value z, based on the messages ej received at time t − 1

from all of its neighbors except vj . After N iterations,

min cT x

(LP)

the algorithm calculates the “belief” at variable node

s.t. Ax = b,

ej using the messages sent to vertex ej at time N for

x ≥ 0, x ∈ Rn

each j.

In Algorithm 1, one basic question is the computation procedure of message functions of the form mtej →vi

and mtvi →ej . We claim that every message function is a

where A is a real m × n matrix, b is a real vector convex piecewise-linear function, which allows us to enof dimension m, and c is a real vector of dimension code it in terms of a finite vector describing the break

n. We define its factor graph, FLP , to be a bipartite points and slopes of its linear pieces. In subsection 3.1,

graph, with factor nodes v1 , v2 , ..., vm and variable nodes we describe in detail how mtvi →ej (z) can be computed.

e1 , e2 , ..., en , such that ei and vj are adjacent if and only

In general, the max-product behaves badly when

if aij = 0. For example, the graph shown in Figure 1, is solving an LP instance without a unique optimal solution [6], [4]. Yet, even with the assumption that an

the factor graph of the LP problem:

280

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

Algorithm 1 BP for LP

1: Initialize t = 0, and for each variable node ej , create

factor to variable message m0vi →ej (z) = 0, ∀ej ∈ Ei .

2: for t = 1, 2, ..., N do

3:

∀ej , vi , where vi ∈ Vj , set mtej →vi (z) to

φj (z) +

vi ∈Vj \vi

4:

5:

6:

7:

8:

mt−1

vi →ej (z),

ej ∈Ei \ej

ce xe ,

(MCF)

e∈E

Δ(v, e)xe = bv , ∀v ∈ V

(demand constraints)

e∈Ev

0 ≤ xe ≤ ue ,

∀z ∈ R.

∀ej , vi , where ej ∈ Ei , set mtvi →ej (z) to

⎧

⎨

ψi (z̄) +

min

z̄∈R|Ei | ,z̄j =z ⎩

min

⎫

⎬

mtej →vi (z̄j ) ,

⎭

∀z ∈ R.

t := t + 1

end for

Set the “belief” function as bN

j (z) = φj (z) +

N

m

(z),

∀1

≤

j

≤

n.

vi →ej

vi ∈Vj

Calculate the “belief” by finding x̂N

=

j

arg min bN

(z)

for

each

j.

j

Return x̂N .

∀e ∈ E

(flow constraints)

where ce ≥ 0, ue ≥ 0, ce ∈ R, ue ∈ R̄, for each e ∈ E,

and bv ∈ R for each v ∈ V . As MCF is a special class

of LP, we can view each arc e ∈ E as a variable vertex

and each v ∈ V as a factor vertex, and define functions

ψv , φe , ∀v ∈ V , ∀e ∈ E:

0

if

e∈Ev Δ(v, e)ze = bv

ψv (z) =

∞

otherwise

φe (z) =

ce z

∞

if 0 ≤ z ≤ ue

otherwise

Algorithm 2 BP for Network Flow

1: Initialize t = 0. For each e ∈ E, suppose e = (v, w).

Initialize messages m0e→v (z) = 0, ∀z ∈ R and

m0e→w (z) = 0, ∀z ∈ R.

2: for t = 1, 2, 3, ..., N do

LP problem has a unique optimal solution, in general

3:

∀e ∈ E, let e = (v, w), update messages:

x̂N in Algorithm 1 does not converge to the unique opmte→v (z) = φe (z)

timal solution as N grows large. One such instance

⎫

⎧

(LP-relaxation of an instance of the maximum-weight

⎬

⎨

t−1

independent set problem) which was earlier described

mẽ→w

(z̄ẽ ) ,

ψw (z̄) +

+

min

⎭

z̄∈R|Ew | ,z̄e =z ⎩

in [19]:

ẽ∈Ew \e

9:

∀z ∈ R

min −

3

i=1

2xi −

3

s.t. xi + yj + zij = 1,

x, y, z ≥ 0

3

mte→w (z) = φe (z)

⎫

⎧

⎬

⎨

+

min

mt−1

(z̄ẽ ) ,

ψv (z̄) +

ẽ→v

⎭

z̄∈R|Ev | ,z̄e =z ⎩

3yi

j=1

∀1 ≤ i ≤ 3, 1 ≤ j ≤ 3

ẽ∈Ev \e

BP Algorithm for Min-Cost Network Flow

Problem

We start off this section by defining the capacitated mincost network flow problem (MCF). Given a directed

graph G = (V, E), let V , E denote the set of vertices

and arcs respectively, and let |V | = n, |E| = m. For any

vertex v ∈ V , let Ev be the set of arcs incident to v, and

for any e ∈ Ev , let Δ(v, e) = 1 if e is an out-arc of v (i.e.

arc e = (v, w), for some w ∈ V ), and Δ(v, e) = −1 if e

is an in-arc of v (i.e. arc e = (w, v), for some w ∈ V ).

MCF on G is formulated as follows [7],[1]:

281

∀z ∈ R.

4:

5:

6:

t := t + 1

end for

∀e ∈ E, let e = (v, w), and set the “belief” function:

N

N

nN

e (z) = me→v (z) + me→w (z) − φe (z)

Calculate the “belief” by finding x̂N

=

e

arg min nN

(z)

for

each

e

∈

E.

e

8: Return x̂N , which is the guess of the optimal

solution of MCF.

7:

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

Clearly, solving MCFis equivalent to solving

minx∈R|E| { v∈V ψv (xEv )+ e∈E φe (xe )}. We can then

apply BP algorithm for LP (Algorithm 1) for MCF.

Because of the special structure of MCF, each variable

node is adjacent to exactly two factor nodes. This allows us to skip the step of update messages mtv→e , and

present a simplified version of BP algorithm for MCF,

which we refer to as Algorithm 2.

To understand Algorithm 2 at a high level, each

arc can be thought of as an agent, which is trying to

figure out its own flow while meeting the conservation

constraints at its endpoints. Each link maintains an

estimate of its “local cost” as a function of its flow (thus

this estimate is a function, not a single number). At

each time step an arc updates its function as follows:

the cost of assigning x units of flow to link e is the cost

of pushing x units of flow through e plus the minimumcost way of assigning flow to neighboring edges (w.r.t.

the functions they computed last time step) to restore

flow conservation at the endpoints of e.

Before formally stating theorem of convergence of

BP for MCF, we first give the definition of a residual

network [1]. Define G(x) to be the residual network of

G and flow x as: G(x) has the same vertex set as G,

and ∀e = (v, w) ∈ E, if xe < ue , then e is an arc in

G(x) with cost cxe = ce , also, if xe > 0, then there is

an arc e = (w, v) in G(x)

with cost cxe = −ce , now, let

x

δ(x) = minC∈C {c (C) = e∈C cxe }, where C is the set

of directed cycles in G(x). Note that if x∗ is a unique

optimal solution of MCF with directed graph G, then

δ(x∗ ) > 0 in G(x∗ ).

(p(f ) denotes the number of linear pieces in f ) and

ci (z − ai−1 ) + f (ai−1 ) for z ∈ [ai−1 , ai ] the ith linear

piece of f .

Clearly, if f is a convex piecewise-linear function, then

we can store all the“information” about f in a finite

vector of size O(p(f )).

Property 3.1. Let f1 (x), f2 (x) be two convex

piecewise-linear functions. Then, f1 (ax + b), cf1 (x) +

df2 (x) are also convex piecewise-linear functions, for

any real numbers a, b, c and d, where c ≥ 0, d ≥ 0.

Definition 3.2. Let S = {f1 , f2 , ..., fk } be a set of

convex piecewise-linear functions, and let Ψt : Rk → R

be:

k

0

if

i=1 xi = t

Ψt (x) =

∞

otherwise

We say IS (t) = minx∈Rk {ψt (x) + ki=1 fi (xi )} is a

interpolation of S.

Theorem 3.1. Suppose MCF has a unique optimal

solution x∗ . Define L to be the maximum cost of a

simple directed path in G(x∗ ). Then for any N ≥

L

N

(

2δ(x

= x∗ .

∗ ) + 1)n, we have x̂

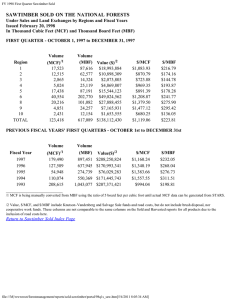

Figure 2: Functions f1 and f2

Figure 3: Interpolation of f1 and f2

Thus, by Theorem 3.1, the BP algorithm finds the

L

unique optimal solution of MCF in at most (

2δ(x

∗) +

1)n iterations.

3.1 Computing/Encoding Message Functions

First, we formally define a convex piecewise-linear function:

Lemma 3.1. Let f1 , f2 be two convex piecewise-linear

Definition 3.1. A function f is a convex piecewisefunctions, and suppose z1∗ , z2∗ are vertices of f1 , f2

linear function if for some finite set of reals, a0 < a1 <

and z1∗ = arg min f1 (z), z2∗ = arg min f2 (z). Let

... < an , (allowing a0 = −∞ and an = ∞), we have:

S = {f1 , f2 }, then IS (t) (the interpolation of S) is a

⎧

convex piecewise-linear function and it can be computed

if z ∈ [a0 , a1 ]

⎨ c1 (z − a1 ) + f (a1 )

innO(p(f1 ) + p(f2 )) operations.

ci+1 (z − ai ) + f (ai )

if z ∈ (ai , ai+1 ], 1 ≤ i ≤

f (z) =

⎩

∞

otherwise

Proof. We prove this lemma by describing a procedure

where c1 < c2 < ... < cn and f (a1 ) ∈ R. We to construct IS (t). The idea behind construction of

define a0 , a1 , ..., an as the vertices of f , p(f ) = n IS (t) is essentially to “stitch” together the linear pieces

282

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

of f1 and f2 . Specifically, let g(t) be the function

which is defined only at z1∗ + z2∗ , with g(z1∗ + z2∗ ) =

f1 (z1∗ )+ f2 (z2∗ ), and let L1 = z1∗ , L2 = z2∗ , U1 = z1∗ , U2 =

z2∗ . We will construct g iteratively and eventually have

g(t) = IS (t). The construction is described as follows:

at each iteration, let X1 (and X2 ) be the linear piece of

f1 (and f2 ) at the left side of L1 (and L2 ). Then choose

the linear piece with the larger slope from {X1 , X2 }, and

“stitch” this piece onto the left side of the left endpoints

of g. If Pi is chosen, update Li to the vertex which is

on the left end of Pi . As an example, consider f1 and

f2 in Figure 2, then z1∗ = 1 and z2∗ = 0 are vertices of f1

and f2 such that z1∗ = arg min f1 (z), z2∗ = arg min f2 (z).

Note that the linear piece X1 in the procedure is labeled

as P 1 on the graph, while X2 does not exist (since there

is no linear piece for f2 on the right side of z2 ). Hence,

we “stitch” P 1 to the left side of g, and update L1 to 0.

Similarly, let Y1 (Y2 ) be the linear piece of f1 (f2 )

at the right side of U1 (U2 ), then choose the linear piece

with the smaller slope and “stitch” this piece onto the

right side of the right endpoints of g. If Qi is chosen,

update Ui to the vertex which is on the right side of Pi .

Again, we use f1 and f2 in Figure 2 as an illustration.

Then the linear piece Y1 in the procedure is labeled as

P 2, while Y2 is labeled as P 3. As P 2 has a lower slope

than P 3, we “stitch” P 2 to the right side of g and update

U1 to 2.

Repeat this procedure until both L1 (and L2 )

and U1 (and U2 ) are the left most (and right most)

endpoints of f1 (and f2 ), or the both endpoints of g are

infinity. See Figure 2 and Figure 3 as an illutration of

interpolation of two functions.

Note that the total number of iterations is bounded

by O(p(f1 ) + p(f2 )), and each iteration takes at most

constant number of operations. By construction, it

is clear that g is a convex piecewise-linear function.

Also, g(z1∗ + z2∗ ) = f1 (z1∗ ) + f2 (z2∗ ), and by the way we

constructed g, we must have g(t) ≤ {Ψt (x) + f1 (x1 ) +

f2 (x2 )} for any t ∈ R. Therefore, we have g = IS , and

we are done.

Proof. For the sake of simplicity, assume k is divisible by

2. Let S1 = {f1 , f2 }, S2 = {f3 , f4 }, ..., S k = {fk−1 , fk },

2

and S = {IS1 , IS2 , ..., IS k }. Then one can observe that

2

IS = IS , by definition of IS . By Lemma 3.1, each

function S is piecewise-linear, and S can be computed

in O(P ) operations. Consider changing S to S as a

procedure of decreasing the number of convex piecewiselinear functions. This procedure reduces the number by

a factor of 2 each time, and consumes O(P ) operations.

Hence, it takes O(log k) procedures to reduce set S into

a single convex piecewise-linear function. And hence,

computing IS (t) takes O(P · log k) operations.

Definition 3.3. Let S = {f1 , f2 , ..., fk } be a set of

convex piecewise-linear functions, a ∈ Rk , and let Ψt :

Rk → R be:

k

0

if

i=1 ai xi = t ,

Ψt (x) =

∀v ∈ V

∞

otherwise

We say ISa (t) = minx∈Rk {ψt (x) +

scaled interpolation of S.

k

i=1

fi (xi )} is a

Corollary 3.1. Given any set of convex piecewiselinear functions S, ISa (t) is a convex piecewise-linear

function. Suppose |S| = k, and P =

f ∈S p(f ),

then, the corners and slopes of IS (t) can be found in

O(P · log k) operations.

Proof. Let S = {f1 (x), f2 (x), ..., fk (x)}, and let S =

{f1 (x) = f1 (a1 x), f2 (x) = f2 (a2 x), ..., fk (x) =

fk (ak x)}. Then we have ISa (t) = IS (t), and the result

follows immediately from Theorem 3.2.

Corollary 3.2. For any nonnegative integer t, e ∈ E

such that e = (v, w) (or e = (w, v)), then mte→v , a

message function at t-th iteration of Algorithm 2, is a

convex piecewise-linear function.

Proof. We show this by doing induction on t. For t = 0,

mte→v is a convex piecewise-linear function by definition.

For t > 0, let us remind

that mte→v (z) =

ourselves

t−1

Remark. In the case where either of f1 or f2 do φe (z)+minz̄∈R|Ew | ,z̄e =z ψw (z̄) + ẽ∈Ew \e mẽ→w (z̄ẽ ) ,

not have global minimal, the interpolation can be still for z ∈ R.

t−1

obtained in O(p(f1 ) + p(f2 )) operations, but it involves

By induction hypothesis, any mẽ→w

is a piecewisetedious analysis of different cases. As reader will notice, linear convex function. Suppose S = {mt−1

ẽ→w (z̄ẽ ),

the implementation of Algorithm 2 does not require this ẽ ∈ Ew \ e}, and a = REw \e , ae = Δ(v, e)ze for any

case and hence we don’t discuss it here.

e ∈ Ew \ e. Then, g(z) = minz̄∈R|Ew | ,z̄e =z {ψw (z̄) +

t−1

a

ẽ∈Ew \e mẽ→w (z̄ẽ )} is equal to IS (cz + d) for some real

Theorem 3.2. Given a set S consisting of convex number c and d (i.e., a shifted scaled interpolation of

piecewise-linear functions, IS (t) is a convex piecewise- S). By Corollary 3.1, g(z) is a convex piecewise-linear

linear

function. Moreover, suppose |S| = k, and P = function, and φe is a convex piecewise-linear function.

t

f ∈S p(f ). Then, IS (t) can be computed in O(P · log k) Therefore, we have that me→v = g + φe is a convex

operations.

piecewise-linear function.

283

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

(for some e = (u, v), t ∈ N+ ) is a piecewise-linear function with at most ue linear pieces. Thus, if ue is bounded

by some constant for all e, while b and u are integral,

then the message functions at each iteration are much

simpler. Next, we present a subclass of MCF, which

can be solved quickly using BP compare to the more

Proof. We prove the corollary using induction. For general MCF. Given a directed graph G = (V, E), this

t = 0, the statement is clearly true. For t ≥ 1, we subclass of problem is defined as follows:

recall that mte→v (z) − φe (z) = minz̄∈R|Ew | ,z̄e =z {ψw (z̄) +

t−1

ẽ∈Ew \e mẽ→w (z̄ẽ )} is a scaled interpolation of message

t−1

min

ce xe

(MCF )

functions in the form of mẽ→w

(possibly shifted). Since

e∈E

Δ(v, e) = ±1, the absolute values of the slopes for the

linear pieces of mte→v −φe is same as the absolute values

Δ(v, e)xe = bv ,

∀v ∈ V

of the slopes for the linear pieces of message functions

e∈Ev

mt−1

ẽ→w which was used for interpolation. Therefore, by

xe ≤ ũv , ∀v ∈ V, δ(v) = {(u, v) ∈ E}

induction hypothesis, the absolute values of the slopes

e∈δ(v)

of mte→v − φe are integral and bounded by (t − 1)cmax .

0 ≤ xe ≤ ue ,

∀e ∈ E

This implies the absolute values of slopes of mte→v are

integral and bounded by tcmax .

Corollary 3.3. Suppose the cost vector c in MCF is

integral, then at iteration t, for any defined message

function mte→v (z), let {s1 , s2 , ..., sk } be the slopes of

mte→v (z), then −tcmax ≤ s1 ≤ sk ≤ tcmax , and si is

integral for each 1 ≤ i ≤ k.

Corollary 3.4. Suppose the vectors b and u are integral in MCF, then at iteration t, for any message

function mte→v , the vertices of mte→v are also integral.

Proof. Again, we use induction. For t = 0, the

statement is clearly true. For t ≥ 1, as mte→v − φe is a

piecewise-linear function obtained from a shifted scaled

t−1

interpolation of functions mẽ→w

. Since Δ(v, e) = ±1,

and b is integral, the shift of the interpolation is integral,

and as Δ(v, e) = ±1, the scaled factor is ±1. As all

t−1

vertices in any function mẽ→w

are integral, we deduce

t

that all vertices of me→v − φe must also be integral.

Since u is integral, all vertices of φe are integral, and

therefore, all vertices of mte→v are integral.

Corollary 3.1 and Corollary 3.2 shows that at each

iteration, every message function can be encoded in

terms of a finite vector describing the corners and

slopes of its linear pieces in finite number of iterations.

Using similar argument, we can see that the message

functions in Algorithm 1 are also convex piecewise-linear

functions, and thus can also be encoded in terms of

a finite vector describing the corners and slopes of its

linear pieces. We would like to note the result that

message functions mte→v are convex piecewise-linear

functions can be also shown by sensitivity analysis of

LP (see Chapter 5 of [7]).

3.2 BP on Special Min-cost Flow Problems Although we have showed in Section 3.1 that each message

functions can be computed as piecewise-linear convex

functions, those functions tends to become complicated

to compute and store as the number of iterations grows.

When an instance of MCF has b, u, integral, then mte→v

c, u, and ũ are all integral, and all of their entries are

bounded by a constant K.

To see MCF is indeed a MCF, observe that for

each v ∈ V , if we split v into two vertices vin and vout ,

where vin is incident to all in-arcs of v with bvin = 0,

and vout is incident to all out-arcs of v with bvout = bv ;

while create an arc from vin to vout , set the capacity of

the arc to be ũv , and cost of the arc to be 0. Let the

new graph be G , then, the MCF on G is equivalent

to MCF . Although we can use Algorithm 2 to solve

the MCF on the new graph, we would like to define

functions ψv , φe , ∀v ∈ V , ∀e ∈ E:

⎧

⎨ 0

ψv (z) =

⎩

∞

φe (z) =

if

e∈E

v Δ(v, e)ze = bv

and

e∈δ(v) xe ≤ ũv

otherwise

ce z

∞

if 0 ≤ z ≤ ue

otherwise

and apply Algorithm 2 with defined ψ and φ. When

we update message functions mte→v for all e ∈ Ew , the

inequality e∈δ(w) xe ≤ ũw , allow us to only look at

the ũw linear pieces from message functions mt−1

ẽ→w , for

all but constant number of e ∈ Ew . This allows us to

further trim the running time of BP for MCF , and we

will present this more formally in Section 6.1.

4 Convergence of BP for MCF

Before proving Theorem 3.1, we define the computation trees, and then establish the connection between

computation trees and BP. Using this connection, we

proceed to prove the main result of our paper.

284

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

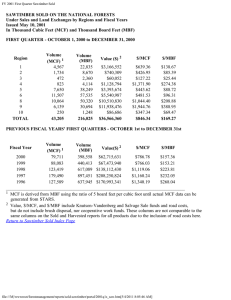

e5

v2

G

e1

v1

e2

v4

v2

e4

v1

v3

v2

v4

v4 v5

e6

v3

e3

v5

e7

demand/supply constraint for every node v ∈ V o (TeN ).

Now, we state the lemma which exhibits the connection

between BP and the computation trees.

∗

Lemma 4.1. MCF N

e has an optimal solution y , satisN

fying yr∗ = x̂N

(r

is

the

root

of

T

,

Γ(r)

=

e).

e

e

v1 v4 v1 v4 v2 v3 v1 v2

Te23

Figure 4: Computation tree of G rooted at e3 = (1, 3)

4.1 Computation Tree and BP Let TeN , the N computation tree corresponding to variable xe , be defined as follows:

1. Let V (TeN ) be the vertex set of TeN and E(TeN ) be

the arc set of TeN . Let Γ(.) be the map which maps

each vertex v ∈ V (TeN ) to a vertex v ∈ V . And

Γ preserves the arcs (i.e. For each e = (vα , vβ ) ∈

E(TeN ), Γ(e ) = (Γ(vα ), Γ(vβ )) ∈ E).

This result is not too surprising as BP is exact on

trees. A thorough analysis can show that the message

functions in BP are essentially the implementation

of an algorithm using the“bottom up” (aka dynamic

programming) approach, starting from the bottom level

of Tet . A more formal, combinatorial proof of this lemma

also exists, which is a rather technical argument using

induction. Proofs for similar statements can be found

in [4] and [18].

4.2

Proof of the Main Theorem

Proof. [Proof of Theorem 3.1] Suppose ∃e0 ∈ E and

L

N

∗

N ≥ (

2δ(x

∗ ) +1)n such that x̂e0 = xe0 . By Lemma 4.1,

we can find an optimal solution y ∗ for MCF N

e , where

2. Divide V (TeN ) into N + 1 levels, on the 0-th level, y ∗ = x̂N . Now, without loss of generality, we0 assume

r

e

we have a “root” arc r, and Γ(r) = e. And a vertex y ∗ > x∗0 . Let r = (v , v ), because y ∗ is feasible for

α β

r

e0

N

v is on the t-th level of Te if v is t arcs apart from

∗

MCF N

e0 and x is feasible for MCF, we have

either nodes of r.

Δ(vα , e)ye∗ = yr∗ +

Δ(vα , e)ye∗

3. For any v ∈ V (TeN ), let Ev be the set of all arcs bΓ(vα ) =

N

e∈Evα

e∈Evα \r

incident to v . If v is on the t-th level of Te , and

t < N , then Γ(Ev ) = EΓ(v ) (i.e. Γ preserves the b

∗

∗

Δ(vα , e)xΓ(e) = xe +

Δ(vα , e)x∗Γ(e)

Γ(vα ) =

neighborhood of v ).

e∈E

e∈E \r

vα

4. For every vertex v that is on the N -th level of

v is incident to exactly one arc in TeN .

TeN ,

In other literatures, TeN is known as the “unwrapped

tree” of G rooted at e. Figure 4 gives an example of a

computation tree. We make a note that the definition

of computation trees we have introduced is slightly

different from the definition in other papers [4] [6] [18],

although the analysis and insight for computation trees

is very similar.

Let V o (TeN ) be the set of all the vertices which are

not on the N -th level of TeN . Consider the problem

min

cΓ(f ) xf

vα

Then, we can find arc e1 incident to vα0 , e1 = r, such

that ye∗ > x∗Γ(e ) if e1 has the same orientation as r,

1

1

and ye∗ < x∗Γ(e ) if e1 has the opposite orientation as

1

1

r. Similarly, we can find some arc e−1 incident to vβ

satisfying the same condition. Let vα1 , vα−1 be the

vertices on e1 , e−1 which are one level below vα , vβ on

TeN , then, we can find some arc e2 , e−2 satisfying the

same condition. If we continue this all the way down

to the leaf nodes of TeN0 , we can find the set of arcs

{e−N , e−N +1 , ..., e−1 , , e1 , ..., eN } such that

ye∗i > x∗Γ(ei ) ⇐⇒ ei has the same orientation as r

ye∗i < x∗Γ(e ) ⇐⇒ ei has the opposite orientation as r

(MCF N

e )

i

f ∈E(TeN )

Let X = {e−N , e−N +1 , ..., e−1 , e0 = r, e1 , ..., eN }.

For any e = (vp , vq ) ∈ X, let Aug(e ) =

f ∈Ev

(vp , vq ) if ye∗ > x∗Γ(e ) , and Aug(e ) = (vq , vp )

N

if ye∗ < x∗Γ(e ) .

Then, each Γ(Aug(e )) is an

0 ≤ xf ≤ uΓ(f ) ,

f ∈ E(Te )

arc on the residual graph G(x∗ ), and W =

(Aug(e−N ), Aug(e−N +1 ), ..., Aug(e0 ), ..., Aug(eN )) is a

N

N

Loosely speaking, MCF e is almost an MCF on Te . directed path on TeN0 . We call W the augmenting path

There is a flow constraint for every arc e ∈ E(TeN ), and a of y ∗ with respect to x∗ . Also, Γ(W ) is a directed

Δ(v, f )xf = bΓ(v) ,

∀v ∈ V o (TeN )

285

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

walk on G(x∗ ). Now we can decompose Γ(W ) into

a simple directed path P (W ), and a set of simple directed cycles Cyc(W ). Because each simple directed

cycle/path on G(x∗ ) can have at most n arcs, and W

L

has 2N + 1 arcs, where N ≥ (

2δ(x

∗ ) + 1)n, we have

L

that |Cyc(W )| > δ(x∗ ) . Then, we have

Proof. The proof of this corollary uses the same idea of

finding an augmenting path on the computation tree as

in Theorem 3.1.

(⇒): Suppose MCF has a unique optimal solution.

Let y be the optimal solution MCF N

e0 when the variable

at r, yr is fixed to be ze∗ − 1. Then, we can find an

augmenting path W of y with respect to x∗ of length

2n2 cmax . Then W can be decomposed into at least

c∗ (C) + c∗ (P (W ))

c∗ (W ) =

2ncmax disjoint cycles and one path. Since each cycle

C∈Cyc(W )

has a cost of at most −δ(x∗ ), which is at least -1 as

≥

c∗ (C) − L

MCF has integral data. Hence, once we push 1 unit of

C∈Cyc(W )

flow of y on W , we can decrease the cost of y by at least

∗

N ∗

L

ncmax . Hence nN

∗

e (ze − 1) + ncmax < ne (ze ). Similarly,

·

δ(x

>

)

−

L

N

∗

N

∗

we can also show that ne (ze ) < ne (ze + 1) + ncmax .

δ(x∗ )

(⇐): Suppose MCF does not have a unique optimal

=0

solution. Let x∗ be an optimal solution of MCF, and y

N

Let F orw = {e|e ∈ X , ye∗ > x∗Γ(e) }, Back = {e|e ∈ be the optimal solution MCF e0 . Again, we can find an

∗

P , ye∗ < x∗Γ(e) }. Since both F orw and Back are finite, augmenting path W of y with respect to x of length

2

we can find λ > 0 such that ye∗ − λ ≥ x∗Γ(e) , ∀e ∈ F orw 2n cmax , and we can decompose W into at least 2ncmax

N

disjoint cycles. and one path P . The cost of P is at

and ye∗ + λ ≤ x∗Γ(e) , ∀e ∈ Back. Let ỹ ∈ R|E(Te0 )| such most (n − 1)c

max , while each cycle has a non-positive

that

⎧ ∗

cost.

Hence,

when

we push 1 unit of flow of y on W ,

⎨ ye − λ : e ∈ F orw

we

increase

the

cost

of y by at most (n − 1)cmax <

∗

y + λ : e ∈ Back

ỹe =

⎩ e

nc

.

Therefore,

depends

on the orientation of r on

max

0

: otherwise

∗

∗

W , we either have nN

(z

−

1) − ncmax ≤ nN

e

e

e (ze ) or

∗

N ∗

We can think ỹ as pushing λ units of flow on W for nN

e (ze + 1) − ncmax ≤ ne (ze ).

y ∗ . Since for each e ∈ F orw, ye∗ − λ ≥ x∗Γ(e) ≥ 0,

and for each e ∈ Back, ye∗ + λ ≤ x∗Γ(e) ≤ uΓ(e) , ỹ Theorem 4.1 shows that BP can be used to detect the

satisfy all the flow constraints. Also, because F orw = existence of an unique optimal solution for MCF.

{e|e ∈ X , e has the same orientation as r}, and Back =

{e|e ∈ X , e has the opposite orientation

as r}, we 5 Extension of BP on Convex-Cost Network

o

N

Flow Problems

have

that

for

any

v

∈

V

(T

),

Δ(v,

e)ỹe =

e

e∈Ev

∗

Δ(v,

e)y

=

b

,

which

implies

ỹ

satisfies

all

In this section, we discuss the extension of Theorem 3.1

Γ(e)

e

e∈Ev

the demand/supply constraints. Therefore, ỹ is feasible to convex-cost network flow problem (or convex flow

solution for (MCF N

problem). Convex flow problem is defined on graph

e0 ). But

G = (V, E) as follows:

cΓ(e) (ye∗ ) −

cΓ(e) (ỹe )

min

ce (xe )

(CP)

e∈E(TeN0 )

e∈E(TeN0 )

e∈E

cΓ(e) λ −

cΓ(e) λ

=

Δ(v, e)xe = bv ,

∀v ∈ V

e∈F orw

e∈Back

e∈Ev

= c∗ (W )λ

>0

0 ≤ xe ≤ ue ,

This contradicts the optimality of y ∗ , and completes the

proof of Theorem 3.1.

Corollary 4.1. Given an MCF with integral data c,

b and u. Suppose cmax = maxe∈E {ce }. Let Algorithm

2 run N = n2 cmax + n iterations. Let ze∗ = arg nN

e (z),

∗

N ∗

N ∗

then we have nN

(z

−1)−nc

>

n

(z

)

and

n

max

e

e

e

e

e (ze +

∗

1) − ncmax > nN

(z

)

iff

MCF

has

a

unique

optimal

e

e

solution.

∀e ∈ E

where each ce is a convex piecewise-linear function.

Notice that if we define ψ exactly same as we did for

MCF, and for each e ∈ E, define

if 0 ≤ z ≤ ue

ce (z)

φe (z) =

∞

otherwise

then, we can apply Algorithm 2 on the graph G with

functions ψ and φ, and hence obtain BP on convex flow

problem.

286

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

v2

Now, we give the definition of a residual graph

on convex cost flow problem. Suppose x is a feasible

solution for (CP), let G(x) be the residual graph for

G and x∗ defined as follows: ∀e = (vα , vβ ) ∈ E,

if xe < ue , then e is also an arc in G(x) with cost

e)

cxe = limt→0 c(xe +t)−c(x

(the value of the slope of ce at

t

the right side of xe ); if xe > 0, then there is an arc e =

e)

(vβ , vα ) in G(x) with cost cxe = limt→0 c(xe −t)−c(x

t

(negative value of the slope of ce at the left side of xe ).

Finally, let

x

δ(x) = min

ce .

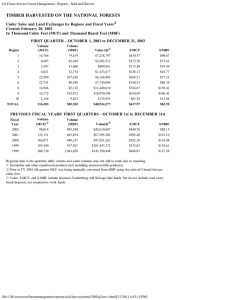

e2

e1

v1

v3

e3

Figure 5:

As the size of Ew is at most n, by Corollary 3.1,

t−1

g(z) = minz̄∈R|Ew | ,z̄e =z {ψw (z̄) + ẽ∈Ew \e mẽ→w

(z̄ẽ )}

can be calculated in O(log n · ntcmax ) operations. As

mte→v (z) = g(z)+ φe (z), we have that mte→v can also be

C∈C

e∈C

calculated in O(log n · ntcmax ) operations. Since at each

iteration,

we update 2m message functions, the total

where C is the set of directed cycles in G(x).

number of computations at iteration t can be bounded

Theorem 5.1. Suppose x∗ is the unique optimal solu- by O(nm log n · tcmax ).

tion for CP. Let L to be the maximum cost of a simple

6.1. Given MCF has a unique optimal sodirected path in G(x∗ ), and by uniqueness of optimal so- Theorem

∗

lution

x

and

integral data, the BP algorithm finds the

L

∗

lution, δ(x ) > 0. Then, for any N ≥ (

2δ(x∗ ) + 1)m,

unique

optimal

solution of MCF in O(n5 m log n · c3max )

x̂N = x∗ . Namely, the BP algorithm converges to the

operations.

L

optimal solution in at most (

2δ(x

∗ ) + 1)m iterations.

Proof. Because c is integral, the value δ(x∗ ) in Theorem

The proof of Theorem 5.1 is almost identical to the 3.1 is also integral. Recall that L is the maximum cost of

proof of Theorem 3.1. Theorem 5.1 is an illustration a simple directed path in G(x∗ ). Since simple directed

of the power of BP, that not only it can solve MCF, path has at most n − 1 arcs, L is bounded by ncmax .

but possibly many other variants of MCF as well.

Thus, by Theorem 3.1, the BP algorithm (Algorithm

2) converges to the optimal solution of MCF after

6 Running Time of BP on MCF

O(n2 cmax ) iterations. Combine this with Lemma 6.1,

In the next two sections, we will always assume vectors we have that BP converges to the optimal solution in

c, u and b in problem MCF are integral. This assump- O(n2 cmax ·nm log n·n2 cmax ·cmax ) = O(n5 m log n·c3max )

tion is not restrictive in practice, as the MCF solved in operations.

practice usually have rational data sets [1]. We define

We would like to point out that for MCF with

cmax = maxe∈E {ce }, and provide an upper bound for

a

unique

optimal solution, Algorithm 2 can take an

the running time of BP on MCF in terms m (the numexponential

number of iterations to converge. Consider

ber of arcs in G), n (the number of nodes in G), and

the

MCF

on

the directed graph G shown in Figure 5.

cmax .

Take a large positive integer D, set ce1 = ce2 = D,

Lemma 6.1. Suppose MCF has integral data, in Al- ce3 = 2D − 1, bv1 = 1, bv2 = 0 and bv3 = −1. It can

gorithm 2, the number of operations to update all the be checked that x̂N

1 alternates between 1 and −1 when

messages at iteration t is O(nm log n · tcmax ).

2N + 1 < 2D

.

This

means that BP algorithm takes at

3

least

Ω(D)

iterations

to converge. Since the input size

Proof. At iteration t, fix a valid message function mte→v , of a large D is just log(D), we have that Algorithm 2 for

and we update it as:

MCF does not converge to the unique optimal solution

in polynomial time.

t

me→v (z)

⎫

⎧

⎬

⎨

6.1 Runtime of BP on MCF Here we analyze

min

mt−1

(z̄

)

ψw (z̄) +

=φe (z) +

ẽ

the run time of BP on MCF , which is defined in

ẽ→w

⎭

z̄∈R|Ew | ,z̄e =z ⎩

ẽ∈Ew \e

Section 3.2. We show that BP performs much better

on this special class of MCF. Specifically, we state the

As MCF has integral data, by Corollary 3.3, any

following theorem:

t−1

messages of the form mẽ→w has integral slopes, and

the absolute values of the slopes are bounded by (t − Theorem 6.2. If MCF has a unique optimal solution,

1)cmax . This implies for each mt−1

ẽ→w , the number of Algorithm 2 for MCF finds the unique optimal solution

t−1

4

linear pieces of mẽ→w is bounded by 2(t − 1)cmax . in O(n ) operations.

287

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

First, we state the convergence result of BP for MCF which is reminiscent of Theorem 3.1.

to update all messages in the set {mte→v |e ∈ Ew }, we

can obtain information (1), (2), (3) for all the messages

in {mte→v |e ∈ Ew } in O(n) operations, and hence

Theorem 6.3. Suppose MCF has a unique optimal can compute all messages in {mt |e ∈ E } in O(n)

w

e→v

solution x∗ . Define L to be the maximum cost of a operations.

∗

simple directed path in G(x ). Then, Algorithm 2 for

Therefore, updating all the message functions at

MCF converges to the optimal solution in at most every iteration takes O(n2 ) operations. By Theorem

L

(

2δ(x

∗ ) + 1)n iterations.

6.2, since L is bounded by nK, δ(x∗ ) ≥ 1, then

L

2

The proof of this theorem is exactly the same as the (

2δ(x∗ ) + 1)n is bounded by O(n ). This concludes

proof of Theorem 3.1, and is hence omitted. Now, that4 Algorithm 2 finds a unique optimal solution in

we apply this result to compute the running time of O(n ) operations.

Algorithm 2 for MCF .

Note both the shortest-path problem and bipartite

Proof. [Proof of Theorem 6.2] For the simplicity of the

proof, we assume that every linear piece in a message

function has unit length. This assumption is not

restrictive, as each linear piece in general has integral

vertices (from Corollary 3.4), so we can always break a

bigger linear piece into many unit length linear pieces

with the same slopes. At iteration t ≥ 1, recall mte→v (z)

is defined as:

⎫

⎧

⎬

⎨

φe (z) +

min

mt−1

(z̄

)

ψw (z̄) +

ẽ

ẽ→w

⎭

z̄∈R|Ew | ,z̄e =z ⎩

ẽ∈Ew \e

We claim that each mte→v can be constructed using the

following information:

t−1

(1) ũw smallest linear pieces in {mẽ→w

|ẽ ∈ δ(w) \ e},

where δ(w) denotes all in-arcs of w;

t−1

(2) (ũw − bw ) smallest linear pieces in {mẽ→w

|ẽ ∈

γ(w) \ e} where γ(w)

denotes

all

out-arcs

of

w;

t−1

(3) The value of ẽ∈Ew mẽ→w

(0).

Observe that once all three sets of information is

given, for any integer 0 ≤ z ≤ ue , one can find mte→v (z)

in a constant number of operations. As ue is also

bounded by a constant, we have that mte→v can be

computed in constant time when information (1), (2)

and (3) are given.

Now, since |Ew | is bounded by n, obtaining each

one of information (1), (2) or (3) for a specific message

mte→v takes O(n) operations. When we update all

the messages in the set {mte→v |e ∈ Ew }, note that

every e ∈ Ew except for a constant number of them,

information (1) for updating mte→v is equivalent to

t−1

ũw smallest pieces in mẽ→w

for some ẽ ∈ δ(w); and

information (2) for updating mte→v is equivalent ũw −bw

smallest linear pieces in mt−1

ẽ→w for some ẽ ∈ γ(w).

In fact, the exceptions are precisely those e such that

mt−1

e→w contain at least one of those ũw smallest linear

t−1

pieces in {mẽ→w

|ẽ ∈ δ(w)} or one of those (ũw − bw )

smallest linear pieces in {mt−1

ẽ→w |ẽ ∈ γ(w)}. Moreover,

t

update any message in the set

|e ∈ Ew } has

{me→vt−1

information (3) to be exactly ẽ∈Ew mẽ→w (0). Hence,

matching problem belongs to the class of MCF , where

ũ, b, u are all bounded by 2.

7

FPRAS Implementation

In this section, we provide a fully polynomial-time

randomized approximation scheme (FPRAS) for MCF

using BP as a subroutine. First, we describe the main

idea of our approximation scheme.

As Theorem 6.1 indicated, in order to come up with

an efficient approximation scheme using BP (Algorithm

2), we need to get around the following difficulties:

1. The convergence of BP requires MCF to have a

unique optimal solution.

2. The running time of BP is polynomial in m, n and

cmax .

For an instance of MCF, our strategy for finding an

efficient (1 + ) approximation scheme is to find a

modified cost vector c̄ (let MCF be the problem with

the modified cost vector) which satisfies the following

properties:

1. The MCF has a unique optimal solution.

2. c̄max is polynomial in m, n and 1 .

3. The optimal solution of MCF is a “near optimal”

solution of MCF. The term “near optimal” is

rather fuzzy and we will address this question later

in the section.

First, in order to find a modified MCF with a unique

optimal solution, we first state a result which is a variant

of the Isolation Lemma introduced in [15].

7.1

Variation of Isolation Lemma

Theorem 7.1. Let MCF be an instance of min-cost

flow problem with underlying graph G = (V, E), demand

vector b, constraint vector u. Let its cost vector c̄ be

generated as follows: for each e ∈ E, c̄e is chosen

288

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

independently and uniformly over Ne , where Ne is a

discrete set of 4m positive numbers (m = |E|). Then,

the probability that MCF has a unique optimal solution

is at least 12 .

(x∗ + λd)e ≤ ue , ∀e ∈ E. As c̄e > 0 for any e ∈ E,

and c̄T d = c̄T x∗∗ − c̄T x∗ = 0, there exists some e such

that de < 0. Let λ∗ = sup{λ | x∗ + λd is an optimal

solution of MCF}, since de < 0, λ∗ is bounded; and

since x∗ + d = x∗∗ , λ∗ > 0. By optimality of λ∗ , there

must exists some e such that either (x∗ + λ∗ d)e = 0 or

ue . Since λ∗ > 0, x∗e = (x∗ + λ∗ d)e , this contradicts

the assumption that D(e ) is satisfied. Thus, MCF

must have a unique optimal solution.

Proof. Fix an arc e1 ∈ E, and fix c̄e for all e ∈ E \ e1 .

Suppose that there exists α ≥ 0, such that when

c̄e1 = α, MCF have optimal solutions x∗ , x∗∗ , where

x∗e1 = 0 and x∗∗

e1 > 0. Then, if c̄e1 > α, for any feasible

solution x of MCF , where xe1 > 0, we have

We note that Theorem 7.1 can be easily modified for

LP in standard form.

c̄e x∗e =

c̄e x∗e

e∈E

e∈E,e=e1

≤

c̄e xe + xe1 α

e∈E,e=e1

<

c̄e xe

e∈E

And if c̄e1 < α, for any feasible solution x of MCF

where xe1 = 0, we have

∗∗

c̄e x∗∗

c̄e x∗∗

e <

e + αxe1

e∈E

e∈E,e=e1

≤

c̄e xe + αxe1

e∈E,e=e1

=

c̄e xe

e∈E

This implies there exists at most one value for α,

such that if c̄e1 = α then MCF have optimal solutions

x∗ , x∗∗ , where x∗e1 = 0 and x∗∗

e1 > 0. Similarly, we can

also deduce that there exists at most one value for β,

such that if c̄e1 = β, MCF have optimal solutions x∗ ,

x∗∗ , where x∗e1 < ue1 and x∗∗

e1 = ue1 .

Let OS denote the set of all optimal solutions of

MCF, and let D(e) be the condition of either: 0 = xe ,

∀x ∈ OS, or 0 < xe < ue , ∀x ∈ OS, or xe = ue ,

∀x ∈ OS. Since c̄e1 has 4m possible values, where

each value is chosen with equal probability, we conclude

that the probability of D(e1 ) is satisfied is at least

4m−2

2m−1

4m = 2m . By the union bound of probability, we

have that the

probability of D(e) is satisfied for all e ∈ E

1

is at least 1− e∈E 2m

= 12 . Now, we state the following

lemma:

Lemma 7.1. If ∀e ∈ E, condition D(e) is satisfied, then

MCF have a unique optimal solution.

Then by Lemma 7.1, we conclude that the probability

that MCF has a unique optimal solution is at least 12 .

Corollary 7.1. Let LP be an LP problem with constraint Ax = b, where A is a m × n matrix, b ∈ Rm .

The cost vector c̄ of LP is generated as follows: for each

e ∈ E, c̄e is chosen independently and uniformly over

Ne , where Ne is a discrete set of 2n elements. Then,

the probability that LP has a unique optimal solution is

at least 12 .

7.2 Finding the Correct Modified Cost Vector c̄

Next, we provide a randomly generate c̄ with the desired

properties stated in the beginning of this section. Let

X : E → {1, 2, ..., 4m} be a random function where for

each e ∈ E, X(e) is chosen independently and uniformly

max over the range. Let t = c4mn

, and generate c̄ as: for

each e ∈ E, let c̄e = 4m · cte + X(e). Then, for any

c̄ generated at random, c̄max is polynomial in m, n and

1

, and by Theorem 7.1, the probability of MCF having

a unique optimal solution is greater than 12 .

Now, we introduce algorithm APRXMT(MCF, ),

which works as follows: select a random c̄; try to solve

MCF using BP. If BP discovers that MCF has no

unique optimal solution, then we start the procedure

by selecting another c̄ at random, otherwise, return

the unique optimal solution found by BP. Formally, we

present APRXMT(MCF, ) as Algorithm 3.

Corollary 7.2. The expected number of operations

8

7

for APRXMT(MCF, ) to terminate is O( n m3log n ).

Proof. With

Theorem

7.1,

when

we

call

APRXMT(MCF, ), the expected number of MCF

BP tried to solve is bounded by 2. For each selection

of c̄, we run Algorithm 2 for 2c̄max n2 iterations. As

2

c̄max = O( m n ), by Lemma 6.1, the expected number

of operations for APRXMT(MCF, ) to terminate is

8

7

O(c̄3max n5 m log n) = O( n m3log n ).

Now let c̄ be a randomly chosen vector such that

MCF has a unique optimal solution x2 . The next thing

Proof. [Proof of Lemma 7.1] Suppose x∗ and x∗∗ are two we want is to show that x2 is a “near optimal” solution

distinct optimal solutions of MCF. Let d = x∗∗ − x∗ , of MCF. To accomplish this, let e = arg max{ce } and

then x∗ + λd is an optimal solution of MCF iff 0 ≤ define

289

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

we have that c̄ k ≤ 0. And

Algorithm 3 APRXMT(MCF, )

cmax 1: Let t = 4mn

, for any e ∈ E, assign c̄e = 4m ·

ce

c̄e = 4m

+ pe , 1 ≤ pe ≤ 4m,

ce

t +pe , where pe is an integer chosen indepent

dently, uniformly random from {1, 2, . . . , 4m}

ce

ce

4mce

∈ [4m

, 4m(

+ 1)],

=⇒ c̄e ,

2: Let MCF be the problem with modified cost c̄.

t

t

t

3: Run Algorithm 2 on MCF for N = 2c̄max n2

4mce

=⇒ |c̄e −

| ≤ 4m,

iterations.

t

4: Use Corollary 4.1 to determine if MCF has a unique

4mce

−

c̄

=⇒

|

||k

|

≤

|4m||ke | ≤ 4mn,

e

e

solution.

t

e

e

5: if MCF does not have a unique solution then

4mce

4mc k

4mc k

6:

Restart the procedure APRXMT(MCF, ).

≤

− c̄ k ≤

− c̄e ||ke |,

|

but

t

t

t

7: else

e

8:

Terminate and return x2 = x̂N , where x̂N if the

4mc k

≤ 4mn,

thus,

we

have

“belief” vector found in Algorithm 2.

t

9: end if

=⇒ c k ≤ nt.

Clearly, x1 + k∈K k satisfy the demand/supply

constraints

of MCF. Since min{x1e , x2e } ≤ x1e +

1

2

k∈K ke ≤ max{xe , xe }for all e ∈ E, and each

1

k ∈ K , we have that x + k∈K k is a feasible solution

for MCF. Since

x3 is the optimal solution of MCF,

T 3

T 1

c x ≤ c x + k∈K cT k ≤ cT x1 + |K |nt. Since

min

ce xe

(MCF)

|K | = |x2e − x1e |, we have cT x3 − cT x1 ≤ |x2e − x1e |nt.

e∈E

Δ(v, e)xe = bv , ∀v ∈ V (demand constraints) Corollary 7.3. cT x3 ≤ (1 + 2m

)cT x1 , for any ≤ 2.

e∈Ev

Proof.

xe = x2e

0 ≤ xe ≤ ue ,

∀e ∈ E

(flow constraints)

By Lemma 7.2,

Suppose x3 is an optimal solution for (MCF), and x1

is an optimal solution of MCF, then

|x2 − x1 |nt

cT x3 − cT x1

≤ e T 3e

T

3

c x

c x

|x2e − x1e |nt

≤ 2

|xe − x1e |ce

ce , as t = 4mn

=

.

4m

Thus,

T

3

T

1

Lemma 7.2. c x − c x ≤

|x2e

−

cT x3 − cT x1

≤

cT x3

4m

1

T 1

=⇒ cT x3 ≤

c x

1 − 4m

T 1

)c x

=⇒ cT x3 ≤ (1 +

2m

x1e |nt.

Proof. Consider d = x2 − x1 , we call a vector k ∈

{−1, 0, 1}|E| an synchronous cycle vector of d if for any

e ∈ E, ke = 1 only if de > 0, ke = −1 only if de < 0,

and the set {(i, j)|kij = 1 or kji = −1} forms exactly

one directly cycle in G. Since d is a integral vector

of circulation (i.e., d send 0 unti amount of flow to

every vertex

v ∈ V ). Hence that we can decompose

d so that k∈K k = d, for some set K of consisting of

synchronous cycle vectors.

Now, let K ⊂ K be the subset of K containing

all vectors k ∈ K such that ke = 0. Then, for any

k ∈ K , observe that x2 − k is a feasible solution for

MCF. Because x2 is the optimal solution for MCF,

where the last inequality holds because (1 −

2

2m ) = 1 + 4m − 8m ≥ 1, as 2m ≤ 1.

4m )(1

+

7.3 A (1 + ) Approximation Scheme Loosely

speaking, Corollary 7.3 shows that x2 at arc e is “near

optimal”, since fixing the flow at arc e to x2e helps us

in finding a feasible solution of MCF which is close

to optimal. This leads us to approximate algorithm

AS(MCF, ) (see Algorithm 4); at each iteration, it

uses APRXMT (Algorithm 3), and iteratively fixes the

flow at the arc with the largest cost.

290

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

Algorithm 4 AS(MCF, )

1: Let G = (V, E) be the underlying directed graph of

(MCF), m = |E|, and n = |V |.

2: while (MCF) contains at least 1 arcs do

3:

Run APRXMT (MCF, ), let x2 be the solution

returned.

4:

Find (i , j ) = e = arg maxe∈E {ce }, modify

MCF by fix the flow on arc e by x2e , and change

the demands/supply on nodes i , j accordingly.

5: end while

Theorem 7.2. The expected number of operations for

8

8

AS(MCF, ) to terminate is O( n m3log n ). Suppose x∗

be an answer returned by AS(MCF, ), then cT x∗ ≤

(1 + )cT x1 , for any ≤ 1.

known as ‘decimation’ (see [14]). To the best of

out knowledge, this is the first disciplined, provable

instance of decimation procedure. The ‘decimation’ in

our scheme is extremely conservative, and a natural

question is if there exists a more aggressive ’decimation’

heuristic which can improve the running time.

Acknowledgements

While working on this paper, D. Gamarnik was partially

supported by NSF Project CMMI-0726733; D. Shah was

supported in parts by NSF EMT Project CCF 0829893

and NSF CAREER Project CNS 0546590; and Y. Wei

was partially supported NSERC PGS M. The authors

would also like to thank the anonymous referees for the

helpful comments.

Proof. By Corollary 7.2, the expected number of References

operations for APRXMT(MCF, ) to terminates is

8

7

O( n m3log n ). Since AS(MCF, ) calls the method

[1] R. K. Ahuja, T. L. Magnanti, and J. B. Orlin. PrenticeAPRXMT(MCF, ) m times, the expected numHall Inc. Addison-Wesley, 1993.

ber of operations for AS(MCF, ) to terminate is

[2] S. M. Aji and R. J. McEliece. The generalized distribu8

8

tive law. IEEE Transaction on Information Theory,

O( n m3log n ). And by Corollary 7.3,

cT x∗ ≤ (1 +

m T 1

) c x ≤ e 2 cT x1 ≤ (1 + )cT x1

2m

where the last two inequalities follows using standard

analysis arguments.

And thus, AS(MCF, ) is an (1 + ) approximation

scheme for MCF with polynomial running time.

8

Discussions

In this paper, we formulated belief propagation (BP) for

MCF, and proved that BP solves MCF exactly when

the optimal solution is unique. This result generalizes

the result from [6], and provides new insights for understanding BP as an optimization solver. Although the

running time of BP for MCF is slower than other existing algorithms for MCF, BP is rather a generic and

flexible heuristic, and one should be able to modify it to

solve other variants of network flow problems. One such

example, the convex-cost flow problem, was analyzed in

Section 5. Moreover, the distributed nature of BP can

be taken advantage of using parallel computing.

This paper also presents the first fully-polynomial

running time scheme for optimization problems using

BP. Since the correctness of BP often rely on the

optimization problem having a unique optimal solution,

Corollary 7.1 can be used for modifying BP in general

to work in the absence of a unique optimal solution.

In the approximation scheme, the heuristic of fixing

values on variables while running BP is commonly

291

46(2):325–343, March 2000.

[3] S. Arora, C. Daskalakis, and D. Steurer. Message

passing algorithms and improved lp decoding. 2009.

[4] M. Bayati, C. Borgs, J. Chayes, and R. Zecchina.

Belief-propagation for weighted b-matchings on arbitrary graphs and its relation to linear programs

with integer solutions.

Technical report, 2008.

arXiv:0709.1190v2.

[5] M. Bayati, A. Braunstein, and R. Zecchina. A rigorous analysis of the cavity equations for the minimum spanning tree. Journal of Mathematical Physics,

49(12):125206, 2008.

[6] M. Bayati, D. Shah, and M. Sharma. Max-product for

maximum weight matching: Convergence, correctness,

and lp duality. IEEE Transaction on Information

Theory, 54(3):1241–1251, March 2008.

[7] D. Bertsimas and J. Tsitsiklis. Introduction to Linear

Optimization, pages 289–290. Athena Scientific, third

edition, 1997.

[8] R. Gallager. Low Density Parity Check Codes. PhD

thesis, Massachusetts Institute of Technology, Cambridge, MA, 1963.

[9] G. B. Horn.

Iterative Decoding and Pseudocodewords. PhD thesis, California Institute of Technology,

Pasadena, CA, 1999.

[10] D. M. Malioutov, J. K. Johnson, and A. S. Willsky.

Walk-sums and belief propagation in gaussian graphical models. J. Mach. Learn. Res., 7:2031–2064, 2006.

[11] M. Mezard, G. Parisi, and R. Zecchina. Analytic and

algorithmic solution of random satisfiability problems.

Science, 297:812, 2002.

[12] C. Moallemi and B. V. Roy. Convergence of minsum message passing for convex optimization. In 45th

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.

[13]

[14]

[15]

[16]

[17]

[18]

[19]

[20]

[21]

[22]

Allerton Conference on Communication, Control and

Computing, 2008.

C. C. Moallemi and B. V. Roy. Convergence of

the min-sum message passing algorithm for quadratic

optimization. CoRR, abs/cs/0603058, 2006.

A. Montanari, F. Ricci-Tersenghi, and G. Semerjian.

Solving constraint satisfaction problems through belief

propagation-guided decimation.

K. Mulmuley, U. Vazirani, and V. Vazirani. Matching is as easy as matrix inversion. Combinatorica,

7(1):105–113, March 1987.

J. Pearl. Causality: Models, reasoning, and inference.

Cambridge University Press, 2000.

T. Richardson and R. Urbanke. The capacity of

low-density parity check codes under message-passing

decoding. IEEE Transaction on Information Theory,

47(2):599–618, February 2001.

S. Sanghavi, D. Malioutov, and A. Willsky. Linear

programming analysis of loopy belief propagation for

weighted matching. In Proc. NIPS Conf, Vancouver,

Canada, 2007.

S. Sanghavi, D. Shah, and A. Willsky. Message-passing

for maximum weight independent set. July 2008.

A. Schrijver. Combinatorial Optimization. Springer,

2003.

Y. Weiss and W. Freeman. On the optimality of solutions of the max-product belief-propagation algorithm

in arbitrary graphs. IEEE Transactions on Information Theory, 47(2), February 2001.

J. Yedidia, W. Freeman, and Y. Weiss. Understanding

belief propagation and its generalizations. Technical

Report TR-2001-22, Mitsubishi Electric Research Lab,

2002.

292

Copyright © by SIAM.

Unauthorized reproduction of this article is prohibited.