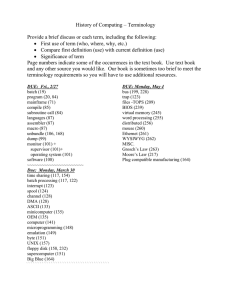

for the presented on February 2,

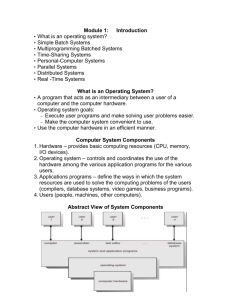
