Multiple Random Variables

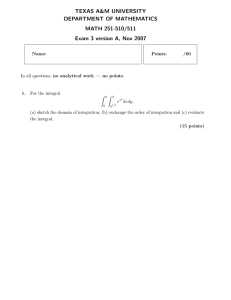

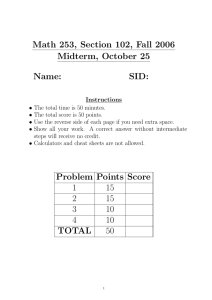

Joint Cumulative Distribution Function

Let X and Y be two random variables. Their joint cumulative distribution function is

$$F_{XY}(x,y) \equiv P(X \le x \cap Y \le y).$$

$0 \le F_{XY}(x,y) \le 1$, $-\infty < x < \infty$, $-\infty < y < \infty$

$F_{XY}(-\infty,-\infty) = F_{XY}(x,-\infty) = F_{XY}(-\infty,y) = 0$

$F_{XY}(\infty,\infty) = 1$

$F_{XY}(x,y)$ does not decrease if either x or y increases or both increase

$F_{XY}(\infty,y) = F_Y(y)$ and $F_{XY}(x,\infty) = F_X(x)$

Joint Cumulative Distribution Function

[Figure: joint cumulative distribution function for tossing two dice]
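The two-dice joint CDF can be tabulated directly. The sketch below assumes the dice are fair and independent (the slide does not say so explicitly); the function names `die_cdf` and `joint_cdf` are illustrative only.

```python
from fractions import Fraction
import math

def die_cdf(x):
    """CDF of one fair six-sided die: P(X <= x)."""
    # Clamp first so that +/- infinity is handled, then count faces <= x.
    k = math.floor(min(max(x, 0), 6))
    return Fraction(k, 6)

def joint_cdf(x, y):
    """Joint CDF of two independent fair dice: P(X <= x and Y <= y)."""
    return die_cdf(x) * die_cdf(y)

print(joint_cdf(2, 3))             # (2/6)(3/6) = 1/6
print(joint_cdf(float("inf"), 3))  # reduces to the marginal F_Y(3) = 1/2
```

The second call illustrates the property $F_{XY}(\infty,y) = F_Y(y)$.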

Joint Probability Mass Function

Let X and Y be two discrete random variables.

Their joint probability mass function is

$$P_{XY}(x,y) \equiv P(X = x \cap Y = y).$$

Their joint sample space is

$$S_{XY} = \{(x,y) \mid P_{XY}(x,y) > 0\}.$$

$$\sum_{y \in S_Y}\sum_{x \in S_X} P_{XY}(x,y) = 1, \qquad P(A) = \sum_{(x,y) \in A} P_{XY}(x,y)$$

$$P_X(x) = \sum_{y \in S_Y} P_{XY}(x,y), \qquad P_Y(y) = \sum_{x \in S_X} P_{XY}(x,y)$$

$$E(g(X,Y)) = \sum_{y \in S_Y}\sum_{x \in S_X} g(x,y)\,P_{XY}(x,y)$$

Joint Probability Mass Function

Let the random variables X and Y have the joint PMF

$$P_{XY}(x,y) = \begin{cases} \dfrac{(0.8)^x (0.7)^y}{41.17}, & 0 \le x < 5,\ -4 \le y < 2 \\ 0, & \text{otherwise} \end{cases}$$

for integer x and y.
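As a quick check of the example PMF, the sketch below sums the numerator $0.8^x\,0.7^y$ over the stated support, assuming x and y take integer values, and then forms a marginal PMF and one expectation. The choice $g(x,y) = x + y$ is only an illustration.

```python
def unnormalized(x, y):
    """Numerator of the example PMF, 0.8**x * 0.7**y, on its support."""
    if 0 <= x < 5 and -4 <= y < 2:
        return 0.8 ** x * 0.7 ** y
    return 0.0

support = [(x, y) for x in range(0, 5) for y in range(-4, 2)]

# Normalizing constant: comes out at about 41.18, matching the 41.17 on the slide.
norm = sum(unnormalized(x, y) for x, y in support)
pmf = {(x, y): unnormalized(x, y) / norm for x, y in support}

# Marginal PMF of X: sum the joint PMF over y.
p_x = {x: sum(pmf[(x, y)] for y in range(-4, 2)) for x in range(0, 5)}

# E(g(X, Y)) with the illustrative choice g(x, y) = x + y.
mean_sum = sum((x + y) * p for (x, y), p in pmf.items())

print(round(norm, 2), round(sum(p_x.values()), 6), mean_sum)
```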

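Joint Probability Density Function

$$f_{XY}(x,y) = \frac{\partial^2}{\partial x\,\partial y}\big(F_{XY}(x,y)\big)$$

$$f_{XY}(x,y) \ge 0,\quad -\infty < x < \infty,\ -\infty < y < \infty, \qquad \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f_{XY}(x,y)\,dx\,dy = 1$$

$$F_{XY}(x,y) = \int_{-\infty}^{y}\int_{-\infty}^{x} f_{XY}(\alpha,\beta)\,d\alpha\,d\beta$$

$$f_X(x) = \int_{-\infty}^{\infty} f_{XY}(x,y)\,dy \quad\text{and}\quad f_Y(y) = \int_{-\infty}^{\infty} f_{XY}(x,y)\,dx$$

$$P(x_1 < X \le x_2,\ y_1 < Y \le y_2) = \int_{y_1}^{y_2}\int_{x_1}^{x_2} f_{XY}(x,y)\,dx\,dy$$

$$P\big((X,Y) \in R\big) = \iint_R f_{XY}(x,y)\,dx\,dy$$

$$E\big(g(X,Y)\big) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} g(x,y)\,f_{XY}(x,y)\,dx\,dy$$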

The Unit Rectangle Function

$$\operatorname{rect}(t) = \begin{cases} 1, & |t| < 1/2 \\ 1/2, & |t| = 1/2 \\ 0, & |t| > 1/2 \end{cases} \;=\; u(t + 1/2) - u(t - 1/2)$$

The product signal g(t)rect(t) can be thought of as the signal g(t)

“turned on” at time t = -1/2 and “turned back off” at time t = +1/2.

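Joint Probability Density Function

Let

$$f_{XY}(x,y) = \frac{1}{w_X w_Y}\operatorname{rect}\!\left(\frac{x - X_0}{w_X}\right)\operatorname{rect}\!\left(\frac{y - Y_0}{w_Y}\right)$$

$$E(X) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x\,f_{XY}(x,y)\,dx\,dy = X_0$$

$$E(Y) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} y\,f_{XY}(x,y)\,dx\,dy = Y_0$$

$$E(XY) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} xy\,f_{XY}(x,y)\,dx\,dy = X_0 Y_0 \quad\text{(correlation of X and Y)}$$

$$f_X(x) = \int_{-\infty}^{\infty} f_{XY}(x,y)\,dy = \frac{1}{w_X}\operatorname{rect}\!\left(\frac{x - X_0}{w_X}\right)$$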
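The three expectations for the rectangular pdf can be checked numerically. The sketch below uses a midpoint-rule double sum over the support; the parameter values `X0`, `Y0`, `WX`, `WY` are hypothetical and chosen only for illustration.

```python
def rect(t):
    """Unit rectangle function."""
    if abs(t) < 0.5:
        return 1.0
    return 0.5 if abs(t) == 0.5 else 0.0

# Hypothetical parameter values, not taken from the slides.
X0, Y0, WX, WY = 1.0, -2.0, 4.0, 2.0

def f_xy(x, y):
    """Joint pdf: uniform over a WX-by-WY rectangle centered at (X0, Y0)."""
    return rect((x - X0) / WX) * rect((y - Y0) / WY) / (WX * WY)

def expect(g, n=200):
    """Midpoint-rule approximation of E(g(X, Y)) over the support."""
    dx, dy = WX / n, WY / n
    total = 0.0
    for i in range(n):
        x = X0 - WX / 2 + (i + 0.5) * dx
        for j in range(n):
            y = Y0 - WY / 2 + (j + 0.5) * dy
            total += g(x, y) * f_xy(x, y) * dx * dy
    return total

print(expect(lambda x, y: x))      # approximately X0
print(expect(lambda x, y: y))      # approximately Y0
print(expect(lambda x, y: x * y))  # approximately X0 * Y0
```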

Joint Probability Density Function

For $x < X_0 - w_X/2$ or $y < Y_0 - w_Y/2$, $F_{XY}(x,y) = 0$

For $x > X_0 + w_X/2$ and $y > Y_0 + w_Y/2$, $F_{XY}(x,y) = 1$

For $X_0 - w_X/2 < x < X_0 + w_X/2$ and $y > Y_0 + w_Y/2$,

$$F_{XY}(x,y) = \int_{Y_0 - w_Y/2}^{Y_0 + w_Y/2}\int_{X_0 - w_X/2}^{x} \frac{1}{w_X w_Y}\,du\,dv = \frac{x - (X_0 - w_X/2)}{w_X}$$

For $x > X_0 + w_X/2$ and $Y_0 - w_Y/2 < y < Y_0 + w_Y/2$,

$$F_{XY}(x,y) = \int_{Y_0 - w_Y/2}^{y}\int_{X_0 - w_X/2}^{X_0 + w_X/2} \frac{1}{w_X w_Y}\,du\,dv = \frac{y - (Y_0 - w_Y/2)}{w_Y}$$

For $X_0 - w_X/2 < x < X_0 + w_X/2$ and $Y_0 - w_Y/2 < y < Y_0 + w_Y/2$,

$$F_{XY}(x,y) = \int_{Y_0 - w_Y/2}^{y}\int_{X_0 - w_X/2}^{x} \frac{1}{w_X w_Y}\,du\,dv = \frac{x - (X_0 - w_X/2)}{w_X}\times\frac{y - (Y_0 - w_Y/2)}{w_Y}$$
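All of the cases above collapse into one expression if each factor is clamped to the interval [0, 1]. The following is a minimal sketch of that idea; the helper name `clamp01` and the numeric arguments are illustrative assumptions.

```python
def clamp01(t):
    """Limit t to the interval [0, 1]."""
    return min(max(t, 0.0), 1.0)

def joint_cdf(x, y, x0, y0, wx, wy):
    """Joint CDF of a pdf that is uniform on a wx-by-wy rectangle centered at (x0, y0)."""
    fx = clamp01((x - (x0 - wx / 2)) / wx)  # fraction of the width to the left of x
    fy = clamp01((y - (y0 - wy / 2)) / wy)  # fraction of the height below y
    return fx * fy

# Hypothetical rectangle: center (1, -2), width 4, height 2.
print(joint_cdf(1.0, -2.0, 1.0, -2.0, 4.0, 2.0))   # center of the rectangle: 0.25
print(joint_cdf(10.0, -2.0, 1.0, -2.0, 4.0, 2.0))  # x beyond the right edge: 0.5
```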

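Combinations of Two Random Variables

Example

If the joint pdf of X and Y is $f_{XY}(x,y) = e^{-x}u(x)\,e^{-y}u(y)$, find the pdf of Z = X / Y. Since X and Y are never negative, Z is never negative.

$$F_Z(z) = P(Z \le z) = P(X/Y \le z)$$

$$F_Z(z) = P(X \le zY \cap Y > 0) + P(X \ge zY \cap Y < 0)$$

Since Y is never negative,

$$F_Z(z) = P(X \le zY \cap Y > 0)$$

Combinations of Two Random Variables

$$F_Z(z) = \int_{-\infty}^{\infty}\int_{-\infty}^{zy} f_{XY}(x,y)\,dx\,dy = \int_{0}^{\infty}\int_{0}^{zy} e^{-x}e^{-y}\,dx\,dy,\quad z \ge 0$$

$$F_Z(z) = \int_{0}^{\infty}\left(1 - e^{-zy}\right)e^{-y}\,dy = \left[-e^{-y} + \frac{e^{-y(z+1)}}{z+1}\right]_{0}^{\infty} = \frac{z}{z+1},\quad z \ge 0$$

$$f_Z(z) = \frac{\partial}{\partial z}F_Z(z) = \begin{cases} \dfrac{1}{(z+1)^2}, & z \ge 0 \\ 0, & z < 0 \end{cases}$$

or

$$f_Z(z) = \frac{u(z)}{(z+1)^2}$$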
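The result $F_Z(z) = z/(z+1)$ can be sanity-checked by simulation. The sketch below draws independent unit-rate exponential samples for X and Y (which is what the product form of the joint pdf describes) and counts how often $X \le zY$; the sample size and seed are arbitrary choices.

```python
import random

def empirical_cdf_of_ratio(z, n=100_000, seed=0):
    """Monte Carlo estimate of P(X / Y <= z) for independent unit-rate exponentials."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x = rng.expovariate(1.0)
        y = rng.expovariate(1.0)
        # Compare x <= z*y instead of x/y <= z to avoid dividing by a tiny y.
        if x <= z * y:
            hits += 1
    return hits / n

for z in (0.5, 1.0, 3.0):
    # Second column is the estimate, third column is z / (z + 1).
    print(z, empirical_cdf_of_ratio(z), z / (z + 1))
```

The estimate and the closed form should agree to roughly two decimal places at this sample size.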

Combinations of Two Random Variables

Example

The joint pdf of X and Y is defined as

$$f_{XY}(x,y) = \begin{cases} 6x, & x \ge 0,\ y \ge 0,\ x + y \le 1 \\ 0, & \text{otherwise} \end{cases}$$

Define Z = X − Y. Find the pdf of Z.

Given the constraints on X and Y, $-1 \le Z \le 1$.

The line Z = X − Y intersects X + Y = 1 at $X = \dfrac{1+Z}{2}$, $Y = \dfrac{1-Z}{2}$.

Combinations of Two Random Variables

For $0 \le z \le 1$,

$$F_Z(z) = 1 - \int_{0}^{(1-z)/2}\int_{y+z}^{1-y} 6x\,dx\,dy = 1 - \int_{0}^{(1-z)/2}\left[3x^2\right]_{y+z}^{1-y}dy$$

$$F_Z(z) = 1 - \frac{3}{4}(1-z)\left(1-z^2\right) \;\Rightarrow\; f_Z(z) = \frac{3}{4}(1-z)(1+3z)$$

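Combinations of Two Random Variables

For $-1 \le z \le 0$,

$$F_Z(z) = 2\int_{-z}^{(1-z)/2}\int_{0}^{y+z} 6x\,dx\,dy = 6\int_{-z}^{(1-z)/2}\left[x^2\right]_{0}^{y+z}dy = 6\int_{-z}^{(1-z)/2}(y+z)^2\,dy$$

The factor of 2 appears because the rest of the region, $(1-z)/2 \le y \le 1$ with $0 \le x \le 1-y$, contributes the same amount, $(1+z)^3/8$.

$$F_Z(z) = \frac{(1+z)^3}{4} \;\Rightarrow\; f_Z(z) = \frac{3(1+z)^2}{4}$$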
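The two branches of $F_Z$ and $f_Z$ for Z = X − Y can be cross-checked numerically: the pdf should integrate to 1 over $[-1, 1]$, and its running integral should reproduce the CDF. The sketch below codes the piecewise results from this example and uses a simple midpoint rule; the function names are illustrative.

```python
def cdf_z(z):
    """Piecewise CDF of Z = X - Y from the example."""
    if z < -1:
        return 0.0
    if z <= 0:
        return (1 + z) ** 3 / 4
    if z <= 1:
        return 1 - 0.75 * (1 - z) * (1 - z * z)
    return 1.0

def pdf_z(z):
    """Piecewise pdf of Z = X - Y from the example."""
    if -1 <= z <= 0:
        return 0.75 * (1 + z) ** 2
    if 0 < z <= 1:
        return 0.75 * (1 - z) * (1 + 3 * z)
    return 0.0

def integrate(f, a, b, n=20_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

print(integrate(pdf_z, -1.0, 1.0))              # total probability, approximately 1
print(integrate(pdf_z, -1.0, 0.3), cdf_z(0.3))  # the two values should agree closely
```

Both branches also meet at z = 0, where $F_Z(0) = 1/4$ and $f_Z(0) = 3/4$ from either side.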

Joint Probability Density Function

Conditional Probability

Let $A = \{Y \le y\}$

$$F_{X|A}(x) = \frac{P(X \le x \cap A)}{P(A)}$$

$$F_{X|Y \le y}(x) = \frac{P(X \le x \cap Y \le y)}{P(Y \le y)} = \frac{F_{XY}(x,y)}{F_Y(y)}$$

Let $A = \{y_1 < Y \le y_2\}$

$$F_{X|y_1 < Y \le y_2}(x) = \frac{F_{XY}(x,y_2) - F_{XY}(x,y_1)}{F_Y(y_2) - F_Y(y_1)}$$

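Joint Probability Density Function

Let $A = \{Y = y\}$

$$F_{X|Y=y}(x) = \lim_{\Delta y \to 0}\frac{F_{XY}(x,y+\Delta y) - F_{XY}(x,y)}{F_Y(y+\Delta y) - F_Y(y)} = \frac{\dfrac{\partial}{\partial y}\big(F_{XY}(x,y)\big)}{\dfrac{d}{dy}\big(F_Y(y)\big)}$$

$$F_{X|Y=y}(x) = \frac{\dfrac{\partial}{\partial y}\big(F_{XY}(x,y)\big)}{f_Y(y)}, \qquad f_{X|Y=y}(x) = \frac{\partial}{\partial x}\big(F_{X|Y=y}(x)\big) = \frac{f_{XY}(x,y)}{f_Y(y)}$$

Similarly,

$$f_{Y|X=x}(y) = \frac{f_{XY}(x,y)}{f_X(x)}$$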

Joint Probability Density Function

In a simplified notation

$$f_{X|Y}(x) = \frac{f_{XY}(x,y)}{f_Y(y)} \quad\text{and}\quad f_{Y|X}(y) = \frac{f_{XY}(x,y)}{f_X(x)}$$

Bayes’ Theorem

$$f_{X|Y}(x)\,f_Y(y) = f_{Y|X}(y)\,f_X(x)$$

Marginal pdf’s from joint or conditional pdf’s

$$f_X(x) = \int_{-\infty}^{\infty} f_{XY}(x,y)\,dy = \int_{-\infty}^{\infty} f_{X|Y}(x)\,f_Y(y)\,dy$$

$$f_Y(y) = \int_{-\infty}^{\infty} f_{XY}(x,y)\,dx = \int_{-\infty}^{\infty} f_{Y|X}(y)\,f_X(x)\,dx$$
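To make the conditional and marginal relations concrete, the sketch below reuses the earlier example pdf $f_{XY}(x,y) = 6x$ on the triangle $x \ge 0$, $y \ge 0$, $x + y \le 1$. The closed forms quoted in the comments, $f_Y(y) = 3(1-y)^2$ and $f_X(x) = 6x(1-x)$, are worked out here for illustration and do not appear on the slides.

```python
def f_xy(x, y):
    """Joint pdf from the earlier example: 6x on the triangle x, y >= 0, x + y <= 1."""
    return 6.0 * x if (x >= 0 and y >= 0 and x + y <= 1) else 0.0

def integrate(f, a, b, n=400):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

def f_y(y):
    """Marginal pdf of Y: integrate the joint pdf over x in [0, 1 - y]."""
    return integrate(lambda x: f_xy(x, y), 0.0, 1.0 - y)

def f_x_given_y(x, y):
    """Conditional pdf of X given Y = y: joint pdf divided by the marginal of Y."""
    return f_xy(x, y) / f_y(y)

def f_x(x):
    """Marginal pdf of X rebuilt from the conditional pdf and the marginal of Y."""
    return integrate(lambda y: f_x_given_y(x, y) * f_y(y), 0.0, 1.0 - x, n=100)

print(f_y(0.2))  # closed form 3 * (1 - 0.2)**2
print(f_x(0.5))  # closed form 6 * 0.5 * (1 - 0.5)
print(integrate(lambda x: f_x_given_y(x, 0.2), 0.0, 0.8))  # a conditional pdf integrates to 1
```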

Joint Probability Mass Function

It can be shown that, analogous to the pdf case, the conditional PMF of X given Y = y is

$$P_{X|Y}(x \mid y) = \frac{P_{XY}(x,y)}{P_Y(y)}$$

and the conditional PMF of Y given X = x is

$$P_{Y|X}(y \mid x) = \frac{P_{XY}(x,y)}{P_X(x)}$$

Bayes’ Theorem

$$P_{X|Y}(x \mid y)\,P_Y(y) = P_{Y|X}(y \mid x)\,P_X(x)$$

Marginal PMF’s from joint or conditional PMF’s

$$P_X(x) = \sum_{y \in S_Y} P_{XY}(x,y) = \sum_{y \in S_Y} P_{X|Y}(x \mid y)\,P_Y(y)$$

$$P_Y(y) = \sum_{x \in S_X} P_{XY}(x,y) = \sum_{x \in S_X} P_{Y|X}(y \mid x)\,P_X(x)$$
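The discrete relations can be verified exactly with rational arithmetic. The joint PMF table in this sketch is hypothetical, invented only to have numbers to work with.

```python
from fractions import Fraction as F

# Hypothetical joint PMF on S_X = {0, 1}, S_Y = {0, 1, 2}; the values sum to 1.
p_xy = {(0, 0): F(1, 8), (0, 1): F(1, 4), (0, 2): F(1, 8),
        (1, 0): F(1, 4), (1, 1): F(1, 8), (1, 2): F(1, 8)}

def p_x(x):
    """Marginal PMF of X: sum the joint PMF over y."""
    return sum(p for (a, _), p in p_xy.items() if a == x)

def p_y(y):
    """Marginal PMF of Y: sum the joint PMF over x."""
    return sum(p for (_, b), p in p_xy.items() if b == y)

def p_x_given_y(x, y):
    """Conditional PMF of X given Y = y."""
    return p_xy.get((x, y), F(0)) / p_y(y)

def p_y_given_x(y, x):
    """Conditional PMF of Y given X = x."""
    return p_xy.get((x, y), F(0)) / p_x(x)

print(p_x_given_y(0, 1))  # (1/4) / (3/8) = 2/3
# Bayes' theorem: both products equal the joint PMF value.
print(p_x_given_y(0, 1) * p_y(1) == p_y_given_x(1, 0) * p_x(0))
```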

Independent Random Variables

If two continuous random variables X and Y are independent then

$$f_{X|Y}(x) = f_X(x) = \frac{f_{XY}(x,y)}{f_Y(y)} \quad\text{and}\quad f_{Y|X}(y) = f_Y(y) = \frac{f_{XY}(x,y)}{f_X(x)}.$$

Therefore $f_{XY}(x,y) = f_X(x)\,f_Y(y)$ and their correlation is the product of their expected values

$$E(XY) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} xy\,f_{XY}(x,y)\,dx\,dy = \int_{-\infty}^{\infty} y\,f_Y(y)\,dy\int_{-\infty}^{\infty} x\,f_X(x)\,dx$$

$$E(XY) = E(X)\,E(Y)$$

Independent Random Variables

If two discrete random variables X and Y are independent then

$$P_{X|Y}(x \mid y) = P_X(x) = \frac{P_{XY}(x,y)}{P_Y(y)} \quad\text{and}\quad P_{Y|X}(y \mid x) = P_Y(y) = \frac{P_{XY}(x,y)}{P_X(x)}.$$

Therefore $P_{XY}(x,y) = P_X(x)\,P_Y(y)$ and their correlation is the product of their expected values

$$E(XY) = \sum_{y \in S_Y}\sum_{x \in S_X} xy\,P_{XY}(x,y) = \sum_{y \in S_Y} y\,P_Y(y)\sum_{x \in S_X} x\,P_X(x)$$

$$E(XY) = E(X)\,E(Y)$$
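As an illustration of $E(XY) = E(X)E(Y)$ for independent discrete variables, the sketch below builds the joint PMF of two dice as a product of marginals and evaluates both sides exactly. It assumes the dice are fair, which is an assumption made here for the example.

```python
from fractions import Fraction

faces = range(1, 7)
p = Fraction(1, 6)  # PMF of one fair die (assumed)

# Independence: the joint PMF is the product of the marginal PMFs.
p_xy = {(x, y): p * p for x in faces for y in faces}

e_x = sum(x * p for x in faces)
e_y = sum(y * p for y in faces)
e_xy = sum(x * y * q for (x, y), q in p_xy.items())

print(e_x, e_y, e_xy)     # 7/2 7/2 49/4
print(e_xy == e_x * e_y)  # True
```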

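Independent Random Variables

Covariance

$$\sigma_{XY} \equiv E\Big(\big[X - E(X)\big]\big[Y - E(Y)\big]^*\Big)$$

$$\sigma_{XY} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} \big(x - E(X)\big)\big(y^* - E(Y^*)\big)\,f_{XY}(x,y)\,dx\,dy$$

or

$$\sigma_{XY} = \sum_{y \in S_Y}\sum_{x \in S_X} \big(x - E(X)\big)\big(y^* - E(Y^*)\big)\,P_{XY}(x,y)$$

$$\sigma_{XY} = E(XY^*) - E(X)\,E(Y^*)$$

If X and Y are independent,

$$\sigma_{XY} = E(X)\,E(Y^*) - E(X)\,E(Y^*) = 0$$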

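Independent Random Variables

Correlation Coefficient

$$\rho_{XY} = E\left(\frac{X - E(X)}{\sigma_X}\times\frac{Y^* - E(Y^*)}{\sigma_Y}\right)$$

$$\rho_{XY} = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} \frac{x - E(X)}{\sigma_X}\,\frac{y^* - E(Y^*)}{\sigma_Y}\,f_{XY}(x,y)\,dx\,dy$$

or

$$\rho_{XY} = \sum_{y \in S_Y}\sum_{x \in S_X} \frac{x - E(X)}{\sigma_X}\,\frac{y^* - E(Y^*)}{\sigma_Y}\,P_{XY}(x,y)$$

$$\rho_{XY} = \frac{E(XY^*) - E(X)\,E(Y^*)}{\sigma_X\sigma_Y} = \frac{\sigma_{XY}}{\sigma_X\sigma_Y}$$

If X and Y are independent, $\rho_{XY} = 0$. If they are perfectly positively correlated, $\rho_{XY} = +1$, and if they are perfectly negatively correlated, $\rho_{XY} = -1$.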
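The two extreme values of the correlation coefficient can be reproduced with a small sketch. It assumes real-valued variables (so the complex conjugate has no effect) and equally likely sample pairs; the linear relations used are illustrative.

```python
import math

def rho(pairs):
    """Correlation coefficient of equally likely real-valued (x, y) pairs."""
    n = len(pairs)
    ex = sum(x for x, _ in pairs) / n
    ey = sum(y for _, y in pairs) / n
    cov = sum((x - ex) * (y - ey) for x, y in pairs) / n
    sx = math.sqrt(sum((x - ex) ** 2 for x, _ in pairs) / n)
    sy = math.sqrt(sum((y - ey) ** 2 for _, y in pairs) / n)
    return cov / (sx * sy)

xs = [1, 2, 3, 4, 5, 6]
print(rho([(x, 2 * x + 1) for x in xs]))  # perfectly positively correlated, close to +1
print(rho([(x, -x) for x in xs]))         # perfectly negatively correlated, close to -1
```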

Independent Random Variables

If two random variables are independent, their covariance is

zero.

However, if two random variables have a zero covariance

that does not mean they are necessarily independent.

Independence $\Rightarrow$ Zero Covariance

Zero Covariance $\nRightarrow$ Independence
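A standard counterexample shows why zero covariance does not imply independence. In this sketch X is equally likely to be −1, 0 or 1 and Y = X², so Y is completely determined by X, yet the covariance is exactly zero. The example is supplied here for illustration and is not from the slides.

```python
from fractions import Fraction

third = Fraction(1, 3)
# Joint PMF of X in {-1, 0, 1} (equally likely) and Y = X**2.
p_xy = {(-1, 1): third, (0, 0): third, (1, 1): third}

e_x = sum(x * p for (x, _), p in p_xy.items())
e_y = sum(y * p for (_, y), p in p_xy.items())
e_xy = sum(x * y * p for (x, y), p in p_xy.items())
cov = e_xy - e_x * e_y

p_x1 = sum(p for (x, _), p in p_xy.items() if x == 1)  # P(X = 1)
p_y1 = sum(p for (_, y), p in p_xy.items() if y == 1)  # P(Y = 1)

print(cov)                        # 0
print(p_xy[(1, 1)], p_x1 * p_y1)  # 1/3 versus 2/9, so the joint PMF does not factor
```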

Independent Random Variables

In the traditional jargon of random variable analysis, two

“uncorrelated” random variables have a covariance of zero.

Unfortunately, this does not also imply that their correlation is

zero. If their correlation is zero they are said to be orthogonal.

X and Y are "Uncorrelated" $\Rightarrow \sigma_{XY} = 0$

X and Y are "Uncorrelated" $\nRightarrow E(XY) = 0$

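Bivariate Gaussian Random Variables

$$f_{XY}(x,y) = \frac{\exp\left(-\dfrac{\left(\dfrac{x-\mu_X}{\sigma_X}\right)^2 - \dfrac{2\rho_{XY}(x-\mu_X)(y-\mu_Y)}{\sigma_X\sigma_Y} + \left(\dfrac{y-\mu_Y}{\sigma_Y}\right)^2}{2\left(1-\rho_{XY}^2\right)}\right)}{2\pi\,\sigma_X\sigma_Y\sqrt{1-\rho_{XY}^2}}$$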
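The bivariate Gaussian pdf translates directly into code. The sketch below is one way to write it in plain Python; the function and parameter names are illustrative. With $\rho_{XY} = 0$ the joint pdf should factor into the product of two one-dimensional Gaussian pdfs, which gives a simple check.

```python
import math

def bivariate_gaussian_pdf(x, y, mu_x, mu_y, sigma_x, sigma_y, rho):
    """Bivariate Gaussian pdf with correlation coefficient rho, where |rho| < 1."""
    zx = (x - mu_x) / sigma_x
    zy = (y - mu_y) / sigma_y
    q = (zx * zx - 2 * rho * zx * zy + zy * zy) / (2 * (1 - rho * rho))
    return math.exp(-q) / (2 * math.pi * sigma_x * sigma_y * math.sqrt(1 - rho * rho))

def gaussian_pdf(t, mu, sigma):
    """One-dimensional Gaussian pdf."""
    return math.exp(-((t - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

# Hypothetical parameter values for illustration.
joint = bivariate_gaussian_pdf(0.3, -0.8, 1.0, -1.0, 2.0, 0.5, 0.0)
product = gaussian_pdf(0.3, 1.0, 2.0) * gaussian_pdf(-0.8, -1.0, 0.5)
print(joint, product)  # the two values should agree when rho = 0
```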

Bivariate Gaussian Random

Variables

Any cross section of a bivariate Gaussian pdf at any value of x or y

is Gaussian. The marginal pdf’s of X and Y can be found using

$$f_X(x) = \int_{-\infty}^{\infty} f_{XY}(x,y)\,dy$$

which turns out to be

$$f_X(x) = \frac{e^{-(x-\mu_X)^2/2\sigma_X^2}}{\sigma_X\sqrt{2\pi}}$$

Similarly,

$$f_Y(y) = \frac{e^{-(y-\mu_Y)^2/2\sigma_Y^2}}{\sigma_Y\sqrt{2\pi}}$$

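Bivariate Gaussian Random Variables

The conditional pdf of X given Y is

$$f_{X|Y}(x) = \frac{\exp\left(-\dfrac{\Big(x - \mu_X - \rho_{XY}\left(\sigma_X/\sigma_Y\right)\left(y - \mu_Y\right)\Big)^2}{2\sigma_X^2\left(1-\rho_{XY}^2\right)}\right)}{\sqrt{2\pi}\,\sigma_X\sqrt{1-\rho_{XY}^2}}$$

The conditional pdf of Y given X is

$$f_{Y|X}(y) = \frac{\exp\left(-\dfrac{\Big(y - \mu_Y - \rho_{XY}\left(\sigma_Y/\sigma_X\right)\left(x - \mu_X\right)\Big)^2}{2\sigma_Y^2\left(1-\rho_{XY}^2\right)}\right)}{\sqrt{2\pi}\,\sigma_Y\sqrt{1-\rho_{XY}^2}}$$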
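The conditional pdf of X given Y is itself Gaussian, with mean $\mu_X + \rho_{XY}(\sigma_X/\sigma_Y)(y - \mu_Y)$ and standard deviation $\sigma_X\sqrt{1-\rho_{XY}^2}$. The sketch below checks numerically that this form equals $f_{XY}(x,y)/f_Y(y)$; the parameter values are hypothetical and the function names are illustrative.

```python
import math

def normal(t, mu, sigma):
    """One-dimensional Gaussian pdf."""
    return math.exp(-((t - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

def joint(x, y, mx, my, sx, sy, r):
    """Bivariate Gaussian pdf with correlation coefficient r, where |r| < 1."""
    zx, zy = (x - mx) / sx, (y - my) / sy
    q = (zx * zx - 2 * r * zx * zy + zy * zy) / (2 * (1 - r * r))
    return math.exp(-q) / (2 * math.pi * sx * sy * math.sqrt(1 - r * r))

def conditional_x_given_y(x, y, mx, my, sx, sy, r):
    """Conditional pdf of X given Y = y, written as a shifted and narrowed Gaussian."""
    mean = mx + r * (sx / sy) * (y - my)
    std = sx * math.sqrt(1 - r * r)
    return normal(x, mean, std)

# Hypothetical parameters: means 1 and -1, standard deviations 2 and 0.5, rho = 0.6.
params = (1.0, -1.0, 2.0, 0.5, 0.6)
ratio = joint(0.3, -0.8, *params) / normal(-0.8, params[1], params[3])
direct = conditional_x_given_y(0.3, -0.8, *params)
print(ratio, direct)  # the two values should agree
```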