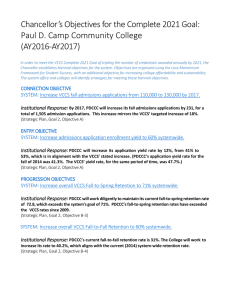

REPORT OF THE VCCS TASK FORCE

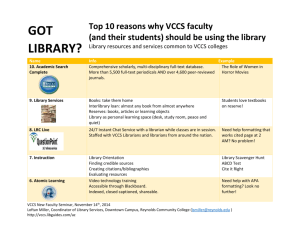
