Introduction to Numerical Analysis I, Handout 9


1 Numerical Differentiation and Integration

We developed a tool (interpolation) that will serve us as follows: given a polynomial interpolant $P_n(x)$ of $f(x)$, for each linear operator $L$ we have

$$Lf(x) \approx LP_n(x),$$

and the error is given by

$$e(x) = L\left[f(x) - P_n(x)\right] = L\left\{f[x_0,\dots,x_n,x]\,p(x)\right\},$$

where $p(x) = \prod_{k=0}^{n}(x - x_k)$.

1.1 Numerical Differentiation/Finite Differences

Definition 1.1 (First Numerical Derivative). Let $P_n(x)$ be the polynomial interpolant of $f(x)$ at the interpolation points $\{x_k\}_{k=0}^{n}$. Then

$$f'(x) = P_n'(x) + \frac{d}{dx}\left\{f[x_0,\dots,x_n,x]\,p(x)\right\}.$$

The error is given by

$$e(x) = f'(x) - P_n'(x) = \frac{d}{dx}\left\{f[x_0,\dots,x_n,x]\,p(x)\right\} = f[x_0,\dots,x_n,x]\,p'(x) + p(x)\,\frac{d}{dx}f[x_0,\dots,x_n,x].$$

From the term $\frac{d}{dx}f[x_0,\dots,x_n,x]$ we learn that we need to differentiate divided differences.

Definition 1.2 (Derivative of DD).

$$\frac{d}{dx}f[x_0,\dots,x_k,x] = \lim_{h\to 0}\frac{f[x_0,\dots,x_k,x+h] - f[x_0,\dots,x_k,x]}{h} = \lim_{h\to 0} f[x_0,\dots,x_k,x,x+h] = f[x_0,\dots,x_k,x,x].$$

Finally, given that $f(x)$ has $n+2$ derivatives, the error formula is

$$e(x) = f'(x) - P_n'(x) = f[x_0,\dots,x_n,x]\,p'(x) + p(x)\,f[x_0,\dots,x_n,x,x] = \frac{f^{(n+1)}(c)}{(n+1)!}\,p'(x) + \frac{f^{(n+2)}(\tilde c)}{(n+2)!}\,p(x).$$

Note that in the simplest cases one of the polynomial parts, $p(x)$ or $p'(x)$, vanishes.

Example 1.3. Interpolating at the two points $a$ and $a+h$ (so $n = 1$ in the error formula),

$$f(x) \approx P_1(x) = f[a] + f[a,a+h]\,(x-a).$$

Thus,

$$f'(x) = \frac{f(a+h)-f(a)}{h} + \frac{f^{(2)}(c)}{2}\big((x-a-h)+(x-a)\big) + \frac{f^{(3)}(\tilde c)}{6}\,(x-a)(x-a-h),$$

and at $x = a$, where $p(a) = 0$ and $p'(a) = -h$,

$$f'(a) = \frac{f(a+h)-f(a)}{h} - \frac{h}{2}\,f^{(2)}(c).$$

Example 1.4. We previously found $\sin x \approx P_3(x) = \frac{4}{\pi}x - \frac{4}{\pi^2}x^2$, therefore

$$\cos x = \sin' x \approx \frac{d}{dx}P_3(x) = \frac{4}{\pi} - \frac{8}{\pi^2}x \approx 1.27 - 0.81x.$$

Definition 1.5 (Higher order Numerical Differentiation). Let $P_n(x)$ be the polynomial interpolant of $f(x)$ at the interpolation points $\{x_k\}_{k=0}^{n}$. Suppose that $f(x)$ has $n+m+1$ derivatives ($m \le n$). Then

$$f^{(m)}(x) = P_n^{(m)}(x) + \frac{d^m}{dx^m}\left\{f[x_0,\dots,x_n,x]\,p(x)\right\},$$

and the error is given by

$$e(x) = f^{(m)}(x) - P_n^{(m)}(x) = \frac{d^m}{dx^m}\left\{f[x_0,\dots,x_n,x]\,p(x)\right\}$$
$$= \frac{d^{m-1}}{dx^{m-1}}\left\{f[x_0,\dots,x_n,x]\,p'(x) + f[x_0,\dots,x_n,x,x]\,p(x)\right\}$$
$$= \frac{d^{m-2}}{dx^{m-2}}\left\{f[x_0,\dots,x_n,x]\,p''(x) + 2f[x_0,\dots,x_n,x,x]\,p'(x) + 2f[x_0,\dots,x_n,x,x,x]\,p(x)\right\} = \cdots$$

where the last step uses $\frac{d}{dx}f[x_0,\dots,x_n,x,x] = 2f[x_0,\dots,x_n,x,x,x]$, since $x$ appears twice as an argument.

Definition 1.6 (Forward (Backward) Differences). A scheme that uses (some of) the interpolation points $\{a + jh\}_{j=0}^{n}$ (respectively $\{a - jh\}_{j=0}^{n}$).

Definition 1.7 (Central Differences). A scheme that uses (some of) the interpolation points $\{a + jh\}_{j=0}^{n} \cup \{a - jh\}_{j=0}^{n}$, chosen symmetrically about $a$.

Theorem 1.8. Let $m$ be an integer and let $n + 1 = 2m$. Given interpolation points $x_0 \le \dots \le x_n$ symmetrically distributed around a point $a$, that is,

$$a - x_{(m-1)-k} = -\left(a - x_{m+k}\right), \quad\text{or alternatively}\quad a = \frac{x_{(m-1)-k} + x_{m+k}}{2}$$

for any $k$, then

$$\left.\frac{d}{dx}\prod_{k=0}^{n}(x - x_k)\right|_{x=a} = 0.$$
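As a quick numerical check of Theorem 1.8, the following Python sketch evaluates $p'(a)$ by the product rule, $p'(x) = \sum_j \prod_{k \ne j}(x - x_k)$, once for symmetric nodes and once for forward nodes. The helper name `node_poly_derivative` and the values `a = 0.5`, `h = 0.1` are illustrative assumptions, not part of the handout.

```python
from math import prod

def node_poly_derivative(nodes, x):
    """Return p'(x) for p(x) = prod_k (x - x_k), using the product rule."""
    return sum(
        prod(x - xk for k, xk in enumerate(nodes) if k != j)
        for j in range(len(nodes))
    )

# Illustrative values (assumptions, not taken from the handout).
a, h = 0.5, 0.1

# n + 1 = 4 = 2m nodes placed symmetrically around a (central differences).
symmetric_nodes = [a - 2 * h, a - h, a + h, a + 2 * h]
# Forward nodes a, a + h, a + 2h, a + 3h for comparison.
forward_nodes = [a, a + h, a + 2 * h, a + 3 * h]

dp_symmetric = node_poly_derivative(symmetric_nodes, a)
dp_forward = node_poly_derivative(forward_nodes, a)

print("p'(a), symmetric nodes:", dp_symmetric)  # zero up to rounding
print("p'(a), forward nodes:  ", dp_forward)    # nonzero
```

For the symmetric nodes the $p'(a)$ term of the error formula drops out, which is why central schemes gain an order of accuracy. For the forward nodes $p(a) = 0$ instead, so only the $p'(a)$ term remains.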

1.1.1

1.1.2

Developing FD schemes using Polynomial Basis

Consider f 0 (a) ≈ c1 f (a) + c2 f (a − h) + c3 f (a + h)

The Taylor expansion gives

Definition 1.9 (The order of approximation).

The order of approximation is defined to be the minimal p that satisfies the following inequality

p

f (a) = f (a)

f (a ± h) = f (a) ± hf 0 (a) +

p

|e(x)| ≤ ch = O(h ),

1

1

1

min |xi − xj | 6 h 6 max |xi − xj | .

i6=j

Definition 1.11 (Algebraic Order of Exactness).

An approximation method has an algebraic order of

exactness p if p is the maximal degree of a polynomial for which the approximation provides an exact

solution.

1.1.3

Example 1.12. For example consider Polynomial

basis {1, x, x2 , x3 , ...} The forward formula gives a

first order

f

f

f

h3 (3)

f

6

(a) + O h4

0

1

−1

f (a)

0

f (a)

0

1 hf (a) = f (a + h) +O h3

h2 00

1

f (a − h)

f (a)

2

Subtract between the (±) equations

to get f (a + h)−

f (a − h) = 2hf 0 (a) + O h3 then solve for f 0 (a) to

get the central formula. Similarly, add between the

equations to get

f (a + h) − 2f (a) + f (a − h) = h2 f 00 (a) + O h4

f (a + h) − 2f (a) + f (a − h)

⇒ f 00 (a) =

+ O h2

h2

f (a + h) − f (a − h)

+ O(h2 )

f 0 (a) =

2h

f

(a) ±

We don’t need to solve the linear system. One writes

2

f (a ± h) − f (a) = ±hf 0 (a) + h2 f 00 (a) + O h3

Example 1.10. For example the central difference

formula for the first derivative has a first order, that

is of second order, since

0

h2 00

f

2

Rewrite in the matrix form

where e(x) is the error of approximation and

i6=j

Developing FD schemes using Taylor

Expansion

Sensitivity to error

Let f˜ (x) = f (x) + ẽ (x) and assume |ẽ (xj )| 6 ε

and f 3 (x) 6 M . Use the central formula for first

derivative f 0 (a) =

Then

f (a + h) − f (a)

(a) ≈

h

1−1

=1⇒

= 0 = f0

h

x+h−x

= 1 = f0

=x⇒

h

(x + h)2 − x2

2xh + h2

= x2 ⇒

=

= 2x + O (h)

h

h

f (a+h)−f (a−h)

2h

−

f (3) (c) 2

h .

6

f (a + h) − f (a − h) f (3) (c) 2

f˜0 (a) =

−

h +

2h

6

ẽ (a + h) − ẽ (a − h)

2h

Thus, the error is

(3)

f (c) 2 ẽ (a + h) − ẽ (a − h) 6

e (x) = −

h +

6

2h

M 2 |ẽ (a + h)| + |ẽ (a − h)| M 2 ε

h +

→ ∞

= h +

6 2h

6

h h→0

The latest example provide an algorithm to create FD schemes. Let D be differential operator. To

p

P

approximate D at point a by Df (a) =

cj f (a+jh)

j=1

one solves the following linear system of equation

To find the optimal value of h one solves

D1

Dx

..

.

D xp

c1

c2

..

.

cp

=

D1

Dx

..

.

D xp

e0 (x) =

to get h =

Example 1.13. Let f 0 (a) ≈ c1 f (a) + c2 f (a − h).

To find c1 , c2 solve

f = 1 ⇒ c1 + c2 = 0 ⇒ c2 = −c1

f = x ⇒ c1 a + c2 (a − h) = 1

To get the backward formula f 0 (a) ≈

f (a)−f (a−h)

h

2

q

3

3

M ε.

M

ε

h− 2 =0

3

h

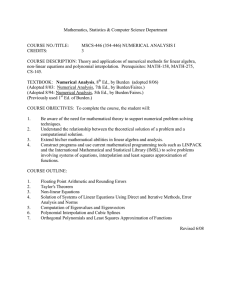

See the graph of e(x) below.
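The trade-off between truncation error and data error can be observed numerically. The Python sketch below applies the central formula to perturbed samples of $\sin x$ and compares the observed error with the bound $\frac{M}{6}h^2 + \frac{\varepsilon}{h}$. The test function, the point `a = 1`, the noise level `eps = 1e-6`, the worst-case perturbation in `noisy_sin`, and all function names are assumptions chosen for illustration, not part of the handout.

```python
import math

def central_diff(f, a, h):
    """Central difference (f(a+h) - f(a-h)) / (2h)."""
    return (f(a + h) - f(a - h)) / (2 * h)

def error_bound(h, M, eps):
    """Bound M h^2 / 6 + eps / h from the sensitivity analysis."""
    return M * h**2 / 6 + eps / h

def optimal_step(M, eps):
    """Minimizer of the bound: h = (3 eps / M)^(1/3)."""
    return (3 * eps / M) ** (1 / 3)

# Illustrative assumptions: f = sin, so |f'''| = |cos| <= M = 1.
a, M, eps = 1.0, 1.0, 1e-6

def noisy_sin(x):
    # Worst-case perturbation: +eps to the right of a, -eps to the left.
    return math.sin(x) + (eps if x > a else -eps)

h_opt = optimal_step(M, eps)
results = []
for h in (1e-1, h_opt, 1e-5):
    err = abs(central_diff(noisy_sin, a, h) - math.cos(a))
    bound = error_bound(h, M, eps)
    results.append((h, err, bound))
    print(f"h = {h:.3e}   error = {err:.3e}   bound = {bound:.3e}")
```

With these values the step near $h = \sqrt[3]{3\varepsilon/M}$ gives the smallest error of the three: the larger step is dominated by the $h^2$ truncation term and the smaller one by the $\varepsilon/h$ data-error term.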