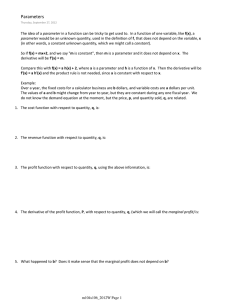

Proceedings of the 15th IEEE International Symposium on Intelligent Control (ISIC 2000), Rio, Patras, GREECE. MC4-3

0-7803-6491-0/00/$10.00 ©2000 IEEE

AN ADAPTIVE LEARNING SYSTEM YIELDING UNBIASED PARAMETER ESTIMATES

DANIEL W. REPPERGER (1), SEDDIK DJOUADI (2)

(1) Air Force Research Laboratory, AFRL/HECP, WPAFB, Ohio 45433, USA, D.Repperger@IEEE.ORG
(2) National Research Council, AFRL/HECP, WPAFB, Ohio 45433, USA

Abstract. A learning system involving model reference adaptive control (MRAC) algorithms is studied in which the Lyapunov function and its associated time derivative are simultaneously quadratic functions of both the position tracking error and the parameter estimation error. An implementation method is described which expands results from [5]. Initially it appears that the condition of persistent excitation (PE) need not be explicitly satisfied; however, this condition is actually implicit in the requirements for a solution.

Key Words: Adaptive Control, Learning System

1. INTRODUCTION

This paper addresses a specific class of learning systems, or MRAC algorithms, which have the interesting property that both the Lyapunov function and its associated time derivative along the motion trajectory are quadratically dependent on both the tracking error and the parameter estimation error. If this type of algorithm can be successfully implemented, then unbiased parameter estimates can be obtained. Model reference adaptive control is well established: by the time of the survey in [1], over 1,500 papers had been reported, with numerous experimental results. The notation used herein and a brief description of the conventional MRAC method are discussed first.

2. THE DIRECT METHOD OF THE STANDARD MRAC PROBLEM

Using the nomenclature of [2], the scalar tracking error \( e_0(t) = y_p(t) - y_m(t) \) in Fig. 1 represents the difference between the plant's output \( y_p \) and the reference model output \( y_m \). The unknown parameter vector is \( \theta \), which is \( m \times 1 \), and its adjustment mechanism only allows knowledge of \( y_p(t) \), \( y_m(t) \), and possibly their respective derivatives.

A standard solution to this problem is given by Lemma 1 [3]:

Lemma 1. Let a state-space description (\( x \in R^n \), \( e_0 \in R^1 \), \( v \in R^m \), \( \theta \in R^m \)) of Figure 1 be of the form:

\[ \dot{x} = A x + b\,[\,k\,\tilde{\theta}^T v(t)\,] \tag{1} \]

\[ e_0 = c^T x \tag{2} \]

The remaining matrices are of appropriate dimensions with \( A \) being Hurwitz, the scalar \( k \) is unknown (except for sign), the pair \( (A, b) \) is completely controllable with \( b \) known, and \( v(t) \) is a measured variable to be defined in the sequel. For the parameter vector, \( \theta^* \) represents the true value, \( \hat{\theta} \) is the estimate, and \( \tilde{\theta} = \hat{\theta} - \theta^* \) is the parameter error. With some abuse of notation (mixing the Laplace transform variable \( p \) with the time domain variables), the error vector resulting from equation (2) admits the form:

\[ e_0(t) = H(p)\,[\,k\,\tilde{\theta}^T v(t)\,] \tag{3} \]

The transfer function \( H(p) \) is strictly positive real (SPR) (stable, minimum phase, and of relative degree no greater than unity). The adaptation law is given by:

\[ \dot{\hat{\theta}}(t) = -\operatorname{sgn}(k)\,\gamma\,e_0(t)\,v(t) \tag{4} \]

where \( \gamma > 0 \). Then \( e_0(t) \) and \( \tilde{\theta}(t) \) are globally bounded, and if \( v(t) \) is bounded, then \( e_0(t) \to 0 \) as \( t \to \infty \).

Associated with this problem is a Lyapunov function (with \( Y = (x, \tilde{\theta}) \)):

\[ V(Y) = V(x, \tilde{\theta}) = x^T P x + \frac{|k|}{\gamma}\,\tilde{\theta}^T \tilde{\theta} \tag{5} \]

and \( \gamma > 0 \) controls the rate of parameter adaptation such that the parameters change more slowly than the effects they induce on the error \( e_0(t) \). It can be shown that:

(i) As \( |Y| \to \infty \), \( V(Y) \to \infty \) (radially unbounded condition).
(ii) \( V(Y) > 0 \) if \( Y \neq 0 \) (positive definite property).
(iii) To show that \( \dot{V}(Y) \le 0 \) (negative semi-definite property of \( \dot{V} \)), if \( A \) is Hurwitz, using the Kalman-Yakubovich lemma (there exist positive definite matrices \( P \) and \( Q \) such that \( A^T P + P A = -Q \) and \( P b = c \)) and with the fact that \( H(p) \) is SPR, then it follows:

\[ \dot{V}(Y) = -x^T Q x \le 0 \tag{6} \]

It is noted that only \( e_0(t) \to 0 \) as \( t \to \infty \) is guaranteed. \( \tilde{\theta} \to 0 \) may not occur due to the fact that \( \dot{V} \) does not depend explicitly on \( \tilde{\theta} \). The persistent excitation condition can mitigate this situation [3,4].

3. THE PERSISTENT EXCITATION CONDITION

If the reference model in Figure 1 satisfies:

[Equation (7) is illegible in the source.]

where \( r(t) \) is the input forcing function to the reference model, the persistent excitation condition would normally require, on \( v = [r, e_0]^T \), through the choice of \( r(t) \) in Figure 1:

\[ \int_t^{t+T} v(\tau)\,v^T(\tau)\,d\tau \ge \alpha_1 I \tag{8} \]

where there exists \( \alpha_1 > 0 \), \( I \) is the identity matrix, and \( T > 0 \), for any \( t > 0 \). With this condition in place, the biased parameter estimation problem can be mitigated. An alternative approach to this problem is now provided.

4. A NEW ADAPTATION ALGORITHM [5]

For this problem, first define a scalar sliding state variable \( s(t) \) as follows:

[Equation (9) is illegible in the source.]

where the term:

\[ \tilde{x} = x(t) - x_m(t) \tag{10} \]

will be used to represent tracking error. The choice is now made of the following Lyapunov function:

[Equation (11) is illegible in the source.]

where \( s(t) \) is the tracking error of equation (9) and the positive constants \( \gamma_1 \) and \( \gamma_2 \) will be specified later. The time derivative of \( V_1 \) along its motion trajectory is required to satisfy:

[Equation (12) is illegible in the source.]

where \( \lambda \) is identical to the variable used in (9) and will be defined later with the positive constants \( \gamma_3 \) and \( \gamma_4 \).

4.1 Assumptions

The following assumptions are implicit in what is to follow:

(1) The true parameter \( \theta^* \) is constant.
(2) \( s(t) \) satisfies the following relationship [5]:

[Equation (13) is illegible in the source.]

where the measured variable \( v(t) \) is specified via:

\[ v(t) = \ddot{x}_m - 2\lambda\,\dot{\tilde{x}} - \lambda^2\,\tilde{x} \tag{14} \]

Hence it is required to measure the variable \( x_m \) and its next two derivatives as well as obtain measurements of \( \tilde{x} \) and its first derivative. The algorithm now follows.

4.2 Derivation of the Algorithm

The goal is to simultaneously satisfy (11) and (12). Differentiating \( V_1 \) of (11) yields:

\[ \dot{V}_1 = s(t)\,\dot{s}(t) + \gamma_1\,\tilde{\theta}\,\dot{\tilde{\theta}} + \gamma_2\,\dot{\tilde{\theta}}\,\ddot{\tilde{\theta}} \tag{15} \]

Using the relationship in equation (13), the following results:

\[ \dot{V}_1 = s\,[\,-\lambda s + \tilde{\theta}\,v(t)\,] + \gamma_1\,\tilde{\theta}\,\dot{\tilde{\theta}} + \gamma_2\,\dot{\tilde{\theta}}\,\ddot{\tilde{\theta}} \tag{16} \]

which is required to satisfy \( \dot{V}_1 \) of equation (12). This will occur if the following relationship holds:

[Equation (17) is illegible in the source.]

This equation will now be simplified and various solutions examined. This extends results from [5] to a larger class of solutions.

5. THE NONLINEAR EQUATION TO BE SATISFIED

For notational simplicity, it is easier to denote \( Z(t) = \tilde{\theta} = \hat{\theta} - \theta^* \), and since \( \theta^* \) is constant then:

\[ \dot{Z}(t) = \dot{\hat{\theta}}(t) \tag{18} \]

and the adaptation law is then specified independent of knowledge of the true parameter \( \theta^* \). Also for brevity, the variable \( A(t) = v(t)\,s(t) \) is known from measured quantities (cf. equations (9) and (14)). Equation (17) now simplifies to the form:

[Equation (19) is illegible in the source.]

The goal is to provide solutions of (19) which are stable and not trivial.

6. SOME ALTERNATIVE SOLUTIONS OF (19)

If the right hand side of equation (19) could be set to zero, the left hand side then becomes linear since the \( \dot{Z} \) term cancels out. If (sufficient condition):

[Equation (20) is illegible in the source.]

then the following linear equation in \( Z \) has to be solved:

[Equation (21) is illegible in the source.]

Since all \( \gamma_i > 0 \), \( i = 1, \ldots, 4 \), then (21) is Hurwitz for properly selected \( \gamma_i \). This leads to the following methodology for the selection of the \( \gamma_i \) in equations (11) and (12).

7. METHOD OF SELECTION OF \( \gamma_i \) AND IMPLEMENTATION OF THE ALGORITHM

A three step procedure will implement this algorithm:

Step 1: Pick \( \gamma_i \) such that equation (21) is strictly Hurwitz, i.e. the solution of (21) is of the form:

[Equation (22) is illegible in the source.]

where the real parts of \( a_3 \) and \( a_4 \) are both \( > 0 \). Then

\[ \lim_{t \to \infty} Z(t) \to 0 \tag{23} \]

and the parameters are unbiased since \( \hat{\theta}(t) \to \theta^* \).

Step 2: From (20) this also implies that \( A(t) \) must also be of exponential order since:

[Equation (24) is illegible in the source.]

which would now satisfy \( \lim_{t \to \infty} A(t) \to 0 \). This means that both tracking error variables (contained in \( s(t) \) of equation (9) and \( v(t) \) of equation (14)) would have to converge to zero. Thus both tracking error and parameter error convergence are established simultaneously.

Step 3: There still exists a caveat from the procedure so far. What is unknown is:

[Equation (25) is illegible in the source.]

if the estimator \( \hat{\theta}(0) = 0 \) is unbiased, which is usually the case. This implies we know the true parameter \( \theta^* \) if we know \( Z(0) \). To circumvent this difficulty, the procedure is modified to determine \( \dot{Z}(t) \) rather than \( Z(t) \) via the following sequence of events:

(a) Solve equation (24) for \( Z(t) \) and substitute the results into (21). This yields:

[Equation (26) is illegible in the source.]

(b) Now define a new variable:

\[ Y(t) = \dot{Z} = \dot{\hat{\theta}}(t) \tag{27} \]

Then \( Y(t) \) is Hurwitz and satisfies:

\[ Y(0) = 0 \tag{28} \]

[Equation (29), a first-order linear differential equation in \( Y(t) \) driven by \( f(t) \), is illegible in the source.]

where \( f(t) \) is of exponential order since:

[Equation (30), which expresses \( f(t) \) as a constant multiple of \( A(t) \), is illegible in the source.]

and \( A(t) \) is of exponential order from equation (24).

(c) Thus the adaptation algorithm is to calculate \( Y(t) \) via (28-29) and then:

[Equation (31) is illegible in the source.]

and

\[ \dot{\hat{\theta}}(t) = Y(t) \tag{32} \]

Numerical simulations of examples are presented in Figures 2, 3 and 4, which will be discussed at the conference during this paper's presentation.

8. CONCLUSIONS AND DISCUSSION

A simple method of providing both parameter error convergence and tracking error convergence is demonstrated by taking a special case solution of a nonlinear equation which describes potential adaptation algorithms. In this special case solution, it is possible to guarantee that the tracking error, as well as the parameter estimation error, is Hurwitz.

9. REFERENCES

[1] K. J. Astrom, "Theory and Applications of Adaptive Control - A Survey," Automatica, Vol. 19, No. 5, pp. 471-486, 1983.

[2] S. Sastry and M. Bodson, Adaptive Control: Stability, Convergence, and Robustness, Prentice Hall, 1989.

[3] J.-J. E. Slotine and W. Li, Applied Nonlinear Control, Prentice-Hall Inc., 1991.

[4] K. S. Narendra and A. M. Annaswamy, Stable Adaptive Systems, Prentice-Hall Inc., 1989.

[5] D. W. Repperger and J. H. Lilly, "A Study on a Class of MRAC Algorithms," Proceedings of the 1999 IEEE CDC, December 1999, Phoenix, Arizona.

[Figure 2 - Method from [3]. Horizontal axis: time in seconds. Plot data not recoverable.]

[Figure 3 - New Method. Legend entries include "Non PE" and "New Algorithm". Plot data not recoverable.]
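
The bias problem described in Sections 2 and 3 can be illustrated numerically. The following is a minimal Python sketch, not the authors' simulation and not the new algorithm of Section 7. It assumes a hypothetical first-order plant and reference model (the values of `a_p`, `b_p`, `a_m`, `b_m`, the gain `gamma`, the step size, and the function name `simulate_mrac` are all illustrative choices that do not appear in the paper) and applies the standard gradient adaptation law associated with Lemma 1, namely the estimate's derivative equal to -sgn(k) * gamma * e0 * v with v = [r, y], using forward-Euler integration.

```python
import math

def simulate_mrac(r, y0=0.0, t_end=10.0, dt=1e-3, gamma=2.0):
    """Forward-Euler simulation of a first-order MRAC loop.

    Returns (final tracking error, norm of final parameter error).
    All numerical values are illustrative assumptions, not from the paper.
    """
    a_p, b_p = -1.0, 3.0   # assumed plant:  dy/dt  = -a_p*y  + b_p*u
    a_m, b_m = 4.0, 4.0    # assumed model:  dym/dt = -a_m*ym + b_m*r
    # Ideal gains: with these, the closed loop matches the reference model.
    ideal_r, ideal_y = b_m / b_p, (a_p - a_m) / b_p
    sgn_k = math.copysign(1.0, b_p)  # only the sign of k = b_p is used

    y, ym = y0, 0.0
    th_r, th_y = 0.0, 0.0  # parameter estimates, initialised at zero
    for i in range(int(round(t_end / dt))):
        rt = r(i * dt)
        e0 = y - ym                  # tracking error
        u = th_r * rt + th_y * y     # control law u = theta_hat^T v
        dy = -a_p * y + b_p * u
        dym = -a_m * ym + b_m * rt
        # Gradient adaptation law: d(theta_hat)/dt = -sgn(k)*gamma*e0*v
        dth_r = -sgn_k * gamma * e0 * rt
        dth_y = -sgn_k * gamma * e0 * y
        y += dt * dy
        ym += dt * dym
        th_r += dt * dth_r
        th_y += dt * dth_y
    return y - ym, math.hypot(th_r - ideal_r, th_y - ideal_y)

# Non-PE case: zero reference, non-zero initial plant output.
e_flat, err_flat = simulate_mrac(lambda t: 0.0, y0=1.0)
# PE case: sinusoidal reference.
e_sin, err_sin = simulate_mrac(lambda t: 4.0 * math.sin(3.0 * t), t_end=60.0)
print(e_flat, err_flat, e_sin, err_sin)
```

With r(t) = 0 the regressor is not persistently exciting: the tracking error decays, but the feedforward gain estimate never moves from its initial value, so the parameter estimate stays biased. With a sinusoidal reference, the same law should drive the estimates toward the ideal gains, which is the behaviour the PE condition of Section 3 is meant to secure.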