

CAPACITY OF GAUSSIAN CHANNELS WITH NOISE UNCERTAINTY

Stojan Z. Denic¹, Charalambos D. Charalambous¹,³, Seddik M. Djouadi²

¹ School of Information Technology and Engineering, University of Ottawa, Ottawa, Canada

² Electrical and Computer Engineering Department, University of Tennessee, Knoxville, USA

sdenic@site.uottawa.ca, chadcha@site.uottawa.ca, djouadi@ece.utk.edu

Abstract

In this paper, the problem of defining and computing the capacity of a communication channel when the statistics of the additive noise are not fully known is addressed. The communication channel is specified as a continuous time channel with a known transfer function, where the transmitted signal is constrained in power and the noise is additive and Gaussian. The power spectral density of the noise, although unknown, belongs to a known set defined through the uncertainty of the filter that shapes the power spectral density of the noise. The channel capacity is defined as the max-min of the mutual information rate between the transmitted and received signals, where the infimum is taken over the set of all possible power spectral densities of the noise, and the supremum is taken over all power spectral densities of the transmitted signal with constrained power. It is shown that the channel capacity so defined is equal to the operational capacity, which represents the supremum of all attainable rates over the given channel.

Keywords: Channel capacity; Uncertain noise.

1. INTRODUCTION

In classical information and communication theory, it is very often assumed that the communication channel is fully known to the transmitter and receiver. This means that both the transmitter and the receiver are perfectly aware of all channel parameters, such as the parameters of the channel frequency or impulse response, and the statistics of the noise. Although this may be true for some communication channels, where the channel can be measured with high accuracy, there are many situations in which the channel is not perfectly known to the transmitter and receiver, and this affects the performance of the communication system.

Some examples of communication systems with channel uncertainty include wireless communication systems, communication networks, and communication systems in the presence of jamming. For instance, in wireless communication, channel parameters such as attenuation, delay, phase, and Doppler spread change constantly with time, which gives rise to uncertainty. To enable reliable and efficient communication, the receiver has to estimate the channel parameters. Also, a receiver operating in a communication network has to cope with interference from other users that transmit signals on the same channel, and whose signals could have characteristics unknown to the receiver. In the case of adversarial jamming, the parameters of the jamming signal are usually unknown to the transmitter and receiver, making the communication channel uncertain.

The above discussion only partially explains the importance of channel uncertainty in communications. The interested reader is referred to [1], [2], [3], which give an excellent overview of the topic and are a good source of other important references.

From the above discussion, it can be concluded that there are two major sources of channel uncertainty. One is the uncertainty of the channel response, and the other is the lack of knowledge of the noise or interference characteristics affecting the transmitted signal. This paper is concerned with the information-theoretic limits for the latter case, for a continuous time channel with additive Gaussian noise when the power spectral density of the noise is only partially known to the receiver. It is assumed that the transmitted signal is power limited and that the frequency response of the channel is perfectly known.

The channel capacity in the presence of noise uncertainty will be defined, and an explicit formula for the channel capacity will be derived. The problem of defining and computing the capacity of a channel with noise uncertainty is alleviated by using an appropriate uncertainty model. In this paper, a basic model borrowed from robust control theory is used [4]. In particular, the additive uncertainty model of a frequency response is employed to model the uncertainty of the power spectral density of the noise, giving an explicit formula for the channel capacity. The obtained formula describes how the channel capacity decreases when the uncertainty of the power spectral density of the noise increases. Another important result stemming from the channel capacity is the water-filling formula, which shows the effect of the noise uncertainty on the optimal transmission power. Finally, it is shown that there exists a code that enables reliable transmission over the channel with uncertain noise if the code rate is less than the channel capacity, and that the channel capacity as defined in the paper is equal to the operational capacity.

³ Also with the Department of Electrical and Computer Engineering, University of Cyprus, Cyprus, and Adjunct Professor with the Department of Electrical and Computer Engineering, McGill University, Montreal, P.Q., Canada. This work was supported by the Natural Sciences and Engineering Research Council of Canada under an operating grant.

2. NOISE UNCERTAINTY MODEL

W(f )

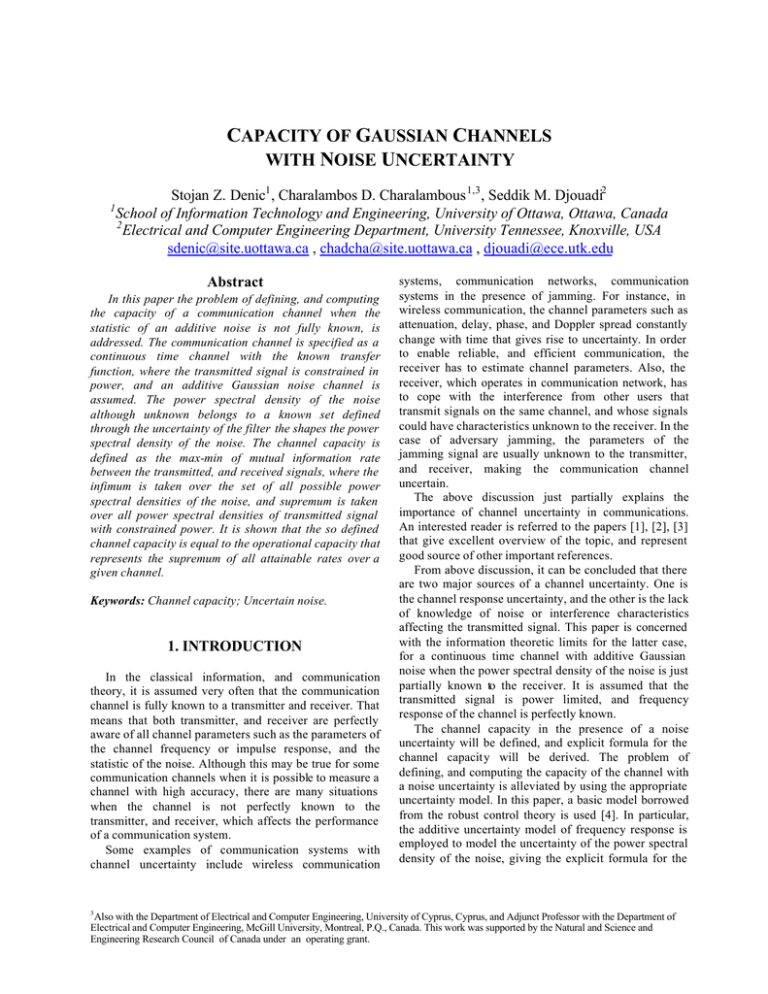

The model of communication system is depicted in

Fig. 1. The input signal x = {x (t );−∞ < t < +∞}, received

{y(t );−∞ < t < +∞} , and noise n

= {n (t );−∞ < t < +∞} are wide-sense stationary processes

with power spectral densities S x ( f ), S y ( f ) , and

S n ( f ) . All three power spectral densities are known.

y

=

The noise n is an additive Gaussian random process. The

frequency response of the channel H ( f ) is a fixed

known transfer function.

The uncertainty in the noise power spectral density is

modeled through the additive uncertainty model of the

filter W ( f ) that shapes the power spectral density of the

noise S n ( f ) . The overall power spectral density of the

noise is S n ( f )W ( f

) 2 . The additive uncertainty model

W ( f ) = Wnom( f ) + W1 ( f )∆( f ), where

is defined by

Wnom ( f ) represents the nominal transfer function that

can be chosen such that it reflects one’s limited

knowledge or belief regarding the power spectral density

of the noise. The second term represents a perturbation

where W1 ( f ) is a fixed known transfer function, and

∆( f ) is unknown transfer function with

∆( f ) ∞ ≤ 1 .

The norm . is called the infinity norm, and it is defined

∞

as H ( f

) ∞ := sup H ( f ) . The set of all transfer functions

f

defined by W1 ( f ) . Thus, the amplitude of uncertainty

varies with frequency and it is determined by the fixed

transfer function W1 ( f ) . The lager W1 ( f ) , the larger

+

Fig. 1 Communication system

signal

is the nominal transfer function Wnom ( f ) , and radius is

3. CHANNEL CAPACITY

y

H(f )

be proven that this space is a Banach space. All transfer

functions mentioned until now belong to this normed

linear space H ∞ . It should be noted that the uncertainty

in the frequency response of the filter W ( f ) can be seen

as

a

ball

in

a

frequency

domain

,

where

the

center

of

the

ball

W ( f ) − Wnom ( f ) ≤ W1 ( f )

the uncertainty. The transfer function W1 ( f ) can be

determined from the measured data. Based on this

uncertainty model the channel capacity will be defined,

and computed in the following section.

n

x

that have a finite . norm is denoted as H ∞ , and it can

∞

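Define the following two sets:

$$A_1 = \left\{ S_x(f);\ \int_{-\infty}^{\infty} S_x(f)\,df \le P \right\},$$

$$A_2 = \left\{ W \in H_\infty;\ W(f) = W_{nom}(f) + \Delta(f)W_1(f),\ W_{nom} \in H_\infty,\ \Delta \in H_\infty,\ W_1 \in H_\infty,\ \|\Delta(f)W_1(f)\|_\infty \le \gamma,\ \gamma > 0 \right\}.$$

Clearly, the parameter $\gamma$ of the set $A_2$ controls the radius of uncertainty: the larger the radius of uncertainty $\gamma$, the larger the uncertainty set $A_2$.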
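As a numerical illustration of the ball interpretation, the sketch below (Python, standard library only) samples perturbations $\Delta$ in the closed unit disk at a few frequencies and compares the largest $|W_{nom}(f) + W_1(f)\Delta|$ found with the triangle-inequality bound $|W_{nom}(f)| + |W_1(f)|$. The filters `w_nom` and `w_1` are hypothetical choices, not taken from the paper, and the normalization $\|\Delta\|_\infty \le 1$ of Section 2 is used, so the radius of the ball at each frequency is $|W_1(f)|$. The phase-aligned perturbation `aligned_delta` is the one that appears in part ii) of Theorem 1.

```python
import cmath
import math


def w_nom(f):
    """Hypothetical nominal noise-shaping filter: first-order low-pass, corner at 1 Hz."""
    return 1.0 / (1.0 + 1j * f)


def w_1(f):
    """Hypothetical uncertainty weight: constant magnitude 0.2 with a fixed phase of 0.5 rad."""
    return 0.2 * cmath.exp(0.5j)


def ball_bound(f):
    """Triangle-inequality bound on |W(f)| over the ball |W(f) - W_nom(f)| <= |W_1(f)|."""
    return abs(w_nom(f)) + abs(w_1(f))


def aligned_delta(f):
    """Unit-modulus Delta that rotates W_1(f)*Delta onto the phase of W_nom(f)."""
    return cmath.exp(1j * (cmath.phase(w_nom(f)) - cmath.phase(w_1(f))))


def grid_max(f, n_phase=360, n_radius=5):
    """Brute-force max of |W_nom(f) + W_1(f)*Delta| over a polar grid in the closed unit disk."""
    best = 0.0
    for r in range(1, n_radius + 1):
        for k in range(n_phase):
            delta = (r / n_radius) * cmath.exp(2j * math.pi * k / n_phase)
            best = max(best, abs(w_nom(f) + w_1(f) * delta))
    return best


for f in (0.0, 0.5, 2.0, 10.0):
    aligned = abs(w_nom(f) + w_1(f) * aligned_delta(f))
    print(f"f = {f:4.1f} Hz: bound = {ball_bound(f):.4f}, "
          f"aligned = {aligned:.4f}, grid max = {grid_max(f):.4f}")
```

In this pointwise picture the bound is attained by a unit-modulus perturbation, so the largest overall noise power spectral density in the set is $S_n(f)(|W_{nom}(f)| + |W_1(f)|)^2$.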

Definition 1. The capacity of an additive Gaussian continuous time channel with noise uncertainty is defined by

$$C_n = \sup_{S_x \in A_1} \inf_{W \in A_2} \frac{1}{2} \int \log\left(1 + \frac{S_x(f)|H(f)|^2}{S_n(f)|W(f)|^2}\right) df. \tag{1}$$

The interval of integration will become clear from the discussion below. Although the capacity in (1) is determined by the infimum over the set of noises $A_2$, which can be conservative, it provides the limit of reliable communication when the noise is unknown and belongs to an uncertainty set. The better the knowledge of the noise, the smaller the uncertainty set, which in turn implies a less conservative value of the channel capacity. The definition is a variant of the Shannon capacity of additive Gaussian continuous time channels subject to input power and frequency constraints [5].
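Theorem 1 below reduces (1) to classical water-filling [5] against the worst-case effective noise level $N(f) = S_n(f)(|W_{nom}(f)| + |W_1(f)|)^2/|H(f)|^2$. A minimal numerical sketch of that computation follows, assuming a finite frequency band discretized on a uniform grid (playing the role of the frequency constraint), natural logarithms (nats), and a bisection search for the water level $\nu^*$ in (3). The example filters and the white-noise choice $S_n(f) = 1$ W/Hz are hypothetical, and the helper names are illustrative only.

```python
import math


def water_fill(noise_eff, df, power, iters=200):
    """Find nu with sum(max(nu - N_i, 0)) * df == power, by bisection.

    noise_eff[i] is the effective noise level N(f_i) on a uniform grid with spacing df.
    """
    lo = min(noise_eff)                  # no power is used at this level
    hi = max(noise_eff) + power / df     # at least `power` is used at this level
    for _ in range(iters):
        nu = 0.5 * (lo + hi)
        used = sum(max(nu - n, 0.0) for n in noise_eff) * df
        if used > power:
            hi = nu
        else:
            lo = nu
    return 0.5 * (lo + hi)


def robust_capacity(noise_eff, df, power):
    """Riemann-sum version of (2): 0.5 * sum over {N < nu} of log(nu / N) * df, in nats."""
    nu = water_fill(noise_eff, df, power)
    cap = 0.5 * sum(math.log(nu / n) for n in noise_eff if n < nu) * df
    return cap, nu


def effective_noise(freqs, w1_mag):
    """Hypothetical example: S_n(f) = 1, H(f) = 1, W_nom(f) = 1/(1 + jf), |W_1(f)| = w1_mag."""
    s_n, h_mag = 1.0, 1.0
    return [s_n * (abs(1.0 / (1.0 + 1j * f)) + w1_mag) ** 2 / h_mag ** 2 for f in freqs]


df = 0.01
freqs = [-5.0 + df * (i + 0.5) for i in range(1000)]   # midpoints of the band [-5, 5] Hz
for w1_mag in (0.0, 0.1, 0.3):
    cap, nu = robust_capacity(effective_noise(freqs, w1_mag), df, power=2.0)
    print(f"|W_1| = {w1_mag:.1f}: nu* = {nu:.4f}, C_n = {cap:.4f} nats/s")
```

Increasing the hypothetical weight $|W_1|$ raises $N(f)$ at every frequency, so the printed values of $C_n$ decrease, and the optimal allocation $\max(\nu^* - N(f), 0)$ is the water-filling solution (7).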

Theorem 1. Consider the additive uncertainty description for $W(f)$, and assume that $S_n(f)\left(|W_{nom}(f)| - |W_1(f)|\right)^2 / |H(f)|^2$ is bounded and integrable, and that $W_{nom}(f) \neq W_1(f)$.

i) The robust information capacity of the additive Gaussian continuous time channel with additive uncertainty shown in Fig. 1, and defined by (1), is given parametrically by

$$C_n = \frac{1}{2} \int \log \frac{\nu^* |H(f)|^2}{S_n(f)\left(|W_{nom}(f)| + |W_1(f)|\right)^2}\, df, \tag{2}$$

where the Lagrange multiplier $\nu^*$ is found via

$$\int \left( \nu^* - \frac{S_n(f)\left(|W_{nom}(f)| + |W_1(f)|\right)^2}{|H(f)|^2} \right) df = P, \tag{3}$$

subject to the condition

$$\nu^* |H(f)|^2 - S_n(f)\left(|W_{nom}(f)| + |W_1(f)|\right)^2 > 0, \quad \nu^* > 0, \tag{4}$$

in which the integrations in (2) and (3) are over the frequency interval over which $\nu^* > S_n(f)\left(|W_{nom}(f)| + |W_1(f)|\right)^2 / |H(f)|^2$, and $\nu^*$ is the solution of equation (3).

ii) The infimum over the channel uncertainty in (1) is achieved at

$$\Delta^*(f) = \exp\left(-j \arg W_1(f) + j \arg W_{nom}(f)\right), \quad \|\Delta^*(f)\|_\infty = 1, \tag{5}$$

and the resulting mutual information rate after the minimization is given by

$$\inf_{\|\Delta\|_\infty \le 1} \frac{1}{2} \int \log\left(1 + \frac{S_x(f)|H(f)|^2}{S_n(f)\left|W_{nom}(f) + W_1(f)\Delta(f)\right|^2}\right) df = \frac{1}{2} \int \log\left(1 + \frac{S_x(f)|H(f)|^2}{S_n(f)\left(|W_{nom}(f)| + |W_1(f)|\right)^2}\right) df. \tag{6}$$

Moreover, the supremum of (6) over $A_1$ yields the water-filling equation

$$S_x^*(f) + \frac{S_n(f)\left(|W_{nom}(f)| + |W_1(f)|\right)^2}{|H(f)|^2} = \nu^*. \tag{7}$$

Proof. The proof is omitted due to space constraints.

The formula for the channel capacity (2) shows how the uncertainty in the overall power spectral density of the noise, $S_n(f)|W(f)|^2$, affects the capacity. To understand this point better, assume that the noise $n$ is white Gaussian noise with $S_n(f) = 1$ W/Hz over all frequencies, so that the overall power spectral density of the noise is $|W(f)|^2$. If $W_1(f) = 0$, the standard formula for channel capacity is obtained [5], which corresponds to the case when the power spectral density of the noise is perfectly known. If the noise is not known, the amplitude of uncertainty $W_1(f)$ is different from zero, and the channel capacity decreases. If it is assumed that both the transmitter and the receiver have partial knowledge of the channel, then the modified water-filling equation is given by (7), which describes how the uncertainty affects the optimal transmitted power. Formula (7) suggests how the transmitted power decreases with the uncertainty of the overall noise power spectral density.

4. CODING AND CONVERSE TO CODING THEOREMS

In this section, it is shown that, under certain conditions, the coding theorem and its converse hold for the set of communication channels with uncertain noise defined by $A_2$. This means that there exists a code whose rate $R$ is less than the channel capacity $C_n$ given by formula (2), for which the error probability is arbitrarily small over the set of noises $A_2$. This result is obtained in [6] by combining two approaches found in [5] and [7].

First, define the frequency response of the equivalent communication channel by

$$F(f) = \left( \frac{S_x(f)|H(f)|^2}{S_n(f)|W(f)|^2} \right)^{1/2},$$

and denote its inverse Fourier transform by $f(t)$. Further, define two sets $A_3$ and $B$ as follows:

$$A_3 = \{F(f);\ W(f) \in A_2\},$$

$$B = \{f(t);\ F(f) \in A_3,\ f(t) \text{ satisfies i), ii), iii)}\},$$

where

i) $f(t)$ has finite duration $\delta$,

ii) $f(t)$ is square integrable ($f(t) \in L_2$),

iii) $\int_{-\infty}^{-A} |F(f)|^2\,df + \int_{A}^{+\infty} |F(f)|^2\,df \to 0$ when $A \to +\infty$.

A

The set of all $f(t)$ that satisfy these conditions is a conditionally compact set in $L_2$ (see [7]), and this enables the proof of the coding theorem and its converse. Note that condition i) can be relaxed (see Lemma 4 in [8]). Next, the definitions of a code for the set of channels $B$, of an attainable rate $R$, and of the operational capacity $C$ are given.

The channel code $(M, \varepsilon, T)$ for the set of communication channels $B$ is defined as a set of $M$ distinct time functions $\{x_1(t), \ldots, x_M(t)\}$ on the interval $-T/2 \le t \le T/2$, together with a set of $M$ disjoint sets $D_1, \ldots, D_M$ of the space of output signals $y$, such that

$$\frac{1}{T} \int_{-T/2}^{T/2} x_k^2(t)\,dt \le P$$

for each $k$, and such that the error probability for each codeword is

$$\Pr\{ y(t) \in D_k^c \mid x_k(t) \text{ transmitted} \} \le \varepsilon, \quad k = 1, \ldots, M, \ \text{for all } f(t) \in B.$$

A positive number $R$ is said to be an attainable rate for the set of channels $B$ if there is a sequence of codes $(e^{T_n R}, \varepsilon_n, T_n)$ such that $\lim_{n \to \infty} T_n = \infty$ and $\lim_{n \to \infty} \varepsilon_n = 0$ uniformly over the set $B$, where $T_n$ is the codeword time duration. The operational channel capacity $C$ is defined as the supremum of attainable rates $R$.

Theorem 2. The operational capacity $C$ for the set $B$ of communication channels with noise uncertainty is given by formula (2), and is equal to $C_n$.

Proof. The proof is omitted, and is given in [6].

5. CONCLUSION

This paper concerns the capacity of continuous time additive Gaussian channels when the power spectral density of the Gaussian noise is not completely known. The capacity is defined as the max-min of the mutual information rate between the transmitted and received signals, where the maximum is taken over all power spectral densities of the transmitted signal with constrained power, and the minimum is taken over all power spectral densities of the noise that belong to the uncertainty set. The uncertainty set is defined using the additive uncertainty model of the filter that shapes the power spectral density of the noise. This model simplifies the computation of the channel capacity and provides an intuitive result that describes how the channel capacity decreases when the size of the uncertainty set increases. Also, the modified water-filling equation is derived, showing how the optimal transmitted power changes with the noise uncertainty. Finally, it is shown that the channel capacity as introduced in the paper is equal to the operational capacity, i.e., the channel coding theorem and its converse hold.

References

[1] Medard, M., “Channel uncertainty in communications,” IEEE Information Theory Society Newsletter, vol. 53, no. 2, pp. 1, 10-12, June 2003.

[2] Biglieri, E., Proakis, J., Shamai, S., “Fading channels: information-theoretic and communications aspects,” IEEE Transactions on Information Theory, vol. 44, no. 6, pp. 2619-2692, October 1998.

[3] Lapidoth, A., Narayan, P., “Reliable communication under channel uncertainty,” IEEE Transactions on Information Theory, vol. 44, no. 6, pp. 2148-2177, October 1998.

[4] Doyle, J.C., Francis, B.A., Tannenbaum, A.R., Feedback Control Theory, New York: Macmillan Publishing Company, 1992.

[5] Gallager, R.G., Information Theory and Reliable Communication, New York: Wiley, 1968.

[6] Denic, S.Z., Charalambous, C.D., Djouadi, S.M., “Robust capacity for additive colored Gaussian uncertain channels,” preprint.

[7] Root, W.L., Varaiya, P.P., “Capacity of classes of Gaussian channels,” SIAM J. Appl. Math., vol. 16, no. 6, pp. 1350-1353, November 1968.

[8] Forys, L.J., Varaiya, P.P., “The ε-capacity of classes of unknown channels,” Information and Control, vol. 44, pp. 376-406, 1969.