Applied Statistics I Liang Zhang July 10, 2008

advertisement

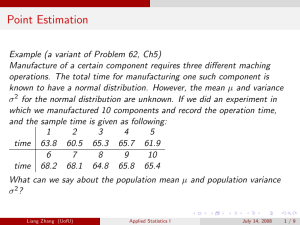

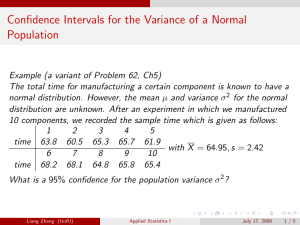

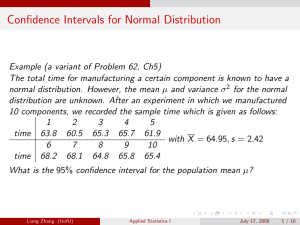

Applied Statistics I Liang Zhang Department of Mathematics, University of Utah July 10, 2008 Liang Zhang (UofU) Applied Statistics I July 10, 2008 1 / 12 Methods of Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 2 / 12 Methods of Point Estimation Definition Let X1 , X2 , . . . , Xn be a random sample from a distribution with pmf or pdf f (x). For k = 1, 2, 3, . . . , the kth population moment, or kth moment of the distribution f (x), is E (X k ). The kth sample moment P is n1 ni=1 Xik . Liang Zhang (UofU) Applied Statistics I July 10, 2008 2 / 12 Methods of Point Estimation Definition Let X1 , X2 , . . . , Xn be a random sample from a distribution with pmf or pdf f (x). For k = 1, 2, 3, . . . , the kth population moment, or kth moment of the distribution f (x), is E (X k ). The kth sample moment P is n1 ni=1 Xik . Definition Let X1 , X2 , . . . , Xn be a random sample from a distribution with pmf or pdf f (x; θ1 , . . . , θm ), where θ1 , . . . , θm are parameters whose values are unknown. Then the moment estimators θ̂1 , . . . , θ̂m are obtained by equating the first m sample moments to the corresponding first m population moments and solving for θ1 , . . . , θm . Liang Zhang (UofU) Applied Statistics I July 10, 2008 2 / 12 Methods of Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 3 / 12 Methods of Point Estimation Suppose that a coin is biased, and it is known that the average proportion of heads is one of the three values p = .2, .3, or .8. An experiment consists of tossing the coin twice and observing the number of heads. This could be modeled as a random sample X1 , X2 of size n = 2 from a Bernoulli distribution, Xi ∼ BER(p), where the parameter is one of .2, .3, .8. Liang Zhang (UofU) Applied Statistics I July 10, 2008 3 / 12 Methods of Point Estimation Suppose that a coin is biased, and it is known that the average proportion of heads is one of the three values p = .2, .3, or .8. An experiment consists of tossing the coin twice and observing the number of heads. This could be modeled as a random sample X1 , X2 of size n = 2 from a Bernoulli distribution, Xi ∼ BER(p), where the parameter is one of .2, .3, .8. Consider the joint pdf of the random sample f (x1 , x2 ; p) = p x1 +x2 (1 − p)2−x1 −x2 for xi p .2 .3 .8 = 0 or (0,0) .64 .49 .04 1. The (0,1) .16 .21 .16 Liang Zhang (UofU) values of f (x1 , x2 ; p) are provided as follows (1,0) (1,1) .16 .04 .21 .09 .16 .64 Applied Statistics I July 10, 2008 3 / 12 Methods of Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 4 / 12 Methods of Point Estimation The values of f (x1 , x2 ; p) are provided as follows p (0,0) (0,1) (1,0) (1,1) .2 .64 .16 .16 .04 .3 .49 .21 .21 .09 .16 .16 .64 .8 .04 Liang Zhang (UofU) Applied Statistics I July 10, 2008 4 / 12 Methods of Point Estimation The values of f (x1 , x2 ; p) are provided as follows p (0,0) (0,1) (1,0) (1,1) .2 .64 .16 .16 .04 .3 .49 .21 .21 .09 .16 .16 .64 .8 .04 The estimate that maximizes the “likelihood” for an observed pair (x1 , x2 ) is .2 if (x1 , x2 ) = (0, 0) p̂ = .3 if (x1 , x2 ) = (0, 1) or (1, 0) .8 if (x1 , x2 ) = (1, 1) Liang Zhang (UofU) Applied Statistics I July 10, 2008 4 / 12 Methods of Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 5 / 12 Methods of Point Estimation Definition Let X1 , X2 , . . . , Xn have joint pmf or pdf f (x1 , x2 , . . . , xn ; θ) where the parameter θ is unknown. When x1 , . . . , xn are the observed sample values and the above function f is regarded as a function of θ, it is called the likelihood function and often is denoted by L(θ). The maximum likelihood estimate (mle) θ̂ is the value of θ that maximize the likelihood function, so that f (x1 , x2 , . . . , xn ; θ̂) ≥ f (x1 , x2 , . . . , xn ; θ) for all θ When the Xi s are substituted in place of the xi s, the maximu likelihood estimator result. Liang Zhang (UofU) Applied Statistics I July 10, 2008 5 / 12 Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 6 / 12 Point Estimation Problem: when there are more then one point estimator for parameter θ, which one of them should we use? Liang Zhang (UofU) Applied Statistics I July 10, 2008 6 / 12 Point Estimation Problem: when there are more then one point estimator for parameter θ, which one of them should we use? There are a few criteria for us to select the best point estimator: Liang Zhang (UofU) Applied Statistics I July 10, 2008 6 / 12 Point Estimation Problem: when there are more then one point estimator for parameter θ, which one of them should we use? There are a few criteria for us to select the best point estimator: unbiasedness, Liang Zhang (UofU) Applied Statistics I July 10, 2008 6 / 12 Point Estimation Problem: when there are more then one point estimator for parameter θ, which one of them should we use? There are a few criteria for us to select the best point estimator: unbiasedness, minimum variance, Liang Zhang (UofU) Applied Statistics I July 10, 2008 6 / 12 Point Estimation Problem: when there are more then one point estimator for parameter θ, which one of them should we use? There are a few criteria for us to select the best point estimator: unbiasedness, minimum variance, and mean square error. Liang Zhang (UofU) Applied Statistics I July 10, 2008 6 / 12 Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 7 / 12 Point Estimation Definition A point estimator θ̂ is said to be an unbiased estimator of θ if E (θ̂) = θ for every possible value of θ. If θ̂ is not unbiased, the difference E (θ̂) − θ is called the bias of θ̂. Liang Zhang (UofU) Applied Statistics I July 10, 2008 7 / 12 Point Estimation Definition A point estimator θ̂ is said to be an unbiased estimator of θ if E (θ̂) = θ for every possible value of θ. If θ̂ is not unbiased, the difference E (θ̂) − θ is called the bias of θ̂. Principle of Unbiased Estimation When choosing among several different estimators of θ, select one that is unbiased. Liang Zhang (UofU) Applied Statistics I July 10, 2008 7 / 12 Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 8 / 12 Point Estimation Proposition Let X1 , X2 , . . . , Xn be a random sample from a distribution with mean µ and variance σ 2 . Then the estimators Pn Pn (Xi − X )2 2 2 i=1 Xi µ̂ = X = and σ̂ = S = i=1 n n−1 are unbiased estimator of µ and σ 2 , respectively. e and If in addition the distribution is continuous and symmetric, then X any trimmed mean are also unbiased estimators of µ. Liang Zhang (UofU) Applied Statistics I July 10, 2008 8 / 12 Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 9 / 12 Point Estimation Principle of Minimum Variance Unbiased Estimation Among all estimators of θ that are unbiased, choose the one that has minimum variance. The resulting θ̂ is called the minimum variance unbiased estimator ( MVUE) of θ. Liang Zhang (UofU) Applied Statistics I July 10, 2008 9 / 12 Point Estimation Principle of Minimum Variance Unbiased Estimation Among all estimators of θ that are unbiased, choose the one that has minimum variance. The resulting θ̂ is called the minimum variance unbiased estimator ( MVUE) of θ. Theorem Let X1 , X2 , . . . , Xn be a random sample from a normal distribution with mean µ and variance σ 2 . Then the estimator µ̂ = X is the MVUE for µ. Liang Zhang (UofU) Applied Statistics I July 10, 2008 9 / 12 Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 10 / 12 Point Estimation Definition Let θ̂ be a point estimator of parameter θ. Then the quantity E [(θ̂ − θ)2 ] is called the mean square error (MSE) of θ̂. Liang Zhang (UofU) Applied Statistics I July 10, 2008 10 / 12 Point Estimation Definition Let θ̂ be a point estimator of parameter θ. Then the quantity E [(θ̂ − θ)2 ] is called the mean square error (MSE) of θ̂. Proposition MSE = E [(θ̂ − θ)2 ] = V (θ̂) + [E (θ̂) − θ]2 Liang Zhang (UofU) Applied Statistics I July 10, 2008 10 / 12 Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 11 / 12 Point Estimation Definition The standard error of an estimator θ̂ is its standard deviation q σθ̂ = V (θ̂). If the standard error itself involves unknown parameters whose values can be estimated, substitution of these estimates into σθ̂ yields the estimated standard error (estimated standard deviation) of the estimator. The estimated standard error can be denoted either by σ̂θ̂ or by sθ̂ . Liang Zhang (UofU) Applied Statistics I July 10, 2008 11 / 12 Methods of Point Estimation Liang Zhang (UofU) Applied Statistics I July 10, 2008 12 / 12 Methods of Point Estimation The Invariance Principle Let θ̂ be the mle of the parameter θ. Then the mle of any function h(θ) of this parameter is the function h(θ̂). Liang Zhang (UofU) Applied Statistics I July 10, 2008 12 / 12 Methods of Point Estimation The Invariance Principle Let θ̂ be the mle of the parameter θ. Then the mle of any function h(θ) of this parameter is the function h(θ̂). Proposition Under very general conditions on the joint distribution of the sample, when the sample size n is large, the maximum likelihood estimator of any parameter θ is approximately unbiased [E (θ̂) ≈ θ] and has variance that is nearly as small as can be achieved by any estimator. Stated another way, the mle θ̂ is approximately the MVUE of θ. Liang Zhang (UofU) Applied Statistics I July 10, 2008 12 / 12