Point Estimation

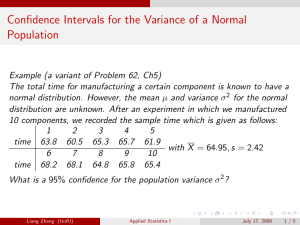

Example (a variant of Problem 62, Ch5)

Manufacture of a certain component requires three different machining operations. The total time for manufacturing one such component is known to have a normal distribution. However, the mean $\mu$ and variance $\sigma^2$ of the normal distribution are unknown. Suppose we run an experiment in which we manufacture 10 components and record the operation times, and the sample is as follows:

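| component | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| time | 63.8 | 60.5 | 65.3 | 65.7 | 61.9 |

| component | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|
| time | 68.2 | 68.1 | 64.8 | 65.8 | 65.4 |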

What can we say about the population mean $\mu$ and population variance $\sigma^2$?

Liang Zhang (UofU)

July 14, 2008 1 / 9

Example (a variant of Problem 64, Ch5)

Suppose the waiting time for a certain bus in the morning is uniformly distributed on $[0, \theta]$, where $\theta$ is unknown. Suppose we record 10 waiting times as follows:

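| observation | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| time | 7.6 | 1.8 | 4.8 | 3.9 | 7.1 |

| observation | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|
| time | 6.1 | 3.6 | 0.1 | 6.5 | 3.5 |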

What can we say about the parameter θ?

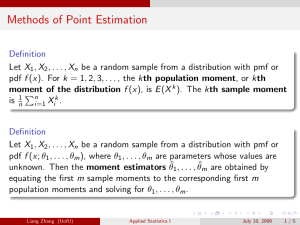

Definition

A point estimate of a parameter θ is a single number that can be regarded as a sensible value for θ. A point estimate is obtained by selecting a suitable statistic and computing its value from the given sample data. The selected statistic is called the point estimator of θ.

e.g. $\bar{X} = \sum_{i=1}^{10} X_i / 10$ is a point estimator for µ for the normal distribution example.

The largest sample value $X_{10,10}$ is a point estimator for θ for the uniform distribution example.
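
As a small illustration, the two point estimators named above can be evaluated on the recorded data from the two examples. This is only a sketch; the variable names (`operation_times`, `waiting_times`, `mu_hat`, `theta_hat`) are chosen here for illustration and are not part of the original slides.

```python
from statistics import mean

# Operation times from the manufacturing example (modelled as normal).
operation_times = [63.8, 60.5, 65.3, 65.7, 61.9, 68.2, 68.1, 64.8, 65.8, 65.4]
# Waiting times from the bus example (modelled as uniform on [0, theta]).
waiting_times = [7.6, 1.8, 4.8, 3.9, 7.1, 6.1, 3.6, 0.1, 6.5, 3.5]

# The sample mean is used as the point estimate of mu.
mu_hat = mean(operation_times)
# The largest observation is used as the point estimate of theta.
theta_hat = max(waiting_times)

print(f"point estimate of mu:    {mu_hat:.2f}")
print(f"point estimate of theta: {theta_hat:.1f}")
```

The estimator is the rule (average the sample, take the maximum); the estimate is the number that rule produces on this particular sample.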

Problem: when there is more than one point estimator for a parameter θ, which one should we use?

There are a few criteria for selecting the best point estimator: unbiasedness, minimum variance, and mean square error.

Definition

A point estimator $\hat{\theta}$ is said to be an unbiased estimator of θ if $E(\hat{\theta}) = \theta$ for every possible value of θ. If $\hat{\theta}$ is not unbiased, the difference $E(\hat{\theta}) - \theta$ is called the bias of $\hat{\theta}$.

Principle of Unbiased Estimation

When choosing among several different estimators of θ , select one that is unbiased.
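
Bias can be made concrete with a simulation for the uniform waiting-time example. For a sample of size n from the uniform distribution on [0, θ], the largest observation has expected value nθ/(n+1), so it underestimates θ on average, while (n+1)/n times the largest observation is unbiased. The sketch below checks this numerically; the true value `theta = 8.0`, the seed, the number of repetitions, and all function names are assumptions made for illustration only.

```python
import random

def sample_max(sample):
    """Largest observation: a biased estimator of theta."""
    return max(sample)

def corrected_max(sample):
    """(n + 1) / n times the largest observation: an unbiased estimator of theta."""
    n = len(sample)
    return (n + 1) / n * max(sample)

def average_estimate(estimator, theta, n, reps, rng):
    """Approximate E(estimator) by averaging over many simulated samples."""
    total = 0.0
    for _ in range(reps):
        sample = [rng.uniform(0.0, theta) for _ in range(n)]
        total += estimator(sample)
    return total / reps

rng = random.Random(2008)          # fixed seed so the run is repeatable
theta, n, reps = 8.0, 10, 20000    # hypothetical true theta; n matches the example

mean_max = average_estimate(sample_max, theta, n, reps, rng)
mean_corrected = average_estimate(corrected_max, theta, n, reps, rng)

print(f"approximate bias of the sample maximum:    {mean_max - theta:+.3f}")
print(f"approximate bias of the corrected maximum: {mean_corrected - theta:+.3f}")
```

Under the principle of unbiased estimation, the corrected maximum would be preferred over the raw maximum here.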

Proposition

Let $X_1, X_2, \ldots, X_n$ be a random sample from a distribution with mean $\mu$ and variance $\sigma^2$. Then the estimators

$$\hat{\mu} = \bar{X} = \frac{\sum_{i=1}^{n} X_i}{n} \quad \text{and} \quad \hat{\sigma}^2 = S^2 = \frac{\sum_{i=1}^{n} (X_i - \bar{X})^2}{n - 1}$$

are unbiased estimators of $\mu$ and $\sigma^2$, respectively.

If in addition the distribution is continuous and symmetric, then the sample median $\tilde{X}$ and any trimmed mean are also unbiased estimators of µ.
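
For the manufacturing data, the two estimators in the proposition can be computed directly. The sketch below uses Python's standard `statistics` module, where `variance` divides by n − 1 (the unbiased version) and `pvariance` divides by n; the variable names are illustrative.

```python
from statistics import mean, pvariance, variance

# Operation times from the manufacturing example.
operation_times = [63.8, 60.5, 65.3, 65.7, 61.9, 68.2, 68.1, 64.8, 65.8, 65.4]

x_bar = mean(operation_times)               # point estimate of mu
s_squared = variance(operation_times)       # divides by n - 1: unbiased for sigma^2
divide_by_n = pvariance(operation_times)    # divides by n: smaller, biased downward

print(f"x_bar        = {x_bar:.3f}")
print(f"S^2 (n - 1)  = {s_squared:.3f}")
print(f"sum / n      = {divide_by_n:.3f}")
```

Dividing by n instead of n − 1 always gives the smaller value, which is why that version underestimates σ² on average.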

Principle of Minimum Variance Unbiased Estimation

Among all estimators of θ that are unbiased, choose the one that has minimum variance. The resulting $\hat{\theta}$ is called the minimum variance unbiased estimator (MVUE) of θ.

Theorem

Let $X_1, X_2, \ldots, X_n$ be a random sample from a normal distribution with mean $\mu$ and variance $\sigma^2$. Then the estimator $\hat{\mu} = \bar{X}$ is the MVUE for $\mu$.
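
A simulation cannot prove the theorem, but it can illustrate it against one competitor. For normal data both the sample mean and the sample median are unbiased for µ, and the sketch below compares their variances over many simulated samples. The parameter values (`mu = 65.0`, `sigma = 2.5`), the seed, and the number of repetitions are hypothetical choices for illustration.

```python
import random
from statistics import mean, median, pvariance

rng = random.Random(7)                        # fixed seed so the run is repeatable
mu, sigma, n, reps = 65.0, 2.5, 10, 5000      # hypothetical normal parameters

sample_means = []
sample_medians = []
for _ in range(reps):
    sample = [rng.gauss(mu, sigma) for _ in range(n)]
    sample_means.append(mean(sample))
    sample_medians.append(median(sample))

# Spread of each estimator across the simulated samples.
var_mean = pvariance(sample_means)
var_median = pvariance(sample_medians)

print(f"variance of the sample mean:   {var_mean:.3f}  (theory: {sigma**2 / n:.3f})")
print(f"variance of the sample median: {var_median:.3f}")
```

The sample mean should show the smaller variance, consistent with it being the MVUE for µ in the normal case.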

Definition

Let $\hat{\theta}$ be a point estimator of parameter θ. Then the quantity $E[(\hat{\theta} - \theta)^2]$ is called the mean square error (MSE) of $\hat{\theta}$.

Proposition

$$\mathrm{MSE} = E[(\hat{\theta} - \theta)^2] = V(\hat{\theta}) + [E(\hat{\theta}) - \theta]^2$$
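
The decomposition of MSE into variance plus squared bias can be checked numerically. The sketch below simulates the sample maximum as an estimator of θ for uniform data on [0, θ] and compares the simulated MSE with the sum of the simulated variance and squared bias. The value `theta = 8.0`, the seed, and the variable names are assumptions for illustration.

```python
import random
from statistics import mean, pvariance

rng = random.Random(42)            # fixed seed so the run is repeatable
theta, n, reps = 8.0, 10, 5000     # hypothetical true theta and simulation size

# One estimate (the sample maximum) per simulated sample of size n.
estimates = [
    max(rng.uniform(0.0, theta) for _ in range(n))
    for _ in range(reps)
]

mse = mean([(t - theta) ** 2 for t in estimates])    # simulated E[(theta_hat - theta)^2]
variance_part = pvariance(estimates)                 # simulated V(theta_hat)
bias_squared = (mean(estimates) - theta) ** 2        # simulated [E(theta_hat) - theta]^2

print(f"MSE                  = {mse:.4f}")
print(f"variance + bias^2    = {variance_part + bias_squared:.4f}")
```

The two printed values agree up to floating-point rounding, because the identity also holds exactly for the empirical distribution of the simulated estimates. For an unbiased estimator the bias term vanishes and the MSE equals the variance.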

Definition

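The standard error of an estimator $\hat{\theta}$ is its standard deviation $\sigma_{\hat{\theta}} = \sqrt{V(\hat{\theta})}$. If the standard error itself involves unknown parameters whose values can be estimated, substitution of these estimates into $\sigma_{\hat{\theta}}$ yields the estimated standard error (estimated standard deviation) of the estimator, denoted by $\hat{\sigma}_{\hat{\theta}}$ or by $s_{\hat{\theta}}$.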
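
As a worked case, the standard error of the sample mean is σ/√n, which involves the unknown σ. Substituting the sample standard deviation s gives the estimated standard error s/√n. The sketch below applies this to the manufacturing data; the variable names are illustrative.

```python
from math import sqrt
from statistics import stdev

# Operation times from the manufacturing example.
operation_times = [63.8, 60.5, 65.3, 65.7, 61.9, 68.2, 68.1, 64.8, 65.8, 65.4]

n = len(operation_times)
s = stdev(operation_times)        # sample standard deviation (divides by n - 1)
estimated_se = s / sqrt(n)        # estimated standard error of the sample mean

print(f"s = {s:.3f}")
print(f"estimated standard error of the sample mean = {estimated_se:.3f}")
```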
