Abstract

advertisement

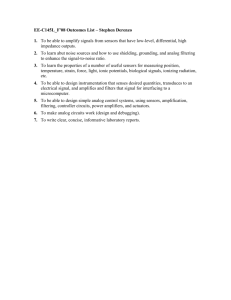

Low-Power Analog Deep Learning Architecture for Image Processing Jeremy Holleman, Itamar Arel Department of Electrical Engineering and Computer Science University of Tennessee, Knoxville Knoxville, TN, USA 37996 Abstract We present an analog implementation of the DeSTIN deep learning architecture. It utilizes floating-gate analog memories to store parameters and current-mode computation and features online unsupervised trainability. The circuit occupies 0.36 mm2 in a 0.13 μm CMOS process. At 8.3 kHz, it consumes 11.4 μW from a 3 V supply, achieving 1×1012 ops/s/W. Measurement demonstrates accuracy comparable to software. Keywords: Analog signal processing; deep machine learning; neuromorphic engineering Introduction Automated processing of large data sets has a wide range of applications [1], but the high dimensionality necessitates unsupervised automated feature extraction. Deep machine learning (DML) architectures have recently emerged as a promising bio-inspired framework, which mimics the hierarchical representation of information in the neocortex to achieve robust automated feature extraction [2]. However, their computational requirements result in prohibitive power consumption when implemented on conventional digital processors or GPUs. Custom analog circuitry provides a means to dramatically reduce the energy costs of the moderate-resolution computation needed for learning applications [3-8]. In this paper we describe an analog implementation of a deep machine learning system first presented in [9]. It features unsupervised online trainability driven by the input data only. The proposed Analog Deep Learning Engine (ADE) utilizes floating-gate memory to provide non-volatile storage, facilitating operation with harvested energy. The memory has analog current output, interfacing naturally with the other components in the system, and is compatible with standard digital CMOS technology. Architecture and Algorithm The analog deep machine learning engine (ADE) implements Deep Spatiotemporal Inference Network (DesTIN) [10], a state-of-art compositional DML framework, illustrated in Figure 1. Seven identical nodes form a 4-2-1 hierarchy. Each node captures the regularities in its inputs through an unsupervised learning process and constructs belief states, which it passes up to the layer above. The bottom layer acts on raw data (e.g. pixels of an image) and the information becomes increasingly sparse and abstract as it moves up through the hierarchy. The beliefs formed at the Figure 1. Analog deep machine learning engine architecture and possible application scenarios. top layer are then used as rich features with reduced dimensionality for post-processing. The node learns through an online k-means clustering algorithm [11], which extracts the salient features of the inputs by recognizing patterns of similarity in the input space. Each recognized cluster is represented with a centroid, characterized by estimated mean and variance for each dimension. The node, shown in Figure 2, incorporates an 8×4 array of reconfigurable analog computation cells (RAC), grouped into 4 centroids, each with 8dimensional input. A training cycle begins with the classification phase, in which an input vector is assigned to the nearest centroid. The RACs calculate the 1-D Euclidean distance between the centroid mean and the input element o i , which are then wire-summed in current form to yield the total Euclidean distances between the observation and each centroid. The nearest centroid is then selected and its mean and variance estimates are updated such that the FGMs calculate an exponential moving average estimate of the cluster mean and variance. The RAC then incorporates the centroids’ variances to compute the Mahalanobis distance, which is used to probabilistically assign the observation to each centroid. To take full advantage of the algorithm’s robustness and avoid performance degradation due to analog error sources, extensive systemlevel simulations were performed [12]. The results Output Belief State B Distance Processing Unit (DPU) 4 Inverse Normalization (IN) Winner-Take-All (WTA) DEUC/DMAH Sel AAE FGM μ S/H Starvation Trace (ST) AAE ... FGM σ2 Memory Writing Logic Dim1 Centroid1 8 2 FGM μ AAE ... FGM σ2 Memory Writing Logic Dim1 FGM μ 8 Dim1 Centroid2 Input o Observation ... FGM σ2 Memory Writing Logic 8 2 ( ) FGM μ Memory Writing Logic Dim1 16 Training Control μ Ctrl Training Control σ2 ... FGM σ2 2 Centroid3 8 Error AAE 16 8 2 ... 8 Dim1 Centroid4 Reconfigurable Analog Computation Cell (RAC) 8×4 2 Training Control (TC)×8 Figure 2. The node architecture. Each node includes four 8-D centroids as well as control and processing circuitry. Distance Processing Unit × ¼ X IN Z X2 Y M3 IOUT = λ/IIN ΣIOUT = INORM INORM VC M2 X o ˆ M1 VB DEUC/ DMAH IOUT S/H IIN B To Higher Layer From RAC M6 Figure 3. Schematic of analog arithmetic element. informed the noise and matching specifications for the computational circuits. Circuit Implementation The Reconfigurable Analog Computation cell (RAC) performs three different operations using subthreshold current-mode computation and reconfigurable current routing. The input current o and the centroid mean ̂ stored in the FGM_μ are added with opposite polarity and the difference current ̂ is rectified by the absolute value circuit Abs. The unidirectional output current is then fed into the X2/Y operator circuit, where the Y component can be either the centroid variance memory output , or a constant C to compute DMAH or DEUC, respectively. The absolute error | ̂ | is used to generate the update pulse for the mean memory, while the is computed to determine the quantity | ̂| update for the variance memory. The schematic of the analog arithmetic element (AAE) is shown in Figure 3. Amplifier A and the class-B structure M1/M2 provide a virtual ground at the input, allowing high-speed resolution of small current differences. The X2/Y operator circuit employs the translinear principle [13] to implement efficient currentmode arithmetic. Parameters are stored in an analog floating-gate memory (FGM)[14]. M7 M5 Sel IB/2 ST M4 WTA IB Figure 4. Schematic of one channel of the distance processing unit. The translinear loop formed by M1 and M2 yields an inverse relationship between their two drain currents, while the current source INorm constrains all output currents IOut to be normalized to a constant sum. The Distance Processing Unit (DPU) performs inverse normalization and a winner-take-all operation [16] on the combined distance outputs from the four centroids. The simplified schematic of one channel is shown in Figure 4. The DPU also implements the starvation trace [15], which allows poorly initialized centroids to be slowly drawn towards populated areas of the data space. The training control circuit converts the memory error current to a pulse width to control the memory adaptation. For each dimension, two TC cells are implemented, one for mean and one for variance, shared across centroids. Table 1. Performance Summary Techonology Power Supply Active Area Memory Memory SNR Training Algorithm Input Referred Noise System SNR I/O Type 1P8M 0.13μm CMOS 3V 0.9mm×0.4mm Non-Volatile Floating Gate 46dB Unsupervised Online Clustering 56.23pArms 45dB Analog Current Training Mode 4.5kHz Operating Frequency Recognition Mode 8.3kHz Training Mode 27μW Power Consumption Recognition Mode 11.4μW Training Mode 480GOPS/W Energy Efficiency Recognition Mode 1.04TOPS/W Figure 5. Clustering test results with bad initial condition without (left) and with the starvation trace enabled. ADE Neural Network Classifier Input Pattern Classification Result 4-D 32-D Rich Features Raw Data Belief States 10 5 0 0 20 40 Clustering Test To test the clustering performance of the node, an input dataset of 40,000 8-D vectors was drawn from 4 underlying Gaussian distributions with different mean and variance. During the test, the centroid means were read out every 0.5 s; the locations are overlaid on the data scatter in Figure 5. For easier visual interpretation, 2-D results are shown. The performance of the starvation trace is verified by presenting the node with an ill-posed clustering problem where one centroid is initialized far from the input data, and is therefore never updated without the ST enabled (Figure 5, left). With the starvation trace enabled, the starved centroid is slowly pulled toward the area populated by the data, achieving a correct clustering result, shown in Figure 5 (right). Feature Extraction Test We demonstrate the full functionality of the chip by performing feature extraction for pattern recognition with the setup shown in Figure 6(a). The input patterns are 16×16 bitmaps corrupted by random pixel errors. An 8×4 moving window selects the ADE’s 32 inputs. The ADE is first trained on unlabeled patterns. After training, adaptation can be disabled and the circuit operates in recognition mode. The 4 belief states Sum of Beliefs 1 0.5 Beliefs 0 0 80 Training Sample (x1k) 50 Input Pattern 10% Corruption 100 Sample (c) (b) 100% Noise Performance To measure input-referred noise, we construct a histogram of classifications near a decision boundary with adaptation disabled. Assuming additive Gaussian noise, the relative frequency of one of the two classification results approximates the cumulative density function (cdf) of a normal distribution with standard deviation equal to the RMS current noise. The measured input-referred current noise is 56.23 pArms, which with an input full scale range of 10 nA corresponds to an SNR of 45 dB, or 7.5 bit resolution. 60 94% Classification Accuracy The ADE occupies an active area of 0.36 mm2 in a 0.13 μm CMOS process. With a 3 V power supply, it consumes 27 μW in training mode, and 11.4 μW in recognition mode. (a) Centroid Means Measurement Results 1 0.8 0.6 0.4 0.2 0 0 Classification Input Pattern Result 20% Corruption Measured Mean 95% CI Baseline 10 20 30 40 50 Percentage Corruption (d) Figure 6. (a) Feature extraction test setup. (b) The convergence of centroids during training. (c) Rich features output from the top layer, showing the effectiveness of normalization. (d) Measured classification accuracy using the features extracted by the chip. The plot on the right shows the mean accuracy and 95% confidence interval (2 ) from the three chips tested, compared to the software baseline. from the top layer are used as rich features, achieving a dimension reduction from 32 to 4. A software neural network then classifies the reduced-dimension patterns. Three chips were tested and average recognition accuracies of 100% with pixel corruption level lower than 10% and 94% with 20% corruption are obtained, which is comparable to the floating-point software baseline, as shown in Figure 6(d), demonstrating robustness to the non-idealities of analog computation. Efficiency Comparison The measured performance of the ADE is summarized in Table 1. It achieves an energy efficiency of 480 GOPS/W in training mode and 1.04 TOPS/W in recognition mode. Table 2 shows that, compared to other mixed-signal computational circuits, this work achieves very high energy efficiency in both modes. Table 2. Performance Comparison Process Purpose This Work 0.13 μm DML Extraction Non-volatile Memory Power Peak Energy Efficiency Floating-Gate 11.4 μW 1.04 TOPS/W Feature JSSC ’13 [5] 0.13 μm Neural-Fuzzy Processor ISSCC ‘13[6] 0.13 μm Object Recognition JSSC ’10 [7] 0.13 μm Object Recognition N/A 57 mW 655 GOPS/W N/A 260 mW 646 GOPS/W N/A 496 mW 290 GOPS/W Additionally, a digital equivalent of the ADE was implemented in the same process using logic synthesized from standard cells with 8-bit resolution and 12-bit memory width. According to post-layout power estimation, this digital equivalent running at 2 MHz in training mode consumes 3.46 W, yielding an energy efficiency of 1.66 GOPS/W, 288 times lower than this analog implementation. Conclusions In this paper, we describe an analog deep machinelearning system. It overcomes the limitations of conventional digital implementations through the efficiency of analog signal processing while exploiting algorithmic robustness to mitigate the effects of the nonidealities of analog computation. It demonstrates efficiency more than two orders of magnitude greater than custom digital VLSI with accuracy comparable to a floating-point software implementation. The low power consumption and non-volatile memory make it attractive for autonomous sensory applications and as a building block for large-scale learning systems. Acknowledgements This work was partially supported by the NSF through grant #CCF-1218492 and by DARPA through agreement #HR0011-13-2-0016. The views and conclusions contained herein are those of the authors and should not be interpreted as representing the official policies or endorsements, either expressed or implied, of DARPA, the NSF, or the U.S. Government. REFERENCES [1] "Machine Learning Surveys," [Online]. Available: http://www.mlsurveys.com/. [2] I. Arel, D. Rose and T. Karnowski, "Deep machine learning - a new frontier in artificial intelligence research," Computational Intelligence Magazine, IEEE, vol. 5, no. 4, pp. 13-18, 2010. [3] R. Sarpeshkar, "Analog versus digital: extrapolating from electronics to neurobiology," Neural Comput., vol. 10, pp. 16011638, Oct. 1998. [4] J. Holleman, S. Bridges, B. Otis and C. Diorio, "A 3 uW CMOS true random number generator with adaptive floating-gate offset cancellation," IEEE J. Solid-State Circuits, vol. 43, no. 5, pp. 13241336, May 2008. [5] J. Oh, G. Kim, B.-G. Nam and H.-J. Yoo, "A 57 mW 12.5 µJ/Epoch embedded mixed-mode neuro-fuzzy processor for mobile real-time object recognition," IEEE J. Solid-State Circuits, vol. 48, no. 11, pp. 2894-2907, Nov. 2013. [6] J. Park, I. Hong, G. Kim, Y. Kim, K. Lee, S. Park, K. Bong and H.J. Yoo, "A 646GOPS/W multi-classifier many-core processor with cortex-like architecture for super-resolution recognition," in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2013, pp. 17-21. [7] J.-Y. Kim, M. Kim, S. Lee, J. Oh, K. Kim and H.-J. Yoo, "A 201.4 GOPS 496 mW real-time multi-object recognition processor with bio-inspired neural perception engine," IEEE J. Solid-State Circuits, vol. 45, no. 1, pp. 32-45, Jan. 2010. [8] S. Chakrabartty and G. Cauwenberghs, "Sub-microwatt analog VLSI trainable pattern classifier," IEEE J. Solid-State Circuits, vol. 42, no. 5, pp. 1169-1179, May 2007. [9] J. Lu, S. Young, I. Arel and J. Holleman, "A 1TOPS/W analog deep machine-learning engine with floating-gate storage in 0.13μm CMOS," in IEEE Int. Solid-State Circuits Conf. (ISSCC) Dig. Tech. Papers, Feb. 2014, pp. 504-505. [10] S. Young, A. Davis, A. Mishtal and I. Arel, "Hierarchical spatiotemporal feature extraction using recurrent online clustering," Pattern Recognition Letters, vol. 37, pp. 115-123, Feg. 2014. [11] J. Lu, S. Young, I. Arel and J. Holleman, "An analog online clustering circuit in 130nm CMOS," in Proc. IEEE Asian SolidState Circuits Conf. (A-SSCC), Nov. 2013, pp. 177-180. [12] S. Young, J. Lu, J. Holleman and I. Arel, "On the impact of approximate computation in an analog DeSTIN architecture," IEEE Trans. Neural Netw. Learn. Syst., vol. PP, no. 99, p. 1, Oct. 2013. [13] B. Gilbert, "Translinear circuits: a proposed classification," Electron. Lett., vol. 11, no. 1, pp. 14-16, 1975. [14] J. Lu and J. Holleman, "A floating-gate analog memory with bidirectional sigmoid updates in a standard digital process," in Proc. IEEE Int. Symp. Circuits Syst. (ISCAS), May 2013, vol. 2, pp. 1600-1603. [15] S. Young, I. Arel, T. Karnowski and D. Rose, "A fast and stable incremental clustering algorithm," in proc. 7th International Conference on Information Technology, Apr. 2010. [16] J. Lazzaro, S. Ryckebusch, M. A. Mahowald and C. Mead, "Winner-take-all networks of O(n) complexity," Advances in Neural Information Processing Systems 1, pp. 703-711, Morgan Kaufmann Publishers, San Francisco, CA, 1989.